Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 14/19/43. The contractual start date was in August 2015. The final report began editorial review in May 2017 and was accepted for publication in October 2017. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2018. This work was produced by Reuber et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Introduction

Background to the study

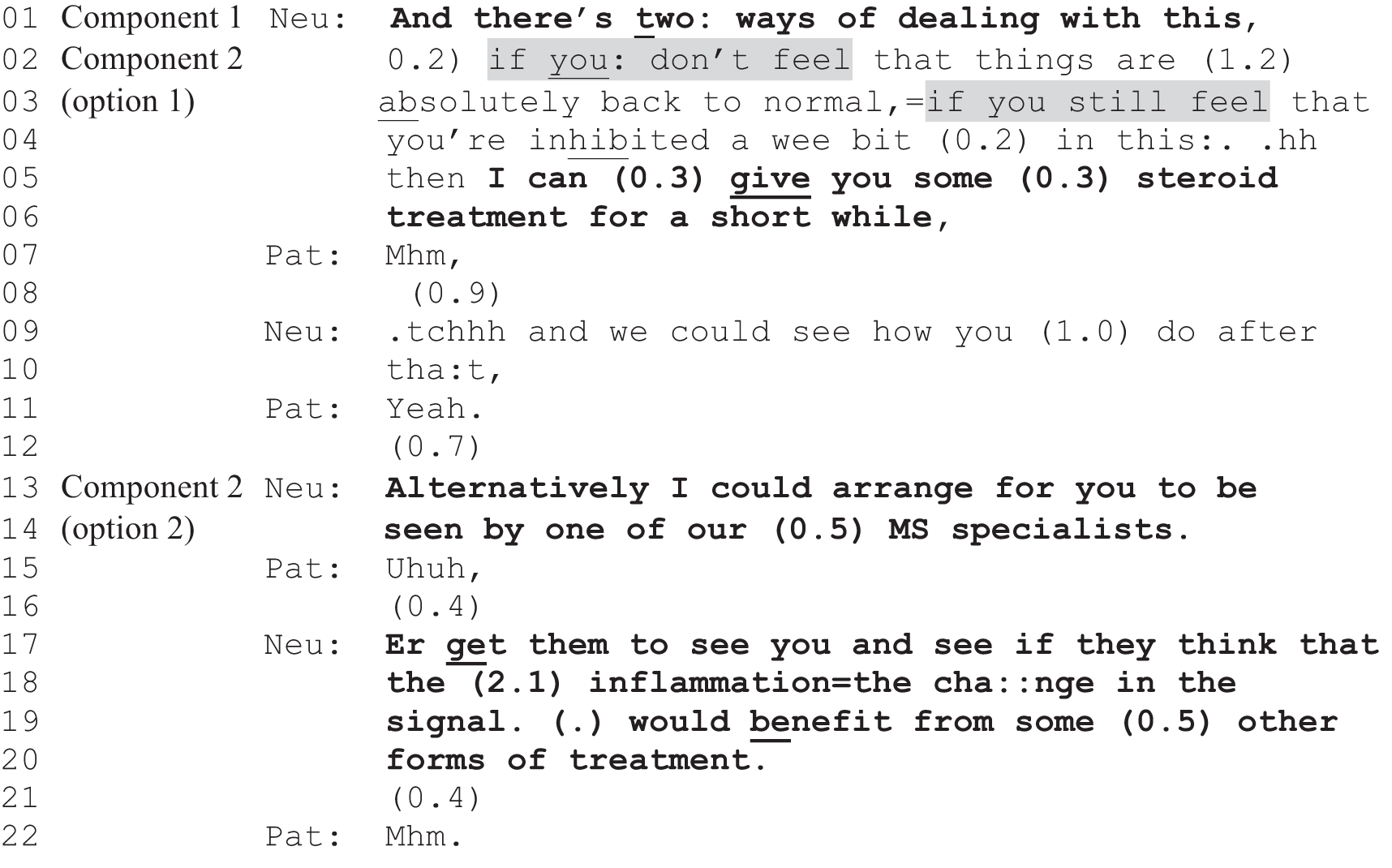

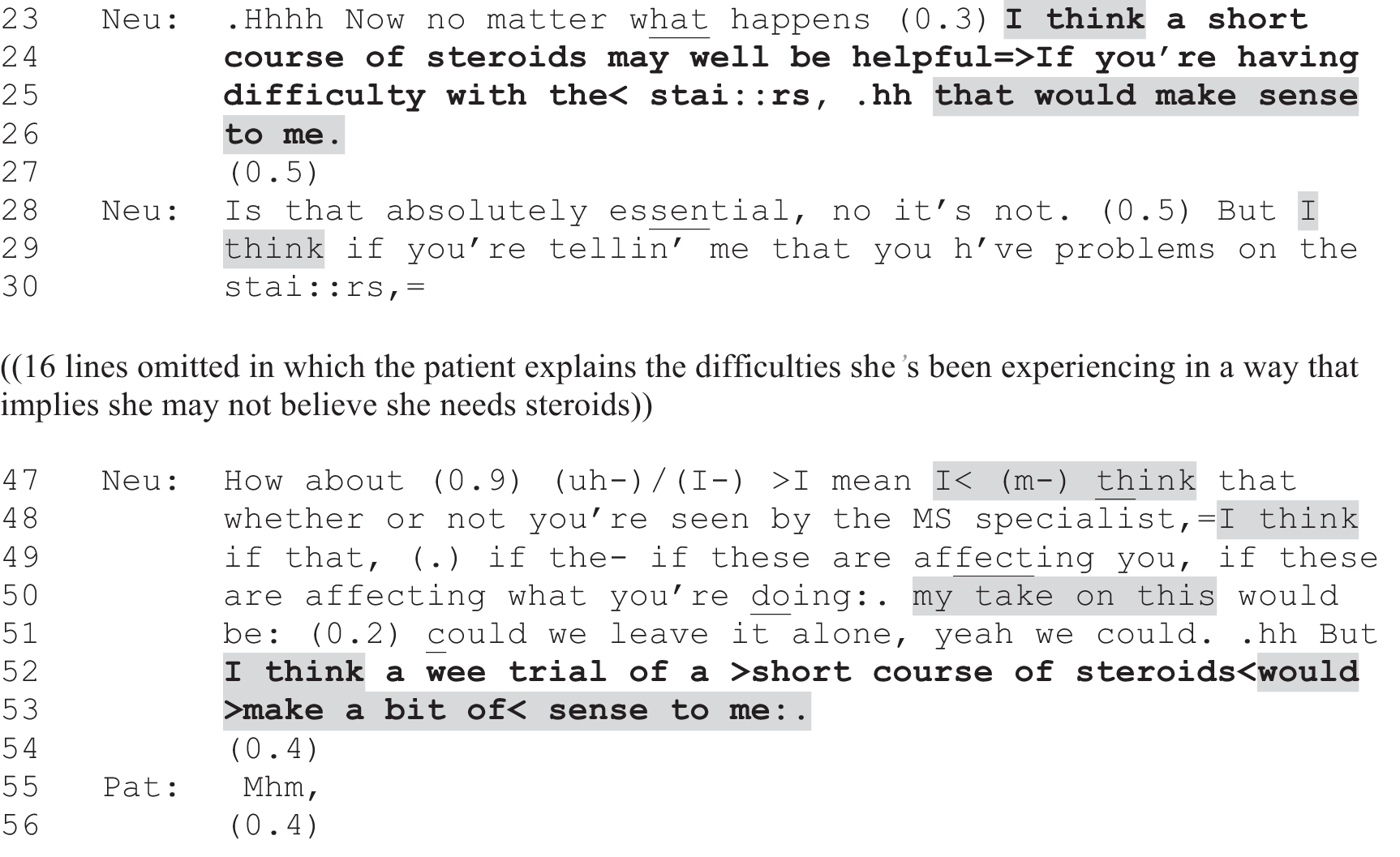

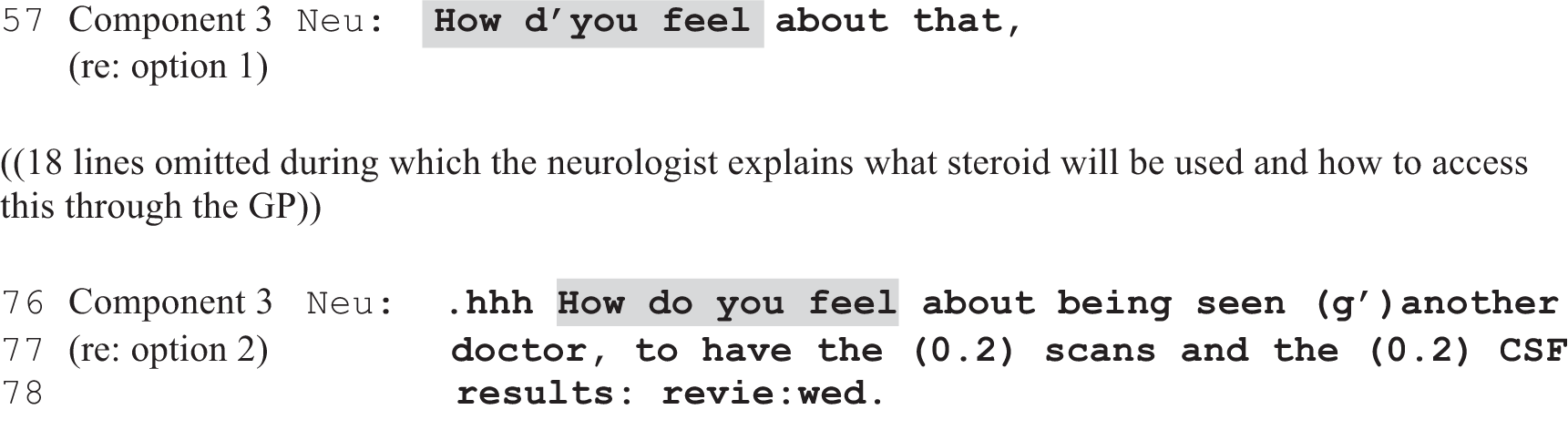

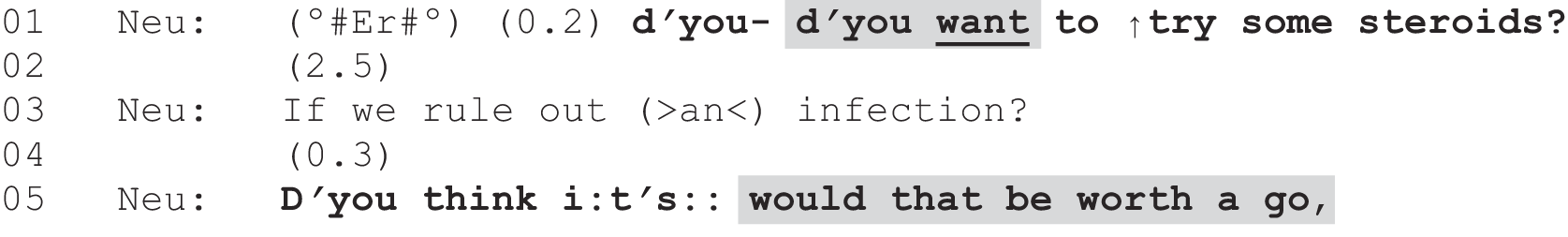

This follow-on study arose directly out of our previous NIHR-funded research on patient choice in neurology outpatient consultations.1 The primary project involved using a fine-grained qualitative approach called conversation analysis (CA), widely recognised as the leading method for understanding how medical interactions work in practice,2,3 to investigate a sample of 223 recorded consultations collected in Glasgow and Sheffield in 2012. The primary study successfully met its objectives to identify the key communication practices that neurologists were using in this sample to offer patients choice. Specifically, we identified, described and explored some of the interactional consequences of two such practices, which we called ‘option-listing’ and ‘patient view elicitors’ (PVEs), both of which can be used to create a slot for the patient to make a choice and/or voice their views with respect to treatments, investigations or referrals. In brief, option-listing consists of an explicit listing of alternatives, from which the patient may choose one or more. It often includes an initial announcement by the neurologist that there is a decision to be made, flagging up that a list is to come. The term PVE incorporates a range of turn designs that may invite the patient to express a preference [e.g. ‘Well um do you want to try a new drug, is that what you would ideally like?’ (G075)], how they feel about an option, their thoughts on a proposed course of action or other variants on this theme.

The primary study demonstrated how these practices could be used effectively to create the opportunity for patients to make a choice. However, we also found a range of complexities that made it problematic to suggest, straightforwardly, that clinicians adopt these practices to enact NHS policy on patients’ right to make their own ‘informed choices’ (see Reuber et al.1). These include:

- The practice of option-listing may also be used to do actions other than offering choice. For example, we have identified cases in which the machinery of option-listing was used to try to persuade the patient to agree to the neurologist’s preferred option, amounting in practice to a form of recommendation. This indicates that it is not a straightforward matter to say: use practice X and patient choice will be achieved. Precisely how the practice is employed can have very significant consequences for the type of conversational ‘slot’ created for patient responses.

- There was some evidence of patients struggling to make a choice and/or not wanting to do so, including explicit attempts to get the neurologist to give a recommendation.

- Although option-listing and PVEs were clearly key decisional practices within our data set, the more traditional ‘recommendation’ was also recurrently used, either instead of an option-list or PVE or as part of a longer decision-making sequence that included more than one practice. There was also some preliminary evidence to suggest that recommendations themselves can be designed so differently that they are probably best thought of as lying along a continuum from something akin to a directive to something that invites the patient to ‘weigh in’ on the decision.

- When doctors offered patients choice about a single option using a PVE, the detailed qualitative analysis indicated that patients quite often declined these offers.

However, our primary study was not designed to include an investigation of recommendations. It aimed neither to map out the relative occurrence of recommendations as opposed to the practices we identified for offering choice, nor to compare these alternative approaches to enacting the same general activity (i.e. initiating decision-making about future courses of action). We therefore obtained follow-on funding to address the broad question: how do the practices through which clinicians might offer choice to patients compare with those practices through which they might deliver a recommendation for a particular course of action? In this follow-on study, we address this question through mixed-method analyses of the original data set. This includes further qualitative analysis, directly developing the work begun in the primary study. Furthermore, the follow-on funding allowed us to code the full set of recorded consultations to enable quantitative analysis. Together, our mixed-method work has made it possible to investigate systematic relationships between the interactional practices and various demographic and self-report variables for which we also collected data (during the primary study). As we discuss later in this report (see Chapter 11), our approach makes a novel contribution in three main ways: (1) we add to a very limited body of research on the actual interactional practices through which choice may be offered, (2) we extend previous conversation analytic research on decision-making by comparing markedly different approaches to decision-making (recommendations vs. those approaches that orient explicitly to the patient’s right to choose) and (3) we describe our innovative strategies for transforming conversation analytic findings into coded data suitable for quantitative analysis.

Why focus on choice?

Our focus on patient choice is related to, but distinct from, the significant body of research around the broader notion of shared decision-making (SDM). SDM is understood by many as the ‘pinnacle of patient-centred care’,4 and there have been extensive efforts to theorise and foster it in health care, both in and beyond the UK’s NHS. Although there is no single agreed definition,5 the principles of SDM are well documented5–7 and the general perspective may be summarised as ‘an approach where clinicians and patients share the best available evidence when faced with the task of making decisions, and where patients are supported to consider options, to achieve informed preferences’.8 Models of SDM thus include multiple dimensions, such as the ‘three key steps’ described by Elwyn et al.,8 which we describe in more detail in Models versus practices: our methodological starting point. SDM, then, clearly transcends (and may encompass) the more limited concept of ‘patient choice’.

So why focus on the latter? There were two main reasons for our decision (when designing the primary study) to study patient choice. First, alongside the widespread value placed on the principles of SDM within the NHS, there is also a recurrent commitment to patient choice specifically. This is exemplified in the opening of The NHS Choice Framework: What Choices are Available to Me in the NHS? (contains public sector information licensed under the Open Government Licence v3.0),9 which moves quite fluidly between the notion of choice and wider ideals such as ‘patient empowerment’: ‘The government is committed to giving patients greater choice and control over how they receive their health care, and to empowering patients to shape and manage their own health and care’. However, even within the context of the NHS, patient choice is taken to mean very different things. On the one hand, there are limited, measurable legal ‘rights to choose’, with explicit limitations. These are accorded to all NHS patients and are laid out in The NHS Choice Framework: What Choices are Available to Me in the NHS?9 and The NHS Constitution10 and include choosing one’s general practitioner (GP) practice,11 which hospital to attend for a first outpatient appointment12 and which consultant will be in charge of one’s treatment,13 as well as a range of choices relating to maternity14 and end-of-life care.15

On the other hand, a much broader conceptualisation of choice is evident within NHS policy, dating back at least to the 2000 NHS plan: The NHS Plan: A Plan for Investment, A Plan for Reform.16 As Sir Nigel Crisp, then Chief Executive for the NHS, put it: ‘The ambition is to change the system to one that is patient led, so that there is more choice, personalised care and real empowerment of people to improve their health’.17 Working towards this goal, the NHS provides the tellingly named public information service NHS Choices,18 with the strapline: ‘your health, your choices’, and lays out fairly wide-ranging rights and pledges to patients in its 2015 NHS Constitution.10 These include both the more limited notion of having a right to ‘accept or refuse treatment that is offered to you’ and the broader right to ‘receive care and treatment that is appropriate to you, meets your needs and reflects your preferences’ (contains public sector information licensed under the Open Government Licence v3.0).10 In summary, patient choice, specifically, is articulated as a value in NHS policy. However, it is a contested and slippery concept,19 making further study an important endeavour.

Our second, related, reason for focusing on patient choice is the significant lack of research on what this might entail in practice. In the report of our primary research,1 we summarised the only two previous studies that we could find that examined real interactional practices for offering choice specifically. To our knowledge, no further studies have emerged since then. There is not space to reproduce our review of those studies here, but both clearly indicated a gap between policy and practice around choice, as well as the enormous complexity of successfully ‘creating an interactional environment in which [. . .] choice can actually be exercised’.20 Both studies showed that, despite professionals’ overt orientations to people’s right to choose (in one case pregnant women20 and in the other case residents in a care home for those with intellectual difficulties21), the practices used to offer choice often made it difficult for choice to be exercised in reality. Both studies reached the conclusion that further research is needed. As Pilnick20 argued:

. . . what the offering and exercising of choice actually looks like in practice [. . .] remains unclear. The potential implications of these interactional processes, though, are immense, since [. . .] ‘good’ practice is ultimately achieved through interaction rather than through policy or regulation.

Choice in practice is thus worthy of being placed under the microscope in its own right. In both our primary study and this follow-on research, we have taken up the challenge implicit in the quotation above: to extend our limited understanding of how practitioners offer choice in real interactions with (in our case) patients and ‘accompanying others’. This entailed using CA, a methodology that, although well established in the study of medical interaction generally, has not previously been used specifically to investigate choice of treatments, investigations and/or referrals, as we do here. We discuss our approach in more detail in Chapter 2. In the next section, Models versus practices: our methodological starting point, we briefly outline our methodological starting point both to foreground its novelty and to further contextualise our focus on specific practices for offering choice (as opposed to, say, a wider examination of SDM).

Models versus practices: our methodological starting point

As we discussed in our primary report,1 models of decision-making in medical consultations have been very useful for identifying contrasting approaches to the doctor–patient relationship, typically summarised as a cline, ranging from paternalist to consumerist, with the ideal of a ‘shared’ approach somewhere in the middle,22 and for unpacking the key components of decision-making as a broad process. For example, Charles et al.22 have influentially distinguished between ‘information exchange, deliberation about treatment options and deciding on the treatment to implement’. Relatedly, Elwyn et al.8 summarise three key steps for enacting SDM in clinical practice, which they call ‘choice talk, option talk and decision talk, where the clinician supports deliberation throughout the process’ (italics in original). Elwyn et al.8 offer detailed guidance to clinicians on how to engage in each of these forms of talk, listing multiple components of each and suggesting forms of words for putting these into practice. In brief, choice talk is described as a ‘planning step’, focused on making patients aware that ‘reasonable options exist’, offering choice, justifying the existence of choice and ‘deferring closure’ to ensure that the options are explored. Option talk involves undertaking that exploration, including providing a clear list of the options and their pros and cons, and checking the patient’s understanding. Finally, decision talk focuses on eliciting the patient’s preferences, alongside a willingness ‘to guide the patient, if they indicate that this is their wish’.8

Such models build on extensive prior work, both empirical and conceptual, but unavoidably ‘simplif[y . . .] a complex, dynamic process’, as Elwyn et al.8 acknowledge. This can be an advantage (e.g. to help in training)8 but comes at the cost of smoothing over many of the subtle differences that are evident in the detail of real interactions. As we argued in our primary report, this is a problem because there is a growing body of evidence that the precise wording used by clinicians can make a significant difference to patient involvement in the consultation.23–25 Moreover, the starting point for models of decision-making is, quite appropriately, normative: they seek to provide a ‘best practice’ guide. Again, this is important for facilitating training and continuing professional development. However, even when following the most detailed guidance, clinicians must – unavoidably – translate that, in real time, into the specifics of each unique interaction with a patient. As noted in the previous section, we know that this ‘translation’ process can end in real interactional practices that do not map onto the policy/training, even in cases for which there is evidence that the practitioner intended to enact that policy/training.

For these reasons, our starting point was neither normative nor theory driven. Our primary study started with a largely descriptive and interactionally grounded aim: to identify the real-world practices that neurologists are using to initiate decision-making in a way that is demonstrably oriented to patient choice, whether or not a shared decision is actually achieved.1 Our search for practices was thus carefully delimited and inductive in nature. Our primary aim was to provide an empirical understanding of how choice is actually offered to patients in authentic clinical consultations and the consequences of the different approaches that clinicians take. Nevertheless, it is clear that our findings resonate with, and raise important questions in relation to, the broader models of SDM. We therefore consider these points of connection further in Chapter 11.

What we already know about real-time decision-making in the clinic

Our primary study built on previous interactional research on real-time decision-making in the clinic, much of which has been summarised already in the original report.1 We will not repeat that review here. However, for the purposes of situating this secondary analysis, it is important to note that the prior conversation analytic research has tended to focus on the treatment recommendation (i.e. just one of the broad practices for initiating decision-making that we analyse here), with a particular interest in the:

- strategies that clinicians have for persuading patients to accept their recommendations24,26–34

- strategies patients have for resisting recommendations30,34–37

- subtle interplay between clinician and patient that means that recommendations are unavoidably a form of ‘joint social practice’38 (see also Angell and Bolden39 and Kushida and Yamakawa40), even though the parties to that process are seldom contributing from an equivalent footing.41

As noted above, very few studies have focused on choice in interaction specifically (for important exceptions see Pilnick20 and Antaki et al.21). However, the distinction we make between recommendations on the one hand and option-lists and PVEs on the other maps closely onto work by Collins et al.42 and Opel et al.30,36,43 Collins et al.42 demonstrated a continuum of approaches to decision-making in oncology and diabetes mellitus consultations, which they described as ranging from ‘unilateral’ (or clinician determined) to ‘bilateral’ (or shared). Comparable to the findings we report, they showed how clinicians might sometimes replace the more conventional recommendation with efforts to:

. . . actively [pursue the] patient’s contributions, providing places for the patient to join in, and building on any contributions the patient makes: e.g. signposting options in advance of naming them; eliciting displays of understanding and statements of preference from the patient.

Similarly, Opel et al.36 drew a distinction, in the context of parental decision-making about whether or not to vaccinate their children, between ‘presumptive initiation formats’ and ‘participatory’ ones. They describe these as follows:

Presumptive formats were ones that linguistically presupposed that parents would vaccinate [. . .] (e.g., ‘Well, we have to do some shots’) [. . . while] participatory formats were ones that linguistically provided parents with relatively more decision-making latitude [. . .] (e.g., ‘Are we going to do shots today?’ [. . .] ‘What do you want to do about shots?’).

Likewise, we can understand the recommendations in our study as being comparatively more presumptive (see especially the formats discussed in Chapter 9) relative to option-lists and PVEs, which we can understand as participatory formats (see Chapter 4 for more on this point). Addressing this kind of distinction across a wide range of decisions in neurology, our primary study1 sought to provide empirical evidence ‘to address the [still significant] gap in our understanding of how practitioners actually go about offering choice, as a crucial step towards developing well-founded guidelines for use in practice’ (contains public sector information licensed under the Open Government Licence v2.0). The present follow-on study seeks to build on this further, as described in more detail in Building on the primary conversation analytic study. Specifically, we examine qualitatively and quantitatively how neurologists initiate and negotiate real-time decisions about investigations, treatments and referrals in face-to-face consultations with patients and, when present, third parties (usually patient relatives or partners).

Why neurology as the setting for studying choice in practice?

As we outlined in more detail in our report of the primary study, neurology is an ideal setting for examining patient choice because most neurology outpatient appointments involve discussions of chronic conditions, the treatment of which is partially or largely dependent on active patient participation and because a ‘person-centred service’44 has been an explicit quality requirement for neurological practice for over a decade. This concept underpins 10 other requirements listed in the National Service Framework for Long-term Conditions (NSF),44 which states that:

People with long-term neurological conditions [. . .] are to have the information they need to make informed decisions about their care and treatment.

More specifically, two neurological conditions [epilepsy (the most common serious neurological disorder in the UK) and multiple sclerosis (MS) (one of the most common disabling central nervous system diseases)] are among seven chronic conditions identified by the UK’s Department of Health and Social Care as particularly suited to involving patients in decisions about their care.45 In part, this reflects health professionals’ recognition that patients with chronic conditions often understand their disease as well as (or even better than) health professionals do, but that:

. . . this knowledge and experience by the patient has [. . .] been an untapped resource.

Moreover, because many neurological conditions have an uncertain trajectory, decision-making in this context is seldom cut and dried. This can make it difficult for neurologists to provide a simple recommendation for the ‘known-to-be-best’ option. Even when the diagnosis is certain, the effects of the different treatment options may not be. For example, in many clinical scenarios there is no medical evidence base for which antiepileptic drugs (AEDs) would be the best next choice, and the selection of a particular drug or dose very much depends on patients’ thoughts and feelings about the relative importance of seizure control and particular adverse effects.46,47 Decision-making, then, will at least partly rest on factors that only the patient can contribute, such as the extent to which their condition and/or treatment impacts their quality of life, as well as their willingness to risk or manage certain side effects.48 It is generally agreed, therefore, that epilepsy is best managed through a patient-centred approach.49,50 Similarly, as Pietrolongo et al.51 put it in relation to MS, involving patients in decisions ‘is especially important in grey-zone situations where available treatments have important risks as well as benefits, and where evidence is lacking’ (see also Colligen et al.52). Rieckmann et al.53 argue that, in a patient-centred approach to managing MS, the patient is seen as the ‘lynchpin’ of decision-making and, furthermore, that:

Just as patients are required to change their role from healthcare ‘receiver’ to ‘engager’, the role of the healthcare professional also needs to evolve from being a ‘provider’ of healthcare to become a ‘motivator’ and ‘supporter’ of patients to help them achieve this.

Yet there is some evidence that neurology patients may experience the decision-making process as clinician dominated. For example, an interview study54 in which patients were questioned about AED treatment decisions found that most of the sample (42 out of 47 patients) attributed ‘decisional ownership’ to the neurologist. The authors argue that this may be particularly common for conditions that engender feelings of desperation (e.g. to be seizure free). Similarly, a study51 that rated the ways in which physicians at four Italian MS centres spoke with patients about disease-modifying treatments concluded that patient engagement with decision-making was ‘not a prominent part of patient care’. There is also evidence that MS specialists in the UK are exerting a significant influence over patients’ selection between four disease-modifying treatments. Although the same options were available in all 72 prescribing centres, Palace55 found that the relative use of these options varied significantly; moreover:

. . . the percentage of MS specialists in each centre prescribing the disease modifying drugs to patients with secondary progressive (versus relapsing remitting) MS varied from 0% to nearly 50%. These variations are likely to be due to physician preference and opinion and not patient influenced.

Such findings indicate a discrepancy between the Department of Health and Social Care’s vision and (at least some) patients’ experience in neurology, which warrants further research. This coheres with a range of findings regarding the inconsistency of participatory decision-making more broadly.56–59

Despite such good reasons for focusing on neurology, this study should not be assumed to apply only to consultations in this setting. Because our focus is on communication strategies, our findings should have relevance for other specialties. Although the content of a choice may be specific to particular conditions or patient groups (e.g. different drug or surgical options, patients of different ages with different kinds of disabilities), practices for making choice available in interaction with patients are not. The findings from this study should therefore be of practical use to clinicians working in a range of settings.

Building on the primary conversation analytic study: research approach and objectives for this follow-on secondary analysis

Our primary study used the qualitative methodology known as CA to meet its objective of identifying two key communication practices used by neurologists to offer patients choice. CA is widely recognised as the leading methodology for investigating precisely how doctor–patient interaction operates, on the micro-level, in everyday practice;2,60 it uses audio- and video-recordings of authentic interactions, and transcripts thereof, to enable direct observation and fine-grained analysis, focusing on not only what is said but how it is said (e.g. the exact words used and evidence of hesitation, emphasis, interruptions, laughter or misunderstanding). Its key advantages are that it does not rely on recall, which can often be incomplete or inaccurate, and it investigates how people behave at a level of detail that they could not be expected to articulate (e.g. in a research interview). In line with other CA studies,24,27,28,35,37,61–68 the original research was intended to provide detailed foundational evidence about what clinicians actually do in interaction with patients. For a general introduction to CA and more specific information about how we applied it in the primary study, please see Reuber et al.1

As outlined in Background to the study, our original study was not designed to compare the effectiveness of those practices identified as methods for offering choice with the alternative practice of recommending. The primary purpose of this follow-on study is to do just that: we aim to document and evaluate any differences between three practices used by the clinicians in our data set to initiate decision-making in interaction with patients: option-listing, PVEs and recommending. In documenting the three practices, we began by ‘mapping’ them out across the data set in a way that maintained their ‘form’ (i.e. recommendation, option-list or PVE), their ‘positioning’ in each consultation (i.e. whether it occurred as a first or subsequent decision point in discussion about a particular matter) and their responses. This allowed for further, in-depth, qualitative analysis, which we report in Chapters 9 and 10.
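The mapping described above can be pictured as one record per decision point. What follows is a minimal illustrative sketch in Python, not the coding scheme used in the study (which is described in Chapter 2). The class name, field names, topic labels and response labels are all hypothetical, chosen only to mirror the three attributes named in the text: form, positioning and response.

```python
from dataclasses import dataclass
from enum import Enum


class Form(Enum):
    """The three practices for initiating decision-making named in the text."""
    RECOMMENDATION = "recommendation"
    OPTION_LIST = "option-list"
    PVE = "patient view elicitor"


@dataclass(frozen=True)
class DecisionPoint:
    """One coded decision point (hypothetical record layout)."""
    consultation_id: str  # hypothetical identifier for the recorded consultation
    topic: str            # hypothetical label for the matter under discussion
    form: Form            # which of the three practices was used
    position: int         # 1 = first decision point on this topic, 2+ = subsequent
    response: str         # hypothetical label for the patient's response

    @property
    def is_first(self) -> bool:
        """True if this was the first decision point on the topic."""
        return self.position == 1


def points_for_topic(points, consultation_id, topic):
    """Return the decision points for one matter in one consultation, in sequence."""
    selected = [p for p in points
                if p.consultation_id == consultation_id and p.topic == topic]
    return sorted(selected, key=lambda p: p.position)
```

Keeping the position alongside the form is what would allow a sequence such as a recommendation followed by a PVE on the same matter to be recovered from the coded records.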

However, our work raised a range of research questions that cannot be answered by qualitative means alone. As leading conversation analysts (especially those working in the field of doctor–patient interaction) have been arguing for over a decade now,69,70 it is necessary to combine qualitative, CA-based findings with further statistical analysis if we are to answer such research questions, which Heritage71 refers to as ‘distributional’ in nature. As Robinson69 puts it: ‘comparisons of the operation of different CA practices do require statistical evidence’ (italics in the original).

One prominent example72 on which our approach was modelled involved statistical comparison of the effect of GPs’ use of open-ended versus closed questions for eliciting patients’ presenting concerns on patients’ subsequent report of satisfaction with the visit. This study showed that the open-ended format was associated with significantly higher scores on key items of the Socioemotional Behaviour subscale of the Medical Interview Satisfaction Scale (MISS-21) (which we have also used in our study). In such studies, the outcomes of interest are external to the clinical consultation (e.g. questionnaire scores). Other studies have investigated the relationship between interactional practices and outcomes that are internal to the consultation. For example, Heritage et al.23 showed that patients were significantly more likely to reveal, during the consultation, additional medical concerns they had (other than their main reason for attending) if the GP asked if there was ‘something else’ rather than ‘anything else’ that he/she could do for the patient.

Comparably, our follow-on study was developed to conduct meaningful comparative analysis regarding the possible effects of our three core interactional practices (option-lists, PVEs and recommendations) on outcomes that are both internal to the recorded interactions (e.g. how does the patient respond immediately and is an agreement reached by the end of the consultation?) and external, based on self-report (e.g. patient satisfaction scores and whether or not participants thought the patient had been offered choice). This necessitated reducing the complexity of the qualitative data into countable codes. Doing this effectively depends crucially on the quality of the foundational conversation analytic research.69,70 This is because any attempt to ‘code and count’ is meaningless if the practices for which one is coding are not clearly described and thoroughly understood. In the examples outlined above, the statistical analysis was based on solid findings from extensive prior conversation analytic work. We strongly agree with Robinson’s69 argument that:

Prior to statistical testing, analysts need to be able to answer at least the following questions in specific terms: what is the claimed practice (i.e. what are its constitutive features as an orchestration of conduct-in-interaction), the action(s) it accomplishes, the norms/rules it instantiates, and its range of interactional consequences?

This is why our primary study focused exclusively on describing and explicating, in fine-grained qualitative detail, the practices neurologists were using to offer patients choice. It is also the reason for devoting a significant proportion of this follow-on study to further qualitative work to ensure that our coding is robust and thoroughly rooted in the nuanced interactional realities at play in each consultation. Moreover, our inductive starting point meant that already established coding systems, such as the highly influential Roter Interaction Analysis System (RIAS), were not suitable for our aims. Although the RIAS can be used flexibly, with some studies adding subcategories,73 its primary purpose is to enable an exhaustive classification of whole medical visits, using 39 pre-established categories that incorporate both socioemotional and task-related elements.74 Thus, the starting point for the RIAS is a particular theoretical perspective on the doctor–patient relationship, which is captured in these categories. The RIAS has been used very effectively to address a wide range of research questions across diverse clinical settings in different parts of the world. However, for our purposes, we needed a coding scheme that allowed us to focus specifically on comparing the three key approaches identified in our primary research. We discuss our approach in more detail in Chapter 2.
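To make the idea of ‘code and count’ concrete, the following Python sketch cross-tabulates practices against immediate patient responses. It is an illustration only: the records are invented, the response labels (‘accept’, ‘resist’) are hypothetical simplifications, and the function names are our own. It does not reproduce the study’s coding scheme or its statistical analysis.

```python
from collections import Counter

# Hypothetical coded records: (practice, immediate patient response).
# These rows are invented for illustration and are NOT data from the study.
coded = [
    ("recommendation", "accept"),
    ("recommendation", "resist"),
    ("option-list", "accept"),
    ("PVE", "resist"),
    ("PVE", "accept"),
    ("recommendation", "accept"),
]


def cross_tabulate(records):
    """Count how often each (practice, response) pair occurs."""
    return Counter(records)


def response_rate(table, practice, response):
    """Proportion of a practice's decision points that received `response`."""
    total = sum(n for (p, _), n in table.items() if p == practice)
    if total == 0:
        return None  # the practice never occurred, so the rate is undefined
    return table[(practice, response)] / total


table = cross_tabulate(coded)
```

A table of this kind is only as meaningful as the codes behind it, which is the point of Robinson’s argument: the counting step is trivial, whereas deciding what counts as an instance of each practice is not.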

The research reported here, then, is a mixed-methods study that aims to address the following main objectives.

- Map out (a) the three interactional practices we have previously identified for initiating decision-making in the neurology clinic, together with (b) their interactional consequences (e.g. patient engagement or resistance, and whether or not the patient ended up agreeing, by the end of the consultation, to whatever option was proffered).
- Identify, both qualitatively and quantitatively, any evident interactional patterns across our data set (e.g. to assess whether or not and how different interactional practices typically lead to patient acceptance/resistance).
- Examine, statistically, the relationship between the interactional practices identified and the self-report data we collected as part of the primary study (i.e. patient satisfaction data from the MISS-21 questionnaire and other variables, such as how certain the neurologist was of the diagnosis and whether or not the neurologist and patient thought a choice had been offered).
- Use the findings from the above analyses to address our overarching aim of comparatively evaluating the three practices as methods for initiating decision-making with patients in the clinical encounter.

The practical goal motivating this work is to provide evidence-based and contextualised (as opposed to abstract) guidance regarding how these practices actually work to enable clinicians to use them in ways that are sensitive to the nuances of interaction (as opposed to mechanistically).

Structure of the rest of the report

In what follows, we describe our innovative methodological approach and procedures (see Chapter 2) and overview the data set, exploring the extent to which the working sample used in this follow-on study is comparable to the sample used in the original study (see Chapter 3). In Chapter 4 we provide some brief qualitative analyses to illustrate our three key practices and we present evidence that PVEs and option-lists are associated with choice not only for us as analysts but, crucially, also for neurologists and patients. In Chapters 5 and 6 we report how the core decisional practices (recommendations, PVEs and option-lists) are distributed across our data set. Chapter 5 reports the frequencies of each practice and in Chapter 6 we explore whether or not each practice is employed at different frequencies depending on the type of decision being made (about investigations, treatments or referrals), the neurologist’s ‘style’, patient demographics and clinical factors. Chapters 7 and 8 report findings on outcome measures. Chapter 7 describes the relationship between interactional practices and patient satisfaction, as well as further findings on the perception of choice. Chapter 8 explores the interactional consequences of each practice in terms of immediate responses from patients and whether or not agreement was reached within the consultations. Chapters 9 and 10 present conversation analytic investigation of contrasting interactional practices: strongly formulated recommendations (see Chapter 9) and PVEs (see Chapter 10). Our findings and conclusions are discussed in Chapter 11.

Chapter 2 Methodology

Introduction

We employed an innovative mixed-methods design that combined qualitative and quantitative approaches. In this chapter, we describe the primary data collection; the design, testing and application of our coding scheme; and our analytic procedures. As this is a secondary follow-on study, some of this information has been reported previously, but we briefly describe those processes that are necessary for understanding the present report. For a more detailed account of the methodology underpinning the primary study, see Reuber et al. 1

Primary data collection: recorded consultations plus pre- and post-consultation questionnaires

Data collection primarily entailed recording clinic appointments to identify the strategies clinicians use to offer patients choice. This took place in the outpatient departments of two major clinical neuroscience centres (the Southern General Hospital in Glasgow and the Royal Hallamshire Hospital in Sheffield) between February and September 2012. Each site serves a diverse population and employs a diverse team of neurologists, with different specialties, ensuring a broad range of consultations. The eligible sample population was all neurologists (20 in Sheffield and 23 in Glasgow) at the two sites and all patients (aged ≥ 16 years) attending the clinics, provided they were able to give informed consent in English. We had a target of 200 recordings (100 per site) with at least 10 clinicians (five per site). These targets were exceeded (see Chapter 3).

Neurologists could opt in to the study. The two collaborating neurologists were responsible for making initial contact with their colleagues and providing written information sheets describing the study and what involvement would entail. The collaborating neurologists then passed on the details of any potentially interested colleagues to the research assistant at each site, who explained the study face to face and obtained written informed consent from participating colleagues. The nature of the sample was, therefore, partially determined by which neurologists were willing to participate.

A full-time research assistant was employed at each site: Fiona Smith (Glasgow) and Zoe Gallant (Sheffield). They approached patients about the study just prior to the start of the clinic appointment. Patients also received written information about the study in advance. When appropriate, written informed consent was taken. The consultations between consenting patients and neurologists were audio- or video-recorded depending on the level of consent provided by participants. The researchers set up the recording equipment but were not present during the consultations.

Clinician, patient and accompanying other information sheets included an explanation of the key research questions, as follows: ‘This study aims to answer the following two questions: (1) How do neurologists offer patients choice? (2) How do patients respond to these offers?’ We thus cannot rule out the possibility that participants may have adapted their decision-making practices in accordance with what they thought the researchers might consider to be best practice. Nevertheless, as we show in Chapter 5, recommendations remained far more common in our data set than the practices that we analyse as a means for offering choice. This indicates that the neurologists continued to use a range of approaches even when taking part in this study. Moreover, as we were primarily concerned with the precise detail of how neurologists may enact the patient-choice agenda in practice, their approaches to doing so (in real time) were of analytic relevance regardless of whether or not these appeared more regularly as a consequence of taking part in our study.

Self-report information was also collected by means of questionnaires, which patients completed immediately before and after the consultation and neurologists completed afterwards. The researchers administered these. The pre-appointment questionnaires captured a range of potentially relevant factors, including:

- Patients’ demographic details.
- Patients’ health-related quality of life (HRQoL) as measured using the UK-validated75 Short Form questionnaire-12 items (SF-12) scale. The SF-12 provides separate summary scales for physical and mental health. 76

The post-appointment questionnaires included the MISS-21 as a measure of patient satisfaction with the preceding consultation. The MISS-21 has been validated in UK patient populations77 and used in previous CA research to measure patient satisfaction in primary care. 72 Part of the output from our primary study included an investigation of patient satisfaction and perception of choice. We conducted exploratory factor analysis of the MISS-21 data and identified four subscales that were broadly consistent with the four subscales (rapport, distress relief, doctor’s understanding and communication difficulties) that have previously been identified and validated in British general practice. 77,78 Although this cannot be said to provide full validation of the use of the MISS-21 in the context of neurology secondary care (particularly because the last two subscales listed above showed only moderate levels of internal consistency), the identification of the same subscales does provide some evidence that the MISS-21 is of use for investigating patient satisfaction in this context.

Patients (see Appendix 3) and neurologists (see Appendix 4) were asked whether or not a choice had been offered to the patient during the consultation and neurologists were asked to record information about the patient’s condition. This included their levels of diagnostic certainty, reported on a scale from 1 for ‘very uncertain’ to 10 for ‘very certain’, and the extent to which they thought that the patient’s symptoms were medically explained. They could select ‘completely/largely explained’, ‘partly explained/partly unexplained’ or ‘completely/largely unexplained’ (see Appendix 4).

Ethics approvals

Ethics considerations in relation to the primary study are discussed in the original report. 1 Ethics approval was granted for the primary study by the National Research Ethics Service Committee for Yorkshire and the Humber (South Yorkshire) on 11 October 2011, after revisions to supporting documentation that were requested following the research ethics committee meeting held on 29 September 2011 and attended by MT.

As the follow-on study involved further analysis of data we had already collected, the only new ethics consideration was the inclusion of two new team members, who needed access to those data: Clare Jackson (a conversation analyst) to take the place of Rebecca Shaw, who was not available to continue with the project, and Paul Chappell, to bring vital statistical expertise to the team to meet the objectives of the follow-on study. We applied for proportionate review because:

- the aims of the follow-on study were so closely aligned with those of the primary research that it amounted to an extension of the original study
- no further recruitment or data collection was required
- participants in the original study had consented to data collected being subjected to additional analyses
- the data to be analysed continued to be held securely by Merran Toerien, one of the original team members, at the University of York (as specified in the original study sheets and consent forms)
- the study continued to be overseen by the same principal investigator, Markus Reuber.

Further approval, allowing the additional two researchers to join the follow-on study team, was granted by the proportionate review subcommittee of the National Research Ethics Service Committee North West (Greater Manchester South) on 20 July 2015.

Patient and public involvement

As we discussed in our primary report, the direction of our analytic thinking was influenced significantly by the service users’ group (SUG) and steering group. The latter involved discussion between patients, neurologists and academics, who rarely seemed to be coming from the same starting point. All agreed, however, that choice should not be assumed to be inevitably a good thing. We were regularly reminded to take this point more seriously when we inadvertently slipped into talking about the more participatory practices as if they were inherently better than recommendations.

We were fortunate that two members of the SUG from the primary study were able to continue to participate in the follow-on SUG. We also recruited, with the help of a neurologist at Sheffield, four additional patients to support our work-in-progress. The father of one of the patients also joined the group, usefully adding the perspective of an accompanying other to discussions. As we discuss further in Chapter 11, the SUG produced lively and insightful discussion about the nature of choice and whether or not patients necessarily want it. Service users also commented on our work-in-progress. This was facilitated through brief presentations by the team at SUG meetings and by selecting anonymised pieces of the recorded data for discussion.

The two patients who were part of the primary SUG helped with the two dissemination workshops carried out following completion of the first study and facilitated contact with patient groups (e.g. Epilepsy Action) for dissemination purposes. Their contributions have been invaluable over several years now and we hope that they feel (as we do) that their voices are woven into our work in significant ways. We look forward to further dissemination activities in collaboration with members of the SUG on the basis of our follow-on findings.

Analytic approach

As our approach to the primary analysis is already detailed in our first report,1 we will not repeat it here. However, we would emphasise, as discussed in Chapter 1, that the secondary analysis reported here relied fundamentally on the conversation analytic findings already produced. Moreover, the coding process reported here was underpinned by a CA mentality. In what follows, we describe the methods employed in the secondary analyses.

An innovative adaptation of framework analysis

Our analytic process can be seen as analogous to that used in framework analysis (FA), an approach that has become increasingly popular in policy-oriented health research79–83 since its development by Ritchie and Spencer84,85 in the 1980s. FA was created to manage qualitative data in large-scale social policy research, its aim being to gain an overview of the data to facilitate its mapping and interpretation. 80 FA allows for systematic, rigorous and transparent management of large amounts of qualitative data without losing the richness and flexibility of those data. 83,86 In essence it achieves ‘a holistic descriptive overview of the entire data set’. 80 These are qualities that are valued in the CA method we used previously. 60 However, insofar as FA is a formal system for thematic analysis, we used an adapted version that maps out interactional practices rather than themes.

Ritchie and Spencer85 propose a five-step process for undertaking FA:

- familiarisation
- identifying a thematic framework
- indexing
- charting
- mapping and interpretation.

As we outline in more detail in the section ‘Innovative use of an online extraction questionnaire’, we adapted this process to meet the aims of our secondary study. Specifically, we developed an innovative data extraction questionnaire (EQ) that enabled us to answer specific questions about the data set. Although we did not produce the tree diagrams and maps that are often employed in FA, the final output of the data reduction process – a matrix that describes the key characteristics of each coded decision – is similar to the framework matrix. Gale et al. 80 suggest that:

Good charting requires an ability to strike a balance between reducing the data on the one hand and retaining the original meanings and ‘feel’ of the interviewees’ words on the other. The chart should include references to interesting or illustrative quotations.

Our final matrix, contained in a Microsoft Excel® (Microsoft Corporation, Redmond, WA, USA) spreadsheet, went beyond that to include all the original quotes from the consultations on which the codes were based. Thus, although each consultation was reduced to a series of decision points and associated categorical data, the portions of text representing each decision point were retained. Crucially, then, our use of FA enabled us to integrate the interactional and self-report data collected in the primary study. Coding entailed a single, integrated process for the purposes of subsequent qualitative and quantitative analyses. This made the study truly mixed-methods rather than a parallel use of multiple methods.

In the sections ‘Coding scheme design’ and ‘Intercoder reliability testing’, we describe our coding scheme in detail and report the results of our intercoder reliability testing. In ‘Innovative use of an online extraction questionnaire’, we describe the EQ we designed to enable us to produce the final matrix and the statistical methods we employed. We end with a brief overview of the further CA work undertaken. When relevant, further specification of our approaches to data analysis is presented in later chapters.

Coding scheme design

The first step was to ensure that the three researchers responsible for coding (PC, CJ and MT) were familiar with the data through a process of watching and listening to a sample of recordings. This was particularly important for PC and CJ, who were new to the research team. MT guided this process on the basis of her intensive qualitative work on the primary study. As described above, much of the groundwork had already been laid, but the secondary analysis nevertheless required a considerable amount of further qualitative work before a reliable coding approach was developed. This was because we produced the coding scheme through an iterative bottom-up process to adequately capture what was going on in the interactions themselves. We needed to carry out the act of data reduction (for quantitative analysis) without sacrificing the sensibility of CA. 70

We identified all instances of decision-making in the transcripts and spent considerable time ensuring that we were all applying the same definitions of our core practices, derived from the primary study and developed further during the analytic period of the follow-on study, to the data. We considered the different ways in which patients could immediately respond to the different decisional practices and considered how best to capture the often extensive decision-making sequences that occurred. The iterative process proceeded as follows:

- A coding scheme comprising a codebook and an online data extraction form was constructed. This recorded categorical information about each consultation.
- Each member of the team worked independently to code the same subset of 5–10 consultations using the coding scheme.
- The team met to discuss our coding process and any disagreements.
- A new version of the codebook and coding scheme was constructed, taking into account discussions and agreed changes, before the independent coding process started again.

Through the repeated application of this iterative process, a final coding scheme was developed that would eventually allow consistent quantitative classifications to be applied. The process facilitated a continued familiarisation with the data set and allowed researchers to share qualitative insights about patterns, associations and themes identified. After eight rounds of this iterative process, final versions of the codebook (see Appendix 5) and code EQ (see Appendix 6) were constructed. The team would like to acknowledge the enormously helpful input provided by the study steering group and SUG, which each met twice during the development of the codebook and provided additional patient and doctor perspectives on the coding process.

The coding scheme was designed so that, when applied, the following could be coded for each consultation:

- How long (in minutes) the consultation lasted.
- All decisions about treatments, investigations and/or referrals that were initiated by the neurologist during the consultation using an option-list, PVE or recommendation.
- For each decision identified, we classified the practices used by the neurologist into option-lists, PVEs or recommendations to construct a series of decision points. This reflects the fact that decisions were most commonly made through extended sequences rather than just a single initiating turn by the neurologist and response by the patient. Our coding retained the sequential order of decision points so that it was possible to compare first decision points with later ones for a single decision. This was achieved by structuring the data extraction form in a way that required the first decision point for a decision to be coded first, followed by the second decision point, and so forth (this ordering was then retained by the way the resulting spreadsheet was structured). Note that our original coding made a distinction between the strongest forms of recommendation (which we called pronouncements) and recommendations that were produced as some form of proposal. Although this distinction allowed us to explore, qualitatively, the variability in the design of recommendations (see Chapter 9), it went beyond the aims of the quantitative analysis, which focused on the full set of recommendations (collapsing pronouncements with the rest).
- For each decision point, we identified how the patient and/or accompanying other responded. Coders selected one of the following mutually exclusive options to describe the immediate response of the patient (or accompanying other) to the neurologist-initiated decision point: no opportunity to respond, acknowledges, goes for option, no audible response, seeks information, does not go for option (in any way not coded for above) and patient and third party respond differently. Note that ‘no opportunity to respond’ occurred when the neurologist went on to say something further immediately after delivering the coded decision-point turn.
- For each decision point, we also recorded the specific section of transcribed text that comprised the decision point.
- For each decision, we noted whether or not one or more of the possible courses of action (treatment, investigation or referral) introduced by the neurologist had been agreed on by the end of the consultation. Coders could select ‘yes’, ‘no’ or ‘decision deferred’ in response to the coding question: ‘Is the course of action going to happen in principle?’ In other words, was agreement reached, by the end of the consultation, that the patient would go ahead with whatever referral/treatment/investigation had been proposed? ‘Decision deferred’ applied when the decision awaited further information gathering (e.g. through consultation with another specialist) or more deliberation by the patient (e.g. in consultation with significant others). If the neurologist suggested that more than one course of action might be appropriate, then, if any of these options were subsequently agreed on, this variable would be coded ‘yes’. To handle recommendations against doing something, we also recorded ‘yes’ for this variable if a decision was made to go for the ‘negative’ course of action (e.g. agreeing not to change a medication or that further diagnostic testing was not necessary).

The following sorts of decisions were excluded because they went beyond the scope of our research aims:

- decisions about something other than treatments, investigations or referrals (e.g. lifestyle changes or when to meet for a follow-up appointment)
- decisions that were initiated by someone other than the neurologist (e.g. the patient or accompanying other or by another clinician during a previous consultation)
- decisions that were presented as a simple fact about the absence of any available treatment (versions of ‘there’s nothing we can do for you’) – to be distinguished from recommendations against using a treatment that does exist, which we did code
- decisions that could potentially arise in the future (discussed as hypothetical scenarios in the current consultation)
- decisions that lay in the domain of another clinician (e.g. where a patient was experiencing comorbidities and was thus also under the care of another consultant).

In line with the study aims, the coding scheme focused only on identifying decision points that were designed using an option-list, PVE or recommendation. However, we noted when other practices were used to pursue a decision (e.g. by providing a justification for a prior recommendation). All exclusions warrant future research attention, but were necessary to ensure that our cases were as directly comparable as possible.

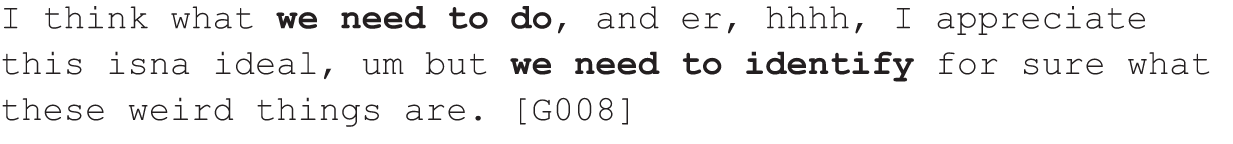

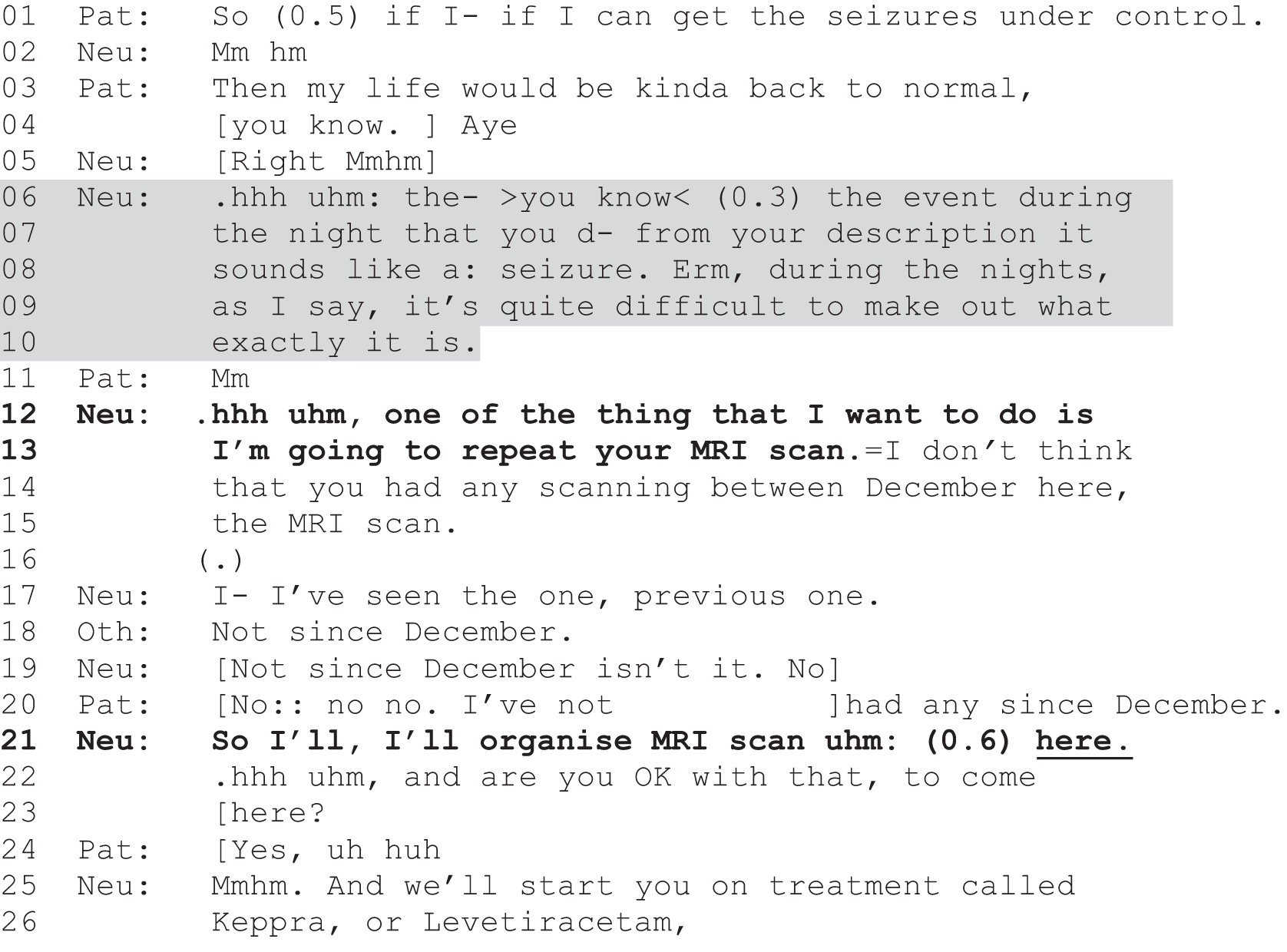

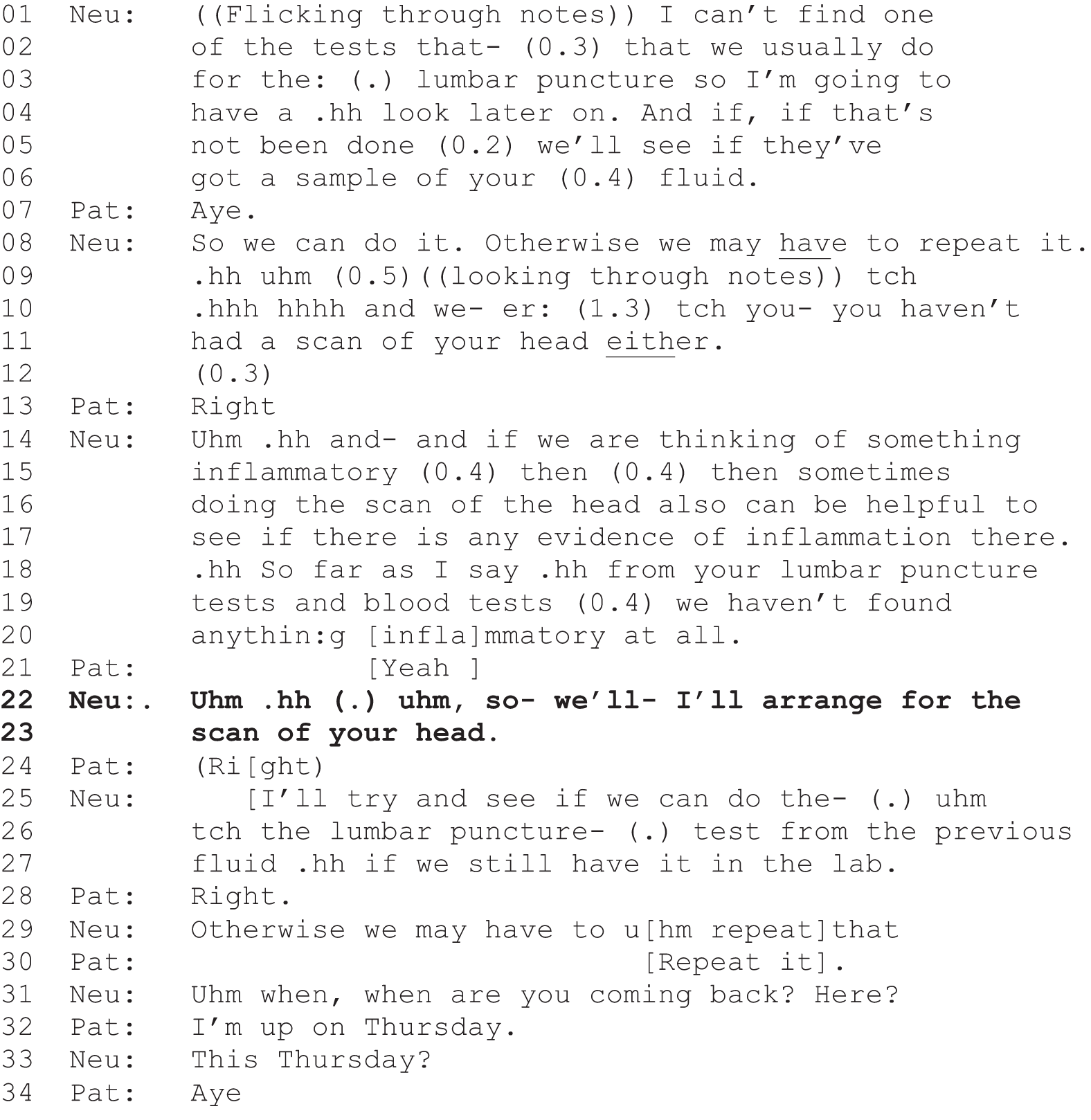

Table 1 provides our codebook definitions for each practice, with examples. For ease of reading, Jeffersonian transcription notation is not used here, but was used to conduct the CA work (transcription conventions are shown in Appendix 1). The identifiers show where the consultation was recorded (Glasgow or Sheffield) and the number of the recording (numbered consecutively at each site from 001) so that, for example, G001 was the first recording made in Glasgow. In addition, each clinician was given a two-digit number as a personal identifier. However, because our sample of neurologists is relatively small and we have (with permission) named both study sites, we have decided not to include these personal identifiers in interactional data extracts, to maintain the clinicians’ anonymity. For the same reason, we have chosen not to identify the neurologists’ gender; hence, we have used gender-neutral pronouns (e.g. ‘s/he’, ‘his/her’, ‘they/their’) when referring to individual clinicians. It is worth pointing out here that we did not have sufficient numbers of neurologists in the study to be able to examine any gender differences statistically.

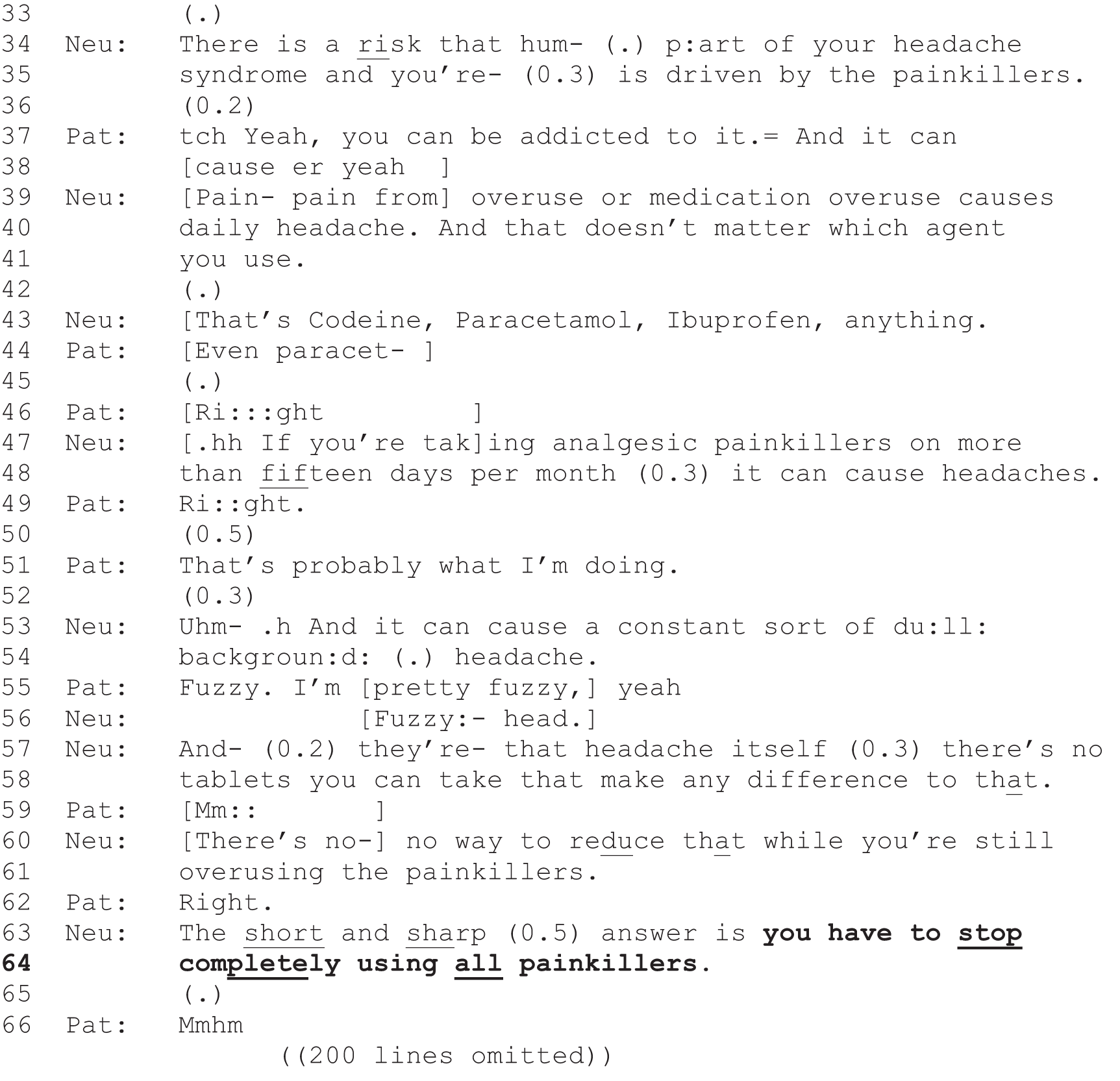

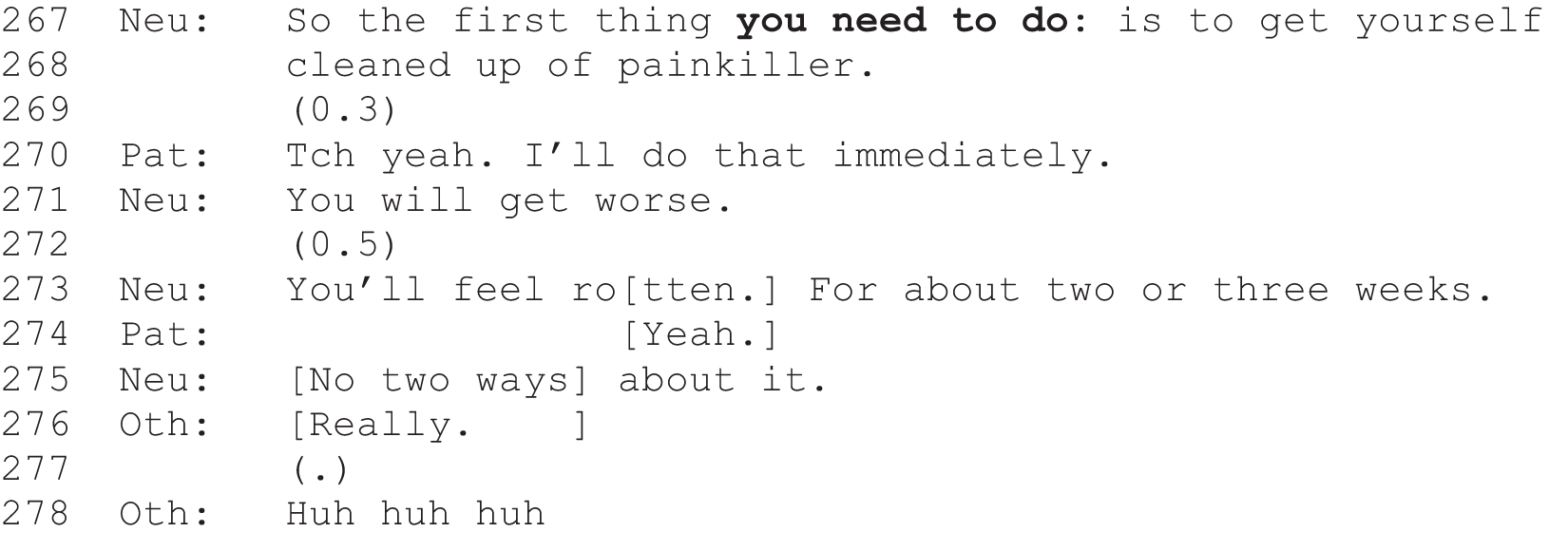

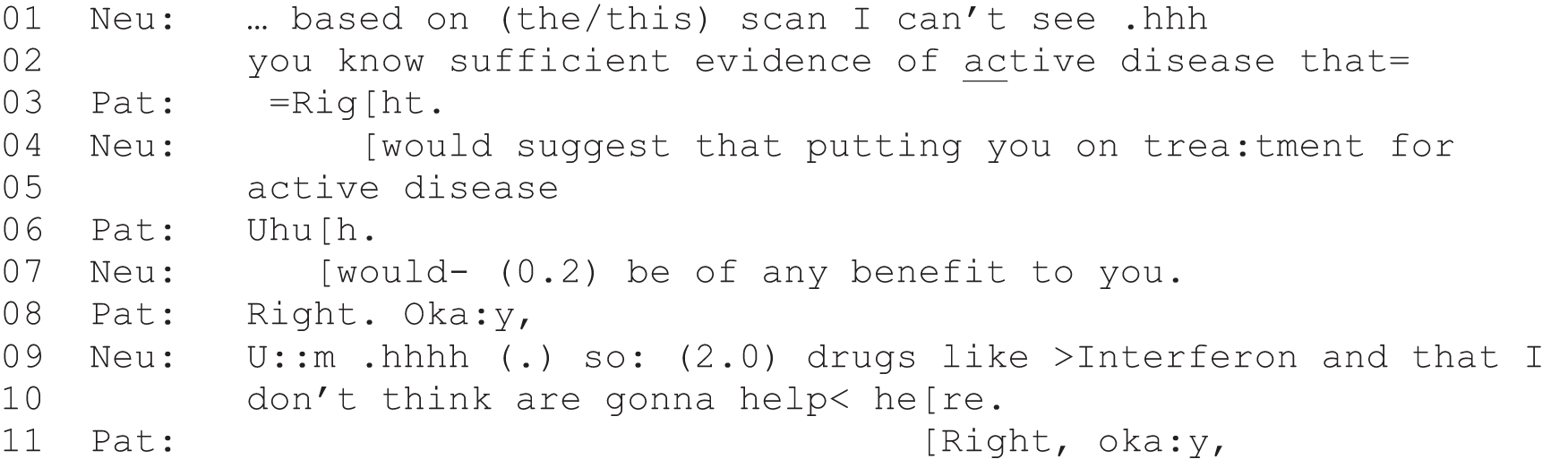

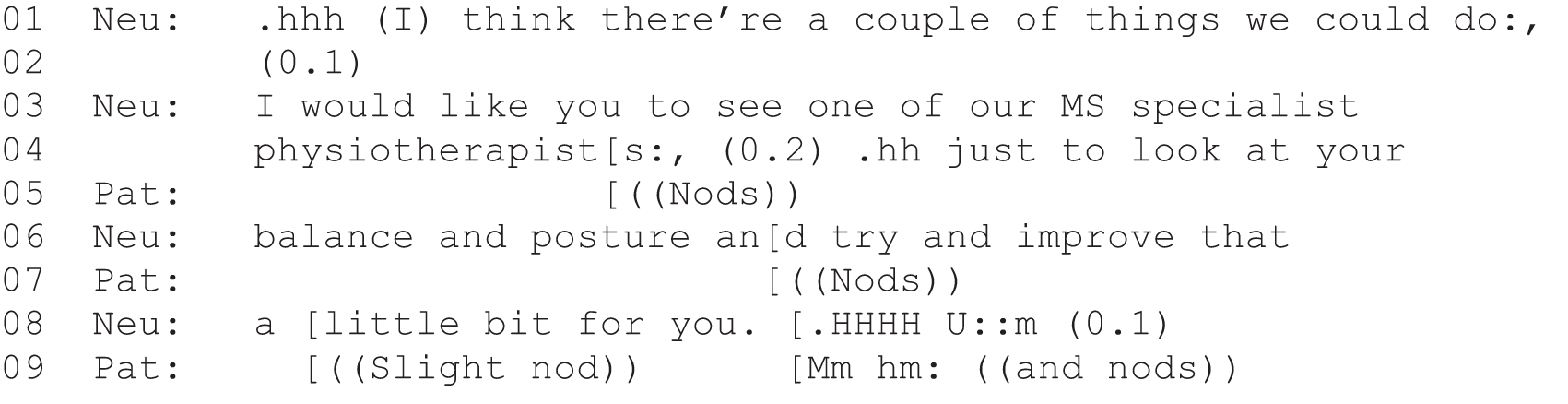

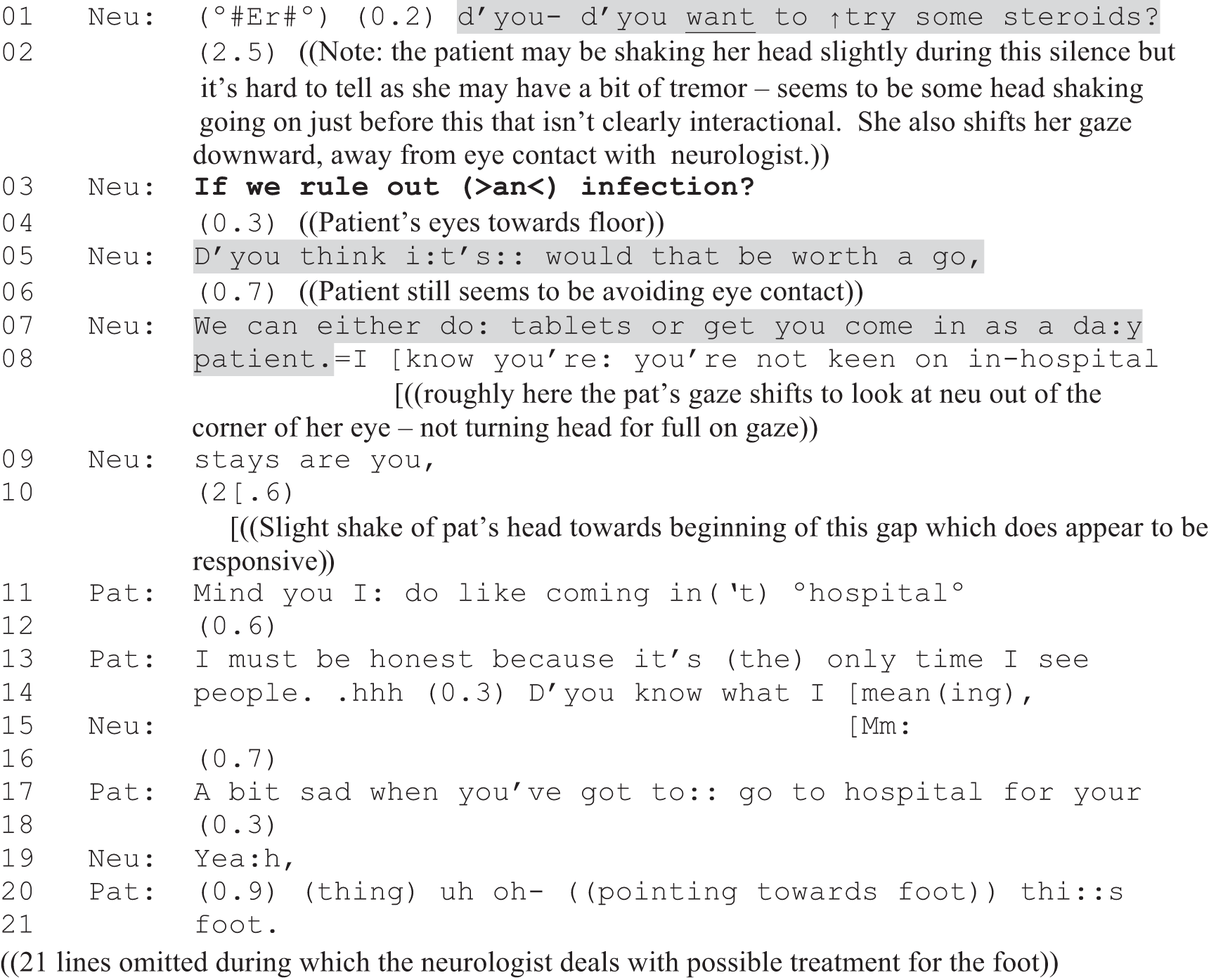

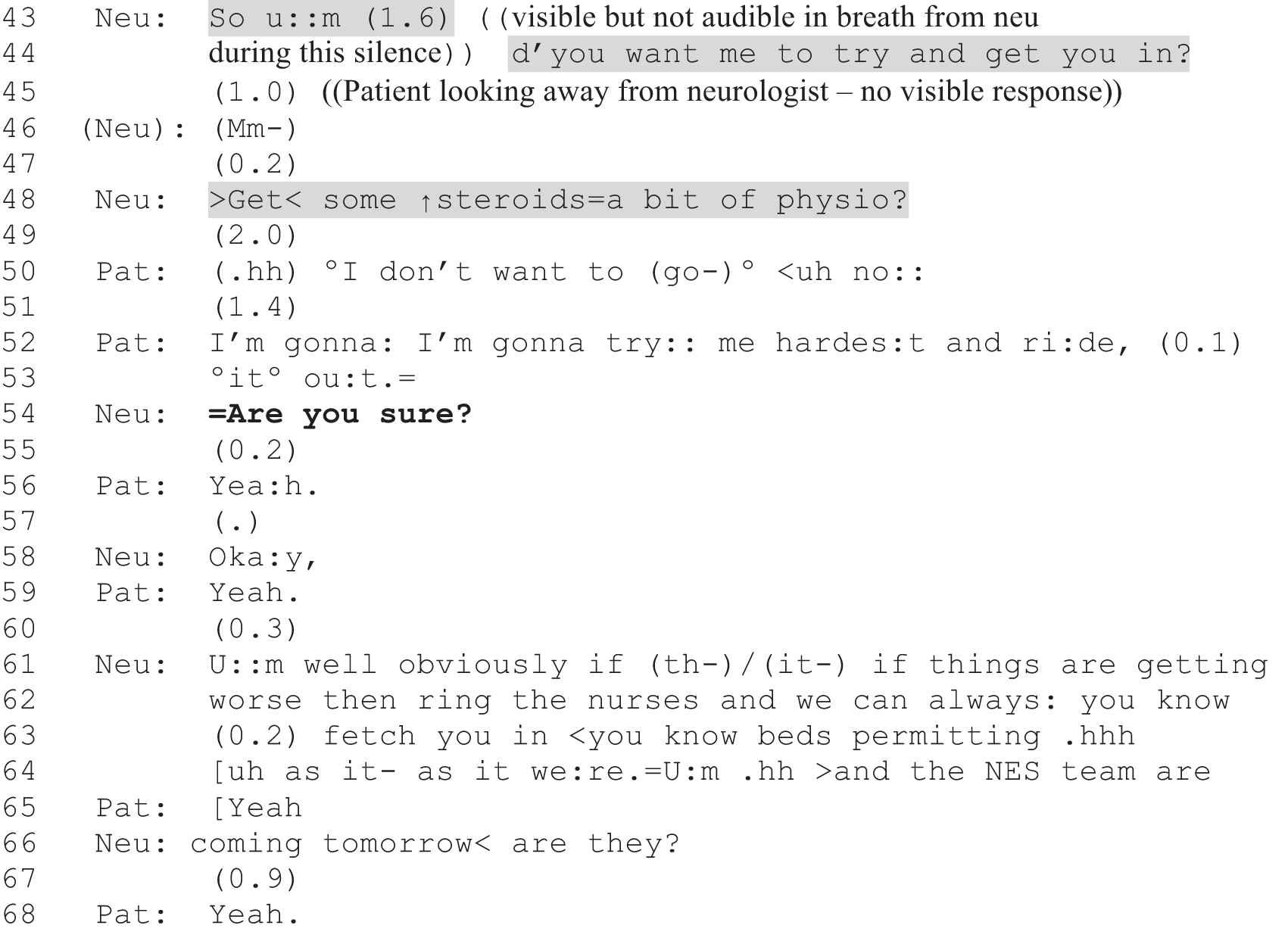

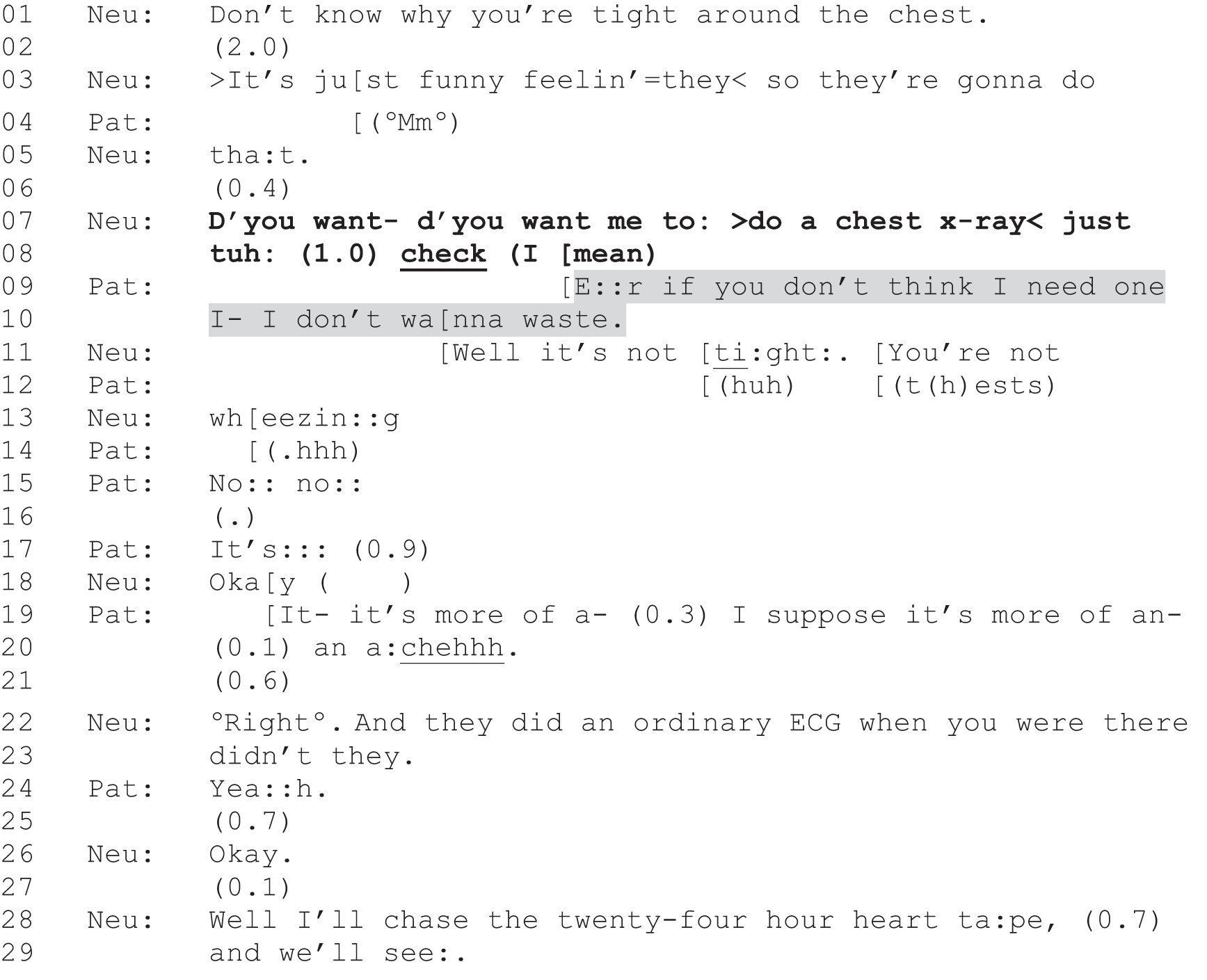

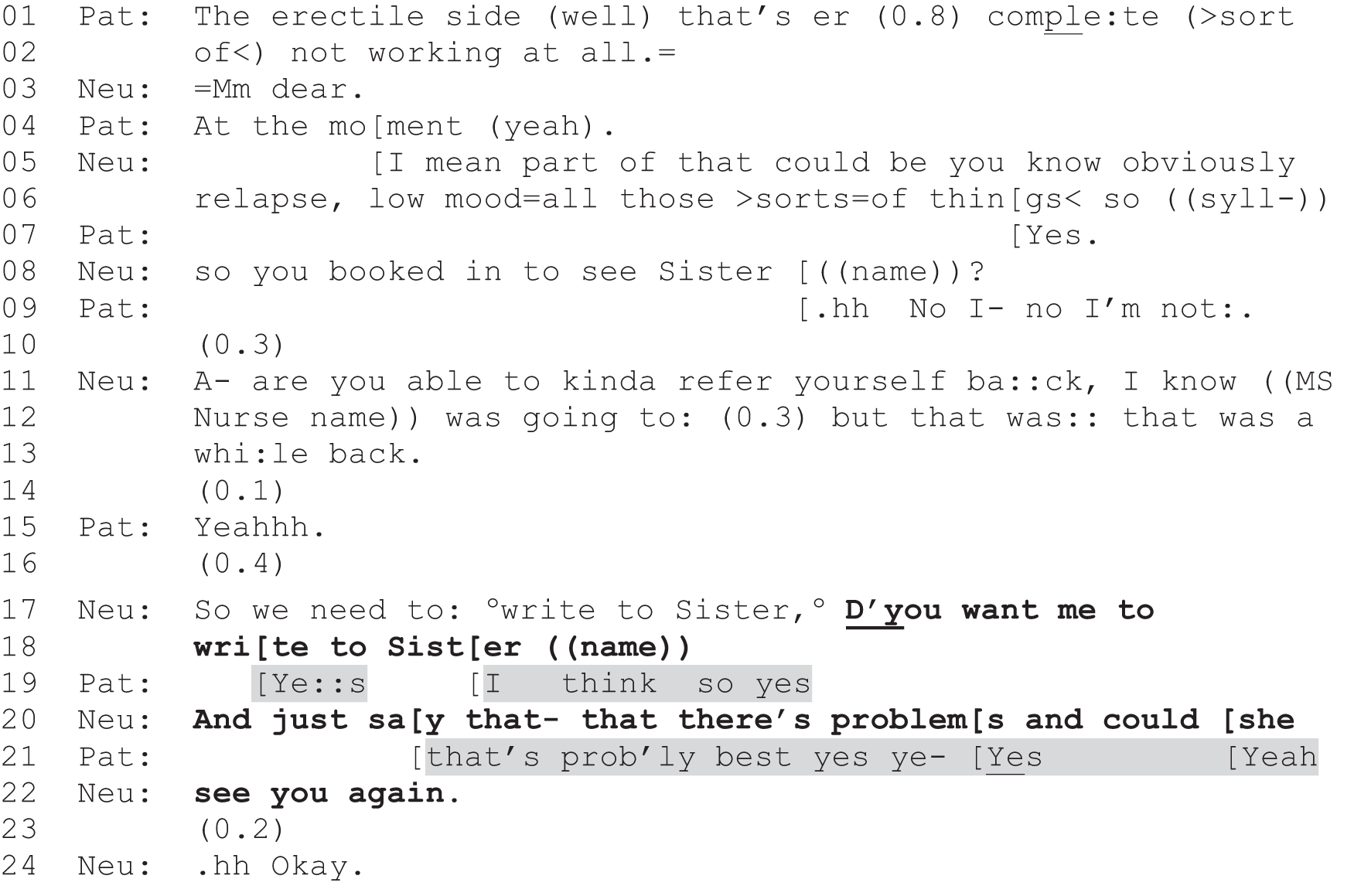

| Practice | Definition | Examples (consultation code) |
|---|---|---|
| Recommendation | Neurologist asserts what treatment, investigation or referral is necessary or is going to happen in a way that suggests a decision has already been made. Patient is given no slot to make a choice | |
| | or | |
| | The neurologist proposes the action in more tentative terms, suggesting an element of choice, e.g. ‘I recommend that you try’, ‘I wonder if we could try’ or ‘I suggest’ | |
| PVE | Neurologist indicates that there is at least one option that they are willing to offer the patient, but seeks the patient’s view on this, thereby creating an explicit slot for the patient to participate in the decision-making process | |
| Option-list | Neurologist provides a menu of options from which the patient may be invited to select | |

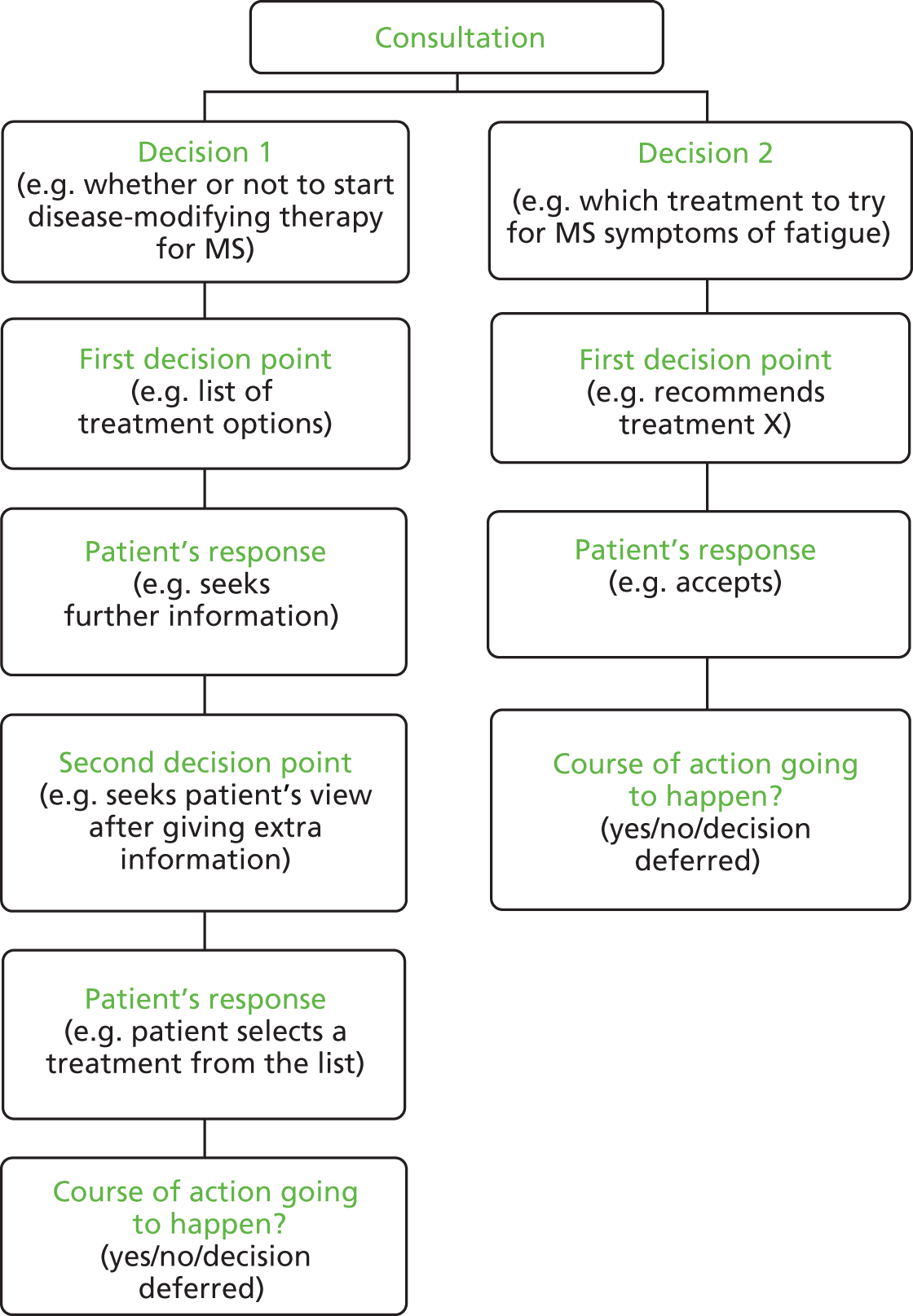

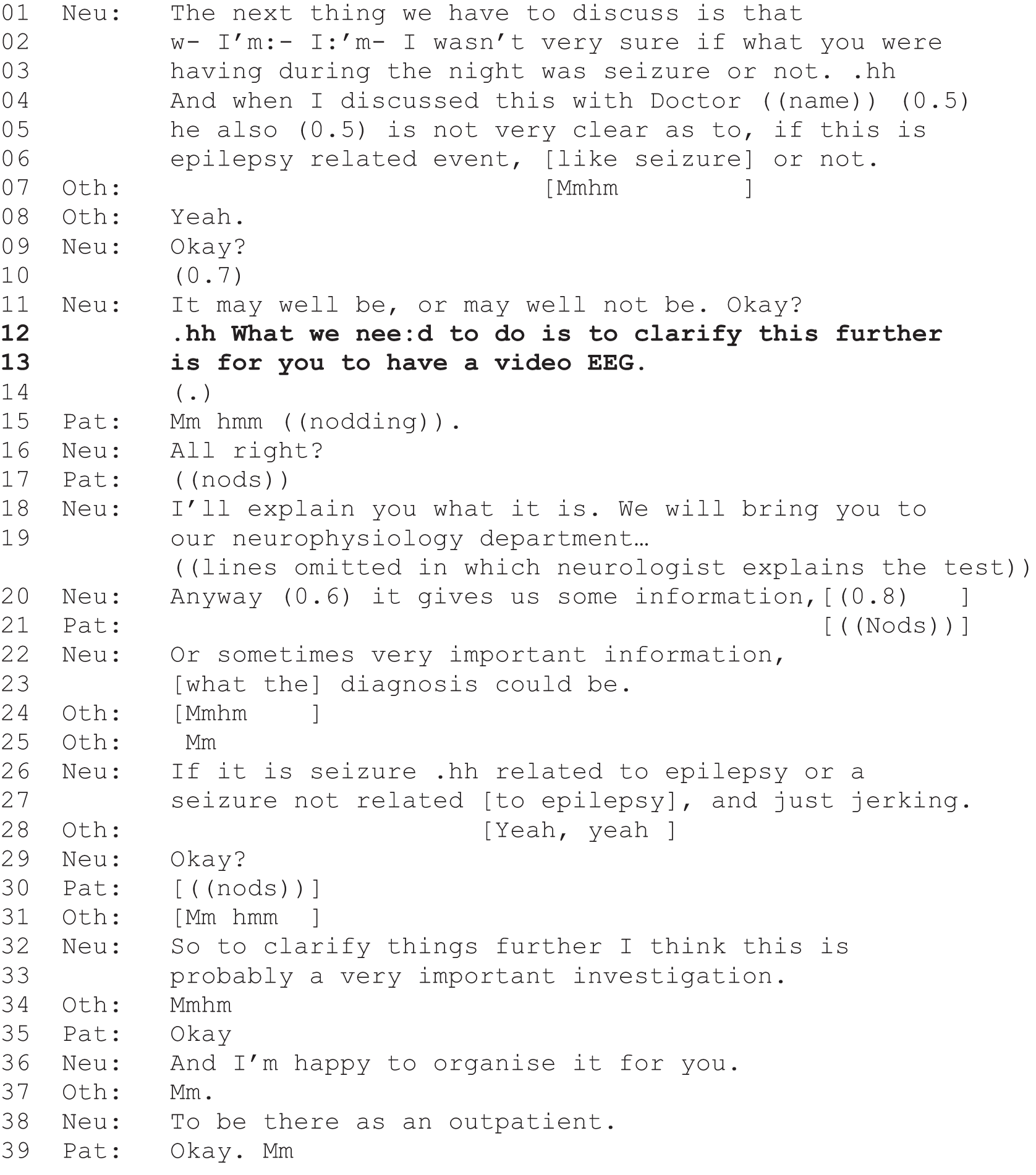

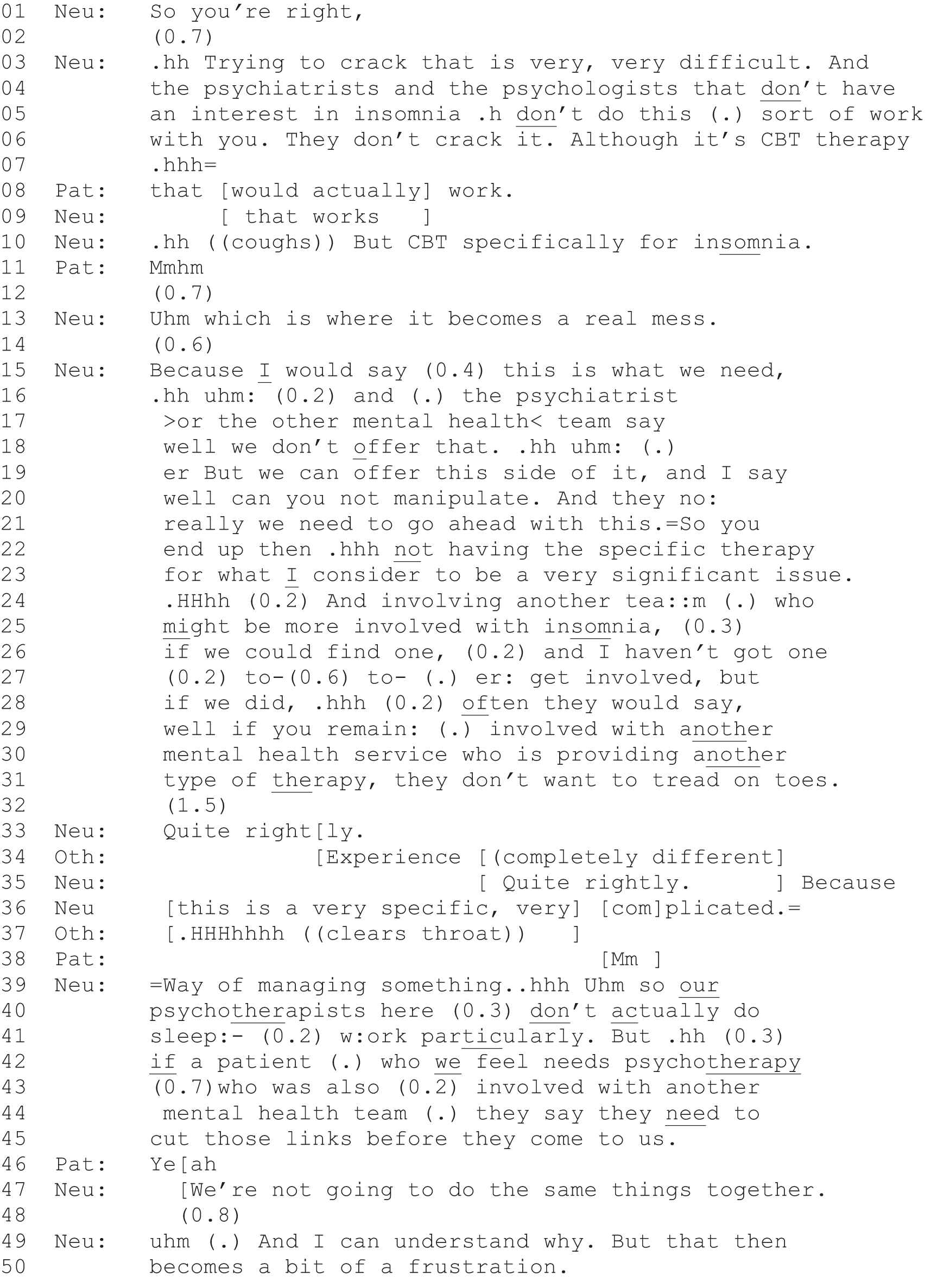

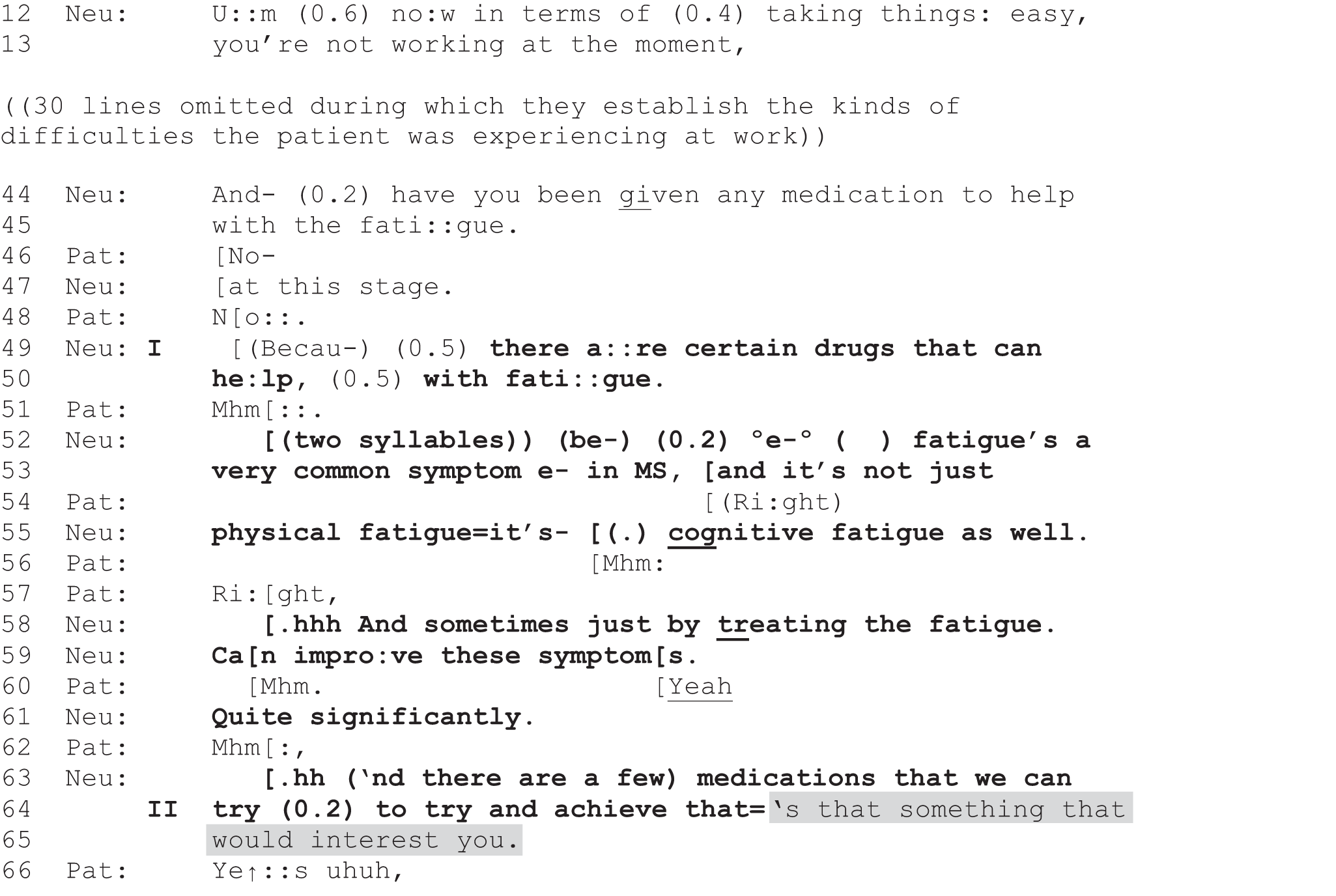

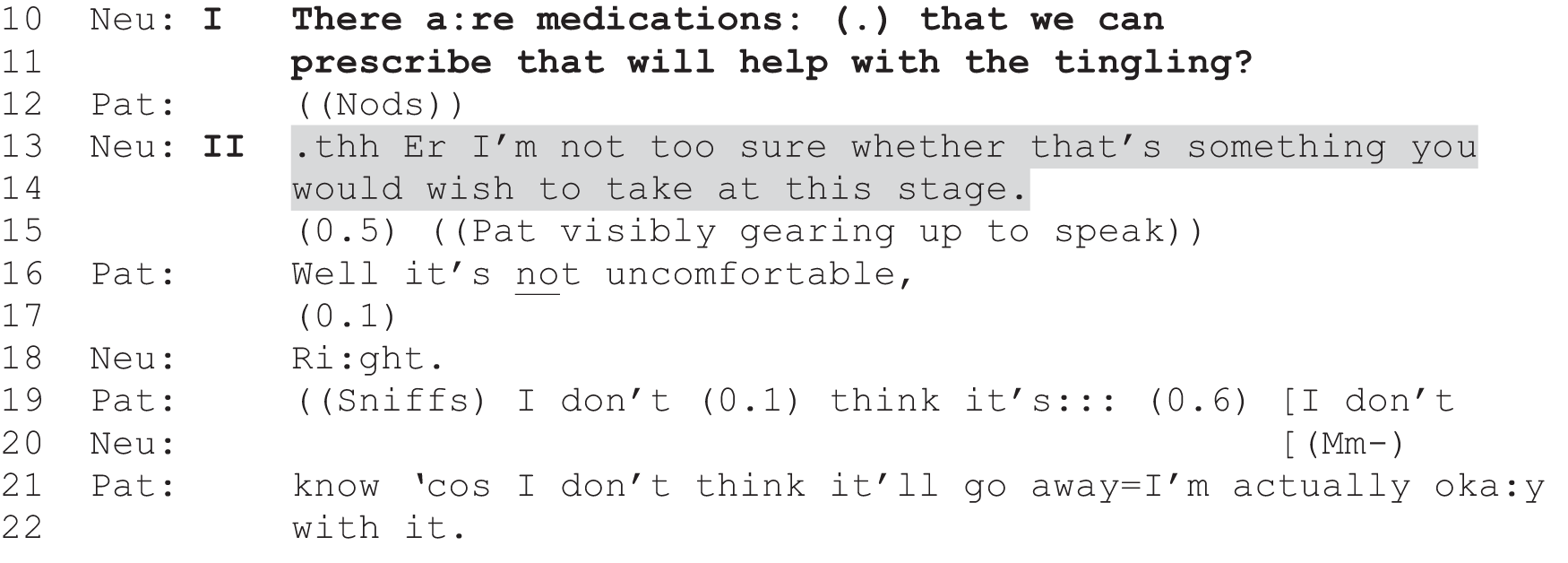

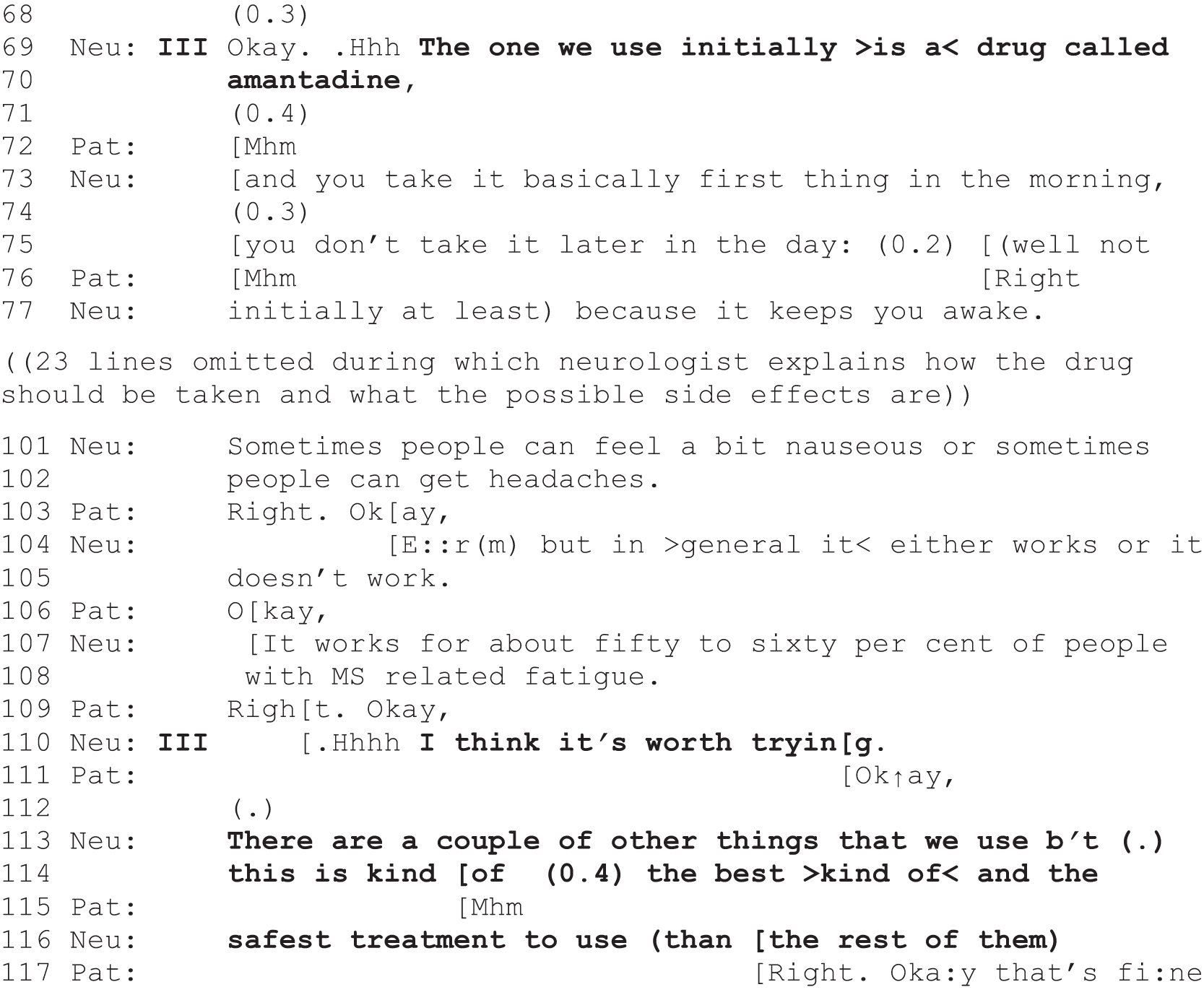

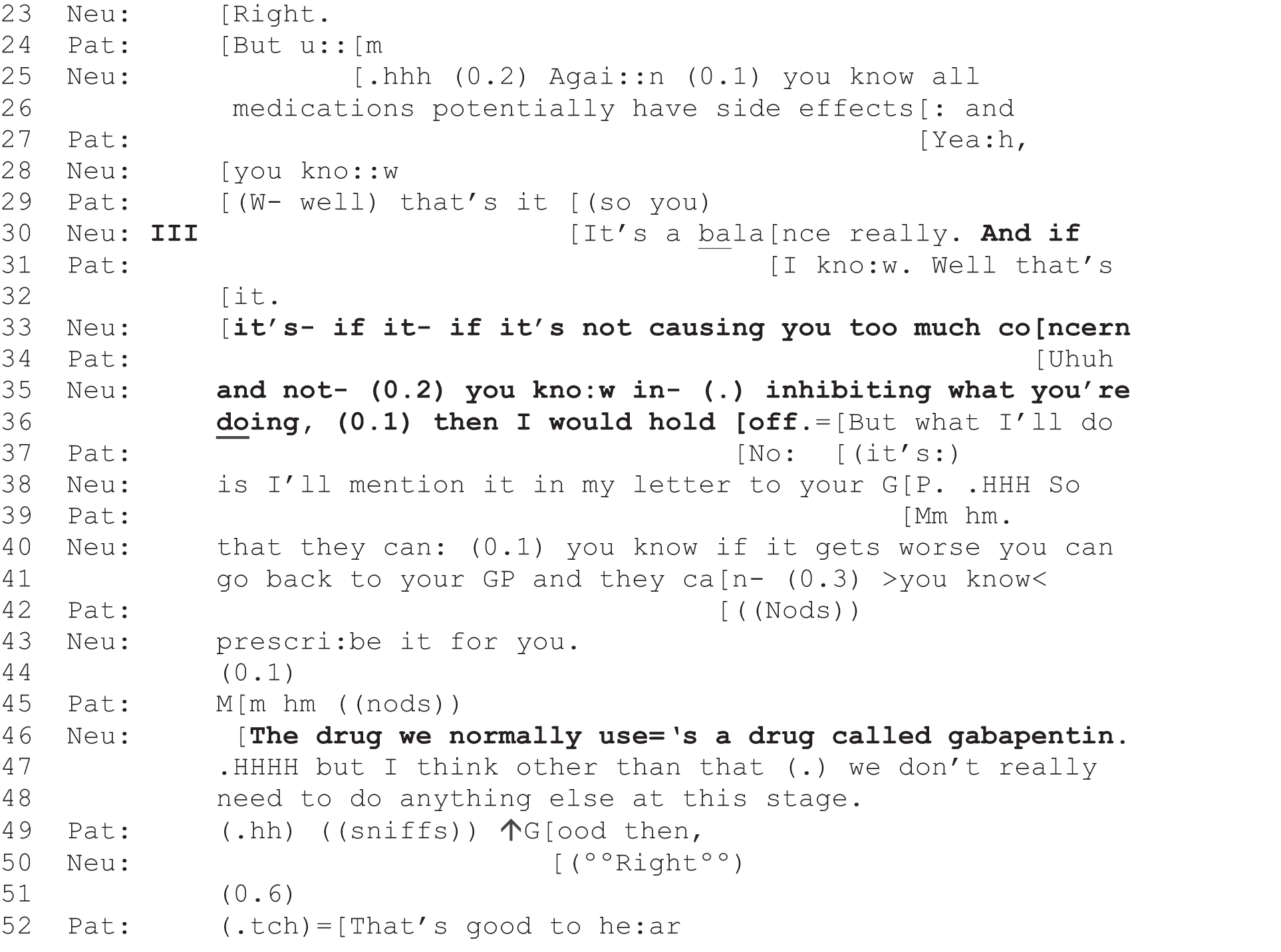

Figure 1 shows how a single consultation might have more than one decision and how each decision may have one or more decision points requiring coding. The data produced by applying the coding scheme have a nested, multilevel structure. The highest level relates to the consultation; the variable measuring the length of the consultation is at this level. The middle level is decision-level information. Each consultation contains a number of different decisions; an example of a variable at this level is the one classifying the type of decision being made. The lowest level of information is the decision-point level. Each decision is made up of one or more decision points, each entailing one of the three types of practice.
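The three-level nesting described above can be sketched as a small set of Python data classes. This is an illustration only, not the structure of the study's spreadsheet: the class names, field names and the example consultation (identifier `X000`) are all invented for the purpose.

```python
from dataclasses import dataclass, field
from typing import List

# The three practices coded in the study.
PRACTICES = ("option-list", "PVE", "recommendation")


@dataclass
class DecisionPoint:
    """Lowest level: one neurologist turn coded as one of the three practices."""
    practice: str   # one of PRACTICES
    response: str   # immediate response category from the codebook
    text: str       # transcript extract on which the code is based

    def __post_init__(self) -> None:
        if self.practice not in PRACTICES:
            raise ValueError(f"unknown practice: {self.practice!r}")


@dataclass
class Decision:
    """Middle level: one decision, holding its decision points in sequence."""
    decision_type: str  # e.g. 'treatment', 'investigation' or 'referral'
    points: List[DecisionPoint] = field(default_factory=list)


@dataclass
class Consultation:
    """Highest level: one recorded consultation."""
    consultation_id: str
    length_minutes: float
    decisions: List[Decision] = field(default_factory=list)

    def n_decision_points(self) -> int:
        # Count decision points across every decision in the consultation.
        return sum(len(d.points) for d in self.decisions)


# Invented example, not taken from the data set.
example = Consultation(
    consultation_id="X000",
    length_minutes=20.0,
    decisions=[
        Decision("treatment", [
            DecisionPoint("option-list", "seeks information", "..."),
            DecisionPoint("PVE", "goes for option", "..."),
        ]),
        Decision("referral", [
            DecisionPoint("recommendation", "acknowledges", "..."),
        ]),
    ],
)
print(example.n_decision_points())
```

Keeping decision points in a list preserves their sequential order, which is what allows first decision points to be compared with later ones.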

FIGURE 1. Example of how a consultation might be coded for more than one decision and one or more decision points per decision.

Intercoder reliability testing

A total of 30 cases were randomly selected from the remaining sample (i.e. those cases that had not been part of the development of the coding scheme) and independently coded. Each of the three coders coded 20 cases, 10 with each of the other two coders. Formal intercoder reliability testing was conducted to quantify the extent to which the process could be described as reliable.
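One way to arrive at the double-coding design described above (30 cases, each coded by two of three coders, so that each coder codes 20) is sketched below. The report does not say how cases were allocated to coder pairs, so the random allocation, the function name `assign_pairs` and the case identifiers are assumptions made for illustration.

```python
import random
from itertools import combinations


def assign_pairs(case_ids, coders=("A", "B", "C"), seed=0):
    """Randomly split cases evenly across all possible coder pairs."""
    pairs = list(combinations(coders, 2))  # (A, B), (A, C), (B, C)
    if len(case_ids) % len(pairs) != 0:
        raise ValueError("cases cannot be split evenly across coder pairs")
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    per_pair = len(shuffled) // len(pairs)
    return {
        pair: shuffled[i * per_pair:(i + 1) * per_pair]
        for i, pair in enumerate(pairs)
    }


# Hypothetical case identifiers standing in for the 30 sampled consultations.
cases = [f"case{n:02d}" for n in range(1, 31)]
allocation = assign_pairs(cases)

# Workload per coder: each coder appears in two of the three pairs.
workload = {
    coder: sum(len(ids) for pair, ids in allocation.items() if coder in pair)
    for coder in ("A", "B", "C")
}
print(workload)
```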

Intercoder reliability testing focused on the first decision point of each decision. Two aspects of reliability were assessed: first, the extent to which coders were able to identify the same parts within the consultations as initiation points; and, second, the extent to which coders were able to agree on the classifications applied to the decision points they had identified (the type of decision that was being made, the patient’s response, etc.).

Coder A (Paul Chappell) identified 32 initiation points within 20 consultations. A total of 24 (75%) of these initiation points were also identified by the second coder (half of the time the second coder was B and half of the time the second coder was C). Coder B (Merran Toerien) identified 37 initiation points within 20 consultations. A total of 30 (81%) initiation points were also identified by the second coder. Coder C (Clare Jackson) identified 37 initiation points within 20 consultations. A total of 24 (65%) of these initiation points were also identified by the second coder. This gives a total agreement rate of 74%. Although this indicates that there is some disagreement over which interactional turns should be coded as relevant to our study, both coders agreed on a large majority of first decision points.
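The percentages above can be checked with a few lines of arithmetic. The report does not state how the overall rate was derived; the sketch below assumes it is the pooled proportion (all matched initiation points divided by all identified initiation points), which reproduces the reported figure.

```python
# Initiation points identified by each coder, and how many of those were
# also identified by the second coder (figures taken from the text above).
identified = {"A": 32, "B": 37, "C": 37}
matched = {"A": 24, "B": 30, "C": 24}

# Per-coder agreement rate, as a whole-number percentage.
per_coder = {c: round(100 * matched[c] / identified[c]) for c in identified}

# Assumed pooling method: total matched over total identified.
overall = round(100 * sum(matched.values()) / sum(identified.values()))

print(per_coder)  # {'A': 75, 'B': 81, 'C': 65}
print(overall)    # 74
```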

In the second part of the intercoder reliability analysis we used cross-tabulations and Cohen’s kappa to explore intercoder agreement in the classification of the 39 decision points that both coders had identified across the 30 consultations (although it is worth noting that not all of the consultations contained initiation points). Table 2 shows the results for intercoder agreement on decision-level questions related to those decision points. Separate percentage agreement and kappa scores have been estimated for each of the different variables. Landis and Koch87 suggest that kappa scores of 0.40 to 0.59 show moderate agreement, 0.60 to 0.79 substantial agreement and ≥ 0.80 outstanding agreement.
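For readers unfamiliar with the statistic, Cohen’s kappa corrects the observed proportion of agreement for the agreement expected by chance given each coder’s category frequencies. The following is a minimal sketch of that calculation, not the software used in the study; the function names are hypothetical, the two short code lists are invented, and the band labels simply follow the thresholds quoted in the text.

```python
from collections import Counter


def cohens_kappa(codes_1, codes_2):
    """Cohen's kappa for two coders' categorical codes of the same items."""
    if len(codes_1) != len(codes_2) or not codes_1:
        raise ValueError("both coders must code the same non-empty set of items")
    n = len(codes_1)
    # Observed proportion of items on which the coders agree.
    observed = sum(a == b for a, b in zip(codes_1, codes_2)) / n
    # Chance agreement from each coder's marginal category frequencies.
    freq_1, freq_2 = Counter(codes_1), Counter(codes_2)
    expected = sum(freq_1[c] * freq_2[c] for c in freq_1) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


def band(kappa):
    """Label a kappa score using the thresholds quoted in the text."""
    if kappa >= 0.80:
        return "outstanding"
    if kappa >= 0.60:
        return "substantial"
    if kappa >= 0.40:
        return "moderate"
    return "below moderate"  # label not given in the text; assumed here


# Invented codes for six decision points (not study data).
coder_1 = ["recommendation", "recommendation", "PVE", "option-list", "recommendation", "PVE"]
coder_2 = ["recommendation", "recommendation", "PVE", "option-list", "PVE", "PVE"]

kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 2), band(kappa))
```

In this toy example the coders agree on five of six items (83%), but kappa is lower (about 0.74) because some of that agreement would be expected by chance.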

| Variable | Agreement (%) | κ |
|---|---|---|
| Type | 92.3 | 0.87 |
| Turn design | 79.4 | 0.70 |
| Who responds? | 84.6 | 0.60 |
| Response | 87.1 | 0.83 |
| Is the outcome in line with what the neurologist thinks best? | 87.1 | 0.46 |
| Is the outcome in line with what the patient appears to prefer? | 76.9 | 0.59 |
| Is the course of action going to happen in principle? | 97.4 | 0.92 |

Some level of disagreement between coders is to be expected when applying quantitative classifications to complex interactional data. However, the results of our reliability analysis are encouraging. In percentage terms, coders agreed with each other most of the time for all of the different variables. Kappa scores indicate that there is a substantial or outstanding level of agreement for all variables, with the exception of the questions regarding whether or not the end outcome is one that the neurologist and patient appear to prefer. These questions relied on coders to some extent interpreting the motivations of the participants, so it is perhaps not surprising that it was hard to achieve high intercoder reliability for these variables. Nevertheless, these results indicate that the coding scheme is reliable for all but these two variables. It is also worth noting that these results may occasionally be overestimating the final differences between coders because some of the categories were combined in later analyses. For example, we collapsed pronouncements and recommendations together. This means that all cases in the reliability analysis that were coded as a recommendation by one coder but as a pronouncement by another would have been counted as ‘disagreement’ under this testing process. However, if the categories had been collapsed together at the point of testing, all these cases would have counted as ‘agreement’.
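The effect of collapsing categories on measured agreement can be illustrated with a toy example. The codes below are invented and the helper names are hypothetical; the point is only that a pronouncement/recommendation mismatch counts as disagreement before collapsing and as agreement afterwards.

```python
# Mapping used to merge the finer category into the broader one.
COLLAPSE = {"pronouncement": "recommendation"}


def collapse(codes, mapping=COLLAPSE):
    """Replace any code found in the mapping with its collapsed category."""
    return [mapping.get(code, code) for code in codes]


def percent_agreement(codes_1, codes_2):
    """Percentage of items given the same code by both coders."""
    if len(codes_1) != len(codes_2) or not codes_1:
        raise ValueError("both coders must code the same non-empty set of items")
    same = sum(a == b for a, b in zip(codes_1, codes_2))
    return 100 * same / len(codes_1)


# Invented codes for four decision points (not study data).
coder_1 = ["pronouncement", "recommendation", "PVE", "option-list"]
coder_2 = ["recommendation", "recommendation", "PVE", "PVE"]

before = percent_agreement(coder_1, coder_2)
after = percent_agreement(collapse(coder_1), collapse(coder_2))
print(before, after)  # 50.0 75.0
```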

After the 30 cases had been coded, the coders discussed their differences and decided on agreed codings for any disagreements, and agreed how similar issues would be dealt with in future. After intercoder reliability testing, CJ and PC coded the remainder of the consultations, independently coding a randomly selected half of the remaining cases each. Where coders found problematic cases, with decision points that were hard to identify or classify, these were discussed by all three coders and agreed with reference to the rules outlined in the codebook.

Innovative use of an online extraction questionnaire

The coding was handled through a bespoke online data EQ designed by PC using Google Forms (Google Inc., Mountain View, CA, USA) (see Appendix 6). The EQ incorporated both categorical data (selecting from among fixed-choice options) and the relevant section of text that comprised the decision point from the transcript. This forced coders to be decisive without losing the original text as evidence for their coding decisions. The EQ retained the sequential order of decision points by releasing subsequent questions depending on how the researcher had answered the first set of questions. For example, after inputting data about a first decision point for a particular decision, the questionnaire required the researcher to note whether or not there were any more decision points for that decision. If they responded yes, they were given an opportunity to input details about the next decision point for the decision. When all decision points for a single decision had been input, decision-level questions were then completed. If there was another decision for the consultation then a new decision-point chain was begun. If not, the consultation-level question (how long is the consultation?) was asked.
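The branching order described above can be sketched as a simple loop. This is an illustrative reconstruction of the question flow only: the function name and question labels are hypothetical, and it does not reproduce the actual Google Forms configuration.

```python
def question_sequence(points_per_decision):
    """Return the order in which question blocks would be released.

    `points_per_decision` holds one entry per decision: the number of
    decision points the coder reports for that decision.
    """
    sequence = []
    for d, n_points in enumerate(points_per_decision, start=1):
        for p in range(1, n_points + 1):
            sequence.append(f"decision {d}: decision point {p}")
            # After each decision point the coder is asked whether another follows.
            sequence.append(f"decision {d}: more decision points?")
        # Decision-level questions follow the last decision point of the chain.
        sequence.append(f"decision {d}: decision-level questions")
        sequence.append("another decision?")
    # Asked once, after the final decision.
    sequence.append("consultation-level question: duration")
    return sequence


# A consultation with two decisions, the first taking two decision points.
flow = question_sequence([2, 1])
```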

The EQ was thus designed such that the basic unit of analysis was the decision point, but the different decision points were linked together through reference to the decision (coded for what the decision was about and whether it related to an investigation, treatment, referral or some combination) and through the use of a numbering system to keep the decision points in order. In turn, the decision-level data were linked to the consultation-level data through the use of unique consultation identifiers. By taking this approach to coding, we were able to distinguish between first and subsequent decision points (to see, for example, whether a particular practice was more commonly used to start or pursue decision-making) and to track through chains of decision points for single decisions to see whether or not certain trajectories were more common than others (e.g. an option-list followed by a PVE). The methodology we describe here is unique, as far as we are aware. It provided multiple benefits in the development, testing and application stages of the coding process – a point to which we return in Chapter 11.

The end product of the application of the coding scheme was a matrix, held in a Microsoft Excel spreadsheet, in which each decision point from a given EQ was represented by a single row, with identifiers and numbering linking the rows together so that they could be readily recoded into decision-level and consultation-level data. As described in more detail in Chapter 3, 144 of the 223 cases coded had at least one decision that met our coding criteria.

Quantitative data analysis

The decision-point matrix described above represented the lowest level of data available for analysis. This data set was collapsed into further data sets so that analyses could be conducted at higher levels (decision level and consultation level), facilitating comparisons of practice at each of the three levels. The consultation-level data resulting from this process were merged with consultation-level data derived from the patient/neurologist questionnaires so that the relationships between interactional variables and questionnaire variables could be investigated. Quantitative analyses were conducted using Microsoft Excel and SPSS (Statistical Package for Social Sciences) version 22 (IBM SPSS Statistics, Armonk, NY, USA). These focused on the links between forms of decisional practice and other relevant variables. Analyses were conducted at the three different levels of analysis, depending on their appropriateness for addressing different research questions.
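The collapsing of the decision-point matrix into higher-level data sets can be illustrated as a grouping operation. The sketch below is not the procedure actually carried out in Excel and SPSS; the field names, identifiers and rows are hypothetical.

```python
from collections import defaultdict

# Hypothetical decision-point rows (invented values, not study data).
rows = [
    {"consultation": "X01", "decision": 1, "point": 1, "practice": "recommendation"},
    {"consultation": "X01", "decision": 1, "point": 2, "practice": "PVE"},
    {"consultation": "X01", "decision": 2, "point": 1, "practice": "option-list"},
    {"consultation": "X02", "decision": 1, "point": 1, "practice": "recommendation"},
]


def collapse_rows(rows, key_fields):
    """Group decision-point rows by the given identifier fields, keeping order."""
    ordered = sorted(rows, key=lambda r: (r["consultation"], r["decision"], r["point"]))
    groups = defaultdict(list)
    for row in ordered:
        groups[tuple(row[f] for f in key_fields)].append(row["practice"])
    return dict(groups)


# One entry per decision, then one entry per consultation.
decision_level = collapse_rows(rows, ["consultation", "decision"])
consultation_level = collapse_rows(rows, ["consultation"])
```

Because every row carries both identifiers, the same matrix yields either level of data simply by changing the grouping key.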

Consultation-level analyses

Consultation-level analyses were mainly focused on examining the links between practices and the characteristics of patients and consultations. For example, in Chapter 6 the links between demographic characteristics of patients and the use of different practices are investigated. To facilitate these analyses, consultations were classified on the basis of the practices that were present in them using binary categorical coding.

This method of classification was required because many of the consultations have more than one decision point and because the presence or absence of a certain decisional practice does not preclude the presence or absence of another practice (in other words, the three practices are not mutually exclusive at the consultation level). There are some consultations that involve all three decisional practices, some which contain two of the three and some that contain only one. Therefore, when we conduct comparisons between different consultations, it is not possible to classify each consultation as including one of the three types and to compare the different types to one another. To address this issue, our three binary variables contrast all cases with:

- a PVE and all cases without a PVE
- an option-list and all cases without an option-list
- at least one PVE or option-list and all cases with neither.

The third comparison is potentially the most interesting because it allows a comparison of all the cases for which a patient’s view was explicitly elicited (through the use of a PVE and/or an option-list) with all the cases for which the neurologist employed only a single recommendation or chain of recommendations.
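The three binary variables can be derived from the list of practices coded in a consultation, as in the following sketch. The function and key names are hypothetical, and the example consultations are invented.

```python
def practice_flags(practices):
    """Derive the three binary variables from the practices coded in one consultation."""
    has_pve = "PVE" in practices
    has_option_list = "option-list" in practices
    return {
        "pve": has_pve,
        "option_list": has_option_list,
        # True when the patient's view was explicitly elicited by either practice.
        "pve_or_option_list": has_pve or has_option_list,
    }


# Invented examples: a consultation can hold any mix of the three practices.
all_three = practice_flags(["recommendation", "option-list", "PVE"])
recommendations_only = practice_flags(["recommendation", "recommendation"])
```

Coding each practice as present or absent, rather than assigning one type per consultation, is what allows consultations with several practices to be included in every comparison.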

Bivariate consultation-level analyses were conducted to investigate the links between perceived choice and decisional practice (see Chapters 4 and 7); demographic, clinical and consultation-based factors and decisional practice (see Chapter 6); and patient satisfaction and decisional practice (see Chapter 7). Crosstabs, comparison of means, chi-squared tests, t-tests and one-way analysis of variance (ANOVA) tests were employed where appropriate. Multivariate analyses were conducted using generalised estimating equations to take into account the clustered nature of the sample.
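As an illustration of the kind of bivariate test named above, the following sketch computes a Pearson chi-squared statistic for a 2 × 2 cross-tabulation. It assumes no continuity correction, uses invented counts and is not the SPSS procedure used in the study; the multivariate generalised estimating equations are not covered here.

```python
import math


def chi_squared_2x2(table):
    """Pearson chi-squared test (no continuity correction) for a 2 x 2 table."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    statistic = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Invented counts: rows = practice present/absent, columns = outcome yes/no.
statistic, p_value = chi_squared_2x2([[20, 10], [10, 20]])
```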

Decision-level analyses

Decision-level analyses were conducted in a similar way to the consultation-level analyses described above, in that binary variables describing whether or not each decision had a PVE, an option-list and at least one PVE or option-list were derived. Associations between these variables and the decision outcome variable (that recorded whether or not the outcome ended up being agreed on in principle) are reported in Chapter 8 using cross-tabulations and chi-squared tests.

Decision-point-level analyses

Decision-point analyses were in some ways more straightforward because each decision point was already coded as a form of decisional practice, so no recoding was required. In Chapter 8, the link between practices and immediate responses is investigated. Chapter 5 includes decision-point analysis that makes use of the data recording the sequence of decision points. Decisions often involve more than one decision point, and successive decision points may involve different types of practice. Decision points were therefore numbered on the basis of the order in which they occurred for each decision. Hence, it was possible to investigate whether certain practices tend to be used more frequently at the beginning of decision-making sequences, and to explore what types of decision points tend to follow others in decision-point chains. This decision-point analysis is descriptive and exploratory and involves charting the distribution of practices and responses across the data set.
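Because decision points are numbered in order within each decision, both kinds of descriptive count can be obtained by simple tallying. The sketch below uses invented decision-point chains and hypothetical variable names.

```python
from collections import Counter

# Invented decision-point chains, one list per decision, in order of occurrence.
chains = [
    ["recommendation", "PVE"],
    ["option-list", "PVE", "recommendation"],
    ["recommendation"],
]

# Which practice opens each decision-making sequence?
first_positions = Counter(chain[0] for chain in chains)

# Which practice follows which? Count each adjacent pair within a chain.
transitions = Counter(
    pair for chain in chains for pair in zip(chain, chain[1:])
)
```

Here `transitions[("option-list", "PVE")]` counts how often an option-list is immediately followed by a PVE, the kind of trajectory mentioned earlier in this chapter.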

Qualitative data analysis

One of the advantages of our approach to coding is that data were output in a format that allowed an easy integration of quantitative and qualitative analyses. We discuss these advantages further in Chapter 11. In brief, the data matrix allowed us to sort the data by quantitative categories to identify certain types of decision point. The result of this sorting is that all the relevant portions of transcript appear in a list on a single screen, so they can be explored for qualitative patterns. For example, one of the findings from our initial quantitative analysis (see Chapter 6) was that one neurologist used PVEs at a much higher frequency than the others. Using the spreadsheet of all decision points, it was straightforward to sort the data set by neurologist and by PVE. As one of the columns in the spreadsheet contains the qualitative text, all of this neurologist’s PVEs could be viewed on a single screen, and it was therefore possible to explore the precise formulations used and then go back to the original transcripts to undertake additional CA work.
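The sorting step can be thought of as a filter over the decision-point rows that returns the stored transcript text. In the sketch below the field names, neurologist labels and placeholder extracts are all hypothetical; in the study this was done by sorting the spreadsheet directly.

```python
# Hypothetical rows; the "text" values stand in for transcript extracts.
rows = [
    {"neurologist": "N1", "practice": "PVE", "text": "<extract A>"},
    {"neurologist": "N2", "practice": "PVE", "text": "<extract B>"},
    {"neurologist": "N1", "practice": "recommendation", "text": "<extract C>"},
    {"neurologist": "N1", "practice": "PVE", "text": "<extract D>"},
]


def extracts_for(rows, neurologist, practice):
    """Return the transcript text of every row matching both criteria."""
    return [
        row["text"]
        for row in rows
        if row["neurologist"] == neurologist and row["practice"] == practice
    ]


# All PVEs produced by one neurologist, ready for qualitative inspection.
n1_pves = extracts_for(rows, "N1", "PVE")
```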

We used a similar process to identify all relevant extracts that form the basis of the two CA chapters (see Chapters 9 and 10). For Chapter 9, we sorted the matrix into all first decision points and then into all first recommendations. We were then able to examine this subcollection of decisional practice both quantitatively, using descriptive statistics, and qualitatively, using CA. In Chapter 10, the matrix was first organised by PVEs occurring at any decision point and then these formats were explored using both descriptive statistics and CA.

We return to the methodological innovation of our approach in Chapter 11. For more on our use of CA, see the primary report.1 In the next chapter, Chapter 3, we present an overview of our data set to establish that our current working data set is comparable to that used in the primary study.

Chapter 3 Overview of the data set

Introduction

The aim of this chapter is to provide a descriptive overview of the data set and to explore the extent to which the working sample used in this follow-on study is comparable to the sample used originally. Some information is repeated here from the primary study report,1 but only when directly relevant to understanding our follow-on work.

Data collection sites

The two data collection sites serve large populations: the Royal Hallamshire Hospital in Sheffield serves as the clinical neuroscience hub to a population of 2.2 million; the general population served by the Southern General in Glasgow is 2.5 million. Each site offers general neurology as well as a range of subspecialty clinics (such as epilepsy, MS, dementia, ataxia, headache, cerebrovascular disease, neuromuscular disorders and movement disorders), ensuring a broad range of consultation types. New patient appointments are usually scheduled to last for 30–45 minutes and follow-up appointments for 15–20 minutes.

Recruitment figures for each site

A total of 14 clinicians (seven at each site) agreed for recordings of their consultations to be made, subject to patient consent. As detailed in Chapter 2, the eligible patient population included all patients (aged ≥ 16 years) attending clinics run by the participating clinicians, provided they could give informed consent in English. In total, 223 patients agreed to take part (Glasgow, n = 114; Sheffield, n = 109). One appointment per patient was captured. In addition to patients and clinicians, 114 accompanying others (including spouses, parents, carers and friends) consented to participate (Glasgow, n = 63; Sheffield, n = 51). These 223 cases formed the sample for our original study.1 Descriptive statistics showing the characteristics of this sample can be found in the original study.1

Follow-on study working sample

Of the 223 consultations, 144 were classified as involving a decision, including one or more decision-point types, according to the criteria outlined in Chapter 2. These 144 consultations make up the working sample for this study, on which all quantitative data analysis reported here is based. Reuber et al.1 demonstrated the suitability of the full (n = 223) sample for analysis (showing that there was no reason not to combine the data from Glasgow and Sheffield) but, because the working sample is considerably smaller than the full sample, we analysed the descriptive characteristics of the working sample to investigate how it may differ from the original.

Participant demographics

Within the working sample, the patients ranged from 17 to 80 years of age, with a mean age of 46 years. More than half of the patients were female (62%). A total of 93% of the sample described themselves as white (English, Welsh, Scottish, Northern Irish or British). A total of 41% of the sample was in employment (full or part time) or education. Educational qualifications beyond school leaving age (16 years) had not been obtained by 42% of the sample. The mean duration of consultations was 21.3 minutes [ranging from 5 to 62 minutes, standard deviation (SD) 11.2 minutes]. There were more than twice as many follow-up appointments as first appointments and most patients (83%) were seen in specialist rather than general clinics.

Table 3 shows the demographic features of the working sample in more detail, including a breakdown per study site. There were no differences between the two sites in terms of patient demographics. Furthermore, the demographic characteristics of our working sample (n = 144) are very similar to the whole sample (n = 223) (see original report1 for comparison).

| Variable | Study site | Combined | |

|---|---|---|---|

| Glasgow | Sheffield | ||

| Patients | |||

| n | 71 | 73 | 144 |

| Gender (%) | |||

| Female | 63.4 | 60.3 | 61.8 |

| Male | 36.6 | 39.7 | 38.2 |

| Ethnicity (%) | |||

| White | 94.4 | 89.0 | 91.7 |

| Other | 5.6 | 11.0 | 8.3 |

| Post-school qualifications? (n = 119)a (%) | |||

| Yes | 58.0 | 58.0 | 58.0 |

| No | 42.0 | 42.0 | 42.0 |

| Employment (%) | |||

| In work/education | 33.8 | 38.4 | 36.1 |

| Not working | 66.2 | 61.6 | 63.9 |

| Age (years), mean (SD) | 44.1 (15.0) | 48.1 (14.4) | 46.1 (14.8) |

| Consultations | |||

| Clinic type**** (%) | |||

| Seen in general clinic | 31.0 | 2.7 | 16.7 |

| Seen in specialist clinic | 69.0 | 97.3 | 83.3 |

| Accompanied? (%) | |||

| Accompanied | 50.7 | 52.1 | 51.4 |

| Alone | 49.3 | 47.9 | 48.6 |

| First appointment? (%) | |||

| First appointment | 35.3 | 24.6 | 29.5 |

| Follow-up appointment | 64.7 | 75.4 | 70.5 |

| Duration*** (minutes), mean (SD) | 18.8 (9.2) | 23.8 (12.4) | 21.3 (11.2) |

| Clinical features | |||

| Symptoms*** (%) | |||

| Completely/largely explained | 53.6 | 74.3 | 64.0 |

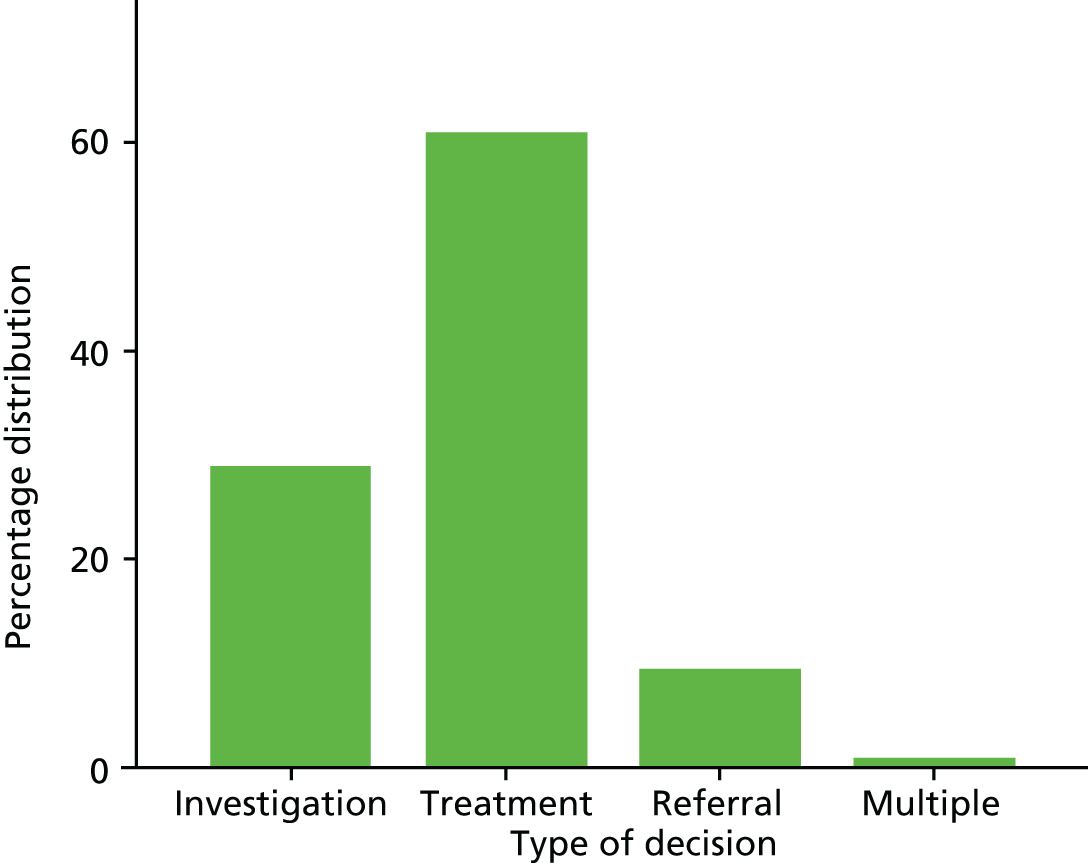

| Partly explained | 27.5 | 21.4 | 24.5 |