Notes

Article history

The research reported here is the product of an HS&DR Evidence Synthesis Centre, contracted to provide rapid evidence syntheses on issues of relevance to the health service, and to inform future HS&DR calls for new research around identified gaps in evidence. Other reviews by the Evidence Synthesis Centres are also available in the HS&DR journal. The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 16/47/17. The contractual start date was in April 2018. The final report began editorial review in June 2018 and was accepted for publication in October 2018. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Andrew Booth is a member of the National Institute for Health Research Complex Review Support Unit Funding Board.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2019. This work was produced by Chambers et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Background

Some parts of this chapter have been reproduced from Chambers et al.1 © Author(s) (or their employer(s)) 2019. Re-use permitted under CC BY. Published by BMJ. This is an open access article distributed in accordance with the Creative Commons Attribution 4.0 Unported (CC BY 4.0) license, which permits others to copy, redistribute, remix, transform and build upon this work for any purpose, provided the original work is properly cited, a link to the licence is given, and indication of whether changes were made. See: https://creativecommons.org/licenses/by/4.0/. The text includes minor additions and formatting changes to the original text.

Digital and online symptom checkers and assessment services are used by patients seeking guidance about an urgent health problem. These services generally provide people with possible alternative diagnoses based on their reported symptoms and/or suggest a course of action [e.g. self-care, attend a general practitioner (GP) appointment or go to an emergency department (ED)].

In England, the NHS111 service provides assessment and triage by telephone for problems that are urgent but not classified as emergencies. The latest data from NHS England2 show that in April 2018 there were 1,338,253 calls to NHS111, which is an average of approximately 46,100 calls per day. Of these calls, 12.1% resulted in an ambulance being despatched, 8.7% in a recommendation to attend an ED, 60.7% in a recommendation to attend primary care, 4.6% in a recommendation to attend another service and 14% in no recommendation to attend another service (e.g. the condition was considered suitable for self-care).

NHS England is planning to introduce a digital platform for NHS111. This would include a possibility for patients to be referred to the NHS111 telephone service for further assessment.

A beta version of the service (referred to as ‘NHS111 Online’) is available at the following website: https://111.nhs.uk/ (accessed 1 June 2018). The ‘digital 111’ service is seen as key to reducing demand for the telephone 111 service, enabling resources to be redirected to support ‘integrated urgent and emergency care systems’ (contains public sector information licensed under the Open Government Licence v3.0 www.nationalarchives.gov.uk/doc/open-government-licence/version/3/), as outlined in the NHS Five Year Forward View3 and its 2017 update Next Steps on the NHS Five Year Forward View.4

It is thus hoped that a digital 111 platform will help to manage demand and increase efficiency in the urgent and emergency care system, complementing the agenda of locally based Sustainability and Transformation Partnerships. However, there is a risk of increasing demand, duplicating health-care contacts and providing advice that is not safe or clinically appropriate. For example, an evaluation of the NHS111 telephone service at four pilot sites and three control sites found that in its first year the service was not successful in reducing 999 emergency calls or in shifting patients from emergency to urgent care.5 A recent study of 23 symptom checker algorithms providing diagnostic and triage advice that would form the basis of a digital 111 platform found deficiencies in both their diagnostic capabilities and their triage capabilities.6

In 2017, NHS England carried out pilot evaluations of different systems in four regions of England.7 The evaluations aimed to assess whether or not digital/online triage was acceptable to users and connected them with appropriate clinical care.7 The full report from NHS England had not been published at the time of writing this review. The objective of this systematic review was to inform further development of the proposed digital platform by summarising and critiquing the previous research in this area, from both the UK and overseas.

Chapter 2 Review methods

This systematic review was commissioned to provide NHS England with an independent review of previous research in this area to inform strategic decision-making and service design. The research questions that the review addressed are as follows (in relation to digital and online symptom checkers and health advice/triage services):

- Are these services safe and clinically effective? Do they accurately identify both patients with low-acuity problems and patients with high-acuity problems?
- What is the overall impact of these services on health-care demand? In particular, is there evidence that they drive patients towards higher-acuity services (e.g. ambulance and ED use)?
- Do individuals comply with these services and the advice received? Are these services used instead of, or as well as, other elements of urgent and emergency care?
- Are these services cost-effective?
- Are patients/carers satisfied with the services and the advice received?
- What are the implications of these services for equality of access to health services and inclusion/exclusion of disadvantaged groups?

Literature search and screening

Initial scoping searches revealed that a highly sensitive search strategy (designed to retrieve all relevant items), of the kind typically conducted for systematic reviews, retrieved a disproportionately high number of references on GPs’ decision-making and triage. These references were outside the scope of the NHS Digital 111 review and would have unnecessarily diverted resources from the review focus. Therefore, to optimise retrieval and sifting, we devised a three-stage retrieval strategy as an acceptable alternative to comprehensive topic-based searching. This involved:

1. Targeted searches of precise, high-specificity terms (designed to limit the search to items likely to be relevant). Box 1 shows an example for MEDLINE. We searched the following databases:
   - MEDLINE via Ovid
   - EMBASE via Ovid
   - The Cochrane Library via Wiley Online Library
   - CINAHL (Cumulative Index to Nursing and Allied Health Literature) via EBSCOhost
   - HMIC (Health Management Information Consortium) via OpenAthens
   - Web of Science (Science Citation Index and Social Science Citation Index) via the Web of Knowledge at the Institute for Scientific Information, now maintained by Clarivate Analytics
   - the Association of Computing Machinery (ACM) Digital Library.

   The searches were not restricted by language or date.

2. Phrase searching for named and generic systems. The list below was used for phrase searching and was compiled from a study of symptom checker performance6 and an examination of internet search results for symptom checkers:
   - Askmd
   - askmygp
   - BetterMedicine
   - DocResponse
   - Doctor Diagnose
   - Drugs.com
   - EarlyDoc
   - Econsult
   - engage consult
   - Esagil
   - Family Doctor
   - FreeMD
   - gp at hand
   - Harvard Medical School Family Health Guide
   - healthdirect
   - Healthline
   - Healthwise
   - Healthy Children
   - Isabel
   - iTriage
   - Mayo Clinic
   - MEDoctor
   - NHS Symptom Checkers
   - online triage
   - push doctor
   - Steps2Care
   - Symcat
   - Symptify
   - Symptomate
   - webgp
   - WebMD.

   The phrase searches were conducted on the databases listed in point 1, with no language or date restrictions.

3. Citation searches of key included studies and reviews. Reference checking of included studies and key reviews. This was complemented by contact with service providers, directly and via websites.

Ovid MEDLINE(R) Epub Ahead of Print, In-Process & Other Non-Indexed Citations, Ovid MEDLINE(R) Daily and Ovid MEDLINE(R) <1946 to Present>.

Search strategy:

1. (symptom checker or symptoms checker or symptom checkers or symptoms checkers).tw.
2. (‘self diagnosis’ or ‘self referral’ or ‘self triage’ or ‘self assessment’).tw. (10403)
3. TRIAGE/
4. 2 or 3
5. (online or on-line or web or electronic or automated or internet or digital or app or mobile or smartphone).tw.
6. 4 and 5
7. (‘online diagnosis’ or ‘web based triage’ or ‘electronic triage’ or etriage).tw.
8. 1 or 6 or 7
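The Boolean logic of the example strategy can be sketched with set operations. The following is a minimal illustration only: the record IDs are hypothetical and are not real MEDLINE results, and the function name `combine_search_lines` is introduced here purely for demonstration. It shows how the self-assessment/triage terms are broadened with OR, narrowed to online or digital contexts with AND, and then combined with the symptom checker and online triage phrase searches.

```python
# Hypothetical record IDs matched by each search line (illustrative only;
# these are NOT real MEDLINE results).
line_hits = {
    1: {101, 102},            # symptom checker terms
    2: {201, 202, 203},       # self diagnosis / referral / triage / assessment
    3: {203, 204},            # TRIAGE/ subject heading
    5: {102, 202, 204, 301},  # online / digital / app / mobile terms
    7: {401},                 # 'online diagnosis', 'electronic triage', etc.
}


def combine_search_lines(hits):
    """Apply the combination steps of the example strategy to sets of IDs."""
    line4 = hits[2] | hits[3]          # 2 or 3
    line6 = line4 & hits[5]            # 4 and 5
    line8 = hits[1] | line6 | hits[7]  # 1 or 6 or 7
    return line8


final_set = combine_search_lines(line_hits)
print(sorted(final_set))
```

In this toy data set, record 203 matches the triage terms but none of the online/digital terms, so it is dropped at the AND step. This is the mechanism by which the strategy is intended to exclude general triage literature.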

Search results were stored in a reference management system (EndNote; Clarivate Analytics, Philadelphia, PA, USA) and imported into EPPI-Reviewer software for screening (version 4; Evidence for Policy and Practice Information and Co-ordinating Centre, University of London, London, UK). The search results were screened against the inclusion criteria by one reviewer, with a 10% sample screened by a second reviewer. Uncertainties were resolved by discussion among the review team.

Inclusion and exclusion criteria

Population

Members of the general population, which includes adults and children, who are looking for information online or digitally to address an urgent health problem, which includes issues arising from both acute illness and long-term chronic illness. Non-urgent problems, such as possible Asperger syndrome or memory loss/early dementia, were excluded.

Intervention

Any online or digital service designed to assess symptoms, provide health advice and direct patients to appropriate services. This reflects the role of the NHS111 telephone service. Services that provide only health advice were excluded, as were those that offer treatment (e.g. online CBT services).

Comparator

The ‘gold standard’ comparator is the current practice of telephone assessment (e.g. NHS111) or face-to-face assessment (e.g. general practice, urgent-care centre or ED). However, studies with other relevant comparators (e.g. comparative performance in tests or simulations) or with no comparator were included if they addressed the research questions.

Outcomes

The main outcomes of interest were:

- safety (e.g. any evidence of adverse events arising from following or ignoring advice from online/digital services)
- clinical effectiveness (any evidence of clinical outcomes associated with use of online/digital services)
- cost-effectiveness (including costs and resource use)
- accuracy – this refers to the ability to provide a correct diagnosis and distinguish between high and low acuity/urgency problems (and hence direct patients to appropriate services, avoiding over- or undertriage)
- impact on service use/diversion (including possible multiple contacts with health services)
- compliance with advice received
- patient/carer satisfaction
- equity and inclusion (e.g. barriers to access, characteristics of patients using the service compared with the general population).

This list is not exhaustive and other relevant outcomes from included studies were extracted.

Study design

We did not restrict inclusion by study design (and included relevant audits or service evaluations in addition to formal research studies), but included studies had to evaluate (quantitatively or qualitatively) some aspect of an online/digital service. Studies from any high-income country’s health-care system were eligible for inclusion.

Excluded

The following types of studies were excluded from the review:

- studies that merely describe services without providing any quantitative or qualitative outcome data
- conceptual papers and projections of possible future developments
- studies conducted in low- or middle-income countries’ health-care systems.

Data extraction and quality/strength of evidence assessment

We extracted and tabulated key data from the included studies, including study design, population/setting, results and key limitations. The full data extraction template is provided in Appendix 1. Data extraction was performed by one reviewer, with a 10% sample checked for accuracy and consistency by another reviewer.

To characterise the included digital and online systems as interventions, we identified studies reporting on a particular system and extracted data from all relevant studies using a modification of the Template for Intervention Description and Replication (TIDieR) checklist8 that we designated Template for Intervention Description for Systems for Triage (TIDieST). The checklist is presented in Appendix 2 and the completed checklists in Appendix 3. Characteristics of included systems are summarised in Chapter 3, Characteristics of included systems.

Quality (risk-of-bias) assessment was undertaken for peer-reviewed full publications only (i.e. not grey literature publications or conference abstracts). The rationale for this approach was that non-peer-reviewed publications tend to lack the detail required for assessment of risk of bias and/or tend not to follow standard study designs. Randomised controlled trials (RCTs) were assessed using the Cochrane Collaboration risk-of-bias tool.9 For diagnostic accuracy type studies, we used the Cochrane Collaboration version of Quality Assessment of Diagnostic Accuracy Studies (QUADAS)10 and for other study designs we used the National Heart, Lung and Blood Institute’s tool for observational cohort and cross-sectional studies.11 Details of quality assessment tools can be found in Appendix 4. Quality assessment was performed by one reviewer, with a 10% sample checked for accuracy and consistency by another reviewer.

Assessment of the overall strength (quality and relevance) of evidence for each research question is part of the narrative synthesis. Overall strength of the evidence base for key outcomes was assessed using an adaptation of the method described by Baxter et al.12 This involves classifying evidence as ‘stronger’, ‘weaker’, ‘inconsistent’ or ‘very limited’, based on study numbers and design. Specifically, ‘stronger evidence’ represented generally consistent findings in multiple studies with a comparator group design or comparative diagnostic accuracy studies, ‘weaker evidence’ represented generally consistent findings in one study with a comparator group design and several non-comparator studies or multiple non-comparator studies, ‘very limited evidence’ represented an outcome reported by a single study and, finally, ‘inconsistent evidence’ represented an outcome for which < 75% of the studies agreed on the direction of effect. All studies included in the review were included in the analysis of the overall strength of evidence.
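The strength-of-evidence categories can be expressed as a simple decision rule. The sketch below is an interpretation and not the review team's actual procedure: the function name, the order in which the rules are checked, and the reading of 'multiple' as two or more studies are all assumptions made for illustration.

```python
def classify_evidence(n_comparator, n_non_comparator, agreement):
    """Classify the strength of evidence for one outcome.

    n_comparator: studies with a comparator group design or comparative
        diagnostic accuracy design.
    n_non_comparator: studies without a comparator.
    agreement: fraction of studies agreeing on the direction of effect.

    Assumptions (not stated in the source): the single-study rule is checked
    first, then consistency, and 'multiple' means two or more studies.
    """
    total = n_comparator + n_non_comparator
    if total == 0:
        raise ValueError("at least one study is required")
    if total == 1:
        return "very limited"   # outcome reported by a single study
    if agreement < 0.75:
        return "inconsistent"   # < 75% agree on direction of effect
    if n_comparator >= 2:
        return "stronger"       # multiple comparator-design studies
    return "weaker"             # at most one comparator study, or none


print(classify_evidence(n_comparator=3, n_non_comparator=1, agreement=0.9))
print(classify_evidence(n_comparator=1, n_non_comparator=4, agreement=0.8))
```

In practice, judging whether findings are 'generally consistent' involves reviewer judgement that a numeric threshold alone does not capture.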

Evidence synthesis

We performed a narrative synthesis structured around the prespecified research questions and outcomes. This included an ‘evidence map’ summarising the quantity and strength of evidence for each outcome and identifying gaps that may need to be filled by further research. We did not perform any meta-analyses because the included studies varied widely in terms of design, methodology and outcomes.

Public and patient involvement in the study

The study aimed to be informed by patient and public involvement (PPI) at all stages of the research process. The Sheffield Health Services and Delivery Research (HSDR) evidence synthesis centre patient and public advisory group provided input during the design, analysis and reporting phases, including exploration of the study parameters, discussion regarding the meaning and interpretation of the study findings, drafting of the Plain English summary and help with disseminating the findings and maximising the impact of the research.

The advisory group comprised nine members drawn from the Yorkshire and Humber region and two members from other regions of England. Because this study was of a relatively short duration, the group provided input at two advisory group meetings. At the meetings there was discussion regarding the focus of the work, including a presentation on previous research on NHS111 services to provide a context for understanding the current work. The meetings also included the presentation and discussion of the findings of the review in order to explore key messages for patients that could inform the dissemination of the findings.

The discussion during one meeting was structured using a SWOT (strengths, weaknesses, opportunities and threats) analysis approach that revealed a number of potential concerns among patients as well as potential perceived benefits. The group members expressed some concern over the reliability and consistency of symptom checker algorithms, particularly if different systems are being used in different parts of the country. The economic benefits of the systems were also questioned in view of the high costs of programming and system development. Possible equity issues were identified because digital 111 might be less accessible to some groups (people with cognitive impairment were mentioned). The group expressed uncertainty about the impact of this type of service on the wider urgent and emergency care system (e.g. would the creation of a new access point complicate patient pathways and increase demand overall?). The possible vulnerability to external threats leading to the breakdown of the system or the loss of data was identified as a weakness. There was discussion of whether or not patients would feel able to trust the advice they were given. Some group members saw the desire of patients to be able to talk to someone about their problems for reassurance and empathy as a threat to the success of digital 111. Potential benefits included increased access to urgent-care advice at any time and appeal to younger people and to those who might feel anxious or embarrassed about discussing their problem with a health professional.

Involvement of the advisory group was also beneficial in highlighting some issues that had emerged from the systematic review and enabled the reviewers to structure the review findings while taking this into account. The group’s uncertainty about the probable impact of digital 111 was reflected in the report’s findings and recommendations for ongoing evaluation and further research. The review report also reflects the group’s relatively cautious attitude (while recognising the need to update the way services are accessed), which contrasts with the strong belief in some quarters that digital 111 will help to ensure that patients receive appropriate care more quickly while reducing ‘inappropriate’ visits to EDs and GP appointments. Advisory group members reported that they had limited experience of using symptom checkers for real-life health problems in the urgent-care setting, which echoes the limited available information and uncertainty regarding the probable effects of digital 111 services.

Study registration and outputs

The protocol was registered prospectively with PROSPERO (registration number CRD42018093564) and is also available via the National Institute for Health Research (NIHR) HSDR programme website (www.journalslibrary.nihr.ac.uk/programmes/hsdr/164717/) and the Sheffield HSDR Evidence Synthesis Centre website (https://scharr.dept.shef.ac.uk/hsdr/).

Chapter 3 Review results

Results of literature search

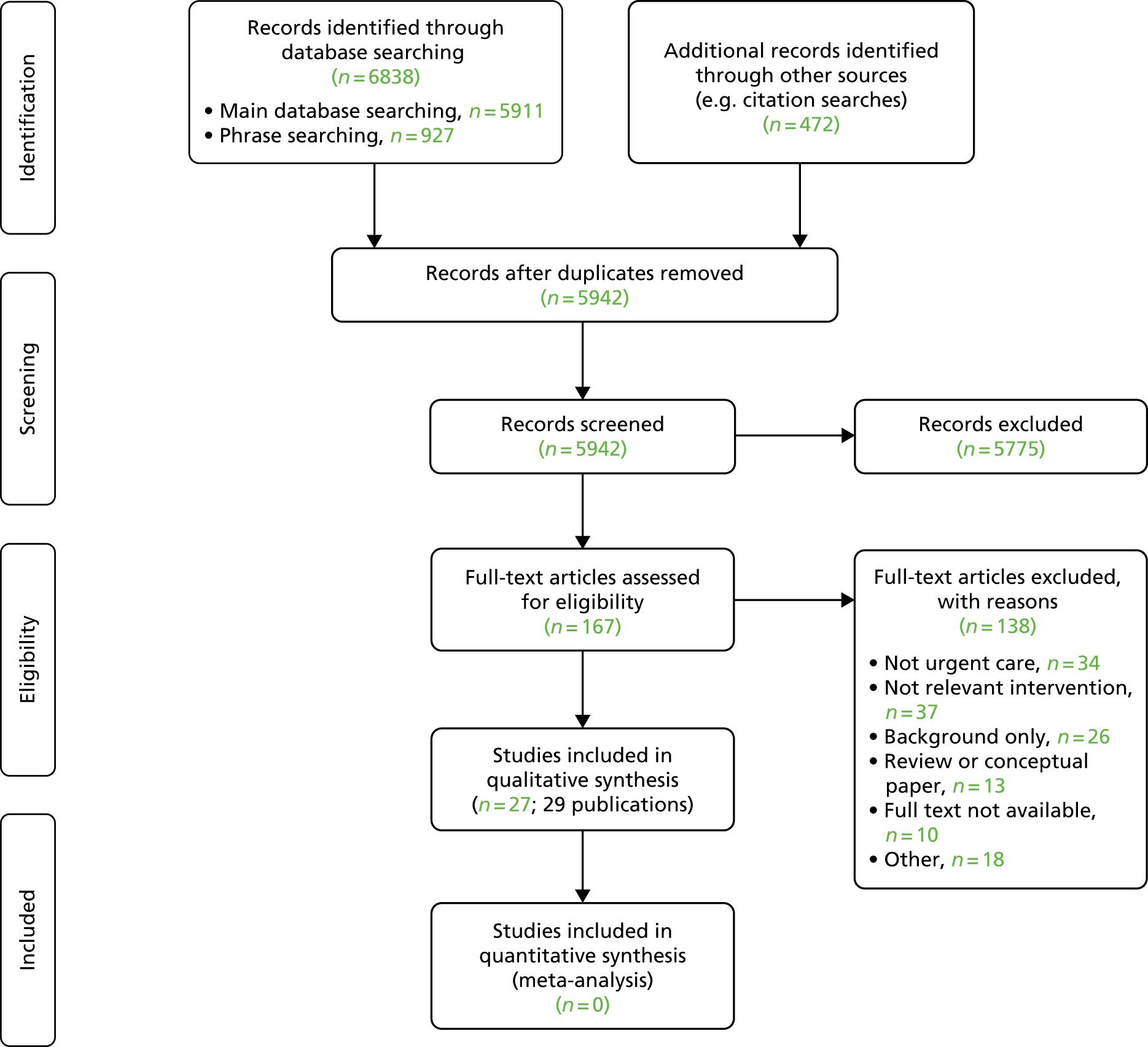

This chapter presents the studies that were included in the review. A PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-analyses) flow diagram (Figure 1) details the search process.

FIGURE 1. The PRISMA flow diagram. Some parts of this chapter have been reproduced from Chambers et al.1 © Author(s) (or their employer(s)) 2019. Re-use permitted under CC BY. Published by BMJ. This is an open access article distributed in accordance with the Creative Commons Attribution 4.0 Unported (CC BY 4.0) license, which permits others to copy, redistribute, remix, transform and build upon this work for any purpose, provided the original work is properly cited, a link to the licence is given, and indication of whether changes were made. See: https://creativecommons.org/licenses/by/4.0/. The figure includes minor additions and formatting changes to the original text.

All titles and abstracts were screened by one researcher from the review team, with a subset (about 10%) of the titles and abstracts screened by one other researcher from the review team. Inter-rater agreement was assessed using a kappa coefficient, which demonstrated moderate agreement between reviewers [κ = 0.582, 95% confidence interval (CI) 0.274 to 0.889]. Any queries were resolved by discussion. A similar process was followed for final decisions on inclusion/exclusion, based on full-text documents.
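For readers unfamiliar with the kappa coefficient, the following sketch shows how it can be computed from a 2 × 2 table of two reviewers' include/exclude decisions. It assumes the unweighted Cohen's kappa for two raters; the report does not state which variant was used. The counts are hypothetical and are not the screening data from this review.

```python
def cohens_kappa(both_include, only_a, only_b, both_exclude):
    """Unweighted Cohen's kappa for two raters making include/exclude calls.

    both_include: records both reviewers included
    only_a:       records only reviewer A included
    only_b:       records only reviewer B included
    both_exclude: records both reviewers excluded
    """
    n = both_include + only_a + only_b + both_exclude
    p_observed = (both_include + both_exclude) / n
    # Marginal probability that each reviewer includes a record.
    a_include = (both_include + only_a) / n
    b_include = (both_include + only_b) / n
    # Agreement expected by chance, given those marginals.
    p_expected = a_include * b_include + (1 - a_include) * (1 - b_include)
    # Undefined (division by zero) if chance agreement equals 1.
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical counts for illustration only.
kappa = cohens_kappa(both_include=20, only_a=5, only_b=10, both_exclude=15)
print(round(kappa, 3))
```

Kappa corrects the raw proportion of agreement for the agreement that would be expected by chance, which is why it is lower than the simple percentage of matching decisions.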

Characteristics of included studies

We included 29 publications that represented 27 studies (one study13 had two associated comments14,15). Nine studies were performed in the UK. Seventeen studies (Table 1) evaluated symptom checkers as a self-contained intervention, of which eight covered a limited range of symptoms (e.g. respiratory16,17,25 or gastrointestinal28,30 symptoms that we considered to be ‘urgent’). The remaining studies in this group evaluated symptom checkers that covered a wider range of common urgent-care symptoms. Studies evaluated either a single system21,22,24,27 or multiple systems.6,13 We found only one study of a symptom checker specifically intended for the assessment of children’s symptoms, a development of the Strategy for Off-Site Rapid Triage (SORT) system for influenza-like illnesses.23 Two reports with some overlap of content evaluated the ‘babylon check’ application (app).26,27

| Reference | Study design | System type | Comparator | Population/sample |
|---|---|---|---|---|
| Peer-reviewed papers | | | | |
| Kellermann et al.16 | Simulation: the developed algorithm was tested against past patient records | Online: SORT was available on two interactive websites | Health professional performance on real-world data. The algorithm was tested against clinicians’ decisions based on past patient records | Population/condition: specific condition(s); influenza symptoms. Sample size (participants/data set): the algorithm was assessed against patients with influenza-like illnesses visiting EDs in the spring before the 2009 H1N1 influenza outbreak |
| Little et al.17 | Experimental RCT | Online: Internet Doctor website | Other: usual GP care without access to the Internet Doctor website | Population/condition: specific condition(s); respiratory infections and associated symptoms. Sample size (participants/data set): 3044 adults registered with a general practice; of these, 852 in the intervention group and 920 in the control group reported one or more RTIs over 20 weeks |
| Luger et al.18 | Simulation: described as ‘human–computer interaction study’ using think-aloud protocols | Online: Google (Google Inc., Mountain View, CA, USA) and WebMD (Internet Brands, New York, NY, USA) | Other: comparing two internet-based health tools | Population/condition: general population; older adults (≥ 50 years). Sample size (participants/data set): 79 participants |
| Marco-Ruiz et al.19 | Qualitative: qualitative element. Other: | Online: Erdusyk | None | Population/condition: general population; internet tool users. Sample size (participants/data set): 53 participants completed the evaluation, 15 participants completed the think-aloud phase |
| Nagykaldi et al.20 | Uncontrolled: observational | Online: a customised practice website, including a bilingual influenza self-triage module, a downloadable influenza toolkit and an electronic messaging capability. A bilingual seasonal influenza telephone hotline was available as an alternative | None | Population/condition: specific condition(s); influenza. Sample size (participants/data set): the website or hotline was available to patients at nine primary care practices through the peak of 2007–8 influenza season. After technology testing, a random convenience sample of patients was selected from participants who had used the website or telephone hotline. Qualitative feedback was obtained from 37 patients and six clinicians |
| Nijland et al.21 | Uncontrolled: observational and a retrospective analysis of 15 months’ data | Online: a web-based triage system (www.dokterdokter.nl) | None | Population/condition: general population. Sample size (participants/data set): 13,133 participants started triage, 6538 participants entered a complaint and 3812 participants received medical advice. A total of 192 patients completed a follow-up survey on compliance, of whom 35 reported on actual compliance |
| Poote et al.22 | Uncontrolled: observational | Online: a prototype self-assessment triage system | Health professional performance on real-world data. GPs’ triage rating was compared with the rating from the self-assessment system | Population/condition: general population; students attending a university student health centre with new acute symptoms. Sample size (participants/data set): 207 consultations; full data available for 154 students attending a university student health centre with new acute symptoms; seven GPs participated in the study |
| Anhang Price et al.23 | Uncontrolled: observational | Online: a web-based decision support tool, SORT for Kids, designed to help parents and adult caregivers decide whether or not a child with possible influenza symptoms needs to visit the ED for immediate care | Health professional performance on real-world data. The sensitivity of the algorithm was compared with a gold standard (i.e. evidence from the child’s medical records that they received one or more of five ED-specific interventions) | Population/condition: specific condition(s); influenza in children. Sample size (participants/data set): 294 parents or adult caregivers presenting to one of two EDs in the National Capital Region, USA |
| Semigran et al.6 | Experimental: described as an audit study | Multiple: 23 symptom checkers were evaluated. Symptom checkers available as apps [via the App Store (Apple Inc., Cupertino, CA, USA) and Google Play (Google Inc., Mountain View, CA, USA)] were identified through searching for ‘symptom checker’ and ‘medical diagnosis’, and the first 240 results were screened. Symptom checkers available online were identified through searching Google and Google Scholar (Google Inc., Mountain View, CA, USA) for ‘symptom checker’ and ‘medical diagnosis’ and the first 300 results were screened | Other: vignettes had a diagnosis and triage attached to them and these were compared with the symptom checker advice | Population/condition: general population; if only a single class of illness was examined by a symptom checker, the symptom checker was excluded from the study. Sample size (participants/data set): 23 symptom checkers and 45 standardised patient clinical vignettes. These varied by urgency; of the 45 clinical vignettes, 15 required emergency care, 15 required non-emergency care and 15 required self-care. They also varied by how common/uncommon the condition was |
| Semigran et al.13 | Experimental: a comparison of physician and symptom checker diagnoses based on clinical vignettes | Multiple: ‘Human Dx’ is a web- and app-based platform | Health professional performance on test/simulation. Clinical vignettes: a comparison of 23 symptom checkers with a physician’s diagnosis for 45 vignettes | Population/condition: general population; of the 45 condition vignettes there were 15 low-, 15 medium- and 15 high-acuity vignettes. There were 26 common and 19 uncommon condition vignettes. Sample size (participants/data set): 45 vignettes were distributed to 234 physicians (211 physicians were trained in internal medicine and 121 physicians were fellows/residents) |
| Sole et al.24 | Uncontrolled: observational, a descriptive comparative study | Online: a web-based triage system (24/7 WebMed) | Health professional performance on real-world data. Data were evaluated from students who had used the web-based triage and then requested an appointment via e-mail (so triage data were available for comparison) | Population/condition: general population. Sample size (participants/data set): students who used the web-based triage system between February and May 2004 (4 months) |
| Yardley et al.25 | Experimental: an exploratory randomised trial | Online: Internet Doctor website | Other: self-care information provided as a static web page with no symptom checker or triage advice | Population/condition: specific condition(s); minor respiratory symptoms (e.g. cough, sore throat, fever, runny nose). Sample size (participants/data set): 714 participants (368 participants allocated to Internet Doctor and 346 participants to the control group) |
| Reports | | | | |
| Babylon Health26 | Uncontrolled: observational. No control group but some comparison with NHS111 telephone data | Digital: smartphone app | Health professional performance on real-world data. Other: NHS111 data for 12 months from February 2017 | Population/condition: general population; participants in the London pilot evaluation of digital 111 services. Sample size (participants/data set): 12,299 interactions with the system, of which 5250 were classified as genuine (user agreed to share their data with NHS); 74 cases triaged to urgent and emergency care settings were reviewed in depth |
| Middleton et al.27 | Simulation | Digital: ‘babylon check’ automatic triage system | Health professional performance on test/simulation. Twelve clinicians and 17 nurses | Population/condition: general population. Sample size (participants/data set): 102 vignettes with professional actors playing patients |
| Conference abstracts | | | | |
| Berry et al.28 | Simulation: an evaluation of symptom checker performance on clinical vignettes | Online: 17 symptom checkers | None | Population/condition: specific condition(s); gastrointestinal symptoms. Sample size (participants/data set): 10 clinical vignettes multiplied by 17 symptom checkers = 170 diagnoses |
| Berry et al.29 | Controlled: observational | Online: three online symptom checkers (WebMD, iTriage and FreeMD) | Health professional performance on real-world data | Population/condition: specific condition(s); patients with a cough presenting to an internal medicine clinic. Sample size (participants/data set): 116 adult patients |
| Berry et al.30 | Controlled: observational | Online: three online symptom checkers (WebMD, iTriage and FreeMD) | Health professional performance on real-world data | Population/condition: specific condition(s); abdominal pain. Sample size (participants/data set): 49 adult patients presenting with abdominal pain |

Five studies7,31–34 evaluated symptom checkers as part of a broader self-assessment and consultation system (often referred to as electronic consultation or e-consultation). Study characteristics are summarised in Table 2. In this type of system, the role of symptom checkers is to help patients decide whether their symptoms require a consultation with a doctor or other health professional or can be dealt with by self-care. If a consultation is required, details of the symptoms and a request for an appointment or a call-back can be submitted electronically. This type of study is important because it considers the service in the broader context of the urgent and emergency care system. A limitation is that some studies focused mainly on the ‘downstream’ elements of the pathway (e.g. consultation with GPs) and provided limited data on the symptom checker element of the system.

| Reference | Study design | System type | Comparator | Population/sample |

|---|---|---|---|---|

| Peer-reviewed papers | ||||

| Carter et al.31 | Uncontrolled: observational, a mixed-methods evaluation | Online: webGP (subsequently known as eConsult) | Other: investigate patient experience by surveying patients who had used webGP and by comparing their experience with controls (patients who had received a face-to-face consultation during the same time period) matched for age and gender |

Population/condition: general population, general practices in NHS Northern, Eastern and Western Devon CCG’s area Sample size (participants/data set): six practices provided consultation data, 20 GPs completed case reports (regarding 61 e-consults), 81 patients completed questionnaires, five GPs and five administrators were interviewed |

| Cowie et al.32 | Uncontrolled: observational, a 6-month evaluation in 11 general practices in Scotland | Online: eConsult, accessed via general practice surgery websites. Service provides self-care assessment and advice, including symptom checkers, triage, signposting to alternative services, access to NHS24 (telephone service) and e-consults allowing the submission of details by e-mail | None |

Population/condition: general population; patients registered with participating general practices Sample size (participants/data set): sample size for quantitative analysis not reported; 48 practice staff took part in focus groups or interviews |

| Nijland et al.33 | Other: online survey | Online: responses of interest related to ‘indirect e-consultation’ (consulting a GP via secure e-mail with the intervention of a web-based triage system) | None |

Population/condition: general population; patients with internet access but no experience of e-consultation Sample size (participants/data set): 1066 patients |

| Reports | ||||

| Madan34 | Uncontrolled: observational, a report of a 6-month pilot study | Online: webGP (subsequently known as eConsult) | None |

Population/condition: general population Sample size (participants/data set): service available to 133,000 patients of 20 London general practices via practice websites |

| NHS England7 | Uncontrolled: observational, an analysis of data from four pilot studies together with data from other sources | Multiple: pilots featured NHS Pathways (web-based, West Yorkshire), Sense.ly (‘voice-activated avatar’, West Midlands), Expert 24 (web-based, Suffolk) and babylon (app, North Central London) | None. Authors stated that it was not appropriate to compare pilot sites because of differences in starting date, ‘footprints’ covered, method of uptake and underlying population differences |

Population/condition: general population Sample size (participants/data set): 10,902 downloads or registrations across all pilot sites between January and June 2017 |

A final group of five studies (Table 3) examined patient and/or public attitudes to online self-diagnosis in the context of urgent care.35–39

| Reference | Study design | System type | Comparator | Population/sample |

|---|---|---|---|---|

| Backman et al.35 | Qualitative interview, questionnaire and registry of health-care contacts following the index visit | Multiple: participants were asked whether they had used telephone consultation, the internet or any other source to obtain health advice | None |

Population/condition: general population; non-urgent ED patients Sample size (participants/data set): 543 patients (396 in primary care and 147 in ED) eligible, of whom 428 (79%) were interviewed and received a questionnaire and a 30-day follow-up health-care contact |

| Joury et al.36 | Other: cross-sectional search for first 30 websites using ‘chest pain’ keyword. Quality assessment and content analysis | Online: internet websites that provide patient information on chest pain | None |

Population/condition: specific condition(s); chest pain Sample size (participants/data set): 27 websites included |

| Lanseng and Andreassen37 | Other: cross-sectional (survey) scenario and questionnaire, adapted version of the Technology Readiness Measurement instrument | Multiple: technology (i.e. the study looked at attitudes to digital technology in general rather than any specific system) | None |

Population/condition: general population aged 18–65 years Sample size (participants/data set): TRI – 160 participants randomly selected from one county, of which 132 participants responded manually, 28 participants responded online and six participants responded verbally. Women constituted 46.3% of the sample and nearly half the sample was aged 31–50 years. TAM – 470 inhabitants of an affluent Oslo suburb were sampled. Women constituted 59.1% of the sample |

| Luger and Suls38 | Simulation: vignettes simulating either appendicitis symptoms or sinusitis symptoms | Multiple: WebMD symptom checker, Google search, no electronic aid | Other: compared the two online health information sources to no electronic aid |

Population/condition: specific condition(s); appendicitis or sinusitis Sample size (participants/data set): undergraduate population of students (n = 174, mean age 19.22 years) |

| North et al.39 | Controlled observational comparison of telephone triage and internet triage (calls and clicks, matching of items) | Multiple: online vs. telephone triage | Other: Ask Mayo Clinic – all assessments in 2009 (70,370 symptom assessments). Categorisation: paediatric (0–17 years) or adult |

Population/condition: general population; assessment of 28 online symptoms vs. 20 matched telephone symptoms Sample size (participants/data set): data set from Ask Mayo Clinic. All assessments in 2009 (2,059,299 symptom checker clicks). Categorisation – paediatric (0–17 years) or adult |

The included studies used a wide range of designs, some of which were challenging to classify. Studies that assessed the systems’ performance in terms of clinical and service use outcomes, including patient satisfaction, were generally observational or qualitative, although one web-based system was evaluated in two RCTs.17,25 Diagnostic accuracy was assessed by measuring performance in simulations (e.g. using vignettes to describe symptoms for a known condition) or by comparing the system’s performance with that of doctors in diagnosing the cause of symptoms and choosing an appropriate level of triage. This was carried out using either simulated data or real-world data (e.g. by asking patients to complete a symptom checker assessment before seeing a doctor and comparing the two assessments). Risk of bias in studies and the overall strength of evidence for different outcomes are discussed further below (see Risk-of-bias assessment and Overall strength of evidence assessment, respectively).

The publication status of the included studies also varied. In addition to peer-reviewed journal articles and reports, we included four studies published as conference abstracts18,28–30 and three reports characterised as grey literature. Two of these reports27,34 were written and published by the developers of specific systems and may therefore be subject to potential conflicts of interest. The third grey literature report was a draft report of NHS England’s ongoing pilot studies of NHS111 online in four regions of England.7 This report, dated December 2017, was not officially published by NHS England but was easily available online.

Characteristics of included systems

Using studies included in the review, we were able to extract data on eight systems using the TIDieST checklist. When appropriate, data from multiple studies were combined in one checklist. We noted that some of these systems were no longer in use. We were also unable to obtain sufficient data for some systems that are currently being used and/or evaluated. A summary of extracted data is presented below (Tables 4 and 5) and full data extractions can be found in Appendix 3.

| Brief name | Objective of the intervention | Interface | Procedures involved in the intervention | How the intervention is accessed | Tailoring | Modifications/versions | Simulation/laboratory testing | Real-world testing | Reference(s) |

|---|---|---|---|---|---|---|---|---|---|

| Babylon check | To provide an automated service allowing patients to check symptoms and receive fast and clear advice on what action to take | ‘App with a chat bot-style interface’7 | The user selects a body part and answers a series of multiple-choice questions. This process leads to a list of possible outcomes, of which the highest priority one is presented to the user27 | Smartphone app | Not reported | Not reported | Tested by the manufacturer in two stages27 | Included in the NHS England pilot evaluation26,27 | Middleton et al.,27 NHS England7 and Babylon Health26 |

| Un-named prototype | To enable patients to undertake a self-assessment triage and receive advice on an appropriate course of action based on their symptoms | A simple user interface and a menu from which patients could select their main presenting symptom from a list of several hundred presenting complaints | System-generated age- and gender-specific questions with associated potential answers. Each answer carried a weighting that contributed to the final triage outcome. Triage advice provided by the system consisted of one of six courses of action | In the study evaluating the system, access was via a desktop computer22 | Not reported | Not reported | Not reported | The system was tested in a university student health centre by Poote et al.22 Students used the system before a face-to-face consultation with a GP. The system’s rating of the urgency of the student’s condition was compared with that of the GP (who had access to the output from the automated system) | Poote et al.22 |

| WebGP (subsequently renamed eConsult) | To provide an electronic GP consultation and self-help service for primary care patients | Screenshot of the original webGP available in the 2014 pilot report34 | WebGP consists of five services: symptom checker, self-help guidance, signposting to other services, information about the 111 telephone service and an e-consultation that allows the patient to complete an online form that is e-mailed to the practice. Details of how the system is integrated into practice procedures are reported to vary between practices | Via practice websites | Not reported | Not reported | Not reported | A 6-month pilot report was produced by the Hurley Group, which was involved in developing the system.34 Subsequent evaluations have been reported in the UK, including in six practices in Devon31 and 11 practices across Scotland32 | Madan,34 Carter et al.31 and Cowie et al.32 |

| 24/7 WebMed | To enhance services provided by a SHS by helping students decide whether or not to seek care | The system is no longer available | The system collected basic demographic data and then users answered a series of questions based on algorithms. The system could analyse > 600 chief complaints and classified assessments into six different levels of urgency. After completing triage, students could request a SHS appointment by e-mail | Via a link from the SHS website | Not reported | Not reported | Not reported | Testing of the system by the SHS at the University of Central Florida was reported by Sole et al.24 | Sole et al.24 |

| Brief name | Objective of the intervention | Interface | Procedures involved in the intervention | How the intervention is accessed | Tailoring | Modifications/versions | Simulation/laboratory testing | Real-world testing | Reference(s) |

|---|---|---|---|---|---|---|---|---|---|

| Influenza Self-Triage Module | To enhance patient self-management of seasonal influenza and to facilitate patient–provider communication | No longer available | The self-triage module was developed by a practice-based research network multidisciplinary stakeholder group and was provided to primary care practices as part of an influenza management website that was tailored to the needs of each participating practice | Via the websites of participating practices | English and Spanish versions available | Additional questions added to improve patient safety | Not reported | The system was tested in 12 primary care practices during the peak of the 2007–8 influenza season20 | Nagykaldi et al.20 |

| SORT | To create a simple but accurate tool that could help minimally trained health-care workers screen large numbers of patients with influenza-like illness | See online figures E1–E3. SORT versions 1.0–3.0. No screenshots. Two interactive websites (www.flu.gov and www.H1N1ResponseCenter.com) | Three-step process resulting in the assignment of a level of risk and specific recommended action | Websites including www.flu.gov | Potential for adaptation to other acute illnesses (e.g. severe acute respiratory syndrome) | Initially intended for use by minimally trained health-care workers, SORT was modified to allow use by call centres and for self-assessment | Retrospective assessment by the Kaiser Permanente Colorado Institute for Health Research, using their health system’s computerised records | Analysis of data from websites that made SORT available | Kellermann et al.16 |

| SORT for Kids | To help parents and adult caregivers determine if a child with influenza-like illness requires immediate care in an ED | For a screenshot see figure 2 in Anhang Price et al.23 | Four age-specific pathways resulting in assignment to one of three risk levels and associated recommended actions | Accessed via website (URL not provided) | Tailored for different age groups and also takes account of child’s general state of health | Not reported | Not reported | SORT for Kids was tested by Anhang Price et al.23 at two paediatric EDs in the National Capital Region of the USA | Anhang Price et al.23 |

| Internet Doctor | To provide tailored advice on self-management of minor respiratory symptoms | A screenshot of the interface is provided in Yardley et al.25 Further details are provided in a multimedia appendix to the paper | The diagnostic pages asked a series of questions about the participant’s symptoms. These were completed for one symptom at a time and the algorithm provided advice on whether or not the participant should contact health services (NHS Direct) for that symptom | Via web pages (www.internetdr.org.uk) | Not reported | Not reported | Not reported | A preliminary RCT primarily involving university students,25 followed by a larger RCT in a UK primary care population17 | Yardley et al.25 and Little et al.17 |

Four of the included systems were designed to cover a full range of symptoms (see Table 4) and four covered a more limited range (three for influenza-like illness and one for minor respiratory symptoms; see Table 5). Most systems were accessed through web pages, often linked to health-care providers or government organisations. The ‘babylon check’ system was the main exception, being designed for access using a smartphone app. Published research studies provided relatively little detail about the systems, which in some cases possibly reflected a need for commercial confidentiality. Details of published studies are included in Tables 4 and 5 for reference; the findings of these studies are summarised in the following section for each key outcome.

Results by outcome

Safety

Patient safety is an important outcome in evaluating any urgent-care intervention. Misdiagnosis or undertriage of serious conditions could lead to death or serious adverse events. However, none of the six included studies that reported on safety outcomes identified any problems or differences in outcomes between symptom checkers and health professionals (Table 6). Most of the studies compared system performance with that of health professionals using real or simulated data. The only study with no comparison group was the 6-month pilot study of webGP,34 which reported ‘no major incidents’.

| Reference | Type of system | Population/condition | Comparator | Main results |

|---|---|---|---|---|

| Kellermann et al.16 | Online: SORT was available on two interactive websites | Specific condition(s): influenza symptoms | Health professional performance on real-world data. The algorithm was tested against clinicians’ decision on past patient records | The algorithm was modified to be more conservative following testing against patient records. The effect of SORT on care seeking and patient safety could not be determined because only web hits were counted. There were no reports of adverse events following the use of SORT, but it is possible that patients could have delayed care seeking, which could have resulted in harm |

| Little et al.17 | Online: Internet Doctor website | Specific condition(s): respiratory infections and associated symptoms | Other: usual GP care without access to the Internet Doctor website | There was no evidence of increased hospitalisations in the intervention group (risk ratio 0.13, 95% CI 0.02 to 1.01; p = 0.051) |

| Madan34 | Online: webGP (subsequently known as eConsult) | General population | None | The report stated that ‘we have had no significant events’ and the only patient complaint related to a delay in processing an e-consultation |

| Middleton et al.27 | Digital: ‘babylon check’ automatic triage system | General population | Health professional performance on test/simulation: 12 clinicians and 17 nurses | All outcomes obtained through ‘babylon check’ were classified as clinically safe, compared with 98% of outcomes obtained through doctors and 97% of outcomes obtained through nurses |

| Poote et al.22 | Online: prototype self-assessment triage system | General population: students attending a university student health centre with new acute symptoms | Health professional performance on real-world data. GPs’ triage rating was compared with the rating from the self-assessment system | The self-assessment system was generally more risk averse than the GPs’ triage rating, meaning that the safety of patients did not appear to be compromised |

| Anhang Price et al.23 | Online: a web-based decision support tool, SORT for Kids, designed to help parents and adult caregivers decide whether or not a child with possible influenza symptoms needs to visit the ED for immediate care | Specific condition(s): influenza in children | Health professional performance on real-world data. The sensitivity of the algorithm was compared with a gold standard (evidence from the child’s medical records that they received one or more of the five ED-specific interventions) | 10.2% of patients were classified as low risk, 2.4% of patients were classified as intermediate risk and 87.4% of patients were classified as high risk by the SORT for Kids algorithm, based on parents’ and caregivers’ responses. SORT for Kids classified as high risk 14 of the 15 patients who met explicit criteria for clinical necessity at their ED visit |

Limitations of the studies included not being based on real patient data,27 covering only a limited range of conditions,16,23 and sampling a young and healthy population (students) that was not representative of the general population of users of the urgent-care system.22 Studies of e-consultation systems did not generally collect data on those respondents who decided not to seek an appointment, which limited their ability to assess any impact on safety for this group. Overall, the evidence should be interpreted cautiously as indicating no evidence of a detrimental impact on safety rather than evidence of no detrimental effect.
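As a worked illustration of the SORT for Kids result in Table 6, the sketch below computes sensitivity from the reported counts (14 of the 15 children who met the criteria for clinical necessity were classified as high risk). The function name `sensitivity` is introduced here for illustration only and is not taken from the original study.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Proportion of truly positive cases that the tool flagged as positive."""
    total = true_positives + false_negatives
    if total == 0:
        raise ValueError("at least one truly positive case is required")
    return true_positives / total


# Counts reported for SORT for Kids: 15 children met the criteria for
# clinical necessity, and 14 of them were classified as high risk.
sort_for_kids = sensitivity(true_positives=14, false_negatives=1)
print(f"Sensitivity: {sort_for_kids:.1%}")
```

This agrees with the sensitivity of 93.3% reported for the tool in the diagnostic accuracy results. Because the figure rests on only 15 positive cases, it is sensitive to the reclassification of a single patient.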

Clinical effectiveness

Only two studies reported on clinical effectiveness outcomes (Table 7), making it difficult to draw any firm conclusions. In the study by Little et al.,17 those who used the Internet Doctor website experienced longer illness duration and more days of illness that was rated as moderately bad or worse than the usual-care group, although only the difference in days of illness rated moderately bad or worse was statistically significant. The online intervention in this study was designed to offer self-management advice for respiratory infections only. The pilot study of the webGP system34 reported that several patients received advice to seek treatment for serious symptoms that might otherwise have been ignored. However, no details or quantitative data were provided.

| Reference | Type of system | Population/condition | Comparator | Main results |

|---|---|---|---|---|

| Little et al.17 | Online: Internet Doctor website | Specific condition(s): respiratory infections and associated symptoms | Other: usual GP care without access to the Internet Doctor website | Clinical effectiveness: illness duration [11.3 days of illness in the intervention group vs. 10.9 days of illness in the control group; multivariate estimate of 0.48 days longer (95% CI –0.16 to 1.12 days; p = 0.141)] and days of illness rated moderately bad or worse (0.53 days, 95% CI 0.12 to 0.94 days; p = 0.012) were slightly longer in the intervention group. The estimate of slower symptom resolution in the intervention group was attenuated when controlling for whether or not individuals had used web pages that advocated the use of ibuprofen |

| Madan34 | Online: webGP (subsequently known as eConsult) | General population | None | Clinical effectiveness: report stated that ‘a number of patients’ received automated advice to seek urgent treatment for serious symptoms that might otherwise have been ignored |
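The relationship between the confidence intervals and p-values reported for Little et al.17 in Table 7 can be checked with a short sketch. It assumes a normal approximation with a symmetric 95% confidence interval, which may not match the exact model used by the trial authors. The function name `p_from_ci` is hypothetical.

```python
import math


def p_from_ci(estimate: float, lower: float, upper: float, z_crit: float = 1.96) -> float:
    """Approximate two-sided p-value recovered from a symmetric 95% CI.

    Assumes the estimate is normally distributed (an assumption made for
    this sketch, not a description of the trial's analysis).
    """
    standard_error = (upper - lower) / (2 * z_crit)
    z = abs(estimate) / standard_error
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(z / math.sqrt(2))


# Values as reported in Table 7 (days, intervention minus control).
p_duration = p_from_ci(0.48, -0.16, 1.12)   # illness duration
p_bad_days = p_from_ci(0.53, 0.12, 0.94)    # days rated moderately bad or worse

print(f"illness duration: p ~ {p_duration:.3f}")
print(f"moderately bad or worse days: p ~ {p_bad_days:.3f}")
```

Under these assumptions the first interval, which includes zero, gives a p-value close to the reported 0.141, and the second interval, which excludes zero, gives a p-value below 0.05, in line with the reported 0.012.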

Costs and cost-effectiveness

Three included studies provided limited data on possible cost savings (Table 8). Based on 6 months of pilot data, Madan34 estimated savings of £11,000 annually for an average general practice (6500 patients) compared with current practice. The report also suggested that there might be a saving to commissioners equivalent to £414,000 annually for a Clinical Commissioning Group (CCG) covering 250,000 patients. These savings were specifically related to the self-reported diversion of patients from GP appointments to self-care and from urgent care to e-consultation and, as such, were associated with the symptom checker part of the system. Using similar methodology, the manufacturers of the ‘babylon check’ app claimed average savings of > £10 per triage compared with NHS111 by telephone, based on a higher proportion of patients being recommended to self-care.26 Neither study represents a formal cost-effectiveness analysis. The fact that these studies were produced by system manufacturers should be taken into account when interpreting their findings.

| Reference | Type of system | Population/condition | Comparator | Main results |

|---|---|---|---|---|

| Babylon Health26 | Digital: smartphone app | General population: participants in the London pilot evaluation of digital 111 services | Health professional performance on real-world data. Other: NHS111 data for 12 months from February 2017 | Costs/cost-effectiveness: cost savings were calculated by multiplying ‘where patients said they would have gone vs. where babylon triaged them to’ by savings for each combination (e.g. 3% triaged to a general practice who would have gone to a hospital, making a saving of £107 with a contribution to total savings per triage of £3.22). Based on data from 1373 patients, this report claims an average saving of £10.79 per triage, of which £5.13 comes from referring patients to a GP or self-management instead of sending them to a hospital |

| Cowie et al.32 | Online: eConsult, accessed via general practice surgery websites. Service provides self-care assessment and advice, including symptom checkers, triage and signposting to alternative services, access to NHS24 (telephone service) and e-consultations allowing submission of details by e-mail | General population: patients registered with participating general practices | None | Costs/cost-effectiveness: potential cost savings/e-consults depended on the percentage of general practice appointments saved/e-consultations submitted, from £1.30 per e-consultation at 74% to –£1 per e-consultation at 60% |

| Madan34 | Online: webGP (subsequently known as eConsult) | General population | None | Costs/cost-effectiveness: report stated that cost savings during the pilot were equivalent to £420,000 annually, of which approximately half would go to practices (subject to changes in workforce) and the other half to commissioners because of fewer patients attending urgent care. This was equivalent to an annual saving of £414,000 for an average CCG |
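The method described in the Babylon Health26 report (multiplying the share of triages for each combination of intended and recommended destination by the saving for that combination, then summing) can be sketched as follows. Only the single combination quoted in Table 8 is used; the remaining combinations were not reported here and are not filled in. The function name `saving_per_triage` is hypothetical.

```python
def saving_per_triage(pathways: list[tuple[float, float]]) -> float:
    """Sum of (share of all triages) x (saving per diverted patient, in pounds)."""
    return sum(share * saving for share, saving in pathways)


# The one combination quoted in Table 8: 3% of triages were directed to a
# general practice instead of a hospital, with a saving of 107 pounds each.
quoted_pathways = [(0.03, 107.0)]

contribution = saving_per_triage(quoted_pathways)
print(f"Contribution to saving per triage: £{contribution:.2f}")
```

With the rounded share of 3% this gives £3.21, slightly below the £3.22 quoted in the report, which suggests that the report used an unrounded percentage. The claimed total of £10.79 per triage would be the same sum taken over all combinations.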

The other study reporting on costs32 concentrated on potential savings to practices from using e-consultation and found that savings depended on the percentage of face-to-face appointments avoided by use of e-consultation. Although important for the evaluation of e-consultation systems, this study has less relevance to the role of symptom checkers because patients completing an e-consultation have already decided that they need further contact with the health-care system.

Further economic evaluations should fully compare clearly defined alternatives and consider costs and benefits across the whole health-care system, particularly whether or not savings represent genuine cost reductions or merely transfers of costs from one part of the system to another.

Diagnostic accuracy

Eight studies reported at least some data on the diagnostic accuracy of symptom checkers (Table 9). One study18 was excluded from the table because although it contained some data on diagnostic accuracy of Google and WebMD symptom checkers, it was mainly concerned with patients’ ability to self-diagnose using the different systems. Most of the other studies attempted to compare the systems’ performance with that of health professionals using real patient data or simulations. Four of these studies23,28–30 evaluated systems designed to diagnose and triage specific symptoms or conditions and only three6,13,24 evaluated one or several ‘general purpose’ symptom checkers.

| Reference | Type of system | Population/condition | Comparator | Main results |

|---|---|---|---|---|

| Berry et al.28 | Online: 17 symptom checkers | Specific condition(s): gastrointestinal symptoms | None | Accuracy of diagnosis: 57 out of 170 (33%) correct diagnoses were listed on the symptom checkers; 12 correct diagnoses (7%), 24 correct diagnoses (14%) and 41 correct diagnoses (24%) were listed in the top one, top three and top 10, respectively. The correct diagnosis was more likely to be listed for clinical vignettes with additional information (e.g. laboratory test results, vitals and/or images) |

| Berry et al.29 | Online: three online symptom checkers (WebMD, iTriage and FreeMD) | Specific condition(s): patients with a cough presenting to an internal medicine clinic | Health professional performance on real-world data | Accuracy of diagnosis: 26 out of 116 patients reported GERD symptoms. A doctor diagnosed five of these patients with GERD. The diagnostic accuracy of the symptom checkers was poor. Providing doctors with symptom checker data alone did not enhance their diagnostic performance. Doctors given the symptom checker data plus notes of the visit did show improved diagnostic performance but were less accurate than the initial in-person diagnosis |

| Berry et al.30 | Online: three online symptom checkers (WebMD, iTriage and FreeMD) | Specific condition(s): abdominal pain | Health professional performance on real-world data | Accuracy of diagnosis: physician-determined diagnosis was not listed by any of the symptom checkers in the top one for 40 out of 49 (82%) patients or in the top three for 27 out of 49 (55%) patients. Seven (14%) patients and 18 (37%) patients had their diagnosis listed in the top one and top three, respectively, by one symptom checker |

| Anhang Price et al.23 | Online: a web-based decision support tool, SORT for Kids, designed to help parents and adult caregivers decide whether or not a child with possible influenza symptoms needs to visit the ED for immediate care | Specific condition(s): influenza in children | Health professional performance on real-world data. The sensitivity of the algorithm was compared with a gold standard (evidence from the child’s medical records that they received one or more of the five ED-specific interventions) | Accuracy of diagnosis: 10.2% of patients were classified as low risk, 2.4% of patients were classified as intermediate risk and 87.4% of patients were classified as high risk by the SORT for Kids algorithm, based on parents’ and caregivers’ responses. SORT for Kids classified as high risk 14 of the 15 patients who met the explicit criteria for clinical necessity at their ED visit. The algorithm had an overall sensitivity of 93.3% and a low overall specificity of 12.9% |

| Semigran et al.6 | Multiple: symptom checkers available as apps (via the App Store and Google Play) were identified through searching for ‘symptom checker’ and ‘medical diagnosis’, and the first 240 results were screened. Symptom checkers available online were identified through searching Google and Google Scholar for ‘symptom checker’ and ‘medical diagnosis’, and the first 300 results were screened. They also asked the developers of two symptom checkers for recommendations of other systems. They identified 143 checkers, 102 of which were excluded because they used the same content as the other checkers, 25 were excluded because they looked at one condition only, 14 were excluded because they did not give a diagnosis or triage advice (only medical advice) and two were not working. Therefore, 23 symptom checkers were evaluated | General population: when a single class of illness was examined by the symptom checker, the symptom checker was excluded from the study | Other: vignettes had a diagnosis and triage attached to them and these were compared with the symptom checker advice | Accuracy of diagnosis: diagnostic performance was assessed across 770 standardised patient evaluations. Overall, the correct diagnosis was listed first in 34% of cases (95% CI 31% to 37%). The proportion of correct diagnoses varied significantly (p < 0.001) by urgency of condition: 24% for urgent conditions (95% CI 19% to 30%), 38% for non-urgent conditions (95% CI 32% to 44%) and 40% for self-care conditions (95% CI 34% to 47%). The proportion of correct diagnoses also varied significantly between common (38%, 95% CI 34% to 43%) and uncommon (28%, 95% CI 23% to 33%) conditions (p = 0.004). Performance varied across symptom checkers: the proportion of cases in which the correct diagnosis was listed first ranged from 5% to 50%. The correct diagnosis was one of the first three listed in 51% of cases (95% CI 47% to 54%) and one of the first 20 in 58% of cases (95% CI 55% to 62%). Symptom checkers that included demographic information did not perform any better than those that did not include this information |

| Semigran et al.13 | Multiple: ‘Human Dx’ is a web- and app-based platform | General population: of the 45 condition vignettes there were 15 of low acuity, 15 of medium acuity and 15 of high acuity. There were 26 common and 19 uncommon condition vignettes | Health professional performance on test/simulation. Clinical vignettes, a comparison of 23 symptom checkers with physician diagnosis for 45 vignettes | Accuracy of diagnosis: physician diagnosis accuracy was compared with symptom checker diagnostic accuracy for the 45 vignettes using two-sample tests of proportion. Physicians listed the correct diagnosis first in 72.1% of cases versus 34.0% of cases for symptom checkers (84.3% vs. 51.2% for correct diagnosis in top-three diagnoses). Physicians were more likely to list the correct diagnosis for high-acuity and uncommon vignettes (as opposed to low-acuity and common vignettes). The symptom checkers were more likely to list the correct diagnosis for low-acuity and common vignettes |

| Sole et al.24 | Online: a web-based triage system (24/7 WebMed) | General population | Health professional performance on real-world data. Data were evaluated from students who had used the web-based triage and then requested an appointment via e-mail (so triage data were available for comparison). Data from the triage system were compared with those from the Student Health Service Medical Record | Accuracy of diagnosis: over the 4 months of the study there were 1290 uses of the web-based triage system; 143 of the 1290 patients requested an appointment via e-mail and 59 of the 143 patients were actually treated at the health centre. Self-care was recommended in 22.7% of the 1290 uses of the system. Generally, the medical complaints of the students were common and uncomplicated. For the 59 who were treated at the health centre, the study calculated agreement between chief complaint, 24/7 WebMed classification and provider diagnosis. Chief complaint and WebMed: κ = 0.94; p < 0.001. Chief complaint and provider diagnosis: κ = 0.91; p < 0.001. WebMed and provider diagnosis: κ = 0.89; p < 0.001 |

In spite of the diverse methods and comparisons in the included studies, almost all studies agreed that the diagnostic accuracy of symptom checkers was poor, either in absolute terms (e.g. in evaluating ‘vignettes’ designed to test knowledge of specific conditions when the correct diagnosis was already known by definition) or relative to that of health professionals. In the most comprehensive evaluation, Semigran et al.6 evaluated 23 symptom checkers across 770 standardised patient evaluations. Overall, the correct diagnosis was made in 34% of cases (95% CI 31% to 37%), although performance varied widely between symptom checkers, high- and low-acuity conditions, and common and rare conditions. When the same authors compared the 23 symptom checkers with physicians using 45 vignettes, physicians were more likely to list the correct diagnosis first (out of three differential diagnoses) (72.1% vs. 34.0%; p < 0.001) as well as among the top-three diagnoses (84.3% vs. 51.2%; p < 0.001).13
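The interval quoted for overall diagnostic accuracy (34% of 770 evaluations) can be approximately reproduced with a normal-approximation (Wald) interval. The following is a minimal sketch only: the function name `wald_ci` is illustrative, and the source does not state which interval method the original authors used.

```python
import math


def wald_ci(proportion, n, z=1.96):
    """Return a normal-approximation (Wald) confidence interval for a proportion.

    proportion: observed proportion, between 0 and 1
    n: number of evaluations
    z: critical value (1.96 corresponds to a 95% interval)
    Assumption: the Wald method is used here for illustration; the original
    study may have used a different interval method.
    """
    if n <= 0 or not 0 <= proportion <= 1:
        raise ValueError("n must be positive and proportion must lie in [0, 1]")
    standard_error = math.sqrt(proportion * (1 - proportion) / n)
    margin = z * standard_error
    # Clamp to the valid range for a proportion.
    return max(0.0, proportion - margin), min(1.0, proportion + margin)


# Overall correct-diagnosis rate reported in the text: 34% of 770 evaluations.
lower, upper = wald_ci(0.34, 770)
print(f"95% CI: {lower:.0%} to {upper:.0%}")
```

Because only the rounded percentage is available in the text, the sketch starts from the proportion rather than from a count of correct diagnoses.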

The only exception to the rule was an evaluation carried out at a student health centre.24 Using data from 59 participants who used the 24/7 WebMed system and who were subsequently treated at the health centre, the study found good agreement between chief complaint, 24/7 WebMed classification and provider diagnosis (κ-values of 0.89 to 0.94). This study differed from the others in using data from students rather than a general population sample. Data were obtained only from people who were actually treated, raising a potential issue of partial verification bias. In addition, the students’ complaints were generally common and uncomplicated, a scenario in which symptom checkers performed relatively well in the study by Semigran et al.13
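The κ-values quoted above are chance-corrected measures of agreement between two sets of classifications. As a hedged illustration of how such a statistic is calculated, the sketch below implements Cohen's kappa for two lists of labels. The function name and the example labels are hypothetical and are not data from the study.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two equal-length lists of categorical labels."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed agreement: share of cases where both sources give the same label.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Expected agreement by chance, from each source's marginal label frequencies.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        # Both sources used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels for six consultations (illustrative only, not study data).
triage_system = ["respiratory", "respiratory", "urinary", "skin", "respiratory", "urinary"]
provider = ["respiratory", "respiratory", "urinary", "skin", "urinary", "urinary"]
print(round(cohens_kappa(triage_system, provider), 2))
```

A κ-value of 1 indicates perfect agreement and a value of 0 indicates agreement no better than chance, which is why values of 0.89 to 0.94 are described as good agreement.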

Accuracy of disposition (triage and signposting to appropriate services)

The ability to distinguish conditions of different degrees of urgency and advise patients on an appropriate course of action is a vital component of symptom checkers and e-consultation services and is closely linked to both safety and service use. Six included studies6,7,21,22,26,27 reported on this outcome, and one30 evaluated a ‘general purpose’ symptom checker (Table 10). As with diagnostic accuracy, diverse methodologies and outcome measures were used.

| Reference | Type of system | Population/condition | Comparator | Main results |

|---|---|---|---|---|

| Babylon Health26 | Digital: smartphone app | General population: participants in the London pilot evaluation of digital 111 services | Health professional performance on real-world data. Other: NHS111 data for 12 months from February 2017 | Accuracy of disposition: 74 triages that resulted in referral to urgent/emergency care were reviewed by three babylon doctors, who concluded that patients were signposted appropriately in all cases |

| Berry et al.30 | Online: three online symptom checkers (WebMD, iTriage and FreeMD) | Specific condition(s): abdominal pain | Health professional performance on real-world data | Accuracy of disposition: diagnoses were classified as emergency, non-emergency and self-care. Overall, 33% of symptom checker diagnoses were at the same level of seriousness as the physicians’, 39% of diagnoses were more serious and 30% of diagnoses were less serious |

| Middleton et al.27 | Digital: ‘babylon check’ automatic triage system | General population | Health professional performance on test/simulation. 12 clinicians and 17 nurses | Accuracy of disposition: available outcomes were A&E, general practice urgent, general practice routine, pharmacy and manage at home. An accurate outcome was produced in 88.2% of cases for ‘babylon check’, 75.5% of cases for doctors and in 73.5% of cases for nurses. When vignettes were delivered by a medical expert, rather than actors, ‘babylon check’ accuracy improved to 90.2% |

| NHS England7 | Multiple: pilots featured NHS Pathways (web-based, West Yorkshire), Sense.ly (‘voice-activated avatar’, West Midlands), Expert 24 (web-based, Suffolk) and babylon (app, North Central London) | General population | None: authors stated that it was not appropriate to compare pilot sites because of differences in starting date, ‘footprints’ covered, method of uptake and underlying population | Accuracy of disposition: senior clinicians tested each system under non-laboratory conditions and answered questions about the clinical accuracy of the product and its usability. Percentage clinical agreement was 95% of diagnoses for Expert 24 (43 responses), 84% of diagnoses for babylon (32 responses), 59% of diagnoses for NHS Pathways (41 responses) and 30% of diagnoses for Sense.ly (10 responses) |

| Nijland et al.21 | Online: web-based triage system (www.dokterdokter.nl) | General population | None | Accuracy of disposition: advice was to visit a doctor in 85% of cases. Authors stated that the frequency of self-care advice was limited, even when the complaint was treatable by self-care. There were also cases (e.g. headache and urinary complaints) when self-care advice was given that seemed to be inappropriate |

| Poote et al.22 | Online: prototype self-assessment triage system | General population: students attending a university student health centre with new acute symptoms | Health professional performance on real-world data. GPs’ triage rating was compared with the rating from the self-assessment system | Accuracy of disposition: the GP assessment and the self-assessment system classification of level of urgency were the same in 39% of consultations. The association was weak but statistically significant (p = 0.016). The self-assessment system advised more urgent levels of care-seeking than the GPs did. In over half of the consultations, the system’s advice was to seek care more urgently than was advised by the GPs during the face-to-face consultation |

| Semigran et al.6 | Multiple: symptom checkers available as apps (via the App Store and Google Play) were identified through searching for ‘symptom checker’ and ‘medical diagnosis’, and the first 240 results were screened. Symptom checkers available online were identified through searching Google and Google Scholar for ‘symptom checker’ and ‘medical diagnosis’, and the first 300 results were screened. They also asked developers of two symptom checkers for recommendations of other systems. They identified 143 checkers, of which 102 were excluded because they used the same content as other checkers, 25 were excluded because they looked at one condition only, 14 were excluded because they did not give a diagnosis or triage advice (only medical advice) and two were not working. Therefore, 23 symptom checkers were evaluated | General population: when a single class of illness was examined by the symptom checker, the symptom checker was excluded from the study | Other: vignettes had a diagnosis and triage attached to them and these were compared with the symptom checker advice | Accuracy of disposition: triage performance was assessed across 532 standardised patient evaluations. Appropriate advice was given in 57% of cases (95% CI 52% to 61%). Appropriate advice was given more often for urgent care than for non-urgent care or self-care (80%, 95% CI 75% to 86%, vs. 55%, 95% CI 47% to 63%, vs. 33%, 95% CI 26% to 40%; p < 0.001). Appropriate triage advice was given more often for uncommon diagnoses than for common diagnoses (63%, 95% CI 57% to 70%, vs. 52%, 95% CI 46% to 57%; p = 0.01). Four symptom checkers never advised self-care; when these were excluded, appropriate advice was given in 61% of cases (95% CI 56% to 66%). The rate of appropriate triage advice also varied significantly (p < 0.001) by type of provider: 68% for provider groups and physician associations (95% CI 58% to 77%), 59% for private companies (95% CI 53% to 65%) and 43% for health plans or governments (95% CI 34% to 51%) |

The results overall presented a mixed picture, but most studies indicated that symptom checkers were inferior and/or more cautious in their triage advice than doctors or other health professionals. In their review of 23 symptom checkers, Semigran et al.6 found that the systems provided appropriate triage advice in 57% (95% CI 52% to 61%) of cases. The rate of appropriate triage advice was higher for emergency cases (80%) than non-emergency (55%) or self-care (33%) cases. Performance also varied across the systems evaluated, with correct triage ranging from 33% to 78%. Similarly, the NHS England pilot evaluation7 of four systems found that agreement with clinical experts varied from 30% to 95%, although the number of responses also varied, reducing the comparability of the results.

For abdominal pain, Berry et al.30 evaluated three symptom checkers and found that 33% of diagnoses were at the same level of urgency as physician diagnoses (emergency, non-emergency or self-care), 39% of cases were diagnosed as more serious and 30% of cases were diagnosed as less serious than the physician’s judgement. A similar level of agreement between algorithm and clinician (39%) was reported by Poote et al.,22 whereas the system evaluated by Nijland et al.21 advised patients to visit a doctor in 85% of cases, even when the symptoms were appropriate for self-care.
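Comparisons of this kind rest on ranking each disposition by urgency and counting how often the system's advice matches, exceeds or falls below the clinician's. The sketch below shows one way to do this, using the three levels named above (emergency, non-emergency and self-care). The function name, the numeric ranking and the example cases are assumptions made for illustration, not details taken from the studies.

```python
# Assumed ordering of the three urgency levels named in the text (higher = more urgent).
URGENCY_RANK = {"self-care": 0, "non-emergency": 1, "emergency": 2}


def triage_agreement(system_advice, clinician_advice):
    """Return the share of cases where the system's advice is the same as,
    more urgent than, or less urgent than the clinician's."""
    if len(system_advice) != len(clinician_advice) or not system_advice:
        raise ValueError("inputs must be non-empty and of equal length")
    counts = {"same": 0, "more_urgent": 0, "less_urgent": 0}
    for system, clinician in zip(system_advice, clinician_advice):
        difference = URGENCY_RANK[system] - URGENCY_RANK[clinician]
        if difference == 0:
            counts["same"] += 1
        elif difference > 0:
            counts["more_urgent"] += 1  # system more cautious than clinician
        else:
            counts["less_urgent"] += 1  # system less cautious than clinician
    total = len(system_advice)
    return {outcome: count / total for outcome, count in counts.items()}


# Hypothetical cases (illustrative only, not study data).
system = ["emergency", "non-emergency", "self-care", "emergency"]
clinician = ["non-emergency", "non-emergency", "non-emergency", "emergency"]
print(triage_agreement(system, clinician))
```

Reporting the "more urgent" and "less urgent" shares separately matters because the two kinds of disagreement have different consequences: the first bears on service use and the second on safety.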

The only studies to report clearly equal or superior accuracy of disposition using an automated system were the evaluations of ‘babylon check’ by the company that developed the system. Middleton et al.27 reported that, when using patient vignettes, the app gave an accurate triage outcome in 88.2% of cases, compared with an accurate triage outcome in 75.5% of cases for doctors and in 73.5% of cases for nurses. When vignettes were delivered by a medical professional rather than actors, the accuracy of ‘babylon check’ increased to > 90%. A later report looked at triage results obtained as part of the NHS England pilot evaluation, concluding that all of the 74 referrals to urgent or emergency care were appropriate.26 However, this evidence should be treated with some caution as it was published without peer review and all of the authors were affiliated with the developer of the product.

Impact on service use/diversion

The widespread availability of online and digital systems for self-diagnosis and triage is intended to support self-management when appropriate and optimise the use of the urgent and emergency care system. However, unintended impacts are possible and for this reason it is important to monitor effects on service use, particularly the diversion of patients from urgent-care settings to primary care or self-care. Eight studies reported on this outcome (Table 11), although one16 of them merely stated that it was not possible to assess the effect of the intervention (a web-based influenza triage system) on patients’ use of health services.

| Reference | Type of system | Population/condition | Comparator | Main results |