Notes

Article history

The research reported in this issue of the journal was commissioned by the National Coordinating Centre for Research Methodology (NCCRM), and was formally transferred to the HTA programme in April 2007 under the newly established NIHR Methodology Panel. The HTA programme project number is 06/90/22. The contractual start date was in April 2004. The draft report began editorial review in January 2009 and was accepted for publication in March 2009. The commissioning brief was devised by the NCCRM who specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None

Permissions

Copyright statement

© 2010 Queen’s Printer and Controller of HMSO. This journal may be freely reproduced for the purposes of private research and study and may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Policy interventions and their evaluation

The NHS Research and Development Methodology Programme identified the need to investigate the implications of randomised and non-randomised evaluation designs for assessing the effectiveness of policy interventions.

The work of Sacks1 and the classic paper by Schulz2 showed that the benefit ascribed to a clinical intervention depends on the methodology used in the study. For instance, the effect size tends to be more pronounced in historically controlled than in randomised controlled trials (RCTs) of the same intervention, and in poorly randomised than in rigorously randomised studies. Concurrently controlled and randomised studies produce more similar results,3 although the researchers urge caution when interpreting this finding, as the number of studies included in the review was small. While research comparing the effect sizes produced by different study designs is growing in clinical topics, little work has been done with respect to policy/management interventions. These are defined as those interventions that are not confined to an individual practitioner, and include, but are not limited to, health. Examples would include peer-led teaching and health promotion in schools. Non-randomised studies (NRSs) in these areas may be cross-sectional or before and after (i.e. either with or without baseline measurements), and many of the randomised studies may be cluster randomised.

The Research and Development Methodology Programme required the compilation of existing studies that compare findings of randomised and non-randomised studies of policy interventions in order to: (1) analyse effect sizes in which similar interventions have been examined by different methods and (2) extract and summarise the information bearing on the effects of study type and quality of study findings, with the ultimate aim of learning about biases (mean bias and spread of biases) associated with different study types.

Defining policy and intervention

The study required a definition of ‘policy interventions’ that would facilitate selection of systematic reviews of policy interventions and individual trials of policy interventions. The term ‘policy intervention’ is used throughout the UK government’s policy hub website (www.policyhub.gov.uk/search_result.asp), but without a definition. We have been unable to find a definition of ‘policy intervention’. The closest we have found in dictionaries are definitions of ‘policy’ as:

a course of action or principle adopted or proposed by a government, party, individual, etc.

Oxford English Dictionary

a plan of action adopted by an individual or social group

WordNet, a lexical database for the English language (www.cogsci.princeton.edu/cgi-bin/webwn)

policy (plan) noun [C] a set of ideas or a plan of what to do in particular situations that has been agreed officially by a group of people, a business organization, a government or a political party

Cambridge Advanced Learner’s Dictionary

We are not alone in struggling to define ‘policy’ and ‘policy intervention’. In seeking a sound and operational definition of policy intervention, we have referred to the public policy literature and the literature about evaluation and evidence-informed policy/practice.

Jenkins4 observed that:

Pursuit of the question ‘what is public policy?’ leads one down the tangled path towards a definition where many have been before and from which few have emerged unscathed. There is, as Lineberry and Masotti (1975) point out, little in the way of a consistent conceptualization of the term ‘policy’ itself and pages could be, and have been, filled with competing definitions. The problem may be to provide an account that captures the detail and density of the activities embraced by the policy arena. With this detail in mind, it is worth considering the following definition of public policy:

‘a set of interrelated decisions taken by a political actor or group of actors concerning the selection of goals and the means of achieving them within a specified situation, where those decisions should, in principle, be within the power of those actors to achieve’ (Roberts 1971)

[This definition] stresses the point that policy is more than a single decision. As Anderson (1975) has argued, ‘policy making typically involves a pattern of action extending over time and involving many decisions’.

The US has a strong history of employing controlled trials to evaluate ‘social programs’5 that fall within our understanding of policy interventions. For instance, House6 cites the dictionary definition of intervention as ‘interference that may affect the interests of others’. He goes on to talk about the inherently ‘messy’ social context within which emerge ‘complex disordered events we call interventions’ (House, p. 323). House distinguishes between generic development, policy-making and site-specific interventions (p. 325). ‘Policymaking interventions’ consist of ‘establishing rules and guidelines’; in the case of education, Standard Attainment Tests in the UK would be an up-to-date example of the type of educational intervention that House discusses under this heading.

Some of the literature refers to ‘social interventions’ and ‘policy analysis and evaluation research’. Haveman7 described social interventions as programmes that, when evaluated, can inform policy, but some ‘are’ policy. There is extensive literature on the ‘War on Poverty–Great Society’ developments in the US initiated in 1965, in which the various types of social intervention that represented local changes in policy were evaluated by government mandate and these evaluations were considered directly relevant to government policy.8

Defining ‘policy intervention’

The focus of our investigation was on evaluations of interventions for public policy or service organisation and management that:

- are intended to serve communities or populations
- require more than the efforts of individual practitioners to apply
- are not a one-to-one service.

We have adapted the definition of public health interventions provided by Rychetnik et al.9 In order to embrace broader public policy, this definition of interventions is paraphrased as:

a set of actions with a coherent objective to bring about change or produce identifiable outcomes. These include policy, regulatory initiatives, single strategy projects or multi-component programmes. Policy interventions are intended to serve communities or populations. They are distinguished from one-to-one services that are for the benefit of individuals.

These interventions require more than the efforts of individual practitioners to be applied. They may include legislation or regulation; setting of policy or strategy at the level of national or local government, or institutions; the provision or organisation of services; environmental modification; or facilitating lay- or public-delivered support/education. These interventions may fall within public policy for health, education, social care, welfare, housing, criminal justice, transport and urban renewal.10

Another interpretation of the term ‘policy intervention’ refers to intervening in the policy-making process rather than intervening by means of policy. Devlin et al.11 considered the parameters of policy-making interventions in relation to service user perspectives on HIV policy. To paraphrase them:

policy interventions seek to influence decision-making . . . and ensure that policy supports or at least does not impede [services]. These interventions relate therefore to local and national policy makers (within governmental and statutory sectors) and local and national resource allocators (for example government departments and local authorities). They can also involve seeking to influence those people or agencies charged with the production and supply of information to support policy development and resource allocation. Therefore, they might also seek to influence applied and academic social researchers, epidemiologists, policy advisors and local public health surveillance personnel (collectively called, the research and policy community).

Examples of interventions that are directed at policy-makers and seek to influence their drafting of policy and legislation might include:

- lobbying government departments, local authorities, research bodies
- taking part in national consultation processes undertaken by policy and lobbying organisations and government
- joining professional associations, research/policy forums
- applying for funding from local authorities or government bodies
- subscribing to information sources of national policy-makers and lobbyists.

This distinction between collectively effecting change through setting policy, and collectively effecting change through implementing prior policy decisions is apparent in the policy analysis literature.12 Harrison12 describes policy as a process, rather than simply as an output of a decision, or an input to management. The policy process begins within the arena of political science with setting agendas around problematic issues, and progresses to designing and evaluating efforts to solve these problems. Thus, a ‘policy intervention’ may be either a method for influencing the policy-making process, or a method for influencing the policy implementation process.

Evaluating public policy interventions

Within the area of public policy there has been wide debate about the suitability of experimental evaluation methods. While it has been suggested that the RCT should be the ‘gold standard’ and used whenever possible,13,14 others have argued that evaluating social and policy interventions is a complex task and that RCTs, and experimental designs in general, are not always practical or even desirable.15,16 Nutbeam17 suggests that complex multicomponent interventions (e.g. directed at communities or regions using a range of media, delivered in a number of settings) are more likely to be effective in bringing about population health gains than ‘single issue’ initiatives (e.g. directed at individuals or small groups, using fewer media, delivered in a particular setting), but are much harder to evaluate. For example, it might not be possible to allocate whole communities or regions to study groups randomly, and it is not easy to isolate the effects of competing interventions, thus confounding the results. The World Health Organization, commenting on the evaluation of health promotion, goes as far as saying:

The use of randomised control trials to evaluate health promotion initiatives is, in most cases, inappropriate, misleading and unnecessarily expensive.18

Oakley et al.19 have summarised the objections to RCTs for evaluating social interventions, arguably a category that includes all policy interventions, as: randomised experiments oversimplify causation, cannot be carried out in complex institutional and other settings or to test complex interventions, ignore the role of theory in understanding intervention effectiveness, are inappropriate in circumstances in which ‘blinding’ is impossible, are politically unacceptable and too expensive, have been tried and failed, are unethical because valued treatments are withheld from control groups and/or experimental/quantitative research is inherently exploitative, and perfectly good alternatives to RCTs that pose none of these problems exist and should therefore be used instead. These objections focus largely on the science, ethics and feasibility of randomisation. They have led to a dearth of randomised studies in some policy areas, which needs to be taken into account when preparing research syntheses, and to research communities who remain disinclined to mount randomised evaluations. Oakley et al.19 used three recent UK trials of policy interventions (day care for preschool children, social support for disadvantaged families, and peer-led sex education for young people) to consider issues relating to the use of randomisation and suggest some practical strategies for its use in trials of social interventions. Their refutations of the objections to RCTs are supported by an analysis of the relevant theoretical literature.20

Indeed, experimental evaluations have long been considered the optimal design for evaluation in some fields of social policy, particularly in the US.5 Oakley21 cites examples of experimental policy evaluations that date back as far as the early decades of the twentieth century, and discusses how experimental methods became popular, particularly in the US, between the 1960s and the 1980s to evaluate the effectiveness of public policy:

This history is conveniently overlooked by those who contend that randomised controlled trials have no place in evaluating social interventions. It shows clearly that prospective experimental studies with random allocation to generate one or more control groups is perfectly possible in social settings. (p. 1239)

More recently there have been key trials that have evaluated the effectiveness of so-called ‘complex’ health promotion interventions. For example, the North Karelia Youth Program22 was a large-scale multicomponent intervention evaluated using an RCT involving over 4000 participants, featuring a range of activities including classroom education, media campaigns, changes to nutritional content of school meals, health screening, and health education initiatives in the workplace.

Particularly innovative are experimental evaluations of interventions addressing environmental or structural factors integrating sexual health and employment/economic policy. Examples include a matched controlled trial of the impact of an employment creation programme on teenage pregnancy23 and a cluster RCT of microcredit schemes for impoverished women to develop increased economic independence, social status, and power within sexual negotiations, thereby reducing HIV transmission.24

An analysis of the reasons for not adopting the RCT design concludes that, despite serious practical objections and partial remedies, RCTs are logically and empirically superior to all currently known alternatives.25 The view that the RCT is inappropriate to test the success of policy interventions is refuted by a bibliometric analysis, which concludes that between 6% and 15% of impact evaluations of childhood interventions in education and justice employ a randomised design.26

Our own experience of conducting RCTs supports their use for evaluating social interventions.19 Our experience of conducting and evaluating systematic reviews reveals their widespread use elsewhere. A recent systematic review of interventions to promote healthy eating and physical activity among young people27,28 identified 75 evaluation studies, of which 31 (41%) used an RCT design, 30 (40%) used a controlled trial (without randomisation), and 14 (19%) used only one study group with outcomes measured before and after the intervention. While the evidence base in this area is likely to comprise a vast range of evaluation designs, the role of experimental evaluation cannot be discounted.

Efforts to consolidate this evidence base have increased, together with a recent surge in production of systematic reviews of the effects of policy interventions.29,30 Reviews have recently been completed, or are in the process of being completed, in the areas of health (e.g. interventions to improve vaccination coverage),31 education (e.g. after school programmes)32 and criminology (e.g. ‘Scared straight’ interventions to discourage juvenile delinquency).33

Randomisation and effect sizes of clinical interventions

The RCT is widely regarded as the design of choice for evaluating the effectiveness of clinical interventions in health care, as it can provide the most internally valid estimate. The main benefit of the RCT is the use of a randomisation procedure that, when properly concealed, ensures that the subjects receiving the treatment and control are equal with respect to all conditions except for receiving the treatment or the control. With sufficient sample sizes, and a truly random generation of the allocation sequence, comparison groups should on average be equal with respect to both known and unknown prognostic factors at baseline.34 RCTs also have written protocols specifying, and thus standardising, important aspects of participant enrolment, intervention, observation and analysis.35

Our knowledge of the importance of certain design features of RCTs has been derived primarily in the field of clinical health-care interventions.2,36,37 Meta-epidemiological techniques have successfully been used to investigate variations in the results of RCTs of the same intervention according to features of their study design.38 Substantial numbers of systematic reviews of RCTs have been identified, and results compared between the trials meeting and not meeting various design criteria such as proper randomisation, concealment of allocation and blinding. These comparisons have then been aggregated across the reviews to obtain an estimate of the systematic bias removed by the design feature.2,37 The results have been shown to be reasonably consistent across clinical fields, providing some evidence that meta-epidemiology may be a reliable investigative technique.39
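The two-stage aggregation described above can be illustrated with a minimal sketch. It is not the method used in the cited studies: it assumes that each trial is summarised by a log odds ratio and its variance, that trials are pooled within a review by fixed-effect inverse-variance weighting, and that every review contains trials both with and without the design feature. All function names and the example data are hypothetical.

```python
import math


def pool_fixed(estimates):
    """Inverse-variance fixed-effect pooling of (log_effect, variance) pairs."""
    weights = [1.0 / var for _, var in estimates]
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / sum(weights)
    return pooled, 1.0 / sum(weights)


def review_log_ror(trials):
    """Within one review, compare trials lacking the design feature with trials
    meeting it. Returns the log ratio of odds ratios and its variance."""
    meeting = [(t["log_or"], t["var"]) for t in trials if t["adequate"]]
    lacking = [(t["log_or"], t["var"]) for t in trials if not t["adequate"]]
    est_meeting, var_meeting = pool_fixed(meeting)
    est_lacking, var_lacking = pool_fixed(lacking)
    return est_lacking - est_meeting, var_lacking + var_meeting


def meta_epidemiological_summary(reviews):
    """Aggregate the within-review comparisons across reviews.
    Returns the pooled ratio of odds ratios with an approximate 95% interval."""
    per_review = [review_log_ror(trials) for trials in reviews]
    log_ror, var = pool_fixed(per_review)
    half_width = 1.96 * math.sqrt(var)
    return (math.exp(log_ror),
            math.exp(log_ror - half_width),
            math.exp(log_ror + half_width))


# Hypothetical data: two reviews, each mixing adequate and inadequate trials.
example_reviews = [
    [{"log_or": -0.20, "var": 0.04, "adequate": True},
     {"log_or": -0.45, "var": 0.05, "adequate": False}],
    [{"log_or": -0.10, "var": 0.02, "adequate": True},
     {"log_or": -0.25, "var": 0.03, "adequate": True},
     {"log_or": -0.55, "var": 0.06, "adequate": False}],
]

ror, lower, upper = meta_epidemiological_summary(example_reviews)
print(f"Ratio of odds ratios: {ror:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Under these assumptions, where odds ratios below 1 indicate benefit, a pooled ratio below 1 would mean that trials lacking the design feature report larger apparent benefits than trials meeting it.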

The use of meta-epidemiology has also been extended from the comparison of design features within a particular study design to comparisons between study designs. A recent Health Technology Assessment report reviewed eight such examples:40 seven considered medical interventions, while one considered psychological interventions. The conclusions of these reviews varied, partly due to variations in their methods and rigour but also because of limitations in the meta-epidemiological methods used. The only robust conclusion that can be drawn is that in some circumstances the results of randomised and non-randomised studies differ, but it cannot be proved that differences are not due to other confounding factors. The key lessons that can be learned from this work are:

- The identification and selection of comparisons of randomised and non-randomised evidence should be systematic. This will not overcome the problem of selective publication of primary studies (if studies with positive results are more likely to be published, regardless of design, meta-epidemiological reviews will find designs showing intervention effects in the same direction if not of similar magnitude) but should at least ensure that all available comparisons are included regardless of whether designs show similar or conflicting results.
- To reduce confounding from factors other than lack of randomisation, randomised and non-randomised studies should be assessed for differences in the participants, interventions and outcomes. The possibility of temporal confounding of study types (NRSs typically being performed prior to the RCTs) should also be assessed.
- Randomised and non-randomised studies should also be assessed for differences in study methods other than allocation. Discrepancies and similarities between study designs could be partly explained by differences in other unevaluated aspects of methodological quality of the RCTs and/or the NRSs, such as blinding or intention-to-treat analysis.
- Sensible, objective criteria should be used to determine differences or equivalence of study findings as these can have a large influence on the conclusions drawn. The amount of data available is also important; for example in one review, for each intervention five RCTs on average were compared with four NRSs. Hence the absence of a statistically significant difference cannot be interpreted as evidence of ‘equivalency’, and clinically significant differences in treatment effects cannot be excluded.40

These previous investigations also suggest that there may be variability in the direction of bias introduced when randomisation is not used. Selection bias is commonly thought of as resulting from the systematic selection of either high or low risk participants to receive an intervention. This would lead to the intervention group being ‘heavily weighted by the more severely ill’41 or alternatively including those least likely to suffer adverse consequences from an intervention (less severely ill). If in fact selection bias arises due to haphazard variations in case-mix, there will be a mixture of under- and overestimates of the treatment effect. The results might all be biased, but not all in the same direction.40 In these circumstances, an increase in the heterogeneity of treatment effect (beyond that expected by chance) rather than (or as well as) a systematic bias would be expected. Deeks et al.40 suggest that a formal statistical comparison should aim to compare the heterogeneity in treatment effects, and not just the average treatment effects between randomised and non-randomised groups.
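The argument that haphazard selection bias inflates heterogeneity without necessarily shifting the average can be illustrated with a small simulation. This is a sketch under stated assumptions rather than an analysis from the report: the true effect, the sampling standard deviation and the spread of the bias are arbitrary hypothetical values, and the bias is assumed to be normally distributed around zero so that it is equally likely to favour either group.

```python
import random
import statistics


def simulate_estimates(n_studies, true_effect, sampling_sd, bias_sd, seed):
    """Simulate study-level effect estimates.
    Each estimate is the true effect plus sampling error plus, when bias_sd > 0,
    a study-specific bias whose direction is haphazard (mean zero)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_studies):
        estimate = true_effect + rng.gauss(0.0, sampling_sd)
        if bias_sd > 0:
            estimate += rng.gauss(0.0, bias_sd)
        estimates.append(estimate)
    return estimates


def compare_designs(n_studies=2000, true_effect=-0.3,
                    sampling_sd=0.1, bias_sd=0.2, seed=42):
    """Compare the average and the spread of estimates from simulated
    randomised studies (no bias) and non-randomised studies (haphazard bias)."""
    randomised = simulate_estimates(n_studies, true_effect, sampling_sd, 0.0, seed)
    non_randomised = simulate_estimates(n_studies, true_effect, sampling_sd,
                                        bias_sd, seed + 1)
    return {
        "mean_difference": statistics.mean(non_randomised) - statistics.mean(randomised),
        "variance_ratio": statistics.variance(non_randomised) / statistics.variance(randomised),
    }


result = compare_designs()
print(f"Difference in mean effect: {result['mean_difference']:.3f}")
print(f"Ratio of variances (non-randomised / randomised): {result['variance_ratio']:.2f}")
```

With these hypothetical settings the two designs have nearly the same average effect, while the variance of the non-randomised estimates is several times larger. A comparison restricted to average effects would therefore miss the bias, which is why heterogeneity is compared as well.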

Randomisation and effect size of policy interventions

The effects of policy interventions have been assessed through the use of RCTs, non-randomised controlled trials (nRCTs) and other study designs. The choice has been influenced by the relative rigour of the designs and the feasibility in the circumstances of applying prospective designs and random allocation of interventions. The weight given to each of these influences (rigour and feasibility) when embarking on policy evaluations may be driven by philosophy, as much as by research evidence.

Although studies largely from clinical areas have identified detailed design features of rigorous RCTs that reduce systematic bias in estimating effect sizes,39 meta-epidemiological investigations of medical and psychological interventions concluded that it is less clear what influences the differences in results drawn from randomised and non-randomised studies, as results of NRSs sometimes, but not always, differ from results of randomised studies of the same intervention.40 There is growing evidence in the meta-analytic literature that even strong quasi-experimental designs in criminology are more likely to report a result in favour of treatment and less likely to report a harmful effect of treatment than randomised studies.42

Chapter 2 considers the methodologies appropriate for investigating the extent and possible causes of such differences.

Chapter 2 Methodology: design and data sources

Four approaches have been adopted in previous studies to investigate the relationship between randomisation and effect size of interventions:

1. Comparing controlled trials that are identical in all respects other than the use of randomisation by ‘breaking’ the randomisation in a trial to create non-randomised trials. These are often called resampling studies.
2. Comparing randomised and non-randomised arms of controlled trials mounted simultaneously in the field. These are replication studies.
3. Comparing similar controlled trials drawn from systematic reviews that include both randomised and non-randomised studies. These include structured narrative reviews and sensitivity analyses within meta-analyses.
4. Investigating associations between randomisation and effect size using a pool of more diverse randomised and non-randomised studies within broadly similar areas. These more diverse studies can be drawn from across reviews addressing different questions, or from broad sections of literature. This is known as meta-epidemiology.

This study sought reports of all four approaches conducted by others, and built on their work by conducting original research with new analyses for three of these approaches (1, 3 and 4 above) across a range of public policy sectors.

The latter two approaches were strengthened in new analyses by testing pre-specified associations supported by carefully argued hypotheses.

Resampling of randomised controlled trials

Resampling studies re-analyse data from RCTs to explore widely used alternatives to randomisation such as: comparing areas, matching areas and adjusting for differences between groups using multivariate analysis. By ‘breaking’ the randomisation in the trials, this analysis creates non-randomised trials and explores the extent to which established alternatives to randomisation are able to find the same results as the original RCTs.

Because these studies are based on trials that are identical other than the use of randomisation, they explore the direct association between randomisation and effect size without being confounded by other factors that might influence effect size when calculated from similar, but not identical, field trials.
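The idea of ‘breaking’ the randomisation can be sketched with simulated data. The example below is illustrative only and does not reproduce the analyses reported later: it assumes a hypothetical two-area trial in which the areas differ in their baseline outcome level, and it builds a non-randomised comparison by taking the intervention arm from one area and the control arm from the other. All names and parameter values are assumptions.

```python
import random
import statistics


def simulate_trial(seed=0, n_per_arm=500, true_effect=1.0, area_means=(10.0, 12.0)):
    """Simulate a two-area trial. Within each area both arms are drawn from the
    same population, which stands in for successful randomisation."""
    rng = random.Random(seed)
    records = []
    for area, baseline in enumerate(area_means):
        for arm in ("control", "intervention"):
            for _ in range(n_per_arm):
                outcome = rng.gauss(baseline, 2.0)
                if arm == "intervention":
                    outcome += true_effect
                records.append({"area": area, "arm": arm, "outcome": outcome})
    return records


def mean_outcome(records, area, arm):
    """Mean outcome for one arm in one area."""
    return statistics.mean(
        r["outcome"] for r in records if r["area"] == area and r["arm"] == arm
    )


def randomised_estimate(records, areas=(0, 1)):
    """Average of the within-area differences, preserving the randomisation."""
    return statistics.mean(
        mean_outcome(records, a, "intervention") - mean_outcome(records, a, "control")
        for a in areas
    )


def resampled_estimate(records, intervention_area, control_area):
    """Non-randomised comparison: intervention arm from one area against the
    control arm from a different area."""
    return (mean_outcome(records, intervention_area, "intervention")
            - mean_outcome(records, control_area, "control"))


trial = simulate_trial(seed=1)
print(f"Randomised estimate: {randomised_estimate(trial):.2f}")
print(f"Area 1 intervention vs area 0 control: {resampled_estimate(trial, 1, 0):.2f}")
print(f"Area 0 intervention vs area 1 control: {resampled_estimate(trial, 0, 1):.2f}")
```

In this sketch the randomised estimate stays close to the simulated true effect, whereas each area-based comparison absorbs the baseline difference between areas, in opposite directions. Matching or multivariate adjustment would then be judged by how far it brings the non-randomised estimates back towards the randomised one.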

Such studies were sought in a methodological review described in Chapter 4. Two new resampling studies are reported in Chapter 7. The data are drawn from two trials of social support for families with young children, one carried out in the UK, and the other in Canada. Both trials span the health and social care sectors.

Replication studies

Replication studies assess the effects of intervention from different comparisons within the same study. In order to investigate the role of randomisation, replication studies compare the effect sizes from randomised and non-randomised comparisons. Such studies were sought in the methodological review described in Chapter 4. We did not have access to data from other replication studies for new analyses.

Comparable field studies

Randomised and non-randomised evaluations drawn from a single review are comparable field studies for addressing the following questions. Do randomised and non-randomised evaluations lead to differences in effect sizes and variance? If so, are these differences due to the randomisation or to other factors associated with randomisation? If differences are due to characteristics of the interventions or their evaluation, is it possible to overcome these difficulties in the design or analysis of evaluations and/or research syntheses whether or not studies are randomised?

Extensive exploratory analyses would be expected to identify some associations between randomisation and other factors, if only by chance. To avoid the risk of identifying chance associations, we tested a limited number of well-argued associations for which hypotheses rested on our understanding of policy interventions, research communities and evaluation methodology, or arose from previous research. By drawing on published literature, we proposed a series of potential confounders, and argued how these are likely to be interrelated (see Chapter 3).

We proposed several possible conclusions for an exploratory investigation of published evaluations:

- There is no systematic difference between the effect sizes of RCTs of policy interventions and the effect sizes of non-randomised trials; so non-randomised trials may be adequate to evaluate policy interventions.
- The effect sizes of RCTs of policy interventions are systematically different from the effect sizes of non-randomised trials; this difference cannot be explained by any other variables in the interventions or their evaluation, so it is assumed that randomisation is required to control for unidentifiable influences; and examples of RCTs that are ethically and scientifically sound should be sought to model future evaluations of the effects of policy interventions.
- The effect sizes of RCTs of policy interventions are systematically different from the effect sizes of non-randomised trials; however, this difference can be explained by one or more other variables in the evaluation, such as baseline differences, that are amenable to statistical adjustment in order to take into account the difference; in these circumstances, non-randomised trials with the appropriate corrections may be adequate to evaluate the impact of policy interventions.
- Randomised trials of policy interventions lead to systematically different effect sizes compared with non-randomised trials; where this difference can be explained but not quantified by one or more other variables in the evaluation (but this difference is not amenable to adjustment) the strength of evidence to support decisions about policy interventions is necessarily weaker. An example may be small single-centre randomised trials led by enthusiasts, compared with large multicentre uncontrolled trials attempting to assess the impact as an intervention is implemented more widely.
- The variance of non-randomised trials is greater than that of RCTs. Deeks et al.40 have found that, while non-randomised controlled trials (nRCTs) do not differ systematically in their effect sizes, their variance is greater than that of RCTs. This suggests that confidence intervals (CIs) for individual nRCTs should be considered to be larger than stated, which means that statements of statistical significance should be treated with caution. If the purely statistical studies show that nRCTs differ from RCTs only in variance (not effect size), we would conclude that nRCTs are biased in ways that cannot be explained simply because they are non-randomised: other biases (e.g. selection or publication bias) are at work which lead us to conclude that nRCTs overstate the statistical significance of their interventions, but not necessarily the size of the effect.

Thus, any investigation of a possible association between randomisation and effect size needs to take into account the similarities or differences of interventions and evaluations in which this association is tested.

Meta-epidemiology

Our study extended the use of meta-epidemiology by Deeks et al.40 to policy interventions within health and other sectors to draw together systematically what is already known about the choice of study design for evaluating policy. Lessons learnt from that meta-epidemiological review were then applied to a meta-epidemiological study of policy evaluations using our own data sets.

Policy interventions

In attempting to distinguish ‘policy interventions’ from interventions examined in earlier methodological studies, our discussions and searches for relevant literature touched on the following issues:

Prior research

From the outset we were aware of a similar methodological study by Deeks et al.40 This study did not have any inclusion or exclusion criteria regarding type of intervention, other than that interventions had to have ‘intended effects’. Two chapters of that report are particularly relevant to our work:

- Chapter 3: a review of eight ‘meta-epidemiological’ reviews that reviewed comparisons of RCTs and NRSs in which the original authors had specifically set out to examine the similarity/differences in results according to randomisation. Deeks et al. 40 reviewed interventions that were almost exclusively therapeutic in nature (i.e. aimed to treat or cure disease), but a handful related to the organisation of care and to educational interventions and were eligible for our study.

- Chapter 5: a review of existing systematic reviews that included randomised and non-randomised studies. This chapter included ‘policy interventions’ as well as clinical/medical interventions. We have already drawn on the reviews within this chapter to outline the range of ‘policy interventions’ for this study. The study reported here extends the work of Deeks et al. 40 by comparing the results of randomised and non-randomised evidence from a wider range of policy interventions.

Resistance to randomised controlled trials

As the purpose of this methodological study is to resolve questions about how essential RCTs are in areas where they are less readily available, we anticipated finding relevant studies (randomised and non-randomised) in areas where there has been some but not complete resistance to RCTs. 14,43,44 These include circumstances in which:

- it is difficult to stop contamination between intervention group(s) and control(s) (e.g. community wide interventions)

- benefit may derive in part from an individual or group actively seeking to participate in the particular intervention (e.g. peer support provided by patient organisations)

- randomisation is not feasible (e.g. legislation)

- interventions are multicomponent (e.g. a combination of health service initiatives, face-to-face health education in schools and in the community plus mass media).

Within this study we shall explore whether differences other than the presence or absence of randomisation could account for any variation that might be found in results, and comment on the extent to which resistance to RCTs is justified.

Complex interventions and their relationship with policy interventions

Although not directly relating to policy interventions, the Medical Research Council (MRC) framework for the development and evaluation of RCTs for complex interventions to improve health seemed to capture the essence of what we have been discussing. However, the MRC definition of complex interventions includes interventions that may be delivered by individuals (e.g. the different social/educational/treatment aspects of physiotherapy distinguish physiotherapy as a complex intervention) (see Appendix 1). We noted that in other less clinical areas, ‘multicomponent’ interventions was the term of choice, although this usually included multipractitioners too.

Level of policy making

Discussion distinguished policy interventions at different levels (national, regional, community and institution). These distinctions appeared to translate poorly to policy evaluations at these different levels. In particular it was noted that an evaluation of institution-wide policies may precede or follow national endorsement of a policy; the report may not clearly acknowledge which of these circumstances prevails, and whether it is the former or the latter may make little difference to the methodological challenges of evaluation. When a definition of policy intervention was applied to a set of evaluations (see below) it confirmed the observation above that social interventions as programmes, when evaluated, can inform policy, but that some ‘are’ policy. 7

Implementation and its relationship with policy

We envisaged many clinical interventions also being ‘policy’; for instance, prescribing aspirin following a heart attack. In order to avoid replicating methodological research in the clinical area, we distinguished between, for example, a trial of aspirin treatment for heart attack (a trial of a clinical intervention) and a trial of methods to encourage greater use of aspirin treatment for heart attack (a trial of a social or educational intervention to increase uptake), and included the latter but not the former. We included other similar interventions such as interventions to increase the uptake of vaccination or screening. This scope made reviews conducted by the Cochrane Effective Practice and Organisation of Care review group particularly relevant.

Developing operational definitions

Examining prior reviews

A draft definition of policy interventions, and illustrative examples, was developed through several rounds of discussion within the research team. It was refined by two researchers independently applying the emerging criteria and definitions to a set of 40 systematic reviews to judge whether each review would be included or excluded. Twenty of these reviews were sampled from the Health Technology Assessment (HTA) report on evaluating NRSs40 (this was a subsample of a larger set originally chosen for their relevance on the basis of their titles only). The remaining 20 were selected randomly from the Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) Database of Promoting Health Effectiveness Reviews (DoPHER; eppi.ioe.ac.uk).

Different categories of policy intervention can be further distinguished by their details, either as elements of policy setting, or as elements of implementing policies such as legislation or regulation, provision or organisation of services, environmental modification, or facilitating education or support delivered by lay people. Examples are offered below.

Setting of policy/strategies

- Government policy (e.g. policies on vaccination/immunisation/screening; fiscal/economic incentives to participate in sport/physical activity; nutritional policies such as the ‘National School Fruit Scheme’).

- Local government policy (e.g. provision/sponsorship of community based activities to promote cultural diversity and social cohesion, and to prevent discrimination and violence, such as ‘Neighbourhood Renewal Strategies’; community-wide inter-agency strategies to promote health such as ‘Health Action Zones’).

- Institutional policy (e.g. school-wide strategies to promote mental and emotional health, such as bullying/harassment prevention; curriculum review to prevent disaffection with school/academic studies; health promoting hospitals).

Legislation/regulation

- Environmental health regulations (e.g. waste disposal, pollution/emissions and its impact on health, smoking restrictions).

- Taxation (e.g. on tobacco, alcohol).

- Advertising/sponsorship regulation (e.g. on tobacco products).

- Food standards regulations (e.g. nutritional content of school meals).

Provision/organisation of services

- Education (e.g. increasing access to education through initiatives such as ‘Education Action Zones’; vocational strategies to aid transition from school to work such as the ‘Connexions’ service; class sizes; training the trainer cascades).

- Health promotion (e.g. increasing access to, and uptake of, facilities/resources; initiatives to promote health in the workplace; mass media campaigns; community development; social support).

- Health care (e.g. increasing access to, and uptake of, facilities/resources; effective organisation of services; effective promotion, dissemination and uptake of evidence based clinical practice guidelines).

- Social services (e.g. effective organisation of services; effective alliances with health and education sectors).

Environmental modification

- Creation of safer cities (e.g. improved street lighting to prevent crime; traffic calming schemes, cycle paths/helmets, and speed cameras to prevent injuries).

- Urban renewal (e.g. housing improvement programmes to promote better living conditions/health/sanitation/hygiene).

Facilitating lay/public delivered support/education

- Facilitating one-to-one support (e.g. lay birth partners and fathers supporting women in childbirth; enabling carer/family support for chronic illness; peer-delivered counselling in schools).

- Facilitating one-to-group support (e.g. peer-delivered health promotion in schools).

- Facilitating community action (e.g. health promotion delivered by the community).

- Facilitating self-directed activities (e.g. self-management of chronic disease; independent learning).

Some of the examples above reflect current UK intersectoral policy initiatives – attempts to set ‘joined-up policy’. Some interventions may span health/education/housing and a number of other sectors. Finally, the categories could be viewed as a hierarchy with legislation providing a context for the setting of policy/strategy, which in turn affects how services are provided and organised, and which may also manifest as changes to the physical environment.

The draft criteria and definitions worked well, and minor revisions were made to improve the inter-rater reliability for the handful of cases for which their relevance was questionable. From this sample:

- The majority of reviews described interventions in health care and health promotion. Reviews in other areas (e.g. education) were in a minority.

- Only around a quarter were included (n = 11; 28%). The majority were excluded (n = 25; 62.5%), and four (10%) were unclear.

- Most of those included fell into the ‘Provision/organisation of services’ and the ‘Setting of policy/strategies’ categories. We found none that had addressed ‘legislation/regulation’.

- Many of those excluded were interventions delivered by individual practitioners (mostly health professionals).

- At least five of those excluded were ‘pharmacological’ interventions, such as vitamin/mineral supplementation, and in one case smoking cessation aids (e.g. lozenges, chewing gum).

- One type of intervention that may be relevant, but usually involved some professional input, was activity under the broad heading of ‘self-management’. An example was one of the reviews for which it was ‘unclear’ whether or not it was a ‘policy intervention’. 45 It was about education for the self-management of asthma. Patients generally received written information (e.g. leaflets), and underwent a short interaction with a health professional (plus on-going consultations to monitor progress), but largely managed their illness on a day-to-day basis by themselves. This concept could be applied in many other contexts (e.g. self-learning/education/independent study initiatives). Perhaps a good example would be the development of policies or initiatives to promote distance learning as a way of encouraging greater access to further or higher education. The concept of self-management/education/help could be considered a policy intervention although, in these circumstances, such interventions will likely incur some professional one-to-one input in order to help people initiate their own activities.

Applying draft criteria to sources of policy interventions

In order to develop more detailed operational definitions, draft criteria were applied by two researchers to abstracts and extracted data of outcome evaluations included in: (i) a map of studies of HIV health promotion for men who have sex with men (MSM); (ii) a map of studies of children and healthy eating; and (iii) a review of the promotion of sexual health/prevention of sexually transmitted diseases among women. Refining the criteria involved successive rounds of independent coding and reflective discussion.
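
Agreement between two researchers coding independently can be summarised with a chance-corrected statistic. The sketch below computes Cohen's kappa for two raters; the report does not state which agreement statistic, if any, was calculated, so this is an assumption made for illustration, and the codes shown are hypothetical rather than data from this study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one label to each item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical codes for ten reviews: include, exclude or unclear.
rater_1 = ["include", "exclude", "exclude", "unclear", "include",
           "exclude", "exclude", "include", "exclude", "exclude"]
rater_2 = ["include", "exclude", "exclude", "exclude", "include",
           "exclude", "unclear", "include", "exclude", "exclude"]

print(round(cohens_kappa(rater_1, rater_2), 3))
```

Recomputing such a statistic after each round of discussion would be one way to track whether revisions to the criteria improve consistency between coders.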

For policy interventions

Policy interventions are those interventions which establish or modify collective plans for action so as to have systematic impact on the public. These policy interventions operate via institutions (e.g. hospitals, practitioner bodies, schools, public authorities, commercial bodies, patient organisations) and communities (e.g. geographical or social groups, networks, people with shared interests) and do not include personal policies of individuals.

Policy interventions require more than the authority of individual practitioners to instigate, more than the resources of individual practitioners to implement and more roles or skills than those of a single practitioner to implement. Their instigation and implementation depend upon interaction between organised groups of people. Groupings that make policy range in formality, geographic scope and purpose, but examples include local, national and international government, the regulatory bodies for practitioners and industry and governing bodies of institutions such as schools, health-care services and workplaces. Because of the involvement of social units in policy instigation and implementation, policy interventions are often better evaluated for their effectiveness through the allocation and study of social units (e.g. schools, communities, wards), as opposed to individuals.

Thus, a policy intervention required one or more of the following:

- more than the authority of individual practitioners to instigate

  - consultants’ ward procedures are not policy interventions because a single consultant has the authority to implement them; neither are teacher-led interventions confined to the classroom and falling within the curriculum

  - hospital wide procedures are policy interventions (e.g. complaints procedures), as are school procedures for engaging parents with pupils’ work [e.g. allowing parents to withdraw their children from Personal, Social and Health Education (PSHE) lessons]

- more than the resources of individual practitioners to implement

  - interventions delivered largely within the resources of an individual practitioner with no additional costs other than their reasonable time are ‘practice interventions’ (e.g. prescribing paracetamol for infants with fever)

  - interventions requiring resources beyond the reach of individual practitioners in their conventional roles, such as interventions requiring additional budgets, are policy interventions (e.g. widespread advertising for smoking cessation clinics; prescribing discounted access to fitness facilities)

- more roles/skills than those of a single practitioner to implement

  - procedures implemented by many practitioners within the remit of their individual professional roles are not policy interventions (e.g. sharing the workload of facilitating parent craft classes)

  - procedures requiring a team of mixed roles are policy interventions (e.g. replacing doctors with nurses; or provision of specialist stroke units)

- but may, nevertheless, be delivered by individual providers to individual recipients when the intention is to implement a policy

  - one-to-one treatments are not necessarily policy interventions (e.g. drugs, surgical treatments, counselling, therapy)

  - directives to consistently adopt a particular intervention are interventions to implement policy (e.g. prescribing aspirin following a heart attack, or counselling before and after HIV tests)

- or may be evident from the use of clustered designs to evaluate their effectiveness, where clustering implies a collective decision about the implementation of different policy interventions in different arms of the trial

  - one-to-one interventions readily evaluated by random allocation of individuals to different treatments are largely practice interventions where such RCTs can inform practice decisions

  - interventions evaluated with higher units of allocation (e.g. practitioner, setting) are largely policy interventions where such RCTs can inform policy decisions.

For subcategories within policy intervention

In general, the categories of policy interventions described above (setting of policy/strategies, legislation/regulation, provision/organisation of services, environmental modification and facilitating lay/public delivered support/education) could be readily applied. The reviewers identified the possible need for expansion of the ‘Environmental modification’ category to include the modification of school meals. Any computer-based interventions were considered policy interventions on the grounds of the costs and staffing required for computer support, in addition to the teaching staff required for implementing the intervention.

The level(s) at which policy has been enacted

Four categories were developed for policy level: policy for an institution, policy for a community, policy for a region and policy for a nation. In general, it was clear when policy interventions were being implemented ‘institution wide’, although this did not exclude them being implemented ‘institution wide’ across a region or a nation. Also, it was often not possible to discern from the report whether the policy had been set nationally, regionally or institutionally, or whether institutions were obliged to adopt national or regional policy. These distinctions may have no discernible effect on the methodology of evaluation.

Applying operational definitions

Overall, the definitions and categories as described above (see Developing operational definitions) have proved possible to apply in a way that is consistent between two reviewers working independently. Limitations to the work done so far include characteristics of the studies appraised: EPPI-Centre reviews tend to focus on policy level interventions as described here, and so the tests done so far have only limited power to assess how well these tools discriminate between policy interventions to be included and other interventions to be excluded. However, the distribution of types of policy interventions that appeared in each EPPI-Centre review varied, and discriminating between types of policy interventions appeared practical.

In developing our data sets we coded as policy evaluations those:

- in which there was an explicit directive/policy for the intervention, or

- in which the intervention was beyond the capacity of individual providers (in terms of their roles/skills, resources, or authority).

These inclusion criteria match the focus of the commissioning brief on policy/management interventions which was ‘those interventions that are not confined to an individual practitioner . . . examples would include peer-led teaching and health promotion in schools’.

Explicit directives or policies include named policies such as national government legislation or programmes or, less formally, explicit collective action plans in which non-researchers had been involved in the decision-making. Collective action plans could be explicit either from descriptions of planning processes or from descriptions of the products of their planning processes, such as guidelines sponsored nationally or regionally by professional organisations or charities, or commercially available curricula.

Operating the second inclusion criterion requires a judgement about the roles, skills, resources and authority of individuals. For instance, distributing fruit to children at school would be judged a policy intervention because it would be beyond the authority/resources of an individual practitioner on the grounds that we do not expect teachers to pay for it out of their own pockets and if the school were to have a budget for it, it must also have a policy for it.
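
The two inclusion criteria can be expressed as a simple decision rule, as in the sketch below. The class, field and function names are hypothetical, and the judgements recorded for each example are illustrative readings of the cases discussed above, not coding decisions taken from our data sets.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    """Reviewer judgements about one evaluation (all field names are hypothetical)."""
    explicit_directive: bool  # a named policy or explicit collective action plan
    beyond_individual_authority: bool
    beyond_individual_resources: bool
    beyond_individual_roles_or_skills: bool


def is_policy_evaluation(evaluation):
    """Apply the two inclusion criteria; meeting either one is sufficient."""
    beyond_capacity = (
        evaluation.beyond_individual_authority
        or evaluation.beyond_individual_resources
        or evaluation.beyond_individual_roles_or_skills
    )
    return evaluation.explicit_directive or beyond_capacity


# Distributing fruit to children at school: no named policy is assumed here,
# but the cost is beyond what an individual teacher would be expected to meet.
school_fruit = Evaluation(False, False, True, False)

# Prescribing paracetamol for infants with fever: within one practitioner's remit.
paracetamol = Evaluation(False, False, False, False)

print(is_policy_evaluation(school_fruit))  # True
print(is_policy_evaluation(paracetamol))   # False
```

The rule itself is mechanical; the difficulty lies in the judgements that populate each field, which is why independent coding by two reviewers was used.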

In addition to inclusion/exclusion criteria, we anticipated being able to separately identify and analyse evaluations of interventions that operate at different levels (national, regional, community, or institutional level) and in different policy sectors (housing, transport, health, crime and justice, etc.) or across policy sectors.

Data sources

Lipsey46 argues that the most suitable data sets for investigating the association between randomisation and effect size are either small numbers of evaluations that are nearly identical, except for randomisation, or large numbers of interventions allowing for diversity in the study population and design. In the first instance, any association between randomisation and effect size would be readily apparent. In the second, any association would need to be distinguished from associations of effect size with other variables, such as differences in the populations, interventions, outcomes or evaluation methods. Resampling studies, which draw on the data from individual RCTs, take Lipsey’s argument for comparing similar trials a step further.
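
The logic of a resampling study can be sketched with simulated data. In the example below, a trial with no true intervention effect is simulated, and non-randomised comparisons are then constructed by resampling the intervention group from higher-need participants and the comparison group from lower-need participants. The selection rule, the covariate called need, the sample sizes and the random seed are all assumptions made for illustration; this is not the procedure applied to the two trials described below.

```python
import random
import statistics


def simulate_trial(n_per_arm, rng):
    """Simulate a two-arm RCT with no true effect; each participant is (need, outcome)."""
    def participant():
        need = rng.gauss(0.0, 1.0)                  # hypothetical prognostic covariate
        outcome = 0.5 * need + rng.gauss(0.0, 1.0)  # outcome depends on need, not on arm
        return need, outcome
    intervention = [participant() for _ in range(n_per_arm)]
    control = [participant() for _ in range(n_per_arm)]
    return intervention, control


def mean_difference(intervention, control):
    """Difference in mean outcome between intervention and control groups."""
    return (statistics.mean(o for _, o in intervention)
            - statistics.mean(o for _, o in control))


def selected_comparison(intervention, control, rng, size):
    """Resample a non-randomised comparison: intervention group drawn from
    higher-need participants, comparison group from lower-need participants."""
    high_need = [p for p in intervention if p[0] > 0]
    low_need = [p for p in control if p[0] <= 0]
    return rng.choices(high_need, k=size), rng.choices(low_need, k=size)


rng = random.Random(2004)
intervention, control = simulate_trial(2000, rng)

randomised = mean_difference(intervention, control)
resampled = [mean_difference(*selected_comparison(intervention, control, rng, 200))
             for _ in range(500)]

print("randomised estimate:", round(randomised, 3))
print("mean of resampled non-randomised estimates:",
      round(statistics.mean(resampled), 3))
```

Because both comparisons draw on the same participants, intervention and outcome measures, any difference between the randomised and resampled estimates in this sketch is attributable to how the groups were formed.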

Resampling studies data

We had access to two trials of policy interventions in which data were suitable for resampling studies.

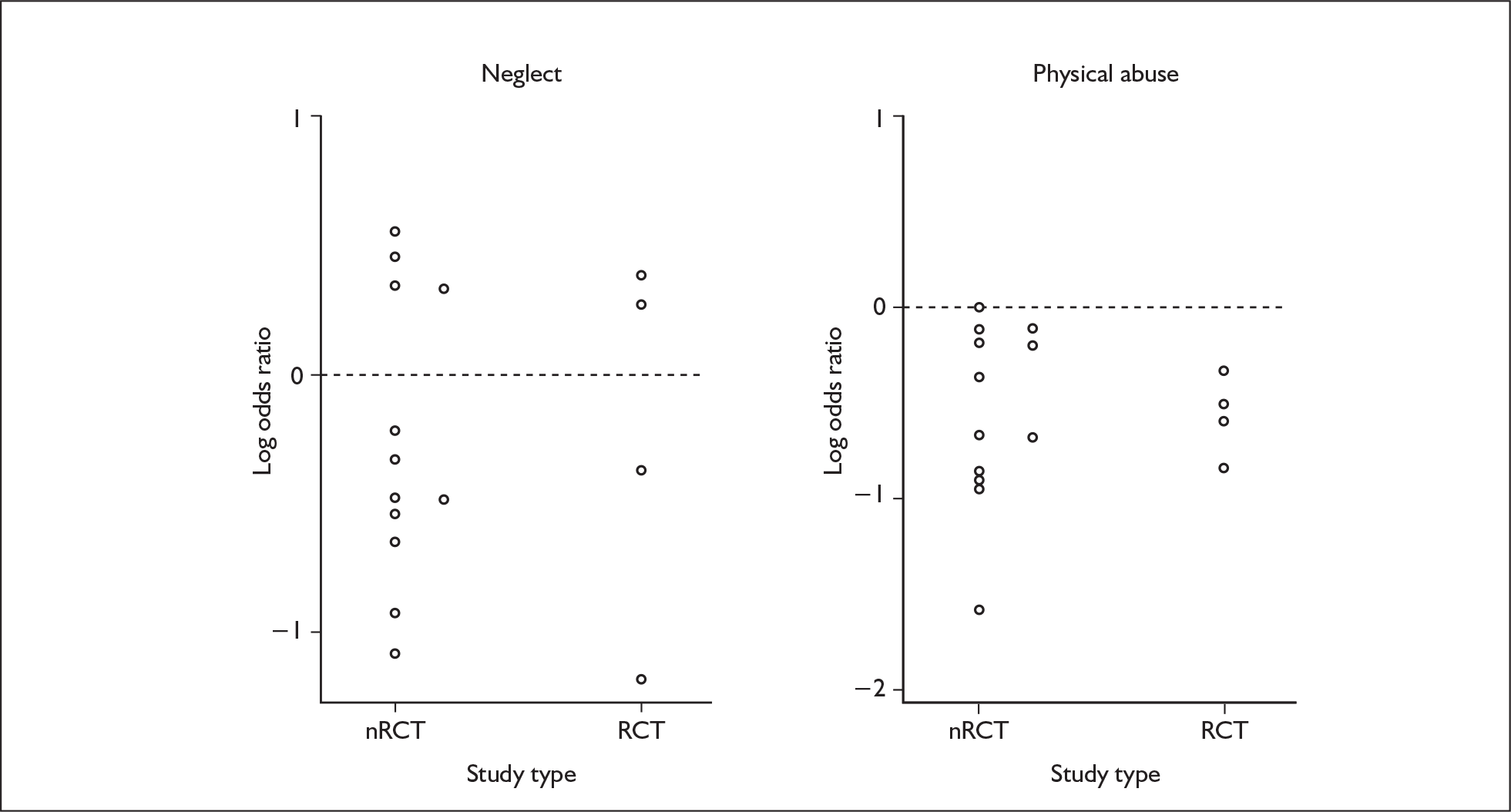

Trial 1: The Social Support and Family Health Study

This study was an RCT which assessed whether increased postnatal support could influence maternal and child health outcomes. 47

Two support interventions were set up. The first, the Support Health Visitor intervention, was the offer of 1 year of monthly supportive listening visits, the first to take place when the baby was approximately 10 weeks old. The primary focus for this intervention was on the mother and her needs. The second intervention, using the services of local community support organisations, entailed being assigned to one of eight community groups that offered drop-in sessions, home visiting and/or telephone support for a period of 1 year.

The trial compared maternal and child health outcomes for women who had been offered either of the support interventions with outcomes for control women who received standard services only. The primary outcomes were child injury, maternal smoking and maternal psychological well-being. Secondary outcomes were uptake and cost of health services, household resources, maternal and child health, experience of motherhood and child feeding.

No evidence of impact was found for either intervention on the primary outcomes. The Support Health Visitor intervention was popular with women and was associated with some of the secondary outcomes. Future research could place greater emphasis on the social support role of health visitors, on developing more culturally sensitive outcome measures and on exploring the role of social support in delaying subsequent pregnancy.

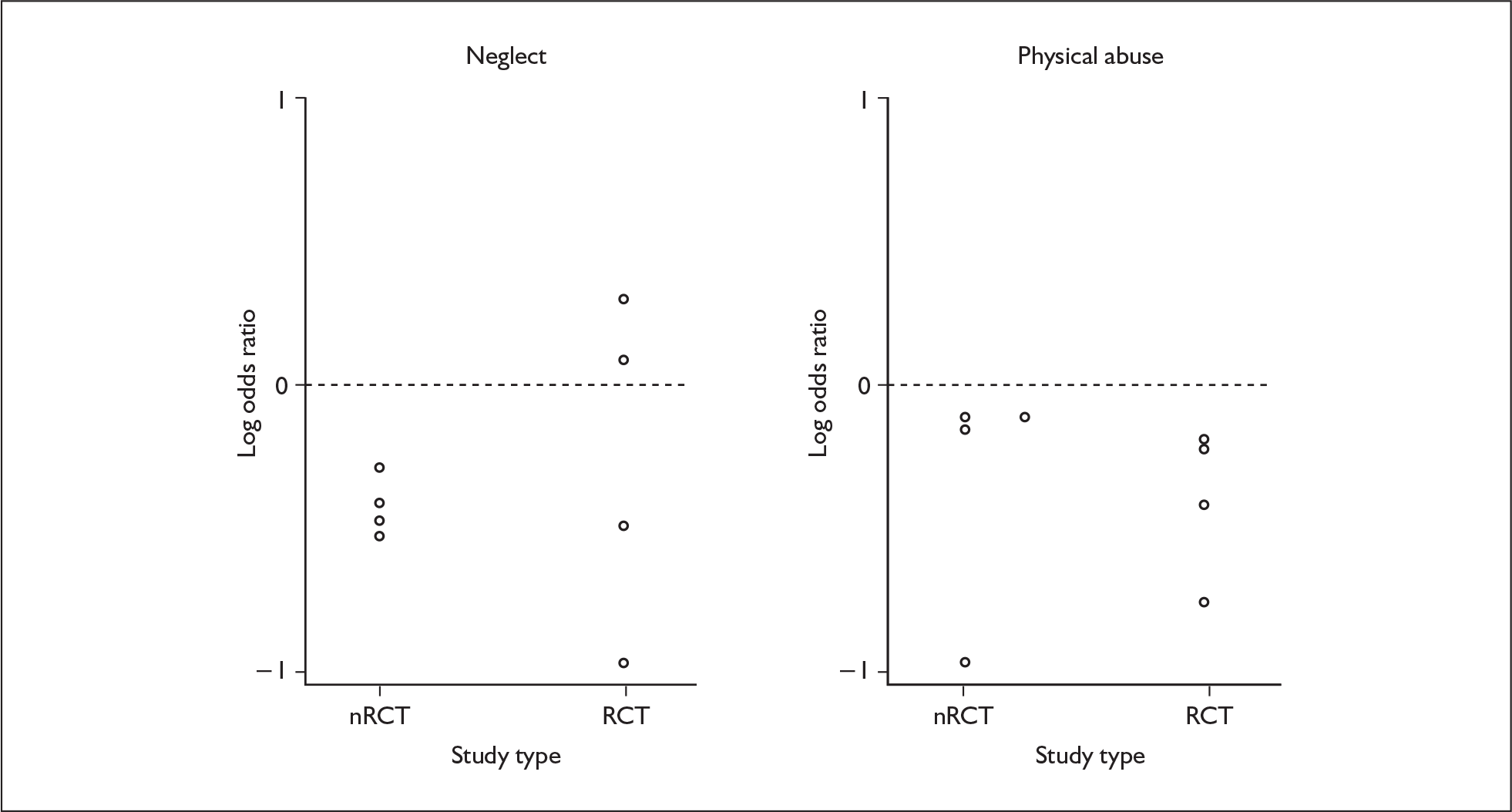

Trial 2: The effectiveness of home visitation by public health nurses in preventing the recurrence of child physical abuse and neglect

Home visitation by public health nurses to disadvantaged first-time mothers is known to be effective in preventing child abuse and neglect. 48 This RCT therefore aimed to investigate whether home visitation by public health nurses was also effective in reducing recidivism in families with a history of abuse or neglect.

Families with a history of one child being exposed to physical abuse or neglect were assigned to either a control or intervention group. The control group received standard treatment. The intervention group received a programme of home visitation by nurses in addition to the standard treatment. The main outcome was recurrence of child physical abuse and neglect, and analysis was by intention to treat.

At 3 years’ follow-up, recurrence of physical abuse did not differ between control and intervention groups, indicating that the intervention was ineffective. Although hospital records showed significantly higher recurrence of hospital attendance in the intervention group than in the control group, the authors concluded that this may have been due to specific advice from public health nurses. No significant differences were found for secondary outcomes. These findings suggested that this home-based strategy was not effective, and that much more effort needed to be made towards preventing child abuse or neglect before it becomes established as a pattern of behaviour in a family.

Similar studies drawn from systematic reviews

We sought readily available systematic review data stored on EPPI-Reviewer, software for storing and analysing data about primary research for inclusion in systematic reviews. This source includes data from reviews of health promotion (conducted by or in collaboration with the EPPI-Centre published 1999–2004) and education (conducted by the EPPI-Centre or by review groups supported by the EPPI-Centre published before June 2004).

Of the 24 education systematic reviews, only seven included both RCTs and NRSs; these covered policy interventions of Information and Communication Technology (ICT) for literacy (three reviews), out-of-home integrated care and education, paid adult support in mainstream schools, supporting pupils with emotional and behavioural difficulties in mainstream primary schools, and personal development planning for improving student learning. Between them they included 32 RCTs and 82 NRSs. Given their diversity, this was considered too few studies to analyse further.

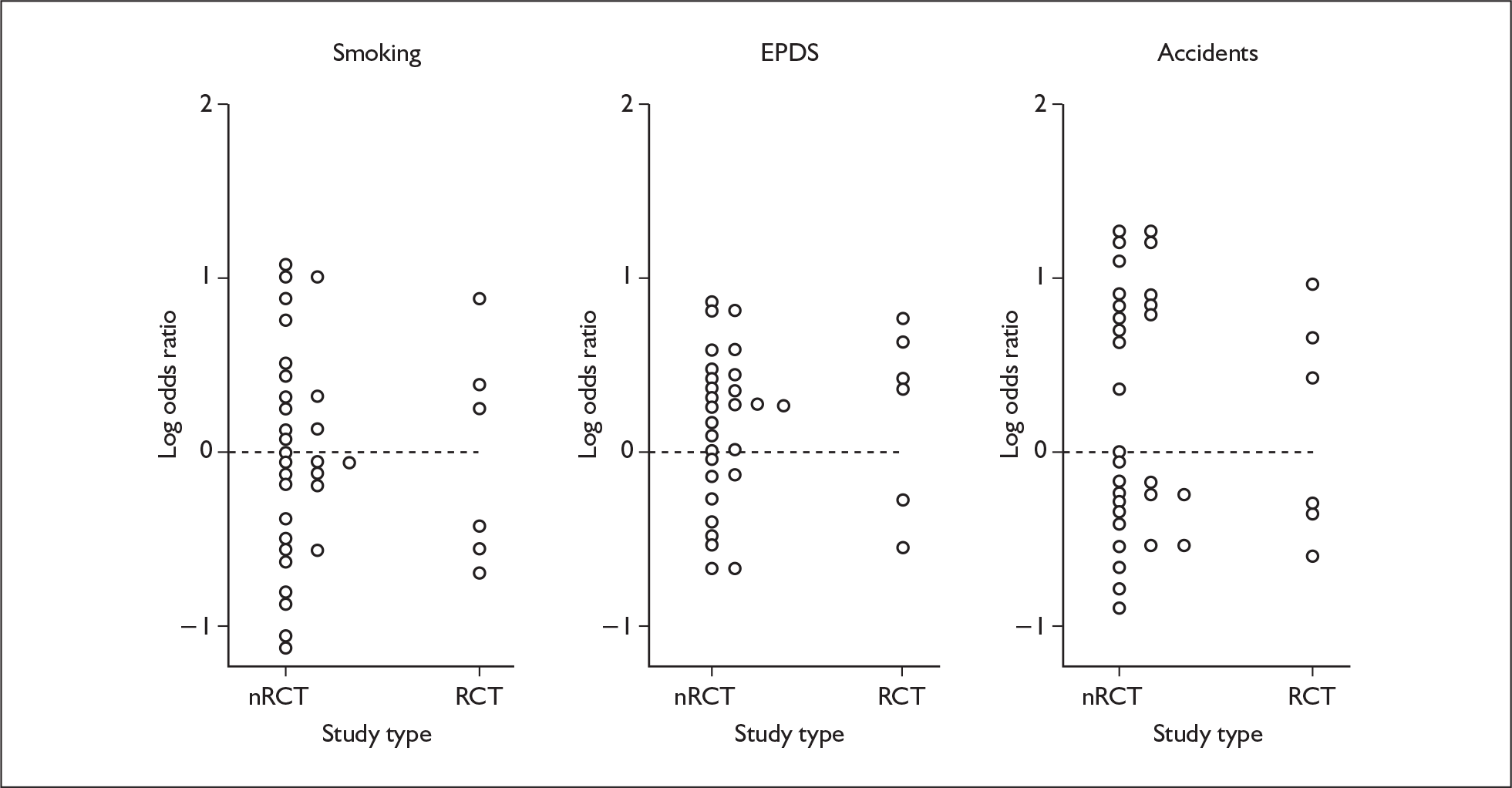

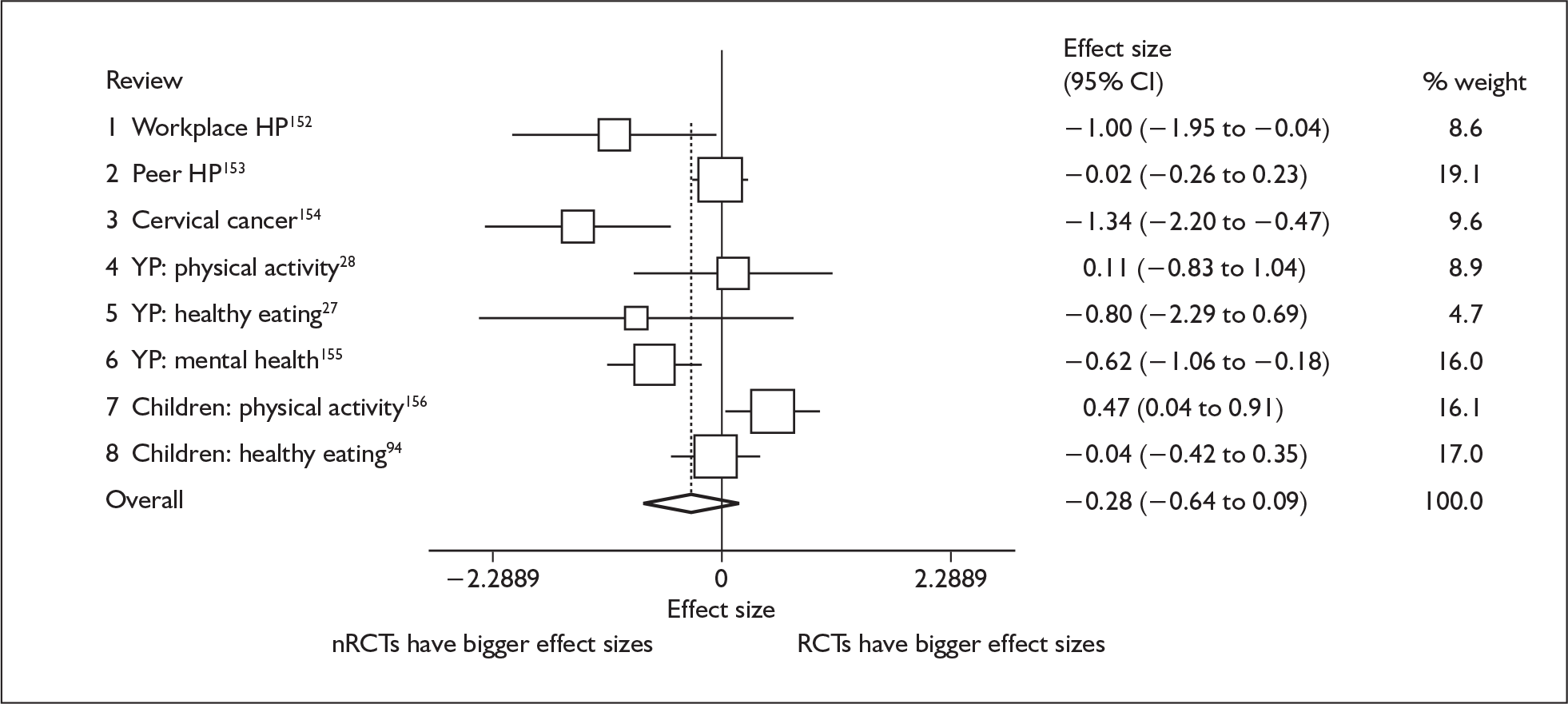

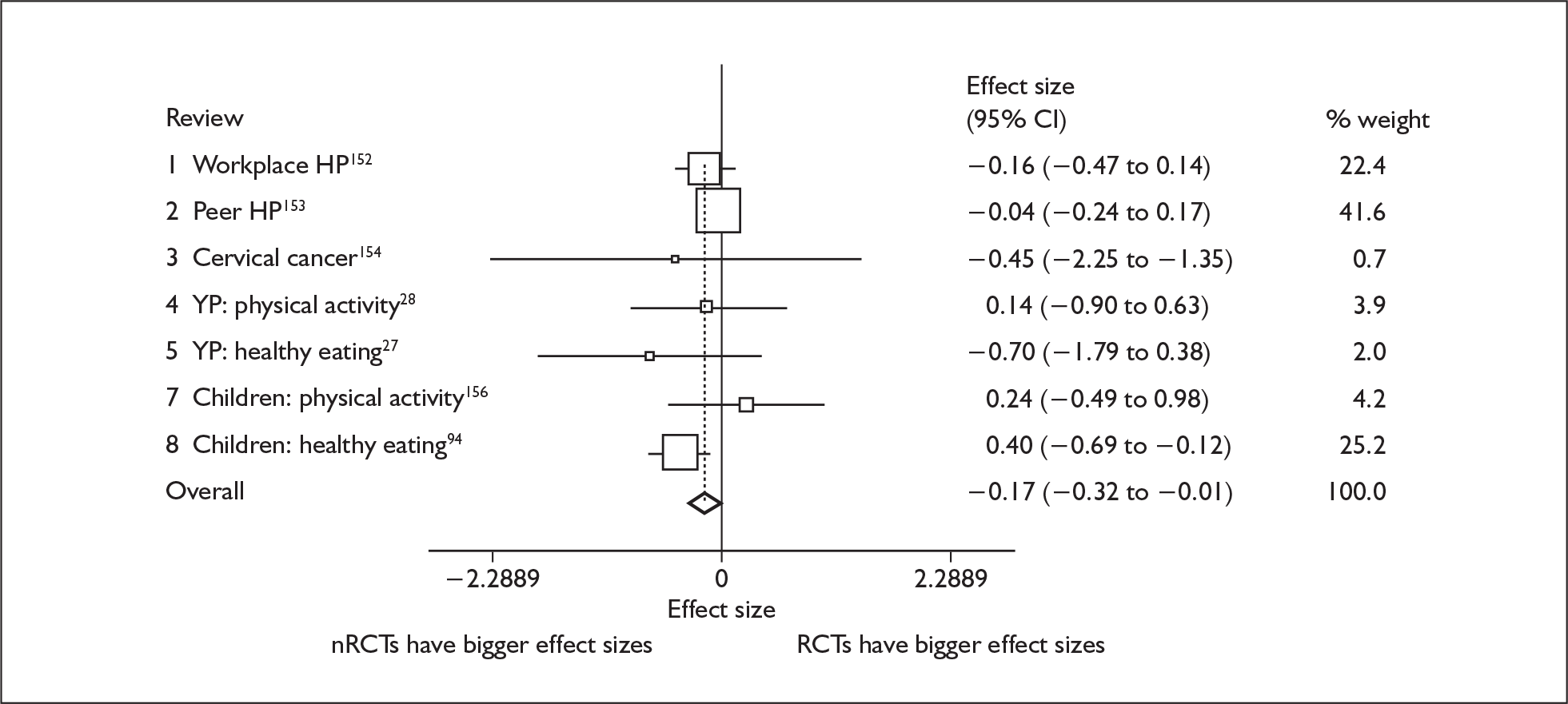

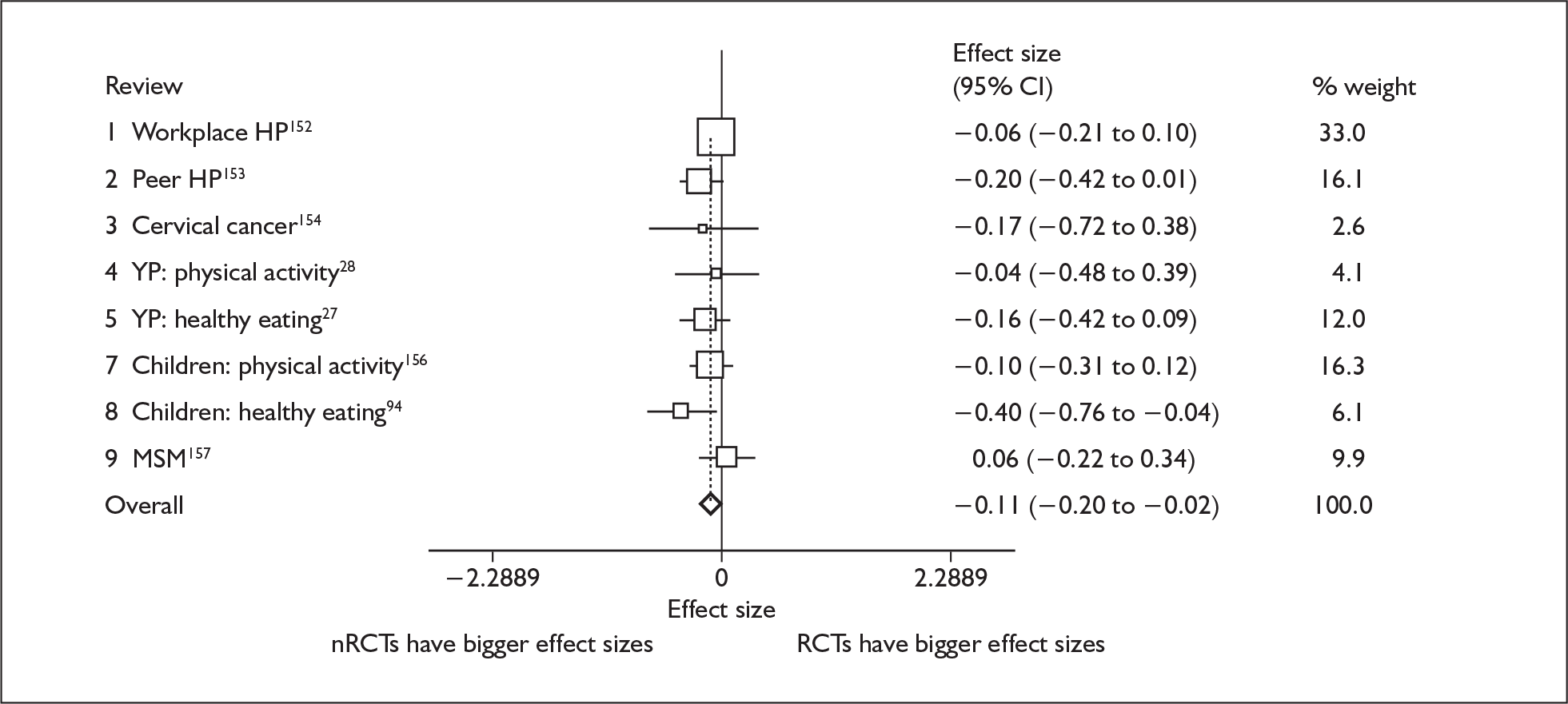

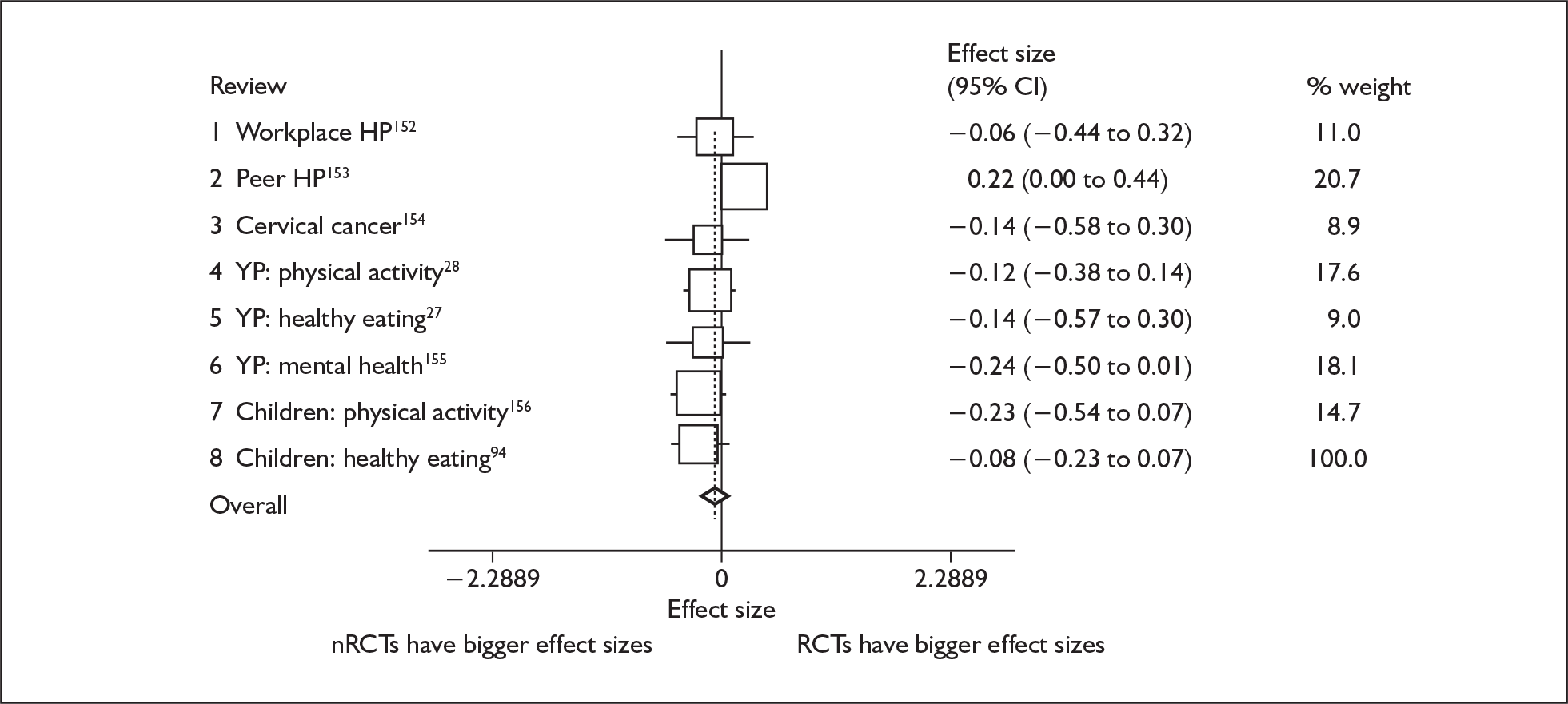

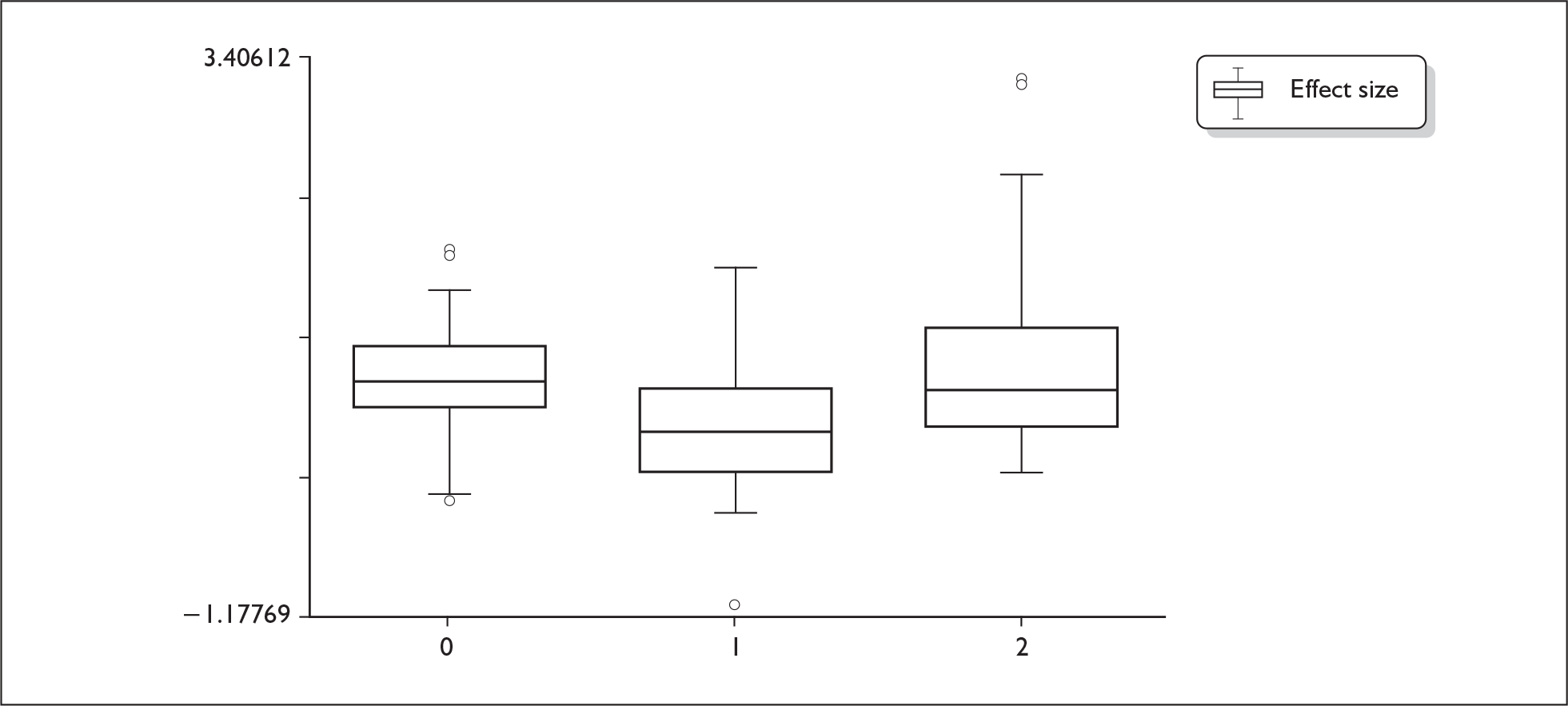

There were nine systematic reviews conducted by the EPPI-Centre between 1996 and 2004, and one Cochrane review conducted using EPPI-Centre software. These reviews all included both randomised and non-randomised studies. These reviews were of health promotion, with studies often conducted in educational settings. Between them they addressed workplace health promotion, peer-delivered interventions, mental health, physical activity (two reviews), healthy eating (two reviews), cervical cancer and sexual lifestyle (nine reviews with a total of 206 studies). See Appendix 2 for summaries of these reviews.

Meta-epidemiological data

The studies described above, when combined as 206 controlled trials of health promotion, also provided a suitable data set for meta-epidemiological investigations.

Another data set suitable for this approach was also available from ongoing work by Colorado State University reviewing and synthesising the past 20 years of research and advancements in the area of transition for youths with disabilities (www.ncset.org/publications/viewdesc.asp?id=714). These data from 126 studies are also held on EPPI-Centre software.
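
A meta-epidemiological comparison of this kind can be sketched as pooling effect sizes separately for each study design and then comparing the pooled estimates. The example below uses fixed-effect inverse-variance pooling, which is a simplifying assumption made for illustration (other approaches, such as random-effects models or meta-regression, are equally plausible). The effect sizes and standard errors are hypothetical, not values from the data sets described above.

```python
import math


def pooled_effect(studies):
    """Fixed-effect inverse-variance pooled estimate and its standard error.

    studies: list of (effect_size, standard_error) tuples.
    """
    weights = [1.0 / se ** 2 for _, se in studies]
    total = sum(weights)
    estimate = sum(w * es for w, (es, _) in zip(weights, studies)) / total
    return estimate, math.sqrt(1.0 / total)


def compare_designs(rcts, nrss):
    """Difference between pooled NRS and RCT estimates, with a z statistic."""
    rct_est, rct_se = pooled_effect(rcts)
    nrs_est, nrs_se = pooled_effect(nrss)
    difference = nrs_est - rct_est
    se_difference = math.sqrt(rct_se ** 2 + nrs_se ** 2)
    return difference, difference / se_difference


# Hypothetical standardised effect sizes and standard errors.
rcts = [(0.10, 0.08), (0.22, 0.10), (0.05, 0.12)]
nrss = [(0.35, 0.15), (0.18, 0.09), (0.40, 0.20)]

difference, z = compare_designs(rcts, nrss)
print("pooled RCT estimate:", round(pooled_effect(rcts)[0], 3))
print("pooled NRS estimate:", round(pooled_effect(nrss)[0], 3))
print("difference (NRS - RCT):", round(difference, 3), "z =", round(z, 2))
```

A comparison of this form says nothing about why designs differ, so any association found would still need to be distinguished from associations with populations, interventions, outcomes or evaluation methods.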

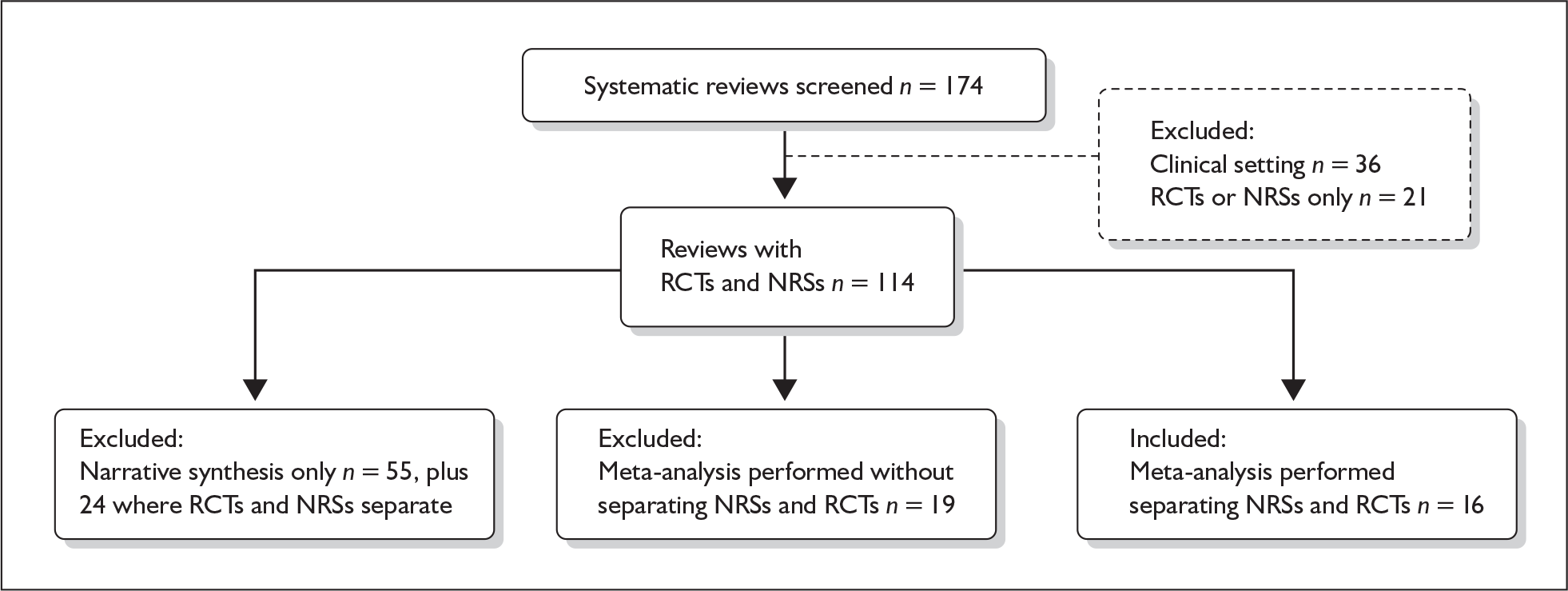

Reviews of reviews

For a review level analysis, data were sought from methodological studies and systematic reviews of randomised and non-randomised studies using systematic search strategies, selection criteria and data extraction procedures.

Chapter 3 Hypothetical associations between randomisation and effect sizes of policy interventions

In Chapter 1 we described divergent views on the appropriateness of RCTs for evaluating the effects of policy interventions. Here we build on our experience of reviewing policy interventions, and the relevant literature, to propose how randomisation and effect sizes may be associated in evaluations of policy interventions.

Our original objectives were to determine whether RCTs lead to the same effect size and variance as NRSs of similar policy interventions, and whether these findings can be explained by other factors associated with the interventions or their evaluation. To meet the second of these objectives we proposed a number of variables for which arguments could be mounted, hypothesising links between them, randomisation and effect size. These variables and hypotheses are presented below and summarised in Table 1.

| Potential confounder | Association with randomisation | Association with effect size | Possible technical solutions |

|---|---|---|---|

| Participants | |||

| Baseline characteristics | |||

| Groups may differ at baseline because: either recipients of the intervention have self-selected or those who declined to participate have been assigned to the control/comparison group; or recruitment favoured those most amenable to participation, or those in most need, or excluded older people or those with comorbidities | nRCTs are more likely to have more heterogeneous populations and non-equivalence between groups | Heterogeneity and non-equivalence at baseline may influence the calculated effect size and variance | Randomisation wherever possible; better matching and assessment of baseline characteristics elsewhere. More pragmatic trials reflecting ‘real world’ problems that will be more generalisable and more likely to be implemented |

| Attrition | |||

|

Higher attrition may be expected in community and home settings than in organisational settings Higher attrition may be expected in transient populations (e.g. commercial sex workers, asylum seekers, socially excluded people) |

It is easier to employ randomisation and have good follow-up for trials set in organisations. Attrition and randomisation can be used as quality markers for trials | High attrition may be associated with losing a disproportionate number of socially disadvantaged people who are more resistant to health promotion/public health initiatives |

Greater investment in recruiting and retaining participants – use of NHS number for tracking. Adjusting for attrition, assuming those lost to follow-up have poor outcomes Innovative strategies for managing contact with transient populations (e.g. using ‘peer evaluators’) |

| Intervention | |||

| Theoretical underpinnings of the intervention | |||

| Public health triallists value experimental methodologies more than do health promotion specialists, who place more emphasis on involving the community in developing and delivering the intervention |

Experimental methodologies in public health are associated with randomisation Health promotion is associated with community development but not randomisation |

Rigorous public health trials minimise effect sizes Community development is mounted with the expectation that it will maximise effectiveness |

Cross disciplinary research Encourage a results driven culture among social scientists |

| Public involvement in developing the intervention | |||

| Empowerment theories attribute responsibility to people not for the existence of a problem, but for finding a solution to it | The goal of ‘full and organised community participation and ultimate self-reliance’55 is a feature of social work such as community development and youth work, rather than a feature of public health and randomised experiments | Successful interventions specifically aimed at reducing health differentials include ensuring interventions address the expressed or identified needs of the target population, and the involvement of peers in the delivery of interventions56 | |

| Setting and boundaries of the intervention | |||

| Interventions with a broader reach (communities, regions, nations) have more diffuse boundaries than those set within institutions |

Randomisation is less often applied to community, regional or national interventions. Clustered trials are more appropriate for these and some organisational level interventions Attrition may be greater in larger scale interventions, where tracking of individuals is more difficult than within an organisation (see Attrition) |

Clustering reduces the power of a trial, so clustered evaluations are less likely to show effectiveness Standardised implementation of interventions may be more difficult across large communities, regions or whole countries than in single organisations, and therefore may be less effective |

Larger scale cluster trials for policy interventions Greater investment in tracking participants – use NHS number |

| Provider of the intervention: Community/peer provider | |||

| Community development and peer delivery specialists value health promotion theory and process evaluations more than RCTs | These interventions may therefore be found to have less randomisation | Peers may be seen as more credible sources of information than professionally trained, health educators, and may be particularly helpful in reaching ‘at risk’ populations | Cross disciplinary research |

| Clinician | |||

| Many clinicians who design and provide interventions value RCTs | These interventions may therefore be found to have more randomisation | Methodological rigour associated with randomisation is likely to lead to lower effect sizes69 | |

| Researcher provider | |||

| Researchers have more control over the intervention and evaluation | Theoretically, the researcher would therefore be better able to randomise | Interventions will be found to be more consistently implemented by enthusiasts, and therefore more effective | |

| Outcomes | |||

| Choice of outcome domains | |||

| Health outcomes are more readily measured in clinical settings | Clinical settings are more likely to mount RCTs, and have clinical providers, and long-term follow-up (see above) | ||

| Choice of outcome measures | |||

| Clinical outcomes are more commonly found in clinical settings. Choice of ‘hard’ or ‘soft’ outcomes can be associated with randomisation | If clinicians favour RCTs, clinical outcome measures may be associated with greater randomisation | Clinical outcomes will be found to be more resistant to change than ‘softer’ outcomes such as reported behaviour | |

| Evaluation design | |||

| Sample size | |||

| Sample size affects the choice of study design | Larger sample size may be more likely in NRSs | Smaller sample sizes are more likely to give spurious results; of these, those with positive results are more likely to be published | Weight of evidence by sample size |

| Control group | |||

| Study design is linked with the use of a control group | Control groups are always found in RCTs, but only sometimes in NRSs | Use of a control group leads to smaller effect sizes than uncontrolled evaluations | |

| Blinding | |||

| Blinding of participants, recruiters, intervention providers and outcome assessors to the intervention allocation | Blinding is easier with randomisation | Poor concealment, more common in nRCTs, will be associated with greater effect sizes | |

| Follow-up | |||

| Length of follow-up periods is linked with study design | Long follow-up may be easier within institutions, where randomisation is also easier | Long follow-up will be associated with declining effect size | |

| Clustering | |||

| Clustered trials with few clusters are more likely to be ‘natural experiments’ | Natural experiments do not include randomisation | Natural experiments may be more likely to have enthusiasts supporting the intervention and non-enthusiasts supporting the comparisons, and therefore lead to greater effect sizes | |

| Quality of the reporting | |||

| Quality of reporting specific elements of a study is associated with researchers’ disciplines | Better reporting (of pre- and postintervention data) will be seen to be associated with triallists who also support randomisation | Reporting of pre- and postintervention data precludes effect sizes inflated by differences between groups | Adjusting for differences between groups, in primary studies and in reviews |

Potential confounders associated with participants of the evaluation

The population of a given study may be related to both the design of an evaluation and its effect size.

Baseline characteristics

Groups in nRCTs may differ at baseline for a number of reasons. Recipients of the intervention may have self-selected, or those who declined to participate may have been assigned to the control/comparison group. Alternatively, recruitment may have favoured those most amenable to participation or those in most need, or excluded older people or those with comorbidities. Well-conducted RCTs, with their standardised procedures for recruitment and data analysed according to the intention to treat rather than receipt of the intervention, are more likely to achieve equivalence between groups. Non-equivalence at baseline may influence the calculated effect size and variance.

Attrition

Attrition rates may be linked to the quality of the evaluation. Higher attrition may be expected in community and home settings than in organisational settings where it is easier to employ randomisation and good follow-up. High attrition may also be associated with losing a disproportionate number of people who are socially disadvantaged and more resistant to interventions, and hence lead to a misleadingly large effect size. 49,50 For this reason, high attrition may be associated with both lack of randomisation and higher effect sizes. Potential technical solutions in primary research include greater investment in recruiting and retaining participants, perhaps using the NHS number for tracking. Other solutions for primary studies and systematic reviews include adjusting for attrition, assuming those lost to follow-up have poor outcomes.
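One way to picture the adjustment for attrition is a worst-case sensitivity analysis for a binary outcome, in which everyone lost to follow-up is counted as not having achieved the good outcome. The sketch below is illustrative only: the `Arm` class, the function names and all counts are hypothetical and are not taken from the studies discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arm:
    """Hypothetical trial arm with a binary 'good outcome' measure."""
    allocated: int    # participants allocated to this arm
    followed_up: int  # participants with outcome data at follow-up
    successes: int    # good outcomes among those followed up

    def __post_init__(self) -> None:
        if not 0 <= self.successes <= self.followed_up <= self.allocated:
            raise ValueError("need 0 <= successes <= followed_up <= allocated")
        if self.followed_up == 0:
            raise ValueError("no participants were followed up")


def complete_case_rate(arm: Arm) -> float:
    """Success rate among those followed up (ignores attrition)."""
    return arm.successes / arm.followed_up


def worst_case_rate(arm: Arm) -> float:
    """Success rate assuming everyone lost to follow-up had a poor outcome."""
    return arm.successes / arm.allocated


def risk_difference(intervention: Arm, control: Arm, rate) -> float:
    """Intervention rate minus control rate under the chosen assumption."""
    return rate(intervention) - rate(control)


# Hypothetical counts: attrition is much higher in the intervention arm.
intervention = Arm(allocated=200, followed_up=120, successes=60)
control = Arm(allocated=200, followed_up=180, successes=72)

print("complete case:", round(risk_difference(intervention, control, complete_case_rate), 3))
print("worst case:   ", round(risk_difference(intervention, control, worst_case_rate), 3))
```

With these hypothetical counts the complete-case difference is about 0.10 in favour of the intervention, whereas the worst-case difference is about -0.06. The reversal illustrates how differential attrition can produce a misleadingly large effect size.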

Potential confounders associated with the intervention

There are arguments for linking the theoretical underpinning of intervention design, public involvement in developing interventions, and the geographical or organisational scope of interventions with the presence or absence of randomisation and effect size.

Theoretical underpinnings

Policy interventions pose serious challenges to evaluations of effectiveness because they may be large and difficult to replicate consistently, and have diffuse boundaries. Evaluation methodologies differ in their responses to such challenges. RCTs, and systematic reviews of RCTs, are the methods of choice employed by public health physicians wishing to elucidate causal effects in variable circumstances. These methodologies emphasise the need to reduce bias by employing randomisation, preferably with blinded allocation to treatment and outcome measurement, minimising attrition, and analysing according to the intended treatment. Examples include community interventions for preventing smoking in young people,51 computerised support for prescribing practice52 and day care for preschool children. 53

By contrast, many social scientists are known to employ different theories from experimentalists evaluating policy interventions. The relatively new profession of health promotion specialists21 has favoured ‘an approach to evaluation that implicitly acknowledges the need for outcome data but explicitly concentrates on process or illuminative data that helps us understand the nature of that relationship’. 54 The centrepiece of the health promotion paradigm is the concept of empowerment – enabling people to increase control over, and to improve, their own health. Empowerment claims to attribute responsibility to people not for the existence of a problem, but for finding a solution to it. The goal is then ‘full and organised community participation and ultimate self-reliance’. 55 This approach is endorsed by Arblaster56 in a systematic review of the effectiveness of health service interventions aimed at reducing inequalities in health. The review concluded that characteristics of successful interventions specifically aimed at reducing health differentials include ensuring interventions address the expressed or identified needs of the target population, and the involvement of peers in the delivery of interventions. The tradition of community development rests heavily on public involvement, and we expect community-based interventions to include the public more often in identifying the aims of the intervention, and/or participating in its development. Examples include impact evaluations of a large-scale social marketing initiative to encourage fruit and vegetable consumption57 and of a bar-based, peer-led community-level intervention to promote sexual health among gay men. 58 With this understanding we anticipate evaluations of community development to be more theoretically informed from the tradition of social science, and less subject to randomisation.
This expectation is supported by a comparison of the CONSORT (Consolidated Standards of Reporting Trials) statement (a checklist and flow chart, to help improve the quality of reports of RCTs) and the TREND (Transparent Reporting of Evaluations with Nonrandomized Designs) statement. The TREND statement, unlike the CONSORT statement, seeks information about theories used in designing behavioural interventions. 36,59

In summary, the public health approach has tended to emphasise randomisation but not public involvement or community-based approaches, whereas in health promotion the reverse is true. Within public health the expectation is that randomisation leads to more conservative estimates of effect size; within health promotion the expectation is that public involvement leads to interventions with greater effect sizes.

Similarly, divergent views about appropriate methods for evaluating interventions are found in the areas of social welfare44 and education25 where the Centre for Evidence-Based Social Services (www.ex.ac.uk/cebss/introduction.html) and the Evidence-based Education Network UK (www.cemcentre.org/ebeuk/) stand out from many of their British professional colleagues in social welfare and education, respectively, as advocates for randomised evaluation.

Setting and boundaries of the intervention

In the area of public policy, community-wide, regional or national interventions may be less easily manipulated for the purposes of evaluation than individual or institutionally based interventions. Standardised implementation of interventions may be more difficult across large communities, regions or whole countries than in single organisations, and such interventions may therefore be less effective or more variable in effectiveness.

Interventions with a broader reach (communities, regions, nations) have more diffuse boundaries than those set within institutions. Randomisation is less often applied to community, regional or national interventions. Clustered trials are more appropriate for these and some organisational level interventions, in order to reduce the likelihood of participants experiencing comparison interventions to which they have not been allocated. However, clustering reduces the power of a trial, so clustered evaluations are less likely to show statistically significant effectiveness. The solution is to increase the size of the trial, yet recruitment can be particularly challenging in community settings. Moreover, attrition may be greater in larger scale interventions, where tracking of individuals is more difficult than within an organisation (see Attrition).
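The loss of power from clustering is commonly quantified with the design effect, 1 + (m - 1) × ICC, where m is the cluster size and ICC is the intracluster correlation coefficient. The sketch below applies that standard formula under the simplifying assumption of equal cluster sizes; the function names and the example figures are hypothetical and are not drawn from the studies reviewed here.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation from cluster allocation, assuming equal cluster sizes."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie between 0 and 1")
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_participants: float, cluster_size: float, icc: float) -> float:
    """Number of independent participants that would give the same precision."""
    return n_participants / design_effect(cluster_size, icc)


# Hypothetical example: 20 schools of 50 pupils each, with an assumed ICC of 0.05.
n_total = 20 * 50
deff = design_effect(cluster_size=50, icc=0.05)
n_eff = effective_sample_size(n_total, cluster_size=50, icc=0.05)

print(f"design effect: {deff:.2f}")
print(f"effective sample size: {n_eff:.0f} of {n_total} participants")
```

In this hypothetical case the design effect is roughly 3.45, so 1000 pupils carry about as much information as 290 individually randomised participants. This is why a clustered trial needs to be larger to detect the same effect.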

Providers of the intervention

Community development and peer delivery specialists value health promotion theory and process evaluations more than RCTs. These interventions may therefore be found to have less randomisation. Theories underpinning community development and peer delivery anticipate more effective interventions through their greater relevance. Characteristics of interventions effective for reducing health inequalities include community commitment and peer delivery. 56 Examples in the area of smoking cessation include the non-randomised evaluation of the Wessex Healthy School Award where the intervention was delivered by the school community. 60

In contrast, clinicians design and deliver their own interventions and evaluations, and work in a culture that favours randomisation; for instance, many of the smoking cessation interventions for pregnant women are delivered by health professionals and evaluated by RCTs. 61

Also, working in the area of criminology, Lipsey and Wilson62 have shown a statistical association between randomisation and effect size in ‘demonstration’ projects in which the researcher had greater control of both the intervention and randomisation. Our data set of health promotion evaluations provides an opportunity to test this association in another area.

Potential confounders associated with outcomes

The design of the evaluation provides a wealth of potential confounders. Among these are the choice of outcome domains (e.g. knowledge, attitudes, behaviour or health) and the choice of outcome measures (‘hard’ or ‘soft’). These are considered below.

Choice of outcome domains

The impact of health education has traditionally been considered in terms of changes in knowledge, attitudes, behaviour and health. Kirkpatrick’s63 hierarchy of outcomes from the policy area of professional training presents the higher level outcomes (health and behaviour) as harder to attain than lower level outcomes (knowledge and attitudes). Many broader theories of health behaviour present knowledge and positive attitudes as necessary but not sufficient for improved behaviour and health. 64 Thus there is support from these two different policy areas of professional training and health behaviour change for the argument that outcomes in the domains of knowledge, attitudes, behaviour and health are successively more difficult to influence and therefore associated with lower effect sizes.

The choice of outcomes may be strongly influenced by the intervention setting. For instance, the measurement of any health outcome may be easier in a clinical setting where randomisation is also more readily accepted by staff. Following this argument, evaluations with health outcomes are more likely to be associated with patient populations than community populations. For instance, generating evidence about smoking cessation in pregnancy lends itself to short-term health outcomes such as birth weight, gestational age at birth, perinatal mortality, method of delivery, and measures of anxiety, depression and maternal health status in late pregnancy and after birth, as seen in a systematic review of 64 RCTs (51 RCTs and six clustered RCTs). 61 Such data can be easily collected in a clinical setting where randomisation is also feasible. Similarly, in a review of smoking cessation for hospitalised adults, nine of the 17 included studies (16 RCTs, one quasi-RCT) measured death of the patient as well as abstinence from smoking. 65 Both these reviews included randomised or quasi-randomised trials.

In contrast, a review of community interventions for preventing smoking in young people51 found that over half the controlled trials were non-randomised, and outcomes were restricted to knowledge about the effects of smoking, attitudes to smoking, intentions to smoke in the future and smoking cessation.

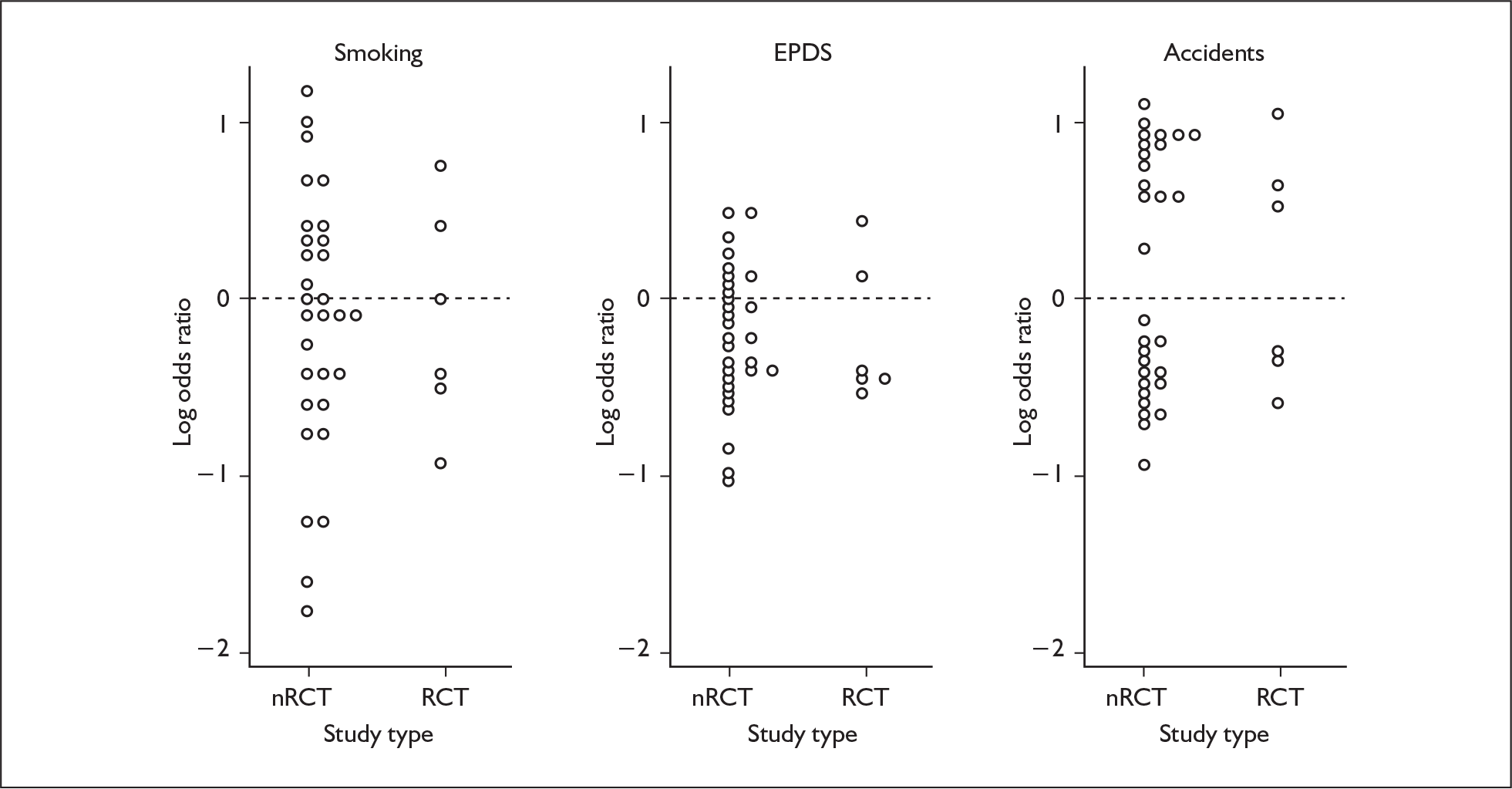

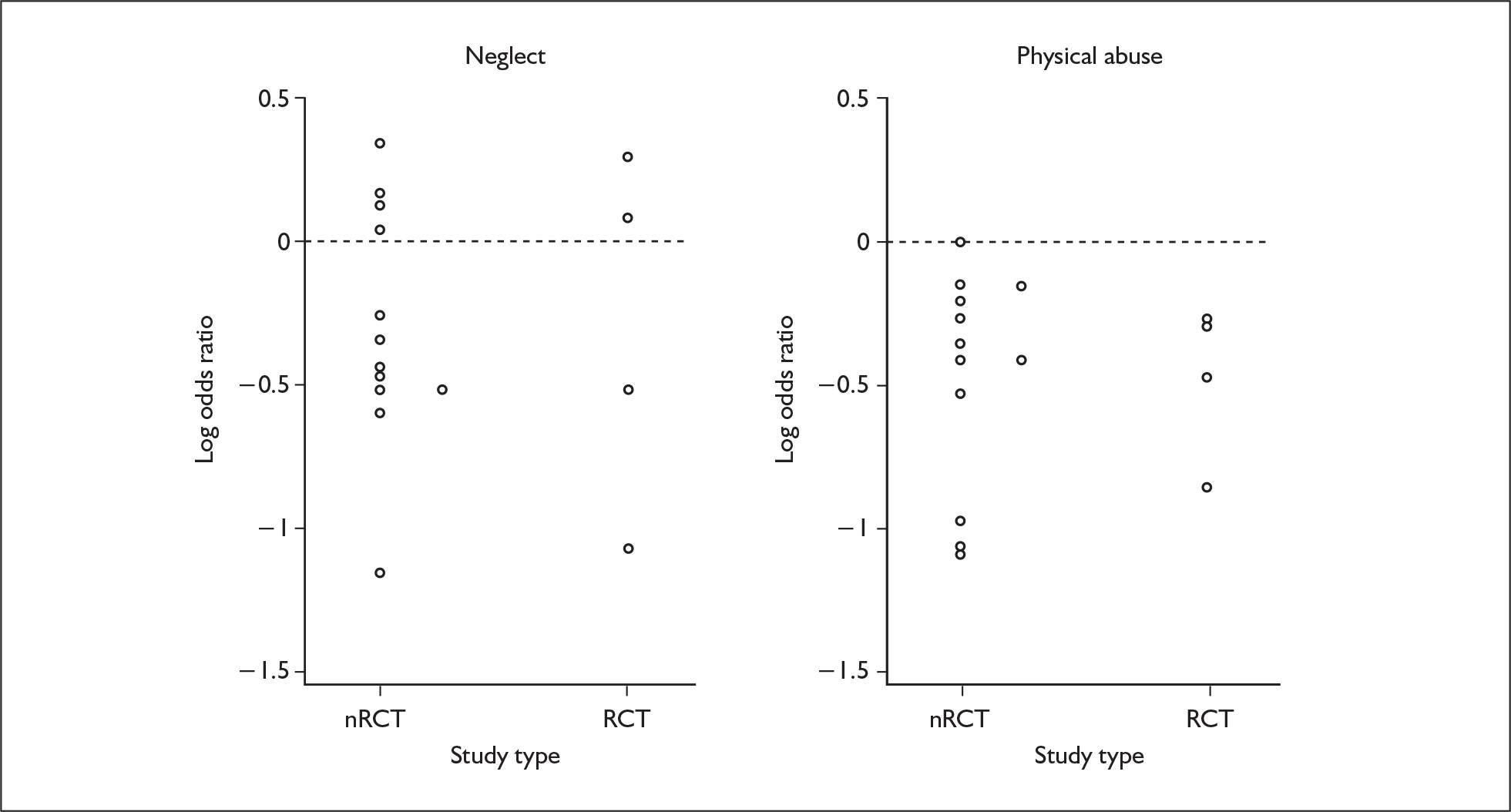

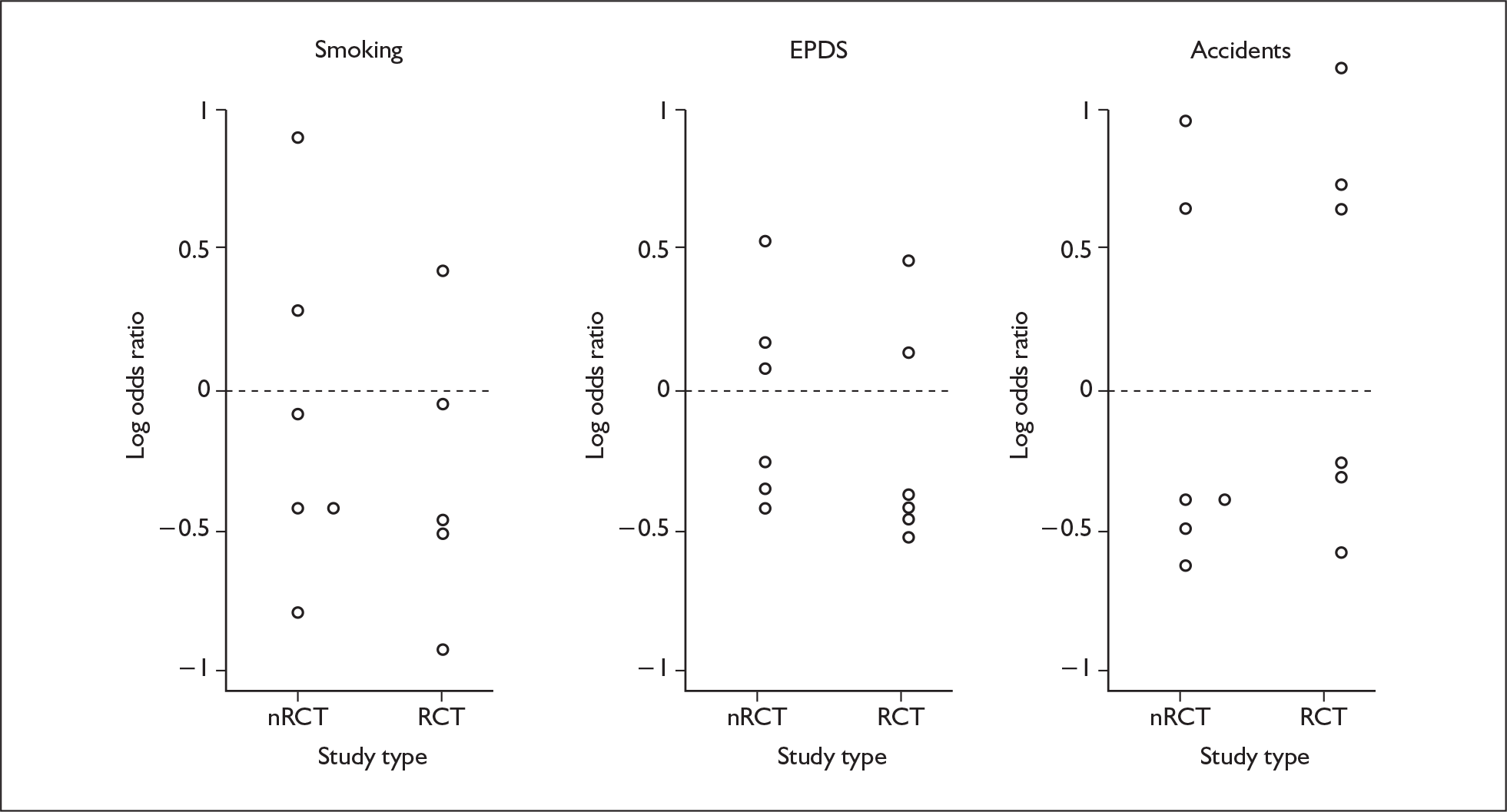

Choice of outcome measures