Notes

Article history

The research reported in this issue of the journal was commissioned by the National Coordinating Centre for Research Methodology (NCCRM), and was formally transferred to the HTA programme in April 2007 under the newly established NIHR Methodology Panel. The HTA programme project number is 06/92/05. The contractual start date was in April 2007. The draft report began editorial review in September 2009 and was accepted for publication in May 2010. The commissioning brief was devised by the NCCRM who specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Jonathan Beard is a member of the Curriculum Development and Assessment Group of the Royal College of Surgeons of England.

Permissions

Copyright statement

© 2011 Queen’s Printer and Controller of HMSO. This journal is a member of and subscribes to the principles of the Committee on Publication Ethics (COPE) (http://www.publicationethics.org/). This journal may be freely reproduced for the purposes of private research and study and may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Introduction

The art of medicine is to be learned only by experience, ‘tis not an inheritance; it cannot be revealed. Learn to see, learn to hear, learn to feel, learn to smell, and know that by practice alone can you become an expert.

Sir William Osler, 1919 (reference 1)

Background and rationale for the study

Sir William Osler was only partially correct. Experience is vital, but, as Halsted observed,2 ‘Experience can mean doing the wrong thing over and over again’. Becoming an expert also requires feedback, which is informed by assessment. Assessment is therefore the cornerstone of education and training, driving both teaching and learning, and shaping the overall nature of a curriculum. Within the context of postgraduate medical education, an assessment system may assume a regulatory role, by ensuring the quality of training delivered and educational standards for the purposes of professional regulation, clinical governance and patient safety.

The principal consideration of a well-designed and -evaluated assessment system is to ensure that the assessment methods adopted are valid, reliable, acceptable and cost-effective and have educational impact. Evidence of validity and reliability is essential for fair and defensible assessments and a prerequisite for making the high-stakes assessment decisions that allow progression in training and certification. All assessment systems must provide evidence of assessment rigour, in particular for identifying underperforming doctors who could compromise patient safety. The development of robust methods of assessment is axiomatic as they underpin the current competency-based assessment systems and curricula of all UK postgraduate training programmes.

The evolution of surgical training in the UK

Surgical training in the UK has been in a state of constant evolution since the 1990s. Radical changes to the regulation of surgical training and reforms in educational policy, with fundamental shifts in the delivery of surgical care and public attitudes towards surgery, have collectively driven the modernisation towards competency-based surgical curricula.

Until the Calman report3 initiated changes in the structure of postgraduate medical training, the Halstedian model of ‘surgical preceptorship’ had been used with modifications for over a century.2 The craft specialties, including surgery as well as medical and interventional specialties, taught technical and surgical procedures through clinical exposure and experience within lengthy training programmes. Trainees were required to complete a set number of years of training and pass knowledge-based exams in their specialty to achieve their Certificate of Completion of Training (CCT). Technical skills and non-technical skills including teamwork, decision-making and communication were not formally assessed in exams or in the workplace.

There has been growing pressure to introduce regular assessment of practical skills or competence in the interests of public, political and professional accountability. The assessment of practical skills was initially encouraged by the Joint Committee for Higher Surgical Training (JCHST) in 2001. This was closely supported by the 2002 consultation paper Unfinished Business,4 which set out the case for major reforms in postgraduate training, concluding that ‘a new Postgraduate Medical Education and Training Board [PMETB] will be required to ensure that, throughout training, all assessments and examinations … are appropriate, valid and reliable’.

The PMETB assumed statutory responsibility in 2005, its remit being to establish and maintain standards for all postgraduate assessment programmes and curricula.5 The PMETB has required all postgraduate specialties to provide comprehensive curricula, in which the competencies defined in the syllabus are blueprinted to the assessment programme. All postgraduate assessment programmes required urgent reform to be able to assess those competencies that could not be assessed adequately by examinations, in particular technical skills and professional behaviours. For the craft specialties, the formalised assessment of technical skills demanded different methods of assessment. Workplace-based assessments (WBAs) have been implemented to address this gap in assessment programmes.

Workplace- and competency-based assessment

Although competency-based assessment (CBA) and WBA were introduced into medicine relatively recently, accelerated by changes in policy, both originate from, and are now well established within, the field of education. Since the 1970s, educationalists have been concerned with defining aspects of learning, in order to specify the content of training or education periods clearly and to align it with assessment processes. Bloom’s taxonomy6 was one of the first frameworks developed, which divided learning into ‘knowledge’, ‘skills’ and ‘attributes’. This led to the development of learning objectives for defining educational content and learning outcomes for defining assessment content. The outcome-based model of assessment remains the predominant approach to assessment within higher education.7

The competency-based approach to workplace assessment originates from occupational and vocational sectors of education. During the 1980s, there was a political drive to make the UK workforce more competitive globally. Parallel attempts were made to divide aspects of vocational learning into competencies, using functional analysis of job roles, to serve the purpose of assessing occupational competence within vocational training.8 The National Vocational Movement9 produced ‘standards of competence’ and work-based competencies were assessed against these clearly defined outcomes.

The assessment of competencies is highly relevant to medical practice. Competencies define job-related tasks or roles, using applied and integrated aspects of knowledge, skills and attributes. CBA is concerned with the assessment of essential competencies designed to ensure that health professionals perform their job to an acceptable standard of clinical competence. From the late 1990s, this approach to assessment has been adopted widely in health education including nursing,10 undergraduate medical training11,12 and postgraduate medical training.13

Within UK postgraduate training, CBA was implemented under the umbrella of ‘Modernising Medical Careers’.14 This started with the Foundation Programme in 2005,15 which marked the introduction of WBA into postgraduate medical training. That same year, the Orthopaedic Curriculum and Assessment Project (OCAP) (www.ocap.org.uk) was introduced. In 2007 the other surgical specialties participating in the Intercollegiate Surgical Curriculum Programme (ISCP), together with obstetrics and gynaecology (O&G), launched their new competency-based surgical curricula (www.iscp.ac.uk, www.rcog.org.uk). Central to these new curricula are the formal, structured assessments of surgical skill in the workplace, to provide an authentic assessment of day-to-day working practice and to maximise the educational impact of the experience.

It is important to acknowledge that the adoption of WBA has not been solely a response to changes in educational policy. There are many strengths to WBA, as well as limitations, as with any approach to assessment.16 WBA offers a method of assessing job-related competencies that cannot be fully assessed by other assessment methods. Focusing on competencies achieved rather than time served allows for more individualised training and the opportunity to identify those trainees who may need additional support. It is potentially highly valid, assessing what doctors actually do in practice (performance) as well as their ability to modify their performance under different clinical circumstances. Evidence for the reliability of WBA is emerging, although it is currently sparse because of the difficulties involved in collating suitable and sufficient assessments for reliability analysis.

The success of an assessment method is determined by its effectiveness and WBA is no exception. It is important that WBA is shown to translate positive learner reactions and learning outcomes into improvements in clinical performance and patient/health outcomes. Using a modified version of Kirkpatrick’s model,17 described in Freeth et al.,18 four levels of assessment effectiveness can be evaluated. The lowest level, level 1, concerns learners’ reactions and satisfaction with the assessment experience; level 2 concerns a change in learning outcomes; level 3 concerns a change in behaviour (divided into self-reported changes for level 3a and measured changes for level 3b); and level 4 concerns a change in patient outcomes. A review of performance-based assessment, including the use of peer assessment, portfolio, appraisal report and medical audit, highlighted that there are 19 studies providing evidence of positive assessment effectiveness at levels 1, 2 and 3.19 One of these studies reported the audit loop for using SAIL (Sheffield Assessment Instrument for Letters), a WBA method designed to improve the communication between secondary and primary care using referral letters, with every doctor improving their mean scores 3 months after receiving feedback on the quality of their clinic letters.20 It is acknowledged that empirical evidence that WBA improves the routine practice of doctors is lacking. It is a significant challenge to design and implement research that is able to demonstrate changes in patient outcomes for any given assessment method, although it is increasingly recognised that evaluation should focus on programmes rather than on methods.21 This is because assessing performance requires integrated assessment methods within a broad assessment programme. As with clinical evidence guidelines, much of the evidence for WBA is circumstantial. For example, there is evidence that the educational principles of WBA, including one-to-one competency-based instruction22 and giving feedback on performance,23,24 are effective educational interventions. Improvements in training, including changes in attitudes and behaviours towards supervised training with feedback, should be viewed as the first necessary step towards long-term improvements in patient care and surgical safety. Furthermore, a significant outcome of using WBA is that the training needs of trainees in difficulty and underperforming trainees, not previously identified, can be recognised more easily and addressed with appropriate remediation.

The introduction of WBA is a challenge for all stakeholders, and issues of implementation, including the time and resources required, constitute potential limitations. However, there are several compelling reasons that make WBA more suited to the current surgical training climate than the examinations and time-served model.

The opportunity to gain surgical training and experience in the operating theatre has decreased significantly since the Calman report initiated shortened surgical training time.3 We, and others, have shown a reduction in the number of operations undertaken and the level of surgical competence achieved by surgical trainees following the Calman reforms.25 Furthermore, the European Working Time Directive (EWTD) was enacted into UK law in 1998 and has legislated for a step-wise reduction in the working hours of doctors in training. This directive undoubtedly has beneficial objectives, both in the interests of patient safety and quality of care and in terms of providing doctors with a better work–life balance and good training. The maximum number of hours trainees can stay in the workplace is 48 hours per week from August 2009, with a voluntary option of working 56 hours.26 To balance training with service provision, it has been necessary to institute full shift rotas for the majority of training posts, requiring trainees to work regular night shifts with a loss of daytime supervised surgical training. These changes in working practices equate to an overall reduction in surgical training time in the operating theatre, with increasing amounts of elective surgery being performed by consultants. Under these conditions, the traditional apprenticeship model is no longer appropriate for the current training structure.

The demonstration of surgical competence ensures that the dual concerns of patient safety and training quality are satisfied. It must be emphasised that WBA is not a substitute for procedural experience. The two are complementary in terms of achieving surgical competence. The question of how many hours or procedures are required to train a surgeon has yet to be answered.

The adoption of CBA and WBA tools is designed to improve the efficiency of surgical training, by directing both clinical supervisor (or consultant supervisor) and trainee to use each supervised operation (or other clinical encounter) as an opportunity for objective assessment and constructive feedback. Direct supervision and feedback are integral to WBA practice, and should take place as a formalised part of the assessment process, in contrast to the more ad hoc nature of supervision and feedback often observed within the apprenticeship training model. In this way, surgical training using WBA becomes a focused educational activity with joint trainee and clinical supervisor responsibility, to meet the challenge of an overall reduction in training time. Specific to the operating theatre, WBA tools can be completed with feedback in the time between surgical cases.

Principles of assessment

Assessment theory and practice can differ substantially. To address this gap between theory and practice, we illustrate the key concepts from assessment theory to provide a relevant and applied background for WBA.

Assessment is concerned with a process of measuring a trainee’s knowledge, skills, judgement or professional behaviour against defined standards. The WBA tools used within the current surgical curricula use explicit judgements against defined performance-based criteria. Assessment is distinct from appraisal. The latter is designed to review the progress and performance of an individual trainee. It is a planned process, focusing on achievements, future learning and career guidance, in which the criteria are usually internal and individual.

This report is focused on the assessment of surgical skills, whereby the skills of surgeons in training are judged against a defined reference. Assessments can be referenced in two ways:

- Criterion-referenced assessment compares a trainee’s performance to an absolute standard. Such a benchmark might be the ability to perform a procedure competently and independently.
- Norm-referenced assessment compares a trainee’s performance with other trainees in the same cohort. Such a reference might include a below average, average or above average performance within a particular cohort.

Criterion referencing, rather than norm referencing, is used within WBA, i.e. a trainee’s performance is not compared with their peers but with a fixed standard. Criterion-referenced assessments assist assessors in making consistent judgements by setting absolute standards of performance and a clear description of the standard expected. Recent consensus statements on WBA support the use of criterion-referenced assessments using rating scales with clear text descriptors.27

At best, norm-referenced assessments imply that the ‘average’ level will increase with time and experience. However, this is inherently more difficult for assessors to determine, as it depends on the performance of that particular cohort and relies on intuitive assessor judgements.
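The difference between the two referencing approaches can be made concrete with a minimal sketch. The scores, the 1 to 5 scale, the standard of 4 and the function names below are hypothetical and are not taken from any WBA tool; the sketch only illustrates that a criterion-referenced judgement is unaffected by the cohort, whereas a norm-referenced judgement changes with it.

```python
from statistics import mean


def criterion_referenced(score: float, standard: float) -> bool:
    """Judge a trainee against a fixed standard, ignoring the cohort."""
    return score >= standard


def norm_referenced(score: float, cohort_scores: list[float]) -> str:
    """Judge a trainee relative to the mean score of their cohort."""
    cohort_mean = mean(cohort_scores)
    if score > cohort_mean:
        return "above average"
    if score < cohort_mean:
        return "below average"
    return "average"


# Hypothetical values on an invented 1-5 scale (illustration only).
STANDARD = 4.0
weak_cohort = [2.0, 2.5, 3.0]
strong_cohort = [4.0, 4.5, 5.0]
trainee_score = 3.5

# The criterion-referenced judgement does not depend on the cohort.
print(criterion_referenced(trainee_score, STANDARD))
# The norm-referenced judgement depends on who else is in the cohort.
print(norm_referenced(trainee_score, weak_cohort))
print(norm_referenced(trainee_score, strong_cohort))
```

In this invented example the same trainee falls short of the fixed standard regardless of cohort, yet is rated ‘above average’ in the weaker cohort and ‘below average’ in the stronger one.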

Various benchmarks are used by current WBA tools. Those used by the Foundation Programme and core medical and core surgical training use the standard expected of a trainee at that level of training.28 The procedure-based assessment (PBA) used by both OCAP and ISCP for the specialty index procedures uses the standard expected for certification of completion of training29 with a summary judgement based on the ability of the trainee to perform the procedure independently. The Objective Structured Assessment of Technical Skills (OSATS) used by the Royal College of Obstetricians and Gynaecologists (RCOG) during the course of this study (version in use before February 2009) adopts a pass/fail judgement. The standard against which this judgement is made is not explicit on the form,30 although there are benchmarks within the curriculum and training portfolio that define the standard as that required for independent practice.

Purpose of assessment

Assessment has been shown to drive both learning31,32 and teaching.33 In view of this, it is essential when designing an assessment system that the object and purpose of the assessment are clearly defined to all stakeholders from the outset.

The purpose of an assessment should determine every aspect of its design.34 These aspects include:

- the choice of assessment methods
- the selection of assessment tools
- the way in which the above are combined
- the number of assessments required
- the timing of assessments
- the way in which the outcomes are used to make decisions regarding progression or certification.

The purposes of assessment will be different for the individual trainee being assessed, the training programme, the employer and the public,35 and are presented in Table 1. Some purposes may be shared by different stakeholders whereas there may also be conflicting assessment purposes between different stakeholder groups.

| Stakeholder | Purposes of assessment |
|---|---|
| For the trainee | Provide feedback about strengths and weaknesses to guide future learning; foster habits of self-reflection and self-remediation; promote access to advanced training |
| For the curriculum | Respond to lack of demonstrated competence (targeted training); certify progression in training over time; certify achievement of curricular outcomes; foster curricular change; create curricular coherence; cross-validate other methods of assessment in the curriculum; establish standards of competence for trainees at different levels |
| For the institution | Discriminate among trainees for progression in training or access to subspecialty training; guide a process of institutional self-reflection and self-remediation; develop shared educational values among a diverse community of educators; promote faculty development; provide data for educational research |
| For the public | Certify competence of doctors in training; identify unsafe or poorly performing doctors |

Assessment has multiple purposes. However its educational purpose can be broadly divided into:

- Assessment for learning (alternative terms are formative or low-stakes assessment). This is primarily intended to aid a trainee’s learning through the provision of constructive feedback, identifying good practice and areas for development.
- Assessment of learning (alternative terms are summative or high-stakes assessment). This is primarily aimed at determining a level of educational achievement relative to a defined standard. Such assessments are infrequent and usually take place at set times to permit progression in training or certification.

Some assessments can serve both purposes and there is a continuum between these two poles. In-training assessment may seek to integrate both assessment purposes into an overarching assessment framework, in recognition of the fact that they may be complementary in reinforcing feedback and self-directed learning.36

Workplace-based assessment has a particular strength for formative assessment (i.e. assessment for learning), through the direct observation of trainees by trained assessors and the provision of immediate feedback. However, an assessment of learning for progression still needs to be made to inform the annual review of competency progression (ARCP), using all sources of evidence including WBA. Conflict exists with the use of WBA to serve both educational purposes, and this is carefully considered within the most recent policy documents on WBA.16,27 The importance of making the purpose of assessment explicit to stakeholders is now recognised as fundamental to the successful implementation of WBA.

Levels of assessment

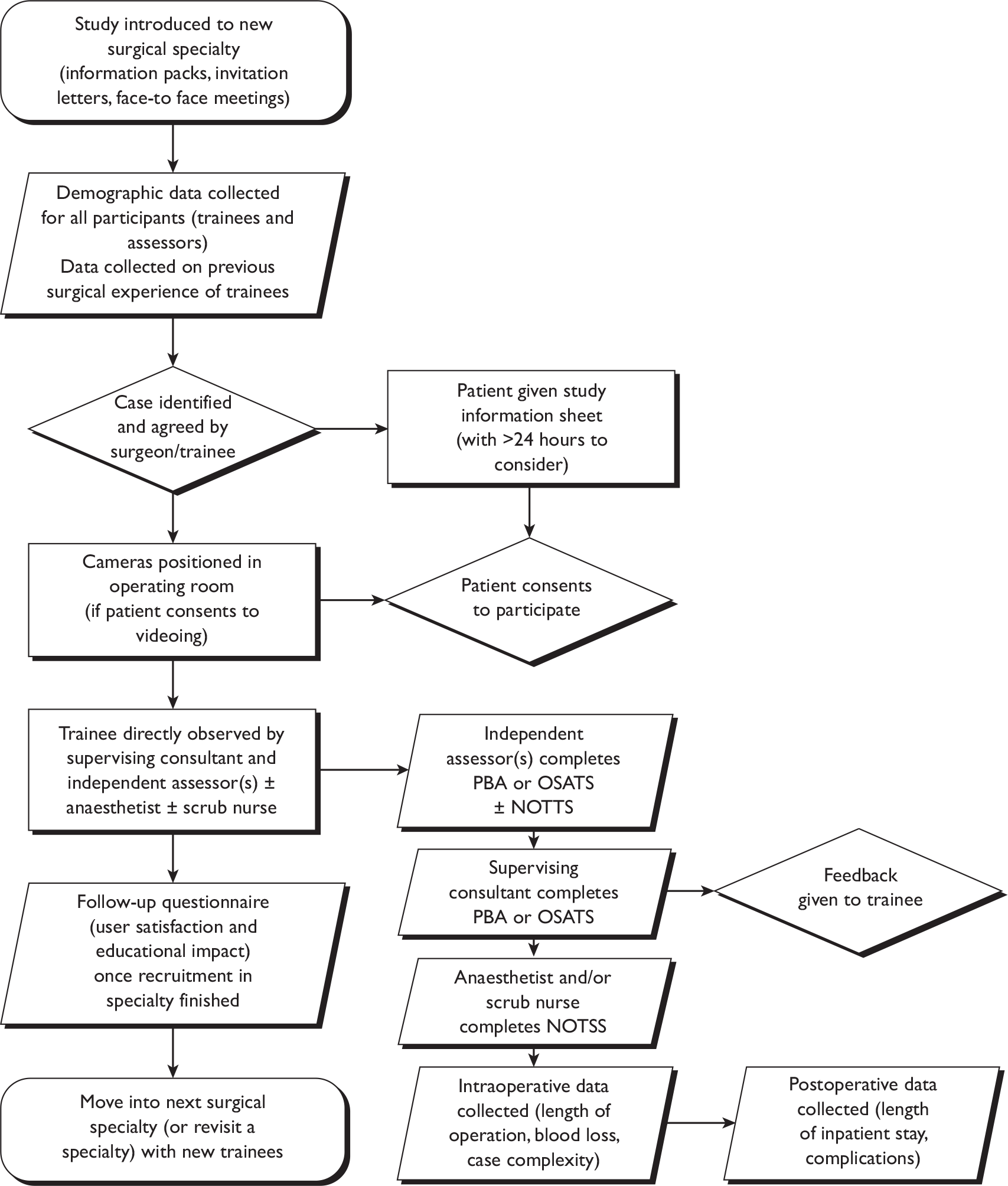

There are different levels of assessment that can be targeted by a given method of clinical assessment. Miller’s assessment pyramid37 describes a simple hierarchy for the development and assessment of clinical skills. The four levels of assessment are illustrated here with reference to surgical skill assessment methods (Figure 1). They describe fundamentally different assessment constructs, in terms of both the nature of learning they require and also the situational context of the learning. The strength of Miller’s model has been for guiding curricular design and the selection of assessment methods to target the appropriate and intended levels of assessment.

FIGURE 1. Relationship between Miller’s pyramid and methods of assessment. EMQs, extended matching questions; MCQs, multiple choice questions.

The lower two levels of Miller’s pyramid describe the cognitive domains of knowledge (‘knows’) and integrated and applied knowledge (‘knows how’). In terms of surgical skills assessment, the first level could encompass the simple recall of anatomy and physiology facts, with a suitable method of assessment being a factual test of knowledge. The second level could relate to applied anatomy and physiology, and this would be appropriately targeted by clinically based tests (e.g. problem-based scenarios, extended matching questions) to assess the deeper nature of applied knowledge. These levels of assessment are mostly addressed within both undergraduate and postgraduate assessment systems using written examination methods. The top two levels of the pyramid are the behavioural domains that Miller termed ‘shows how’ and ‘does’, distinguishing competence from performance. Competence can be defined as ‘what a person does in a controlled representation of professional practice’ whereas performance is ‘what a person does in actual professional practice’ within the workplace.38 Both of these assessment constructs are addressed within postgraduate assessment programmes using a variety of assessment methods. Improvements in competence and performance usually come from experience (practice) combined with constructive feedback,39 with feedback aimed at providing ‘an informed, non-evaluative, objective appraisal that is intended to improve clinical skills’.40

A complex relationship exists between competence and performance. Competence cannot necessarily predict performance, and this has been demonstrated in a number of medical education contexts using a variety of assessment methods.41–43 Therefore, the use of CBAs is inappropriate for the assessment of performance.

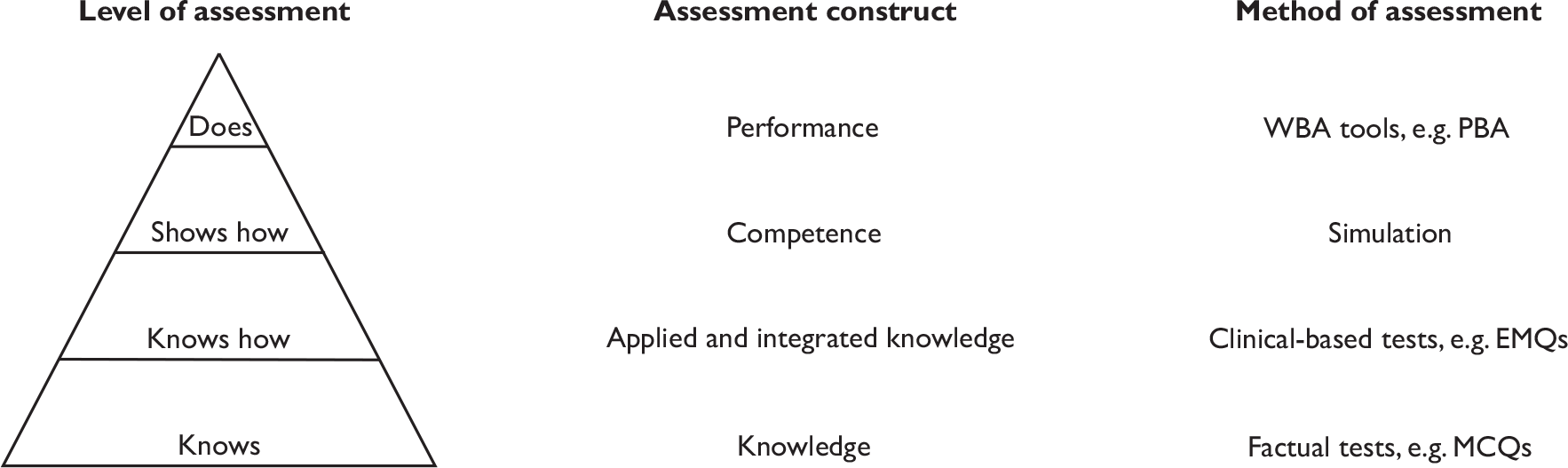

The Cambridge model described by Rethans et al.38 is an extension of Miller’s pyramid. It conceptualises performance as a window to competence, illustrating that competence is a prerequisite for performance with several additional factors that influence the normal day-to-day performance of doctors (Figure 2). These were classified in the model as individual-related influences (e.g. physical and mental health of the doctor, state of mind at the time of assessment, relationships with peers) and system-related influences (e.g. time pressures, guidelines, facilities). In the case of surgical skill assessment there could equally be added case-related influences (e.g. case complexity or type of procedure).

FIGURE 2. The Cambridge model of performance.

Workplace-based assessment aims to target the level of performance, assessing most closely the actual behaviour of doctors in the workplace. Individual-, system- and case-related influences cannot be fully controlled for in the context of WBA. However, WBA moves beyond the theoretical construct of assessing professional practice under ‘controlled’ conditions (competence) and seeks to assess authentic performance in the workplace. In practice, the distinction between competence assessment and performance assessment is becoming less clear cut as practising doctors are subject to continuous assessment in the workplace using methods such as multisource feedback and analysis of patient records/letters. One important consideration when using WBA is that case-related and judge-related effects are more dominant influences than for other performance-based assessments.

Performance-based assessment

Performance-based assessment is concerned with assessing complex, ‘higher order’ knowledge and skills in the workplace context in which they are used, generally with open-ended tasks that require substantial assessor time to complete.44 The adoption of WBA tools to provide structured performance-based assessment of surgical skills in the operating theatre is a relatively recent development within surgical training programmes. However, there is considerable experience in using performance-based assessment in other medical education contexts, including covert standardised patients45 and peer assessment.43 The lessons from these experiences44,46 are summarised here to highlight the particular considerations of performance-based assessments:

- The behaviour and performance of a doctor are highly dependent on the nature of the problem or task undertaken. The level of performance achieved for one problem or task is not a good predictor for subsequent ones – a finding termed case specificity.47,48 Therefore, performance-based assessments need to sample widely and consider both context (situation/task) and construct (knowledge/skill/attitude), as complex interactions exist between these dimensions. For example, adequately assessing surgical performance would require assessment of different operations that demand different decision-making and technical skills.

- Assessors make subjective judgements even when using assessment methods that include clear and objective descriptors as the performance criteria. It is vital to ensure that assessment criteria are rigorously developed and well understood by assessors through training. Performance-based assessments should also draw upon the judgements of as many assessors as feasible in order to limit the impact of assessor bias on assessment scores.34 For example, an assessment system that adopts WBA needs to stipulate that sufficient numbers of assessors are involved in assessing the performance of an individual doctor.

- The most complex aspects of performance (e.g. judgement and decision-making) are those for which it is most difficult to devise observable and/or meaningful criteria that will allow adequate assessment. However, they remain a vital part of assessing overall performance. It is important that performance-based assessments seek to assess broadly, rather than focusing assessment on the easy-to-assess aspects. Global rating scales have the ability to assess these more complex dimensions of performance.49

- The use of systematic methods to select assessment content (e.g. task analysis and Delphi processes using specialty experts) is a good approach for designing valid assessment methods. However, it may not be possible or feasible to develop assessment methods to include all of the validated content.

- Performance is a unified assessment construct and it is difficult to identify obvious planes of cleavage. Although there have been systematic efforts directed towards defining components of performance50 and developing checklists to allow assessment of specific competencies, there is evidence that expert judgement using global rating scales provides a superior assessment of performance. When detailed checklist scales are compared with global rating scales in a variety of contexts, assessors produce more reliable assessments using global scales.51,52 There is also evidence that trainees find checklists helpful both for training and for informing feedback.53 Therefore, the roles of checklists and global ratings are complementary within performance-based assessment.

- Assessing performance requires the triangulation of assessment methods to facilitate an overall judgement. There is no one assessment method that can sample across all relevant contexts and constructs or that should be relied upon singularly for the assessment of performance.54 This is the approach that has been adopted by the General Medical Council’s (GMC’s) Performance Procedure, for which several assessment methods have been selected to assess poorly performing doctors.55 These target levels of both competence and performance in the light of their complex and non-linear relationship. It is also the approach used within the summative annual review process for doctors in training (termed ARCP), which judges the suitability of each trainee to progress or complete training. The ARCP panel uses evidence from multiple sources (WBA, educational supervisor reports and examinations) to judge if the trainee’s performance is satisfactory or otherwise. The breadth of the assessment methods also allows for specific deficiencies in performance to be highlighted and addressed within the ARCP; for example, where there are specific concerns about a trainee’s operative skill, a collection of WBAs over the year of training may fail to show progression in training.

These unique considerations need to be fully appreciated when designing and implementing performance-based assessment for both research and training purposes. Our research methodology, outlined in Chapter 2, is aligned to these considerations for researching performance-based assessment methods.
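The case for sampling widely across cases and assessors can be illustrated numerically. The sketch below uses the Spearman-Brown prophecy formula from classical test theory, which is not a method described in this chapter and which assumes that each additional assessment is an equally reliable, parallel measurement (an assumption that case specificity and assessor effects make optimistic in practice). The single-assessment reliability of 0.3 and the target of 0.8 are hypothetical values chosen purely for illustration.

```python
from math import ceil


def spearman_brown(single_reliability: float, n_assessments: int) -> float:
    """Predicted reliability of the mean of n parallel assessments."""
    r = single_reliability
    return n_assessments * r / (1 + (n_assessments - 1) * r)


def assessments_needed(single_reliability: float, target: float) -> int:
    """Smallest number of parallel assessments predicted to reach the target."""
    r = single_reliability
    return ceil(target * (1 - r) / (r * (1 - target)))


# Hypothetical reliability of one observed case scored by one assessor.
SINGLE = 0.3

# Predicted reliability as more assessments are averaged.
for n in (1, 2, 4, 8):
    print(n, round(spearman_brown(SINGLE, n), 2))

# Number of assessments predicted to reach a hypothetical target of 0.8.
print(assessments_needed(SINGLE, target=0.8))
```

Under these idealised assumptions, predicted reliability rises steadily with the number of assessments, and about ten would be needed to reach the illustrative target. Real WBA data would usually call for a fuller analysis of case and assessor effects than this formula allows.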

Designing and evaluating assessment methods

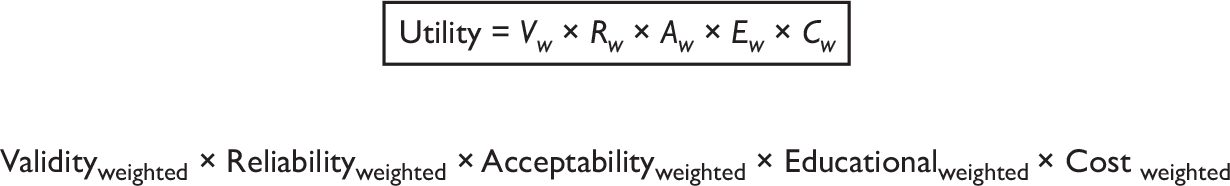

All assessment methods need to balance rigour (reliability and validity) against practicality (feasibility, cost and acceptability).34 Van der Vleuten’s utility index56 offers a useful conceptual framework for assessment design and evaluation. The principal consideration of a well-designed and -evaluated assessment system is to ensure that its assessment methods are valid, reliable, acceptable and cost-effective and have educational impact. The ideal assessment method would possess all these essential measurement characteristics. However, the choice of assessment methods adopted within any training programme should be determined by balancing these conflicting considerations to fit the purpose of the assessment (Figure 3). For example, a high-stakes examination will need to weight reliability and validity more heavily, whereas an in-training assessment may focus upon educational impact. All assessment methods demand acceptability.
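This balancing of characteristics is often summarised as a multiplicative model, in which a method that scores zero on any one characteristic has no overall utility however strong it is on the others. The sketch below is one illustrative reading of that idea and not a formula given in this chapter: the 0 to 1 scores, the use of weights as exponents, the criterion names and all example values are assumptions.

```python
from math import prod

CRITERIA = ("reliability", "validity", "educational_impact",
            "acceptability", "cost_effectiveness")


def utility(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted multiplicative utility; each score is assumed to lie in [0, 1]."""
    return prod(scores[c] ** weights[c] for c in CRITERIA)


# Hypothetical profile of one assessment method (illustrative values only).
method = {"reliability": 0.6, "validity": 0.9, "educational_impact": 0.8,
          "acceptability": 0.7, "cost_effectiveness": 0.7}

# Hypothetical weightings: a high-stakes examination emphasises reliability and
# validity; an in-training assessment emphasises educational impact.
high_stakes = {"reliability": 2.0, "validity": 2.0, "educational_impact": 0.5,
               "acceptability": 1.0, "cost_effectiveness": 1.0}
in_training = {"reliability": 0.5, "validity": 1.0, "educational_impact": 2.0,
               "acceptability": 1.0, "cost_effectiveness": 1.0}

print(round(utility(method, high_stakes), 3))
print(round(utility(method, in_training), 3))
```

With these invented numbers the same method has a higher utility under the in-training weighting than under the high-stakes weighting, because its weakest characteristic (reliability) is penalised more heavily in the latter.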

Evidence of validity and reliability is essential for fair and defensible assessments, particularly in identifying underperforming doctors who could compromise patient safety. 48 The PMETB standards of assessment, set in 200457 and recently updated,5 have stipulated that postgraduate training programmes provide reliability and validity evidence for their assessment methods. By design, the focus of interest for UK training programmes has been in ensuring that their assessment methods are defensible. However, there is a more recent consensus of opinion that successful implementation of WBA demands that acceptability and feasibility issues be addressed. 27

The psychometrics of assessment

Validity and reliability are metric properties, termed psychometrics when they concern the quantitative measurement of psychological variables such as behaviour and cognition. Psychometrics, originally a branch of psychology, has developed an increasingly important place within the health sciences for measuring complex constructs such as quality of life and the assessment of clinical performance. This report is primarily focused on evaluating the assessment of surgical performance. The validity and reliability of different assessment methods are carefully measured. We will briefly outline the conceptual basis of these measurements, relating them closely to the context of performance assessment.

Validity is the extent to which a result reflects the construct it intends to measure and not something else. 58 To establish the validity of assessments intended to measure surgical performance requires evidence to support whether or not the assessment actually measures surgical performance. There are many sources of validity evidence that can be drawn upon in making judgements of assessment validity to allow a meaningful interpretation of assessment scores. 59 As a bare minimum, a valid assessment should appear to be measuring what is intended (face validity) and must include the relevant performance criteria and elements of the skill or behaviour being tested (content validity). There should also be agreement with other assessments intended to measure the same construct (criterion validity). For example, tool A and tool B both assess non-technical surgical skills and, despite being different assessment methods that are completed separately, could be expected to agree as they measure the same construct of surgical performance. Construct validity concerns the extent to which assessment scores correspond to an assessment construct, which could be an attribute, ability or skill, as predicted by some rationale or theory. It is measured by testing hypotheses about the construct of interest (for example, surgical skill) and evaluating whether these are confirmed or refuted by the assessment scores. A surgeon’s surgical skill could be predicted to improve with years of training and previous surgical experience. If one correctly hypothesises that more senior and experienced surgeons will obtain higher scores for surgical skill (because theory shows that the acquisition of surgical expertise requires many hours of surgical experience), the assessment may have construct validity. Construct validity should be demonstrated by an accumulation of evidence. 
There will be more confidence in the assessment score when more strategies are used to demonstrate its construct validity, provided the evidence is convincing.

Predictive and consequential validity both provide more stable evidence of the validity of assessments. Predictive (outcome) validity is the extent to which an assessment score predicts expected scores on some criterion measure. For example, the validity of an assessment of surgical performance would be demonstrated by a significant correlation of scores with surgical outcomes or clinical supervisor performance ratings. Large sample sizes and follow-up data collection are required to demonstrate predictive validity. Consequential validity considers the educational impact of assessments, in particular what trainees learn and how they learn it, to evaluate whether assessments encourage good or poor learning behaviours. For example, WBAs of surgical skill are designed to aid learning through supervised operating and feedback from clinical supervisors, but if they failed to support trainees to gain operating experience and develop their surgical skills, their consequential validity would be judged as low.

Reliability is the extent to which an assessment score reflects all possible measurements of the same construct. 58 It reflects the reproducibility of assessment scores. A reliable test should give the same result if repeated or if a different assessor is used. 60 An evaluation of reliability should involve a clear statement of the circumstances that the results are meant to represent. 34 For example, this particular assessment has demonstrated good reliability for assessing the surgical skill of all levels of vascular specialist registrars, using no fewer than four assessors on four cases, within a typical teaching hospital context.

To understand how big an impact the case, the judge and other important contextual factors have on the assessment score requires that all these sources of error (termed variability) are quantified. Estimating reliability then depends on comparing the effect of assessor-to-assessor and case-to-case variability in scores with overall trainee-to-trainee variability in scores. Assessor and case variability represent the greatest threats to the reliability of WBA. 61 Generalisability theory provides the most robust and meaningful reliability estimates by simultaneously examining assessment scores for these different types of variability (see Glossary for a detailed description). Generalisability theory is the statistical approach used for the analysis of assessment scores within this study.
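The comparison of assessor variability with trainee variability can be illustrated with a deliberately simplified sketch. It assumes a fully crossed design with a single facet (every assessor scores every trainee once), whereas the analysis in this study also involves cases; the function names and the layout of the score matrix are hypothetical and are not taken from the study's analysis. Variance components are estimated from the usual two-way mean squares, and the relative generalisability coefficient is then projected for a chosen number of assessors.

```python
def variance_components(scores):
    """Estimate variance components for a trainee x assessor score matrix.

    scores: list of rows, one row per trainee, one column per assessor
    (hypothetical layout; each cell is a single observed score).
    Returns (var_trainee, var_assessor, var_residual).
    """
    n_p, n_r = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n_p * n_r)
    p_means = [sum(row) / n_r for row in scores]
    r_means = [sum(row[j] for row in scores) / n_p for j in range(n_r)]

    # Mean squares from a two-way layout without replication.
    ms_p = n_r * sum((m - grand) ** 2 for m in p_means) / (n_p - 1)
    ms_r = n_p * sum((m - grand) ** 2 for m in r_means) / (n_r - 1)
    ss_res = sum(
        (scores[i][j] - p_means[i] - r_means[j] + grand) ** 2
        for i in range(n_p)
        for j in range(n_r)
    )
    ms_res = ss_res / ((n_p - 1) * (n_r - 1))

    # Negative estimates are truncated at zero, a common convention.
    var_p = max((ms_p - ms_res) / n_r, 0.0)
    var_r = max((ms_r - ms_res) / n_p, 0.0)
    return var_p, var_r, ms_res


def g_coefficient(var_trainee, var_residual, n_assessors):
    """Relative G coefficient when scores are averaged over n_assessors."""
    return var_trainee / (var_trainee + var_residual / n_assessors)
```

With these definitions, increasing `n_assessors` shrinks the error term, which is why a reliability statement is normally tied to a stated number of assessors and cases.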

The observation of real-time performance in the workplace is essential for achieving the most authentic assessment, as this method approximates to the ‘real world’ as closely as possible. The more frequently WBA is integrated into routine practice, the better the validity of the assessment. 27 Trainers and trainees are being encouraged to use every clinical encounter and surgical case as an opportunity for WBA, moving away from the mini-exam mentality that is produced by infrequent assessment. WBA frequently lacks evidence of reliability because accurate estimates of reliability are difficult to produce, because the methods themselves are new, and because the evaluations have yet to be done. The main threats to reliability include case specificity, variations in case complexity, assessor subjectivity, and differences in rating scales and methods (see Performance-based assessment above). The PMETB has acknowledged the difficulties involved in demonstrating reliability for WBA and encouraged the use of van der Vleuten’s utility index56 (see Figure 3) and the triangulation of evidence from different assessment methods. 62

Approaches to assessing surgical skills

Our intention is to provide an overview of some of the approaches to surgical skill assessment to illustrate the transition to more performance-focused assessment methods. This overview includes formalised assessment processes, whether simulation-based or in the workplace, as well as surrogate measures of surgical skill. The rigour (reliability and validity) of an assessment method is an essential component of its overall utility, which we draw on as the focus for discussing the relative advantages and disadvantages of the various approaches for surgical skill assessment. The literature search for this section was updated in July 2009.

Speed, quality of product and patient outcomes

These three outcomes, while not formalised assessment processes, have been explored for use as surrogate measures of surgical skill.

Operative speed may provide a measure of technical skill, although there is a paucity of literature on this subject. Robert Liston, a London surgeon working before the introduction of anaesthesia, was proud of his operative speed and once challenged observers, ‘Now gentlemen, time me, 28 seconds before placing an amputated limb in the sawdust’. 63 However, measuring competence merely by setting time targets for a certain procedure is crude and probably unacceptable, as a fast surgeon is not necessarily a good one. Lord Lister, in comparison, was observed to have ‘none of the dramatic dash and haste of the surgeon of previous times. He proceeded calmly, deliberately and carefully and, as he told his students, anaesthetics have abolished the need for operative speed and they allow time for careful procedure’. 63

The Toronto group undertook a small study to examine whether time and operative product could serve as measures of technical skill using bench model simulations. 64 Twenty general surgery residents participated in a six-station bench model examination, in which ‘time to completion’ was recorded and ‘quality of the final product’ was assessed using a global five-point rating scale by two assessors per station. The mean inter-rater reliability was 0.59 for product quality. Interstation reliability (Cronbach’s α) was 0.59 for analysis of product quality and 0.72 for time to completion. Both measures demonstrated construct validity, with more senior trainees producing better products in less time, although there was poor agreement with previous OSATS examination scores. These measures offer a time- and cost-efficient, if less reliable, alternative to direct observation for the assessment of technical skill. However, the quality of the final product may be relatively easy to measure on a simulation, whereas it may be more difficult to assess in the operating theatre and is likely to depend on the specific procedure. Similarly, time to completion is a straightforward measure for simulations but in the operating theatre is affected by many case-specific variables as well as the performance of the whole surgical team.
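For readers less familiar with the interstation reliability statistic quoted above, Cronbach’s α can be computed from a trainee-by-station score matrix as k/(k − 1) × (1 − sum of station variances / variance of total scores), where k is the number of stations. The sketch below is illustrative only: the function name and the expected data layout are hypothetical and nothing here reproduces the Toronto data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a trainee x station score matrix.

    scores: list of rows, one row per trainee, one column per station
    (hypothetical layout). Uses sample variances throughout.
    """
    k = len(scores[0])
    # Variance of each station's scores across trainees.
    station_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Variance of each trainee's total score across stations.
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(station_vars) / total_var)
```

When stations rank trainees identically the coefficient approaches 1; when station scores are unrelated it falls towards 0.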

Expert surgeons appreciate that operative speed is important. For instance, surgeons performing cardiac and vascular procedures seek to minimise cardiac bypass and vessel cross-clamping time to reduce operative complications. Some evidence with respect to time and surgical performance comes from the Medical Research Council (MRC) European Carotid Surgery trial, which reported a relationship between procedure time and adverse outcomes. 65

Efficiency in operating time is important to maximise service delivery, which is increasingly pressured under the current policies for patient management pathways and waiting list times. However, such efficiency requires large numbers of procedures, which trainees could not usually expect to obtain, and is therefore a more appropriate aspiration for newly appointed consultants.

Although attractive, measurement of the performance of surgeons based upon patient outcomes is fraught with difficulty owing to variation in case mix and the large numbers required for reliability. 66 Errors made by trainees are often corrected, and therefore masked, by their supervising consultant. In addition, patient outcomes reflect the performance of the whole surgical team, both within the operating theatre and during the postoperative period, and therefore do not provide a reliable assessment of an individual surgeon. It may be a good screening method for consultant surgical skill, but tests of competence will be required for those consultants for whom there is cause for concern.

OpComp

Logbooks form a useful record of procedural experience67 but do not reflect the performance level achieved by trainees, lacking both validity and reliability as a method of assessing trainees’ surgical skill. 68 The Operative Competency (OpComp) form was introduced by the Specialty Advisory Committee (SAC) in General Surgery in 2003 to complement the information provided by logbooks on trainees’ surgical skills. 69 The OpComp form asked educational supervisors to assess the ability of a trainee to perform the specific index procedures (relevant to their specialty) against defined criteria at the end of a clinical placement. The following rating scale was used to make these summary judgements, derived from the then current ‘Training the Trainers’ Course70 and modified on the basis of the pilot studies:69

U = Unknown or insufficient evidence to support a judgement.

D = Unable to perform the procedure, or part observed, under supervision.

C = Able to perform the procedure under supervision.

B = Able to perform the procedure with minimum supervision but needs occasional help.

A = Competent to perform the procedure unsupervised and can deal with most complications.

A checklist of technical skills was provided on the reverse of the OpComp form to assist educational supervisors in making judgements. This checklist’s content was systematically derived using a Delphi survey of surgeons in Scotland to establish the ‘essential’ technical skills required of trainees. 71 The OpComp form was shown to have good construct validity, and trainers found it simple to complete. 68 Although an advance, there were aspects of this method that undermined its reliability. The form suffers from retrospective recall of numerous procedures over the course of a placement, risking loss of important training information and cross-contamination between procedures. Almost half of the trainees surveyed during its pilot indicated that a trainer had rated their ability to perform a procedure unseen. 68 In addition, the reliance on a single assessor per clinical placement opens up this method to various types of assessor bias, including the halo effect, whereby an assessor provides a final opinion rather than providing a discriminatory rating for each item,72 and expectation bias, in which the knowledge of a trainee’s seniority influences assessors’ ratings,73 as well as bias arising from the primary influence of a trainee’s interpersonal skills, rather than their technical skills, on supervisor ratings. 74 The use of a collection of surgical skill assessments at the end of a clinical placement did not promote the opportunity for ‘on-the-job’ training and feedback. It can be appreciated that the move to current WBAs, using immediate assessment and feedback after single procedures/operations using multiple assessors during a clinical placement, moves the validity and reliability of assessment methodology forward, beyond that offered by OpComp.

Simulators

The controlled environment of a skills centre permits reliable assessment of technical skills on simulations such as bench models, animals and computer models. However, their validity depends upon the fidelity of the simulation,75 with high-fidelity simulations possessing a high level of realism compared with a living human patient. Characteristics of high-fidelity simulations include visual and tactile cues, feedback capabilities and interaction with the trainee, with the opportunity for trainees to complete surgical procedures rather than isolated tasks. Although low-fidelity bench models and video box methods show less realism, these are often used to assess trainees because of lower cost, portability and the potential for repetitive use.

Parallel assessments of surgical skill, using live animals and bench simulations, have been compared by the Toronto group. 52 Performance was graded for both assessment formats using task-specific checklists, global ratings and pass/fail judgements (i.e. OSATS). Using 20 surgical residents, the correlations between live and bench scores were high (0.69–0.72), and the mean inter-rater reliability across stations ranged from 0.64 to 0.72. This study showed that using simulations with OSATS is a valid and reliable method of assessing surgical skill, with bench models giving equivalent results to live animal model simulations. Lentz et al. 76 repeated this comparison of bench and live animal assessments within a surgical laboratory curriculum and found that skills improved over time for individual trainees and as a cohort by year of training, suggesting that simulations can also be successfully used to assess progress during training.

Whenever possible, the assessment method used in the workplace should be used for the relevant simulation because this will aid validity and transferability. 77 Studies of the transferability of simulation-based assessments to actual operating theatre performance are of fundamental importance in establishing their validity as an assessment method. Several authors have demonstrated that assessments of technical skill on low-fidelity simulations predict performance in the operating theatre. 52,78–80 It does appear that complete procedures can be deconstructed into tasks suitable for trainees to rehearse and be assessed for competence, before moving on to surgical training in patients.

The more recent development of high-fidelity simulations for surgical assessment offers the potential to reduce assessor time while providing automatic ‘objective’ output data for feedback and assessment purposes. Output metrics for trainees performing virtual simulations include economy of motion, length of path movements and instrument errors. 81 Several studies have shown that both the minimally invasive surgical trainer–virtual reality (MIST-VR) (Mentice, Gothenburg, Sweden) and LapSim® Gyn VR systems (Surgical Science, Gothenburg, Sweden) are valid and reliable methods of assessing psychomotor skills for various laparoscopic procedures. 81–85 Furthermore, randomised controlled studies have demonstrated the benefits of high-fidelity simulation training for performing laparoscopic cholecystectomy,86,87 endoscopy88 and catheter-based interventions. 89

The main advantage of simulations is that they allow unlimited practice for trainees to learn a new procedure before moving to patients, thus providing a new opportunity to learn safely from mistakes. 90 Assessment using simulations provides access to surgical skill training and assessment in situations where training in patients is unavailable or very limited91 and/or too high risk. 92 Simulations can also be used to assess the performance of individuals within emergency teams93 and surgical teams. 94 It appears that simulators can take on a well-defined assessment role that complements the assessment of real-time operating theatre performance. However, practice and assessment on simulators are no substitute for real operating experience, although they offer trainees the opportunity to progress their surgical skills before training in the complex operating theatre environment.

Direct observation in the operating theatre

It seems axiomatic that direct observation of surgical performance in the operating theatre represents the ‘gold standard’ in terms of both content and construct validity. However, unstructured directly observed assessments suffer from halo error95 and other types of assessor bias. 73 In fact, the main issue with unstructured assessments using expert/consultant assessors is that the direct observation itself is not wholly successful, i.e. assessments are completed based on indirect observation or incomplete direct observation, which limits its reliability as an assessment method. 96

Standardising direct observation depends upon the use of a structured assessment, such as OSATS or PBA. Although the validity and reliability of structured direct observation has been comprehensively researched for simulations, the evidence for assessing surgical performance in the operating theatre is limited. This study seeks to directly fill this gap in the research.

There are two main types of rating scales used for structuring assessment of surgical skill: task analysis checklists and global ratings. These rating scales are compared in Table 2. Dual assessments using separate task analysis and global ratings may be time consuming to perform, but each method may have different roles. 53 Global ratings seem useful when assessing more complex operations, especially when there is more than one method of performing the task correctly, or when assessing experts for the purposes of certification or revalidation. Task analysis checklists provide a trainee with detailed instructions and feedback of how to undertake the operation in an approved way. The PBA and OSATS tools use both types of rating scales, in combination and separately respectively.

| Task analysis | Global ratings |
|---|---|
| Procedure specific | Items common to any procedure (e.g. handling of instruments) |
| Checklist of steps that represent one safe way to perform a procedure | Useful in assessing complex operations |
| Good for assessing trainees and for feedback | Good for assessing experts as there is no ‘right’ way |

Assessment tools used in this study

There is a plethora of assessment tools that have been developed worldwide to assess surgical performance. The background presented here is limited to the assessment tools considered within this study, all of which are used to directly observe and assess dimensions of surgical performance. These WBA tools are not research-only tools but are either directly or indirectly relevant to current UK surgical training practice.

Two of the tools (PBA and OSATS) are primarily concerned with the assessment of technical skill, although they both include some non-technical skills. Both tools are in current use in UK postgraduate training programmes. These conform to the assessment principles laid down by the PMETB in 2005 and are designed to measure all the domains of Good Medical Practice. 50 PBAs were introduced for orthopaedic trainees by the OCAP in 2005 and for all other surgical trainees by the ISCP in 2007. The OSATS tool has been adopted as the method of assessing technical skills within O&G and ophthalmology specialties since 2007.

One concern about PBA, OSATS and other similar assessments is that they may not reflect ‘higher-order’ skills that underpin technical proficiency, such as situation awareness, decision-making, team-working and leadership. The Non-technical Skills for Surgeons (NOTSS) tool is designed to assess and debrief trainee surgeons on their non-technical skills, although it is not currently used within UK training programmes.

The formal training of surgeons predominantly focuses on developing knowledge, clinical expertise and technical skills, with the focus of assessment on the observation of technical skills and surgical performance. Non-technical skills have been defined as the critical cognitive (e.g. decision-making) and interpersonal (e.g. teamwork) skills that complement surgeons’ technical skills. 97 There is increasing recognition of the need for explicit training and assessment in non-technical skills because of the importance of these skills for patient safety. Case reviews and studies of operating theatre behaviour have consistently shown that failures in non-technical skills are implicated in surgical adverse events and errors. 98,99 Therefore, technical skills appear to be a prerequisite but are insufficient to ensure patient safety in the operating theatre. Fostering non-technical skills within training is likely to support surgeons in maintaining high levels of performance over time.

Examples of the three assessment tools are found in Appendices 1–3. The full guidance notes for each assessment tool are available on the relevant websites: www.iscp.ac.uk for PBA, www.rcog.org.uk for OSATS and www.abdn.ac.uk/iprc/notss/ for NOTSS.

Procedure-based assessment

Procedure-based assessment is a method for assessing surgical skills in the operating theatre during interventional procedures. It is designed to be used in conjunction with the surgical logbook for all the index procedures for a particular surgical specialty. PBA was originally developed by the OCAP for trauma and orthopaedic surgery100 and PBAs have now been written for all other surgical specialties by the relevant specialty associations and SACs within the ISCP. Trainees already in training when PBA was introduced have been encouraged to use it, both as an aid to learning and to complement logbook experience, but the use of PBA has been made compulsory only for those entering surgical training since 2005 for orthopaedics and 2007 for the other surgical specialties. PBA has not yet been adopted by surgical training organisations outside the UK.

The assessment form itself has two principal parts. The first consists of a series of competencies within six core domains covering ‘consent’, ‘preoperative planning’, ‘preoperative preparation’, ‘exposure and closure’, ‘intraoperative technique’ and ‘postoperative management’. The consent and preoperative planning domains address perioperative competencies, whereas the remaining domains encompass intraoperative competencies. It is not expected that all PBA domains will be completed at any one time as consent and preoperative planning are often undertaken at a different time and place. While many of the competencies are common to all procedures (global items), others are specific to the particular procedure (task specific), particularly within the intraoperative technique domain. Each competency is assessed as satisfactory (S), unsatisfactory/development required (U/D) or not assessed (N). The assessment form is supported by a worksheet, originally used as part of the validation process, which gives examples of desirable and undesirable behaviours for each competency. Therefore, the first part of the PBA uses a combination of task and global items which are rated with a single binary rating scale. The second part of the assessment form consists of a four-level summary judgement in which the assessor rates the ability of the trainee to perform the observed elements of the procedure on that occasion with or without supervision (see Appendix 2). It uses similar levels to those used on the OpComp form. The content and construct validity of PBAs have been established for index procedures in both general and orthopaedic surgery. 52,100

It is assumed that the assessor (clinical supervisor) will normally be scrubbed and supervising the trainee. Trainees carry out the procedure, or part of it, explaining what they intend to do throughout. The assessor will provide verbal prompts to remind the trainee to make explanations, if required, and will intervene if patient safety is at risk or the quality of treatment may be compromised. The form has been designed to allow the assessor to score items at the end of the procedure by using a simple binary rating scale. In addition, the completed form is intended to structure the provision of immediate constructive feedback (e.g. in the coffee room between cases). A PBA may be undertaken every time an index procedure is undertaken, as the primary aim is to aid learning.

The satisfactory standard for each competency is the level required for the CCT. At the end of a placement, a collection of PBAs, together with the logbook, will enable the educational supervisor or programme director to make a summary judgement about the competence of a trainee to perform an index procedure to the required standard.

Objective Structured Assessment of Technical Skills

The OSATS system was introduced by the RCOG (www.rcog.org.uk/education-and-exams/curriculum) as a formal requirement of their new training and education programme for all grades of trainees since August 2007. Prior to this, OSATS was used informally within training, particularly for junior O&G trainees.

The development of OSATS by Reznick et al. 52,101 at the University of Toronto in the 1990s initiated the current trend towards structured observational assessment. OSATS was originally developed for use on bench model simulations and was designed to be completed in real time by assessors, as with objective structured clinical examinations, rather than at the end of the procedure. OSATS has been shown to possess good inter-rater reliability and construct validity for assessing general surgical trainees performing common operations on both cadaver and live animal simulations. 52 Goff et al. 102 have also demonstrated that OSATS possesses construct validity and good inter-rater reliability for blinded and unblinded assessment of O&G trainees performing common procedures on lifelike models in a multiple station exam format. Direct observation or videoing of real surgical procedures in the operating theatre using structured checklists based on OSATS can demonstrate high inter-rater reliability and construct validity for simple operations such as varicose veins surgery. 53,103

The original OSATS was developed by Winckel et al. 101 and consisted of a technical checklist (rated on a numerical scale using 0 for ‘not performed’, 1 for ‘performed poorly’ and 2 for ‘performed well’) that was specific for the procedure. The second part was a generic assessment of 10 global items (using a five-point numerical rating scale from 0 ‘poorly, or never’ to 4 ‘excellent or always’) that were common to all procedures. The global rating assessment was modified by Martin et al.,52 reducing the number of global items to seven, changing the five-point numerical rating scale to a behaviourally anchored scale using descriptors (e.g. ‘makes many unnecessary moves’ to ‘fluid moves with instruments and no awkwardness’ for global item time and motion) and including a pass/fail judgement at the end of the global assessment. Therefore, the OSATS form uses separate task and global assessments which are rated using two different rating scales, with a summary pass/fail judgement.

The OSATS adopted by the RCOG for the assessment of 10 O&G index procedures uses a similar form to the modified Martin et al.’s OSATS version. 52 Part 1 is a technical checklist in which task items, specific to the index procedure, are rated as ‘done independently’ or ‘needs help’. Part 2 provides the generic assessment, which has been reduced to a three-point behaviourally anchored rating scale of seven global items (see Appendix 1). These seven global items have been further modified by the RCOG to combine some global items (e.g. ‘instrument handling’ and ‘knowledge of instruments’ have been combined as ‘knowledge and handling of instruments’), whereas other global items are new additions (e.g. ‘suturing and knotting skills’, ‘relations with patient and the surgical team’, ‘insight/attitude’ and ‘documentation of procedures’). This is combined with a summary pass/fail judgement (this version was in use at the time of this study). However, the pass/fail terminology has since been revised to ‘competent in all areas included in this OSATS’ and ‘working towards competence’ to enforce the formative nature of the OSATS. 104 For OSATS to count as a pass, an assessment algorithm is used: all items on the technical checklist must be ticked as ‘performed independently’, and the majority of global items ringed in the middle to right of the rating scale, while insight/attitude must be consistently ringed ‘fully understands areas of weakness’. 105 Trainees are required to achieve a set number of passes for each index procedure in order to ‘sign off’ their logbook competency for that procedure. Currently, the requirement is for three completed OSATSs by at least two trainers in order for the relevant logbook competency to be signed off as competent for independent practice. Validity and reliability studies have not been undertaken for the application of this revised tool for assessing trainees in the operating theatre environment.
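The pass algorithm and sign-off rule described above can be expressed as a short sketch. Several details are assumptions made purely for illustration: the three-point global scale is coded 0 (left), 1 (middle) and 2 (right); ‘fully understands areas of weakness’ is taken to be the right-most anchor for insight/attitude; the sign-off rule is read as three passes from at least two different trainers; and all class, function and field names are hypothetical rather than part of any RCOG system.

```python
from dataclasses import dataclass

INSIGHT_ITEM = "insight/attitude"  # assumed key for this global item


@dataclass
class OsatsForm:
    trainer: str
    checklist: dict[str, bool]       # task item -> performed independently?
    global_ratings: dict[str, int]   # global item -> 0 left, 1 middle, 2 right


def osats_pass(form: OsatsForm) -> bool:
    """Apply the three conditions of the pass algorithm to one form."""
    all_independent = all(form.checklist.values())
    middle_or_right = sum(1 for r in form.global_ratings.values() if r >= 1)
    majority = middle_or_right > len(form.global_ratings) / 2
    insight_ok = form.global_ratings.get(INSIGHT_ITEM) == 2
    return all_independent and majority and insight_ok


def can_sign_off(forms: list[OsatsForm]) -> bool:
    """Assumed reading: three passes from at least two different trainers."""
    passes = [f for f in forms if osats_pass(f)]
    return len(passes) >= 3 and len({f.trainer for f in passes}) >= 2
```

Writing the rule out in this way makes clear that a single ‘needs help’ tick on the technical checklist is enough to prevent a pass, whatever the global ratings.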

From our literature review and from email correspondence with surgical training organisations in North America, Continental Europe and Australasia, it appears that OSATS is not routinely used within any surgical curriculum in the workplace.

Non-technical Skills for Surgeons

The NOTSS system is a behavioural rating system developed using a multidisciplinary group of surgeons, psychologists and an anaesthetist from the Royal College of Surgeons of Edinburgh, in collaboration with the University of Aberdeen. 106 The development of NOTSS built on work from a similar project that developed a behaviour rating system for anaesthetists called Anaesthetists’ Non-technical Skills (ANTS). 107 While ANTS is not used formally in UK anaesthetic training, it has been piloted for use within the Australian and New Zealand College of Anaesthetists training programme. NOTSS is not currently part of the curricula for surgeons in training in the UK. However, there are a number of ongoing process trials worldwide that are considering the adoption of NOTSS. Furthermore, the Royal Australasian College of Surgeons has adapted and expanded NOTSS to establish a surgical performance framework for assessment purposes. 108

Behavioural rating systems are already used to structure training and evaluation of non-technical skills in anaesthesia, civil aviation and nuclear power, to improve safety and efficiency. They are rating scales based on skills taxonomies, with examples of good and poor behavioural markers, and are used to identify observable, non-technical behaviours that contribute to superior or substandard performance. Behavioural rating systems are context specific and should be developed in the domain in which they are to be used.

Non-technical Skills for Surgeons describes the main observable non-technical skills associated with good surgical practice. It has been designed to provide surgeons with explicit ratings and feedback on their non-technical skills, either within the operating theatre or operating theatre simulator. The system comprises only behaviours that are directly observable or can be inferred through communication during the intraoperative (‘gloves on, scrubbed up’) phase of surgery.

The NOTSS system comprises a three-level hierarchy consisting of categories (at the highest level), elements, and behaviours. Four skill categories and 12 elements make up the skills taxonomy (see Appendix 3). Each category and element is defined with examples of good and poor behaviours for each element. These exemplar behaviours were generated by consultant surgeons and are intended to be indicative rather than comprehensive. The aim is to provide a common terminology and assessment framework for surgical trainees and consultants to structure their training needs, in order to develop their non-technical skills in the workplace. 106

The development and design of the NOTSS system has been a rigorous and structured process, from initial task analysis through to system evaluation. For more details on NOTSS development see Yule et al. 109 The psychometric evaluation of NOTSS to date has been carried out by the NOTSS development team in the operating theatre simulator. 110 Six video scenarios were designed and filmed by practising surgeons, anaesthetists and nurses with experience in non-technical skills, to illustrate a range of surgeons’ non-technical skills. A group of 44 consultant surgeons, trained in the use of NOTSS, observed and rated these videos using NOTSS assessment forms. Their ratings were compared with expert ratings for ‘accuracy’ and assessed for inter-rater reliability. In this study, the NOTSS system demonstrated a consistent internal structure, and internal reliability was high for all four categories (overall mean difference of 0.25 scale points between categories and elements). The sensitivity (‘accuracy’) of the system was moderate (mean sensitivity across all categories was 0.67 scale points difference from expert ratings) with the ‘decision-making’ category most sensitive and the ‘situation awareness’ category least sensitive. The inter-rater reliability (mean within-group agreement of ratings across NOTSS categories) was acceptable (> 0.7) for the categories ‘communication and teamwork’ and ‘leadership’, although below acceptable for the categories ‘situation awareness’ and ‘decision-making’.
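The two statistics reported above can be illustrated with a short sketch. It assumes that 'accuracy' is the mean absolute difference between raters' scores and an expert reference score, and that the within-group agreement index is the single-item r_wg statistic of James, Demaree and Wolf (one minus the ratio of observed rating variance to the variance expected if ratings were spread uniformly across the scale). Neither assumption is confirmed by the text, and the function names, the example ratings and the four-point scale are hypothetical.

```python
from statistics import mean, variance


def mean_abs_difference(ratings: list[int], expert_rating: int) -> float:
    """Average distance, in scale points, between raters' scores and the expert score."""
    return mean(abs(r - expert_rating) for r in ratings)


def rwg(ratings: list[int], scale_points: int) -> float:
    """Single-item within-group agreement index (assumed form; see lead-in)."""
    # Variance of a discrete uniform distribution over `scale_points` options.
    expected_var = (scale_points ** 2 - 1) / 12
    # Observed sample variance of the raters' scores for one item.
    return 1 - variance(ratings) / expected_var


# Hypothetical ratings from six raters for one category of one scenario.
ratings = [3, 3, 4, 3, 2, 3]
print(mean_abs_difference(ratings, expert_rating=3))
print(rwg(ratings, scale_points=4))
```

On this index, a value of 1 indicates identical ratings from all raters, and values above 0.7 would correspond to the 'acceptable' threshold quoted in the text.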

The NOTSS development team has analysed the level of agreement between expert and novice raters in more detail. 111 The modal NOTSS category ratings for each video scenario showed 50% agreement between the two rater groups. Where there was disagreement, novice raters were more likely to give harsher ratings, with the widest differences between rater groups within the ‘communication and teamwork’ and ‘leadership’ domains. Of note, 23% of ‘situation awareness’ ratings were scored not applicable by novice raters, compared with 0% of experts, indicating that experts in non-technical skills are better equipped to rate these behaviours. This highlights the role of human factors training and the rehearsal of non-technical skill ratings for NOTSS assessors.

The NOTSS development team has also considered the usability of the NOTSS system 112 within the operating theatre for observing common surgical procedures. Questionnaire responses from consultant surgeons participating in the usability trial have indicated that NOTSS provides a structure and language to rate and provide trainee surgeons with feedback on their non-technical behaviours. The main concerns with using the NOTSS system were reported as: difficulty in understanding the behavioural descriptors; difficulty in rating behaviours when trainees do not verbalise adequately; assessments being more suitable for senior than for junior surgical trainees; the use of routine cases raising insufficient decisions for rating behaviours in the decision-making category; and the ‘negative’ impact of a scrubbed consultant upon trainee-led leadership. These user-satisfaction responses from NOTSS assessors provide insight into the challenges of rating behaviours in the operating theatre.

This study moves forward the psychometric evaluation of the NOTSS system into the operating theatre environment. The tool will be used for observing and rating surgeons’ non-technical skills in vivo. In addition, this study provides further work on the usability of the NOTSS system in the operating theatre.

Working with the pace of change

Workplace-based assessment is a relatively recent development within postgraduate assessment and therefore this research field is fast evolving. Since this study began in April 2007, there have been significant changes to the policy of WBA implementation and also the use of assessment tools, particularly with respect to RCOG. We will fully elaborate upon these more recent changes in Chapter 4. It was envisaged that WBA would evolve during the timescale of this study. For this reason, our study design focused not solely on the evaluation of validity and reliability but also on the wider issues of acceptability and feasibility.

Aims of the study

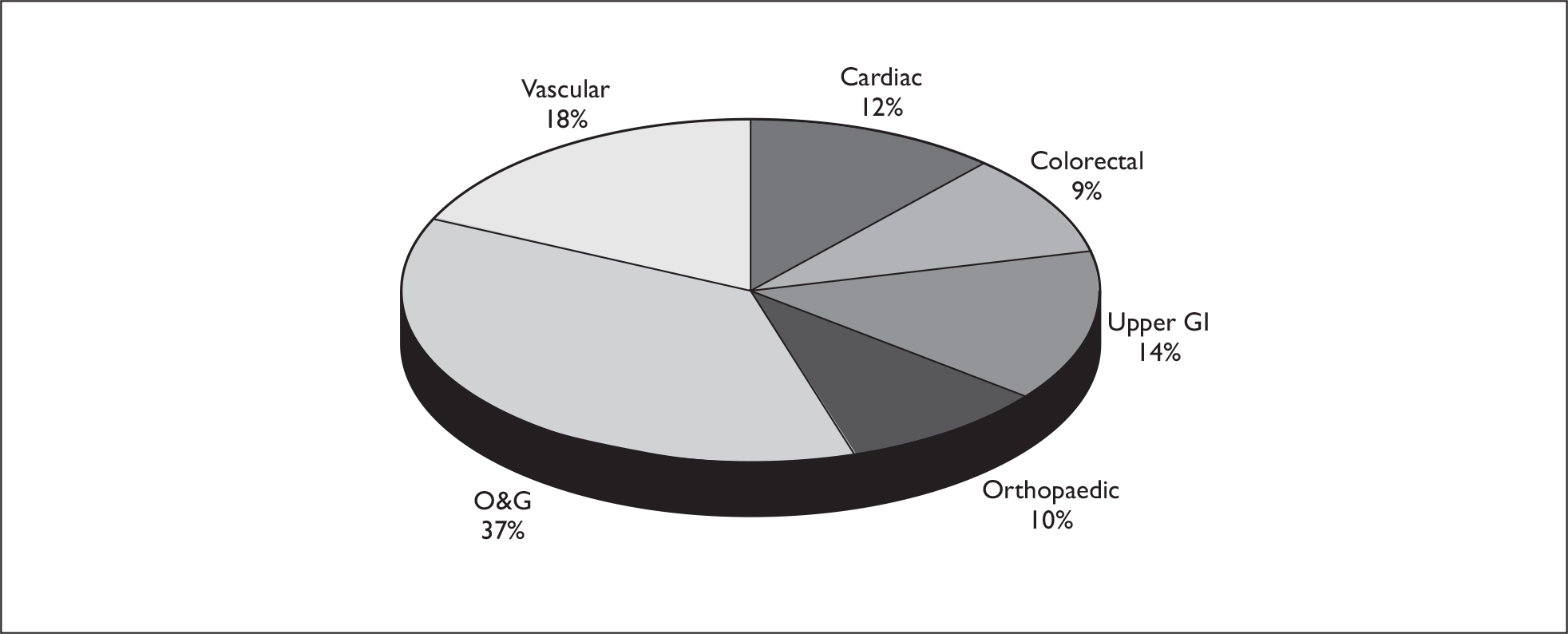

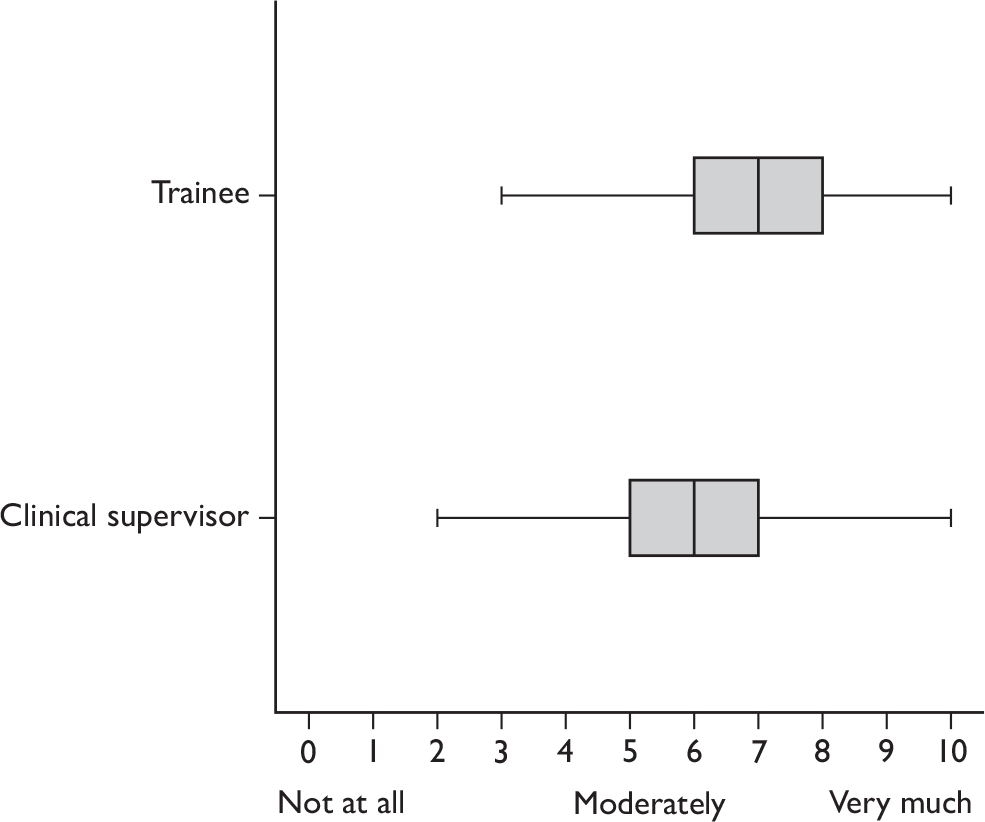

The primary aims of this study were to compare the user satisfaction and acceptability, and reliability and validity, of three different methods of assessing the surgical skills of trainees by direct observation in the operating theatre across a range of different surgical specialties and procedures. The methods selected for study were PBA, OSATS and NOTSS as these address different aspects of surgical performance (technical and non-technical skills) and are used in differing assessment and training contexts in the UK. The specialties selected were O&G, upper gastrointestinal (GI) surgery, colorectal surgery, cardiac surgery, vascular surgery and orthopaedic surgery. Two to four index procedures were chosen in each specialty.

Information on user satisfaction and acceptability of each assessment method from both assessor and trainee perspectives was obtained from structured questionnaires. The reliability of each method was measured using generalisability theory. Aspects of validity included the internal structure of the structured tools and correlation between tools, construct validity, predictive validity, interprocedural differences, the effect of assessor designation and the effect of assessment on performance. User satisfaction/acceptability, reliability and validity are all important because they equally affect the utility of an assessment method.
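As a simplified illustration of how generalisability theory turns variance components into a reliability estimate, the sketch below projects a reliability coefficient for the mean of several assessments per trainee. It is not the analysis used in this study: it assumes a single pooled error term (assessor, case and residual variance combined), and the function names and variance values are hypothetical.

```python
from typing import Optional


def g_coefficient(var_trainee: float, var_error: float, n_assessments: int) -> float:
    """Reliability of a trainee's mean score over n assessments.

    var_trainee: variance attributable to true differences between trainees.
    var_error:   pooled variance from all other sources (assumed single term).
    """
    return var_trainee / (var_trainee + var_error / n_assessments)


def assessments_needed(var_trainee: float, var_error: float,
                       target: float = 0.8, max_n: int = 100) -> Optional[int]:
    """Smallest number of assessments reaching the target coefficient, if any."""
    for n in range(1, max_n + 1):
        if g_coefficient(var_trainee, var_error, n) >= target:
            return n
    return None


# Hypothetical variance components, for illustration only.
print(g_coefficient(var_trainee=1.0, var_error=0.7, n_assessments=1))
print(assessments_needed(var_trainee=1.0, var_error=0.7, target=0.8))
```

The sketch shows the general principle that averaging over more assessments shrinks the error term, so reliability rises with the number of assessments per trainee.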

A secondary aim was to study the feasibility and fidelity of video recording in the operating theatre with a view to evaluating the reliability of subsequent blinded assessment.

We anticipate that the information provided by this study will be of value to the following organisations:

- the ISCP
- the OCAP
- the RCOG
- the Academy of Medical Royal Colleges
- the Academy of Medical Educators
- the Conference of Postgraduate Medical Deaneries
- the PMETB
- the GMC (Revalidation and Performance Procedures)
- the National Clinical Assessment Authority.

Chapter 2 Methods

Ethics

Ethical approval for the study was obtained from Trent Main Research Ethics Committee on 15 March 2007. The original study proposal can be found in Appendix 6. An ethical amendment was approved on 1 June 2007, primarily to include the specialty of O&G to enable evaluation of the OSATS tool within a surgical specialty in which it is used for training but also to include additional index procedures. In May 2009, when recruitment was complete, the ethics committee approved the collection of anonymised trainee data from the deanery regarding any formal or informal training concerns identified, including ARCP2 [formerly Record of In-Training Assessment (RITA) D] and ARCP3 (formerly RITA E) statements.

Participants

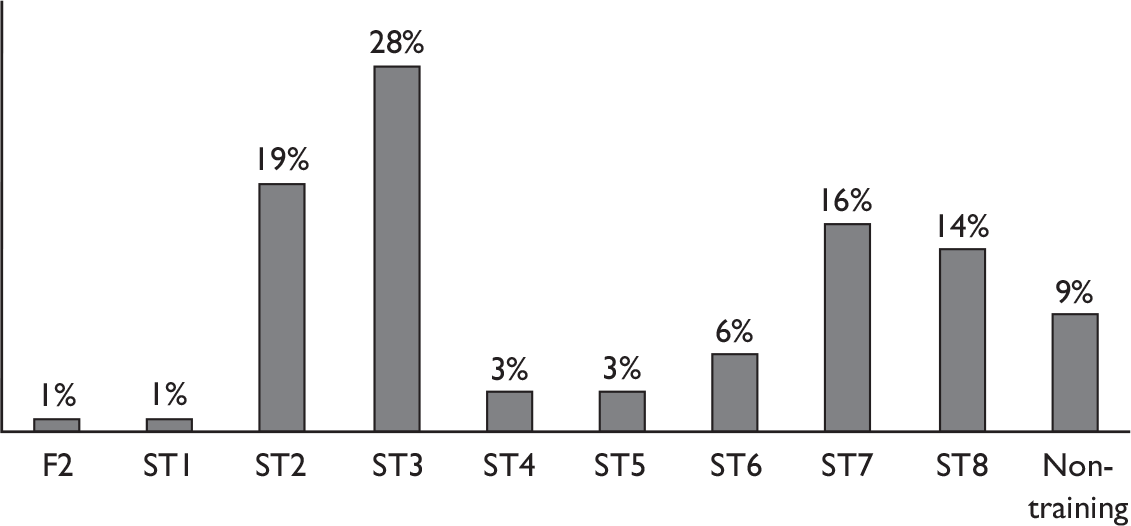

Participants were a large, heterogeneous group consisting of patients, surgical trainees, consultant surgeons, anaesthetists and theatre practitioners. Ethical approval had originally been obtained for the study to assess consultant surgeons as well as surgical trainees. However, once the study began in the clinical setting, some consultants raised the concern that the study might constitute an attempt at ‘revalidation by the back door’. It was essential that the consultants were supportive of the study as their assessments of trainees would provide the data necessary to answer the primary research question. The initial reason for inclusion of consultant assessments had been to provide a reference group against which to compare the trainee assessments. However, the study statistician advised that the scores given by consultants to their peers would not provide valid reference scores. The study was therefore confined to the assessment of trainees only from its outset. The aim was to concentrate assessments on trainees within specialty training (ST), i.e. specialist training (ST3–7), although there was no exclusion of those in core training (ST1–2) or doctors in non-training posts on the basis that all doctors require regular assessment. Hereafter in the report these participants are referred to collectively as trainees.

Setting

Although ethical approval was granted for the study to be undertaken as a multicentre study in Sheffield, Nottingham and Leeds, the study proceeded as a single-centre study. The multicentre approach was seen initially to offer the greatest potential for recruitment and to strengthen the generalisability of the results. However, there was only sufficient funding from the grant to employ a single study co-ordinator, who was based in Sheffield. Once recruitment began, it became apparent that it would not be feasible to undertake the study in more than one location without a co-ordinator in each centre. Our study statistician also recommended that the limited resources would be better dedicated to obtaining multiple assessments on each trainee participant rather than single assessments on many participants. These views were supported by the Steering Committee. Therefore we focused recruitment within a single city at three teaching hospitals: Royal Hallamshire Hospital, Northern General Hospital and Jessop Wing for Women.

Timescale and schedule

The study ran from April 2007 to June 2009. Initial funding and ethical approval were given until March 2009. However, a 3-month funded and ethically approved extension was granted in recognition of the impact that the Medical Training Application Service (MTAS) had had upon recruitment during the initial 3 months of the study. During this period, many clinical supervisors and trainees were preoccupied with specialty applications and selection.

A Gantt chart was used to provide a framework for the study schedule. It was amended to take into consideration the delayed start of recruitment (see Appendix 4). Recruitment took place from June 2007 to May 2009. Interim reports were provided to the Health Technology Assessment programme at 6, 12 and 18 months along the study’s timescale. An interim analysis was provided to the Steering Committee at 12 months.

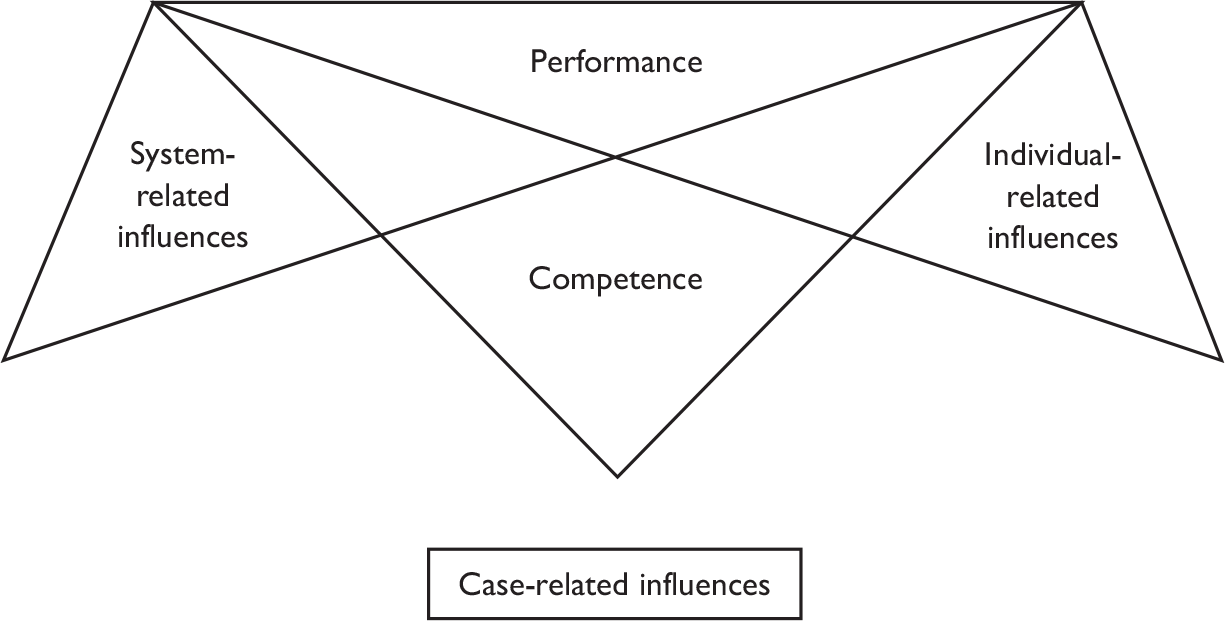

Study design and methodology

The design was a prospective, observational study within the operating theatres of the three teaching hospitals in Sheffield. Trainees were directly observed performing named index surgical procedures in six specialties.

The methodology of the assessments was direct observation of trainees’ surgical performance (encompassing technical and non-technical skills) followed by the provision of structured assessment ratings by trained assessors according to the criteria, standards and rating scales of each individual tool. We considered the role of the assessors in this study to be observer-as-participant, part-way along the continuum from complete observer to complete participant. 113 This takes account of the context of performing assessments, in which we were not purely observers but part of a working surgical team. For example, clinical supervisors acted as scrubbed-in first assistants for the assessments.

Specialties and index procedures

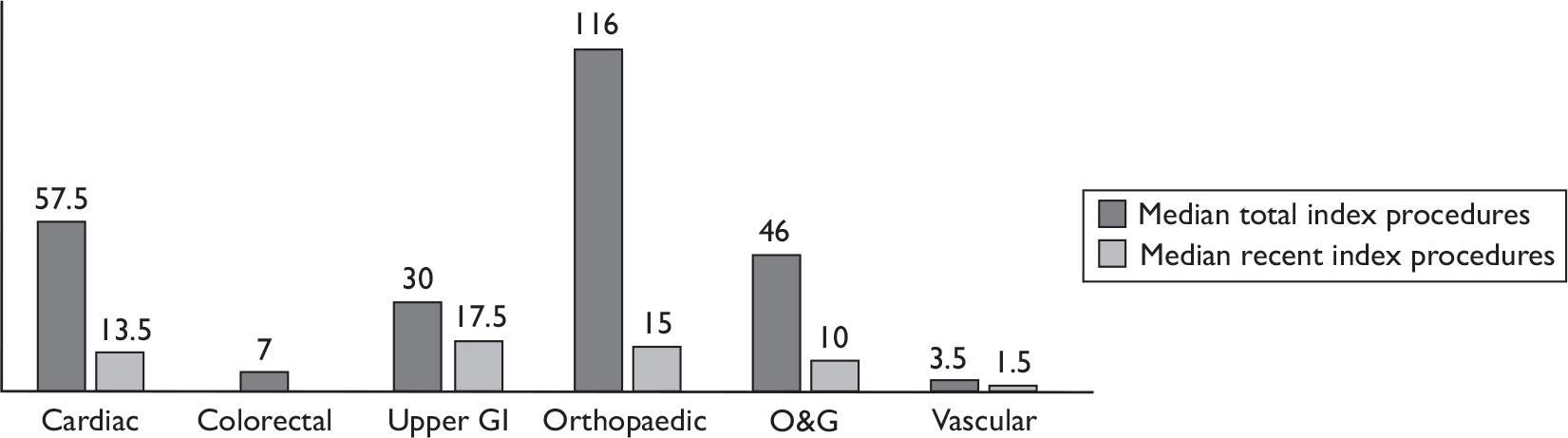

The index procedures were selected by the research team in collaboration with each surgical specialty and subsequently approved by the Steering Committee (Table 3).

| Specialty | Index surgical procedure |
|---|---|
| Cardiac | Coronary artery bypass grafts |
| | Aortic valve replacement |
| Colorectal | Right hemicolectomy |
| | Anterior resection |
| Upper GI | Laparoscopic cholecystectomy |
| | Open inguinal hernia repair |
| Orthopaedic | Primary hip replacement |
| | Primary knee replacement |

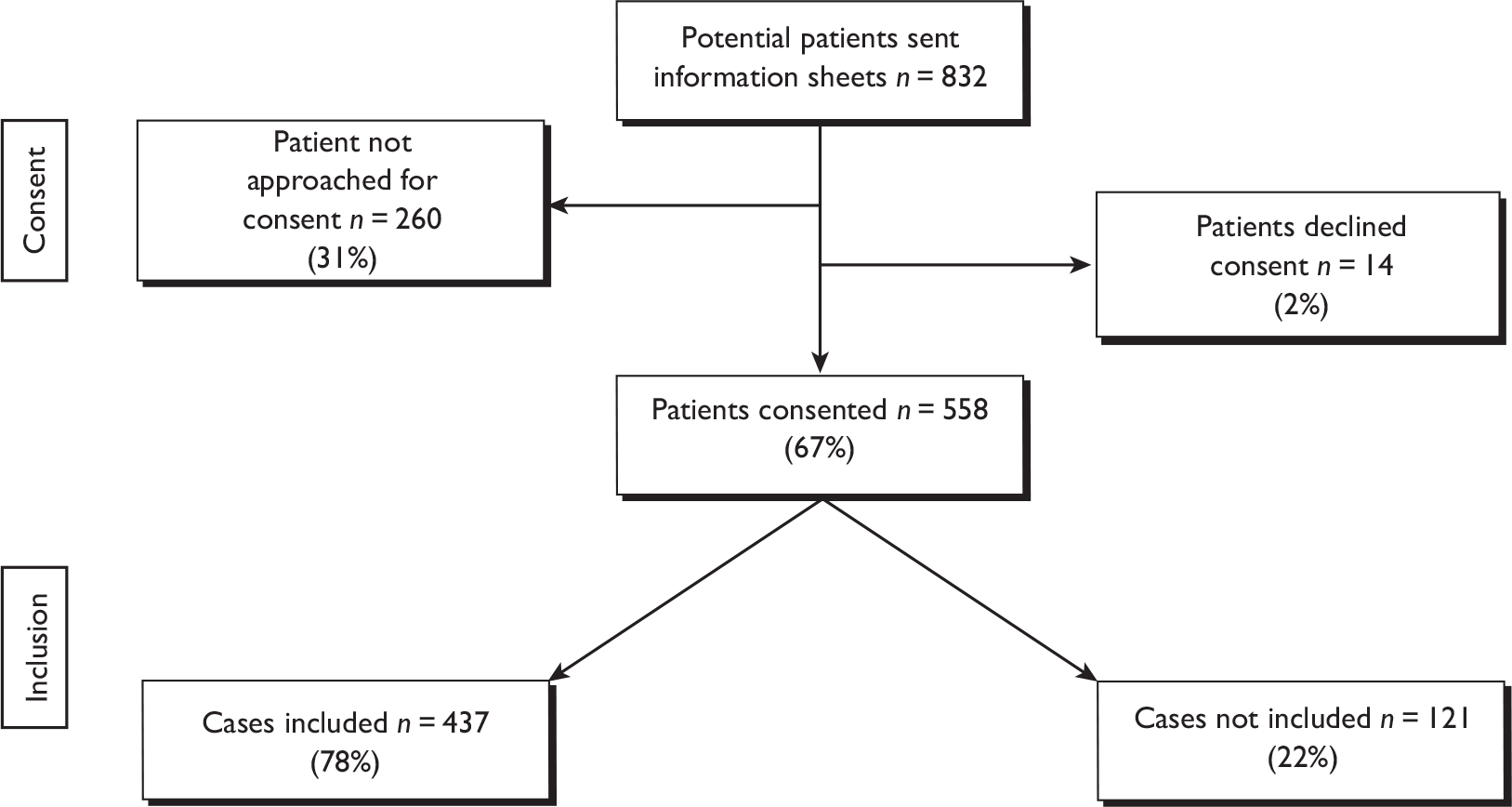

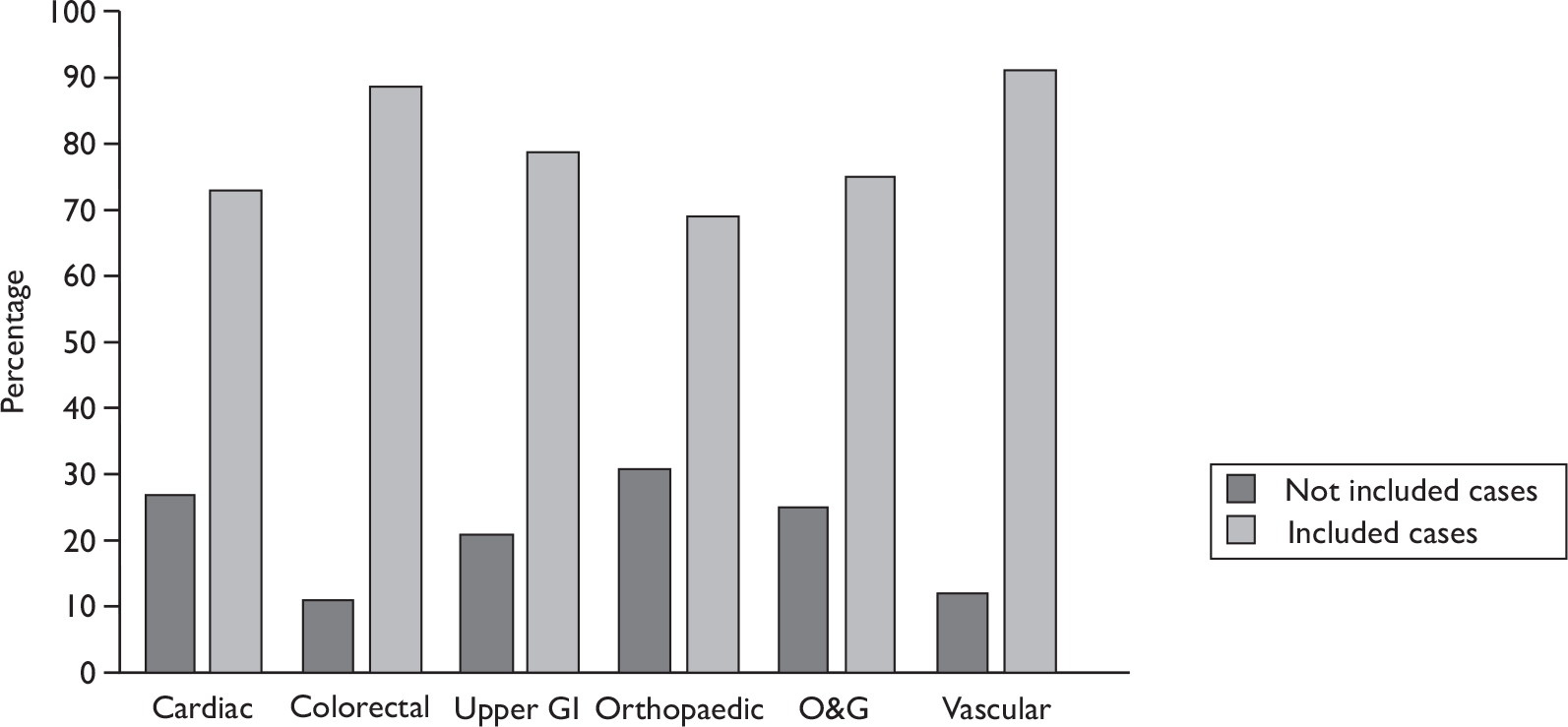

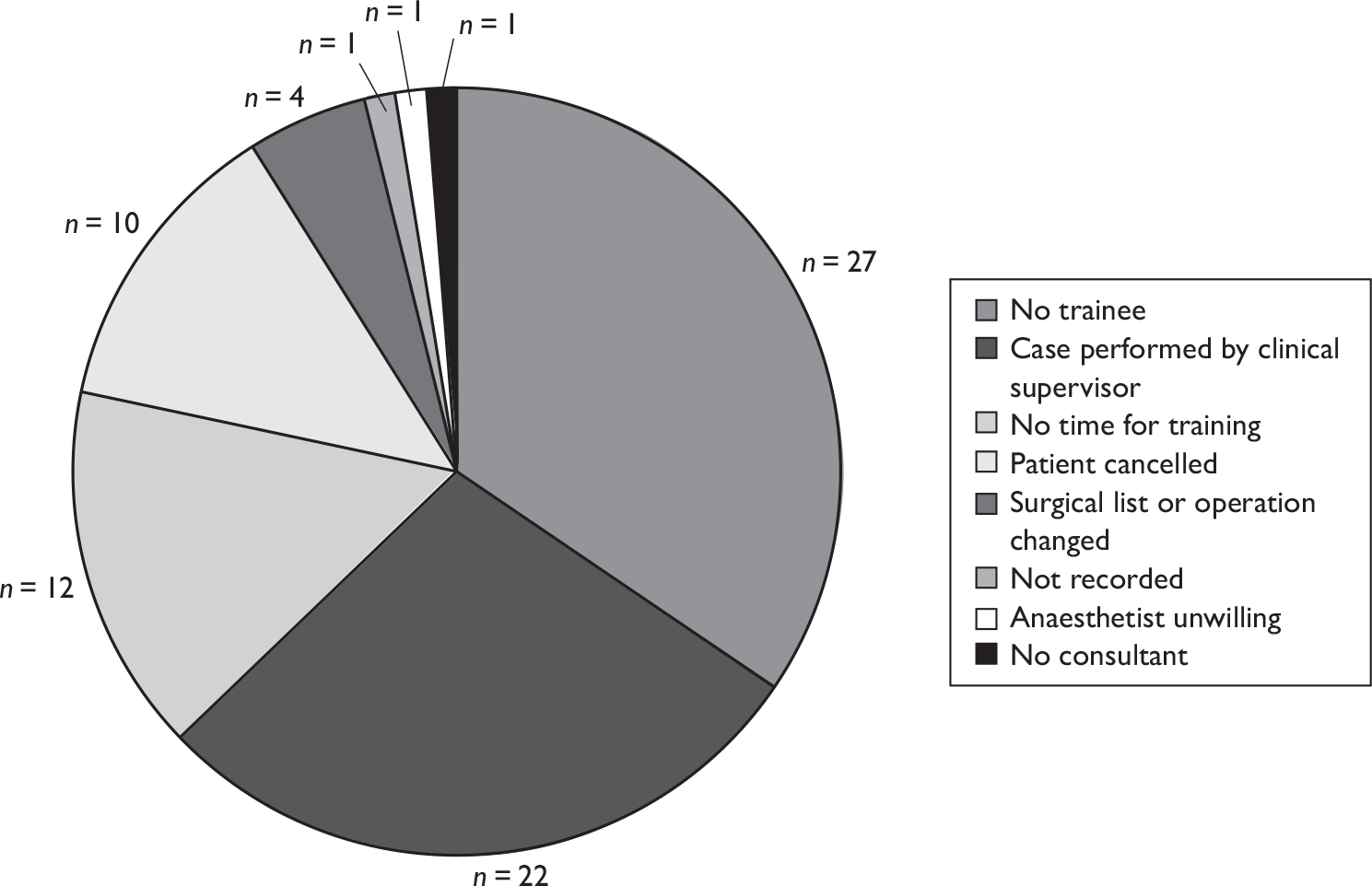

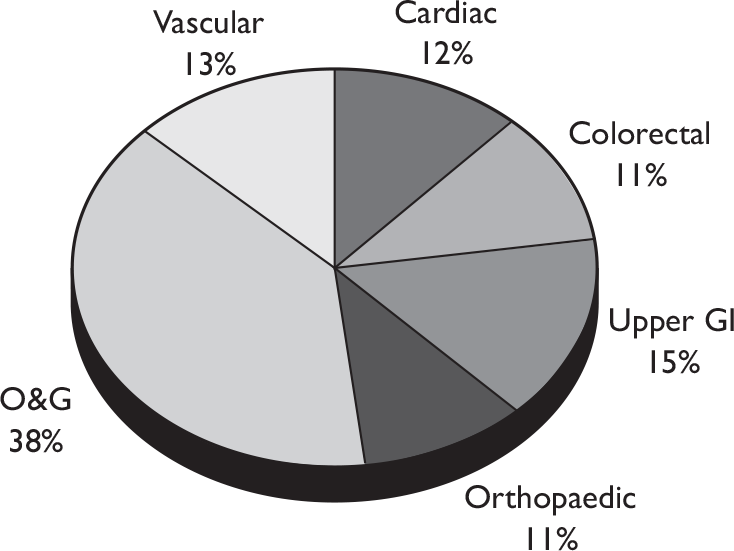

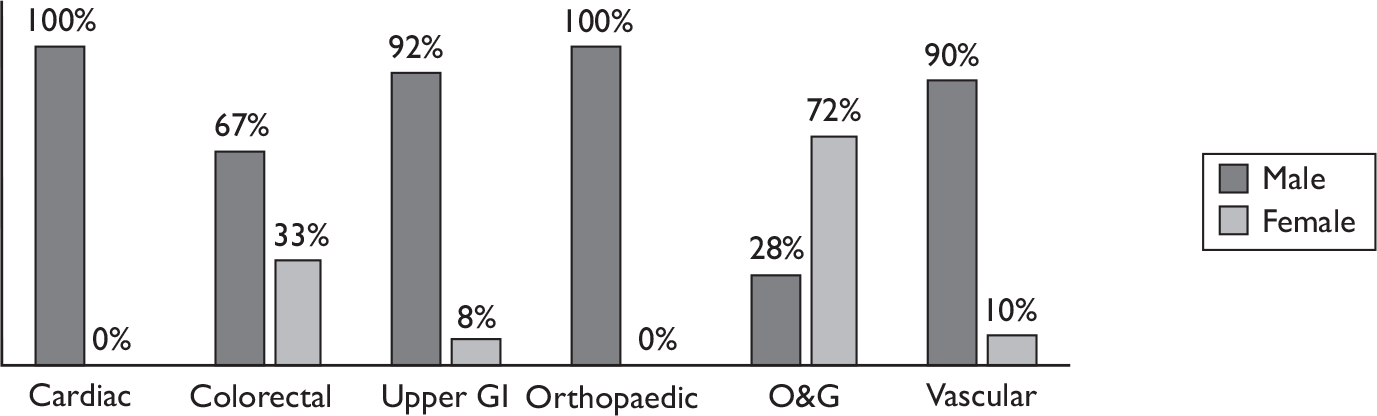

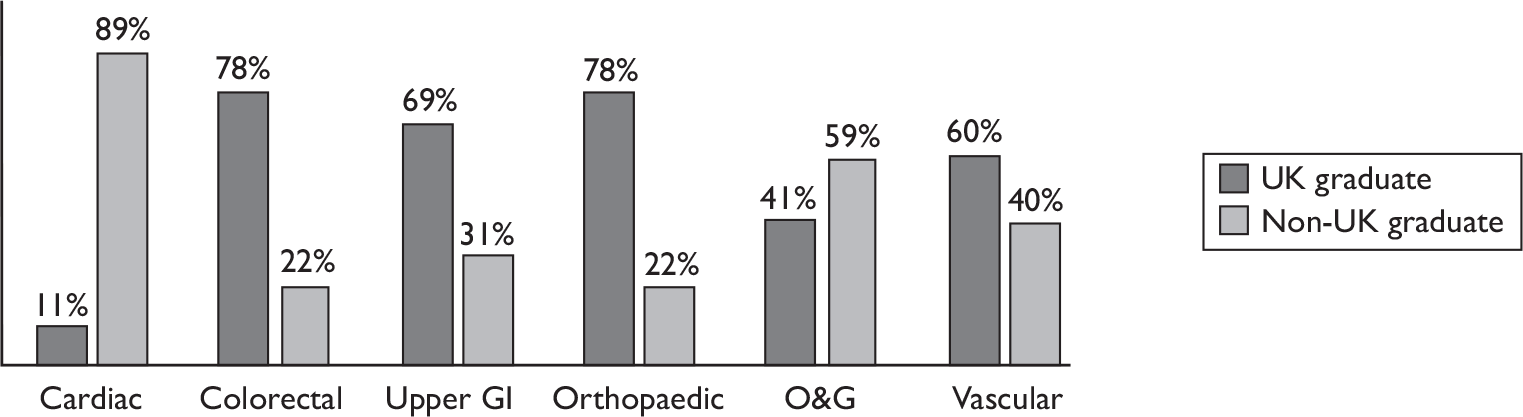

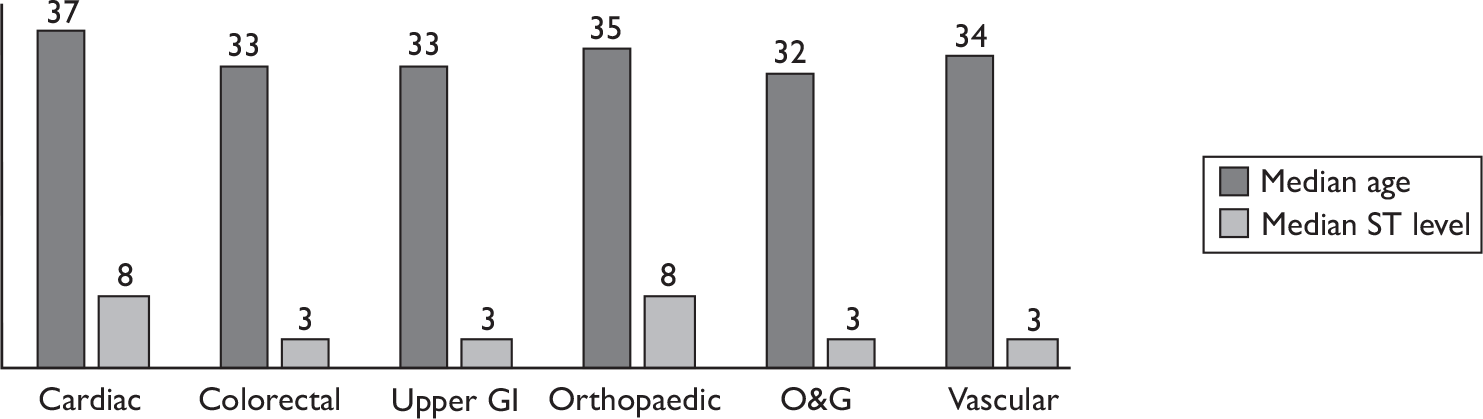

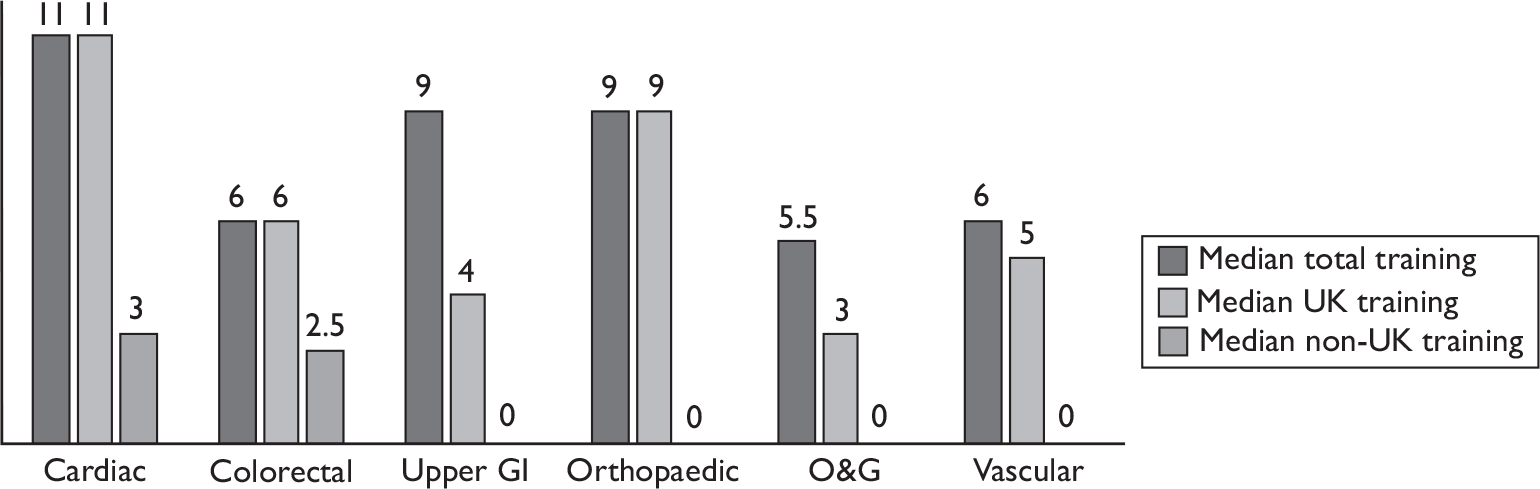

| Vascular | Varicose vein surgery |