Notes

Article history

The research reported in this issue of the journal was commissioned by the HTA programme as project number 03/04/02. The contractual start date was in August 2005. The draft report began editorial review in February 2010 and was accepted for publication in June 2010. As the funder, by devising a commissioning brief, the HTA programme specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None.

Permissions

Copyright statement

© 2011 Queen’s Printer and Controller of HMSO. This journal is a member of and subscribes to the principles of the Committee on Publication Ethics (COPE) (http://www.publicationethics.org/). This journal may be freely reproduced for the purposes of private research and study and may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Introduction

The English cervical screening programme

Evidence of the effectiveness of cervical screening

The NHS Cervical Screening Programme (NHSCSP) began a managed programme of call and recall in 1988 and is estimated to save as many as 5000 lives per year in the UK. 1 It has become recognised as one of the world’s leading cervical cancer prevention programmes.

Harnessing new technology to improve service efficiency is a key strategy of the NHSCSP. Desirable advances in cytology include improving sensitivity and specificity, and reducing human workload. The number of tests processed by the screening programme has dropped significantly in recent years owing to service improvement. The roll-out of liquid-based cytology (LBC), completed in 2008, has seen the number of inadequate samples (and the associated repeat testing) drop from 9% in 2004–5 to 2.9% in 2007–8. 2 The implementation of six sentinel sites for human papillomavirus (HPV) triage and test of cure around England has reduced the number of repeat tests taken by triaging women on the basis of their HPV results. Women attending for routine tests who are found to have a low-grade abnormality and a positive HPV result are referred directly to colposcopy without repeat cytology testing, and those who are HPV negative are returned to routine recall without cytological follow-up. 3 National roll-out of HPV triage and ‘test of cure’ would further reduce the amount of cytology, and allow women either to be diagnosed and, if necessary, treated more quickly, or to be returned to routine recall.
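The sentinel-site triage rule described above can be sketched as a small decision function. This is a minimal illustration only, assuming a woman attending for a routine test with a low-grade abnormality; the names `Action` and `triage_low_grade` are hypothetical and are not part of any NHSCSP system.

```python
from enum import Enum


class Action(Enum):
    """Possible outcomes of HPV triage (labels are illustrative)."""
    COLPOSCOPY = "refer directly to colposcopy"
    ROUTINE_RECALL = "return to routine recall"


def triage_low_grade(hpv_positive: bool) -> Action:
    """Triage a routine test showing a low-grade abnormality.

    HPV positive: referred directly to colposcopy without repeat cytology.
    HPV negative: returned to routine recall without cytological follow-up.
    """
    return Action.COLPOSCOPY if hpv_positive else Action.ROUTINE_RECALL


if __name__ == "__main__":
    for result in (True, False):
        print(f"HPV positive={result}: {triage_low_grade(result).value}")
```

The sketch deliberately covers only the low-grade pathway described in the text; other cytology results follow protocols that are not set out here.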

Current manual screening practice

Current programme guidelines recommend that all cytology is primary screened; slides reported as negative or inadequate receive a rapid review, and slides that are suspected to be abnormal are reviewed and reported by senior laboratory staff. 4 It is recommended that cytoscreeners do a maximum of 5 hours of microscopy work in a 24-hour period, with a complete break from the microscope at least every 2 hours. 5 With the introduction of LBC, rapid screening is carried out by screening staff performing a rapid review of the whole slide in 90 seconds. Current screening techniques are time-consuming and require a large and committed laboratory workforce. Despite the effectiveness of the screening programme, cytoscreeners have often felt under pressure, particularly when failures receive media attention.

Screening schedule and coverage

Currently, women aged 25–49 years are invited every 3 years, and women aged 50–64 years are invited every 5 years. 2 Of the 3.6 million women aged 25–64 years who were screened in 2008–9, around 6.7% received an abnormal result. 6 In the same period there were 134,000 referrals to colposcopy prompted by an abnormal screening result, 28.9% of which were for results of moderate dyskaryosis or worse,6 the remainder resulting from low-grade cytological abnormalities.
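The invitation schedule above can be expressed as a simple lookup. The following is a sketch under the stated age bands only; the function name `screening_interval_years` is hypothetical, and returning `None` for ages outside 25–64 years is an assumption made for illustration.

```python
from typing import Optional


def screening_interval_years(age: int) -> Optional[int]:
    """Return the routine invitation interval in years for a given age.

    Ages 25-49: every 3 years. Ages 50-64: every 5 years.
    Outside these bands no routine interval is returned (assumption).
    """
    if 25 <= age <= 49:
        return 3
    if 50 <= age <= 64:
        return 5
    return None


if __name__ == "__main__":
    for age in (24, 25, 49, 50, 64, 65):
        print(age, screening_interval_years(age))
```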

Despite the efficiency of the call–recall system, coverage for the year 2007–8 fell below 80% for the first time, at 78.6%. 7 There has been particular concern in recent years over the fall in attendance in the under-30s, although this trend was bucked during 2009 following the occurrence of cervical cancer in a media celebrity. A total of 3.6 million women aged 25–64 years were screened in 2008–9 compared with 3.2 million in 2007–8 – an increase of 11.9% with an increase in coverage to 78.9% [with a range of 65.8%–85.8% between primary care trusts (PCTs)]. 6 The durability of this increase will not be confirmed until the publication of screening statistics for tests taken in 2009–10.

Future programme considerations

Alongside the question of whether or not to implement automated screening, there are several organisational challenges that face the NHSCSP. In 2007 the Department of Health published the Cancer Reform Strategy. 8 This document recommended that in order to achieve the Government’s target of a 14-day turnaround time (from cervical sample being taken to the result being received by the woman), laboratories and screening offices should be reconfigured to make them larger and more efficient. Some laboratories currently operate as ‘hub and spoke’ with larger central laboratories processing the LBC samples and returning them to the smaller laboratories for screening. Amalgamation of smaller laboratories will see further changes to this service configuration. NHS pathology services as a whole across England are also under review by the Department of Health as part of the NHS Pathology Improvement Programme,9 which may have further implications for the NHSCSP’s laboratory infrastructure.

The HPV vaccination programme will also have an impact on screening once vaccinated girls enter the screening programme. (Girls are vaccinated at age 12–13 years; the programme began in September 2008, together with a 3-year catch-up campaign to vaccinate older girls aged 14–17 years.) Screening intervals and follow-up protocols will need to be reviewed once the evidence base regarding screening in a vaccinated population becomes clearer. The importance of following up the screening outcomes of recently vaccinated girls was stressed by the Advisory Committee on Cervical Screening (ACCS) during the review of current screening policy in women aged 20–24 years. 10 Following recommendations from the ACCS, the Department of Health decided against making any changes to current policy regarding screening in women aged 20–24 years. Instead, further education of general practice staff will ensure that symptomatic women aged < 25 years are assessed appropriately. 11

Liquid-based cytology

The conventional method of preparing cervical cells on a glass slide involved obtaining a sample from the cervix with a spatula, smearing it onto a glass slide and then fixing it. Fifty years on, this method is still widely used worldwide. The quality of the slide material is variable, with blood cells and mucus capable of obscuring the cervical cells, as well as cells being unevenly spread. This has led to a large number of slides being designated as ‘inadequate’ for reporting.

With LBC, the cervical sample is dispersed in a fluid medium which contains fixative. The liquid sample is then subjected to either a process which filters the cells onto a slide (ThinPrep® LBC, Hologic, Bedford, MA, USA) or cell enrichment [Becton Dickinson (BD) SurePath™ LBC, BD, Franklin Lakes, NJ, USA], producing a cleaner, more homogeneous preparation which facilitates examination of the cervical cells. In 2001–3 an NHSCSP pilot study was performed in England in order to evaluate LBC in comparison with conventional cytology in a historical population. The findings were that inadequate samples were reduced from around 7%–8% to around 1%, that LBC was certainly not less sensitive than conventional cytology and possibly more so, that laboratory throughput was more efficient, and that laboratory staff preferred LBC. 12 LBC was determined to be cost-effective and meant that far fewer women were recalled because of an ‘inadequate’ smear. The National Institute for Health and Clinical Excellence (NICE) recommended its adoption13 and between 2003 and 2008 LBC was rolled out nationally across the entire UK.

Two of the critical differences between LBC and conventional cytology are (1) reading of LBC slides can be automated using the technology being evaluated in the Manual Assessment Versus Automated Reading In Cytology (MAVARIC) study and (2) the LBC residue can be used for real-time reflex testing such as HPV testing to triage low-grade cytological abnormalities. The adoption of LBC provided the means for a more efficient cytology service, enabling both triage and the potential to move to automated technology if that were shown to be cost-effective.

Automated technologies

Development of technologies

Two US Food and Drug Administration (FDA)-approved automated machines were developed in the 1990s, the AutoPap® 300 QC (NeoPath, Redmond, WA, USA) and the PapNet® (Neuromedical Systems Inc., Suffern, NY, USA), both systems being designed to work with conventional cytology slides. AutoCyte had also developed a machine known as the AutoCyte-Screen which was able to read AutoCyte-Prep slides (now BD SurePath LBC). Despite the initial promise of the technology none of these machines is now available. AutoCyte and NeoPath merged to form TriPath Imaging Inc. (Burlington, NC, USA) and discontinued both the AutoCyte and the AutoPap 300 QC, replacing the systems with the AutoPap Primary Screening System, which is now known as the BD FocalPoint™ GS Imaging System (BD Diagnostics, Franklin Lakes, NJ, USA).

There are currently two commercially available FDA-approved automated screening systems: the BD FocalPoint GS Imaging System and the ThinPrep Imaging System (Hologic™, Bedford, MA, USA). The BD FocalPoint Slide Profiler scans the slides and assigns each one a rank according to the likelihood of there being abnormal cells present. The slides are assigned to quintiles, with quintile 1 containing the highest ranking slides. The machine also categorises slides into one of four categories: review (comprising quintiles 1–5), no further review (NFR; up to 25% of slides), process review (indicating a technical problem) and quality control review (requiring a full screen). NFR designates the 25% of slides least likely to contain an abnormality which could be reported as negative and archived without human reading. Slides that are flagged for review by the system are examined by screening staff using the BD FocalPoint Guided Screener Workstation (previously known as TriPath Slide Wizard®). This comprises a standard screening microscope fitted with an electronic stage linked to a desktop computer. The Workstation directs screening staff towards 10 electronically marked fields of view (FOVs) on the slide. If abnormal cells are seen in any of the FOVs the entire slide is screened and appropriate action taken in line with laboratory protocols. The BD FocalPoint Guided Screener (GS) Imaging System has received FDA approval to scan both conventional and BD SurePath LBC slides.
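The ranking logic described above can be illustrated with a short sketch. It is not the vendor's algorithm: the numeric abnormality score, the function name `classify_slides` and the equal-sized quintile split are all assumptions made for illustration, and the process review and quality control review categories are omitted.

```python
from typing import Dict


def classify_slides(scores: Dict[str, float],
                    nfr_fraction: float = 0.25) -> Dict[str, str]:
    """Label slides as 'NFR' or 'review Q1'..'review Q5'.

    scores: hypothetical abnormality score per slide (higher = more suspicious).
    nfr_fraction: maximum share of slides that may be labelled NFR.
    """
    # Rank slides from most to least suspicious.
    ranked = sorted(scores, key=scores.get, reverse=True)

    # Floor the NFR count so it never exceeds the stated maximum share.
    n_nfr = int(len(ranked) * nfr_fraction)
    n_review = len(ranked) - n_nfr
    review, nfr = ranked[:n_review], ranked[n_review:]

    labels = {slide: "NFR" for slide in nfr}
    # Split the review slides into five ranked groups; Q1 ranks highest.
    for position, slide in enumerate(review):
        quintile = position * 5 // len(review) + 1
        labels[slide] = f"review Q{quintile}"
    return labels


if __name__ == "__main__":
    demo = {f"slide{i}": float(i) for i in range(20)}  # made-up scores
    for slide, label in sorted(classify_slides(demo).items()):
        print(slide, label)
```

With 20 slides, the five lowest-scoring slides are labelled NFR and the remaining 15 are divided into five review groups of three.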

In contrast, the ThinPrep Imaging System is designed to work with ThinPrep LBC slides (stained with the Hologic Imager stain) alone. The ThinPrep Imaging System scans all of the slides and selects 22 FOVs which are presented to screening staff on the review scope. The review scope comprises a Hologic automated screening microscope with a motorised stage to guide screeners to each of the 22 FOVs. If an abnormality is suspected in any of the 22 FOVs then a full screen of the slide is undertaken. Unlike the BD FocalPoint GS Imaging System, the ThinPrep Imaging System does not assign scores to slides and is therefore unable to rank and select slides for archiving without further intervention, or to select slides for quality control (QC) reviewing.

Capability of automated cytology

Two systematic reviews have been published on the potential of automated screening technologies. 14,15 A review commissioned by the Health Technology Assessment (HTA) programme and published in 2005 concluded that there was a need for rigorous, unbiased public sector research into the effectiveness of automated screening technologies. 14 One drawback of this review was that the majority of the papers included relate to the now obsolete PapNet and AutoPap 300 QC systems. An earlier review by the New Zealand HTA programme reached a similar conclusion and recommended large-scale prospective trials to be conducted under normal laboratory conditions with reliable gold standards for diagnostic verification. 15 This review also focused on technologies that are no longer commercially available. As yet there have been no systematic reviews that focus on the two currently available technologies which are under appraisal in the MAVARIC study.

Table 1 summarises previous ‘controlled’ studies, in which there was a general pattern of increased rates of abnormality detection in the automated arm. The studies are, however, characterised by methodological weaknesses including the use of outdated systems, using split samples, the use of manually read conventional (as opposed to liquid-based) cytology, using the same slide set for retrospective comparative readings and not reporting histological outcomes. There has not been a single rigorous prospective randomised comparison of manual and automated reading which has been specifically powered to show superiority or non-inferiority, in terms of detection of any lesion of cervical intraepithelial neoplasia grade II (CIN2) or worse (CIN2+).

| Study and design | Comparison groups | CIN detection rates | Sensitivity/Specificity | PPV/NPV |

|---|---|---|---|---|

| Halford et al. 16 | ||||

| Prospective two-armed masked study. Histology taken within 6 months of the Pap smear was used as the reference standard | 87,284 split sample conventional slides read manually and ThinPrep LBC slides read with the ThinPrep Imaging System. Biopsy data were available for 1083 HSIL lesions | Automated-LBC reading showed a 3.2% increase in possible high-grade and HSIL reports compared with manually reading conventional slides | For ASCUS+ the sensitivity of automated was 96.0% and manual 91.6% (p = 0.001) | For 1083 biopsy confirmed HSIL cases automated was correct in 61% of cases and 59.4% on manual (p = 0.05) |

| Wilbur et al. 17 | ||||

| Prospective two-armed masked study. Truth adjudication used as the gold standard | 12,313 slides screened using both the BD FocalPoint GS Imaging System’s FOV and QC and manually with manual QC | Not given | HSIL+ sensitivity 85.3% in automated arm and 65.7% in manual (p < 0.0001) with a 2.6% decline (p < 0.0001) in specificity. LSIL+ sensitivity 86.1% automated and 76.4% in manual (p < 0.0001) with a 1.9% decline (p = 0.0032) in specificity. ASCUS+ sensitivity and specificity were not significantly different between the two arms | NPV of a not HSIL+ slide in the automated arm was 99.7% and 99.4% in the manual arm |

| Pacheco et al. 18 | ||||

| Retrospective analysis comparing samples taken during the first 6 months of both 2004 and 2005. Final and initial diagnoses on the same slide were compared for the analysis | 79,791 manually screened ThinPrep slides and 76,887 slides screened with the ThinPrep Imaging System | Number of diagnosed HSIL cases increased from 0.46% to 0.78% with use of the ThinPrep Imaging System (p < 0.01) | Not given | Not given |

| Papillo et al. 19 | ||||

| Retrospective comparison study with biopsy data collected for 64% of HSIL cases | 55,547 ThinPrep Imaging System slides and 54,565 manually read LBC slides | LSIL cytology significantly increased by 29%, HSIL by 54% | Not given | Not given |

| Passamonti et al. 20 | ||||

| Routine consecutive conventional Pap slides prospectively processed on the BD FocalPoint GS Imaging System. Histology was obtained for 67% of slides showing abnormalities | 37,306 conventional Pap slides processed and screened using the BD FocalPoint GS Imaging System. All slides then received a manual rapid screen before the results were compared | 91% of CIN2+ cases were ranked in high-risk quintiles along with 93% of CIN1. 97% of HSIL+ and 98% of LSIL slides were triaged for a full manual review by screening the FOVs | Not given | Not given |

| Lozano 21 | ||||

| Retrospective comparison with biopsy data collected for all HSIL+ samples | 39,717 ThinPrep Imaging System slides and 87,262 manually read LBC slides | HSIL+ cytology significantly increased by 38% and LSIL by 46% | Not given | PPV of HSIL for CIN2+ = 83% for automated and 84% for manual. HSIL for CIN1+ = 98% for automated and 96% for manual |

| Troni et al. 22 | ||||

| Concurrent cohorts retrospectively identified with a negative screen at baseline. Screening modality at repeat smear was independent of the baseline screen. All subjects with CIN2+ at repeat screening were identified | AutoPap Primary Screening System 300 using conventional slides compared with manually read conventional slides. 33,646 women at baseline, 30,658 of whom returned for repeat screening. 30% randomised to manual reading | No significant difference in CIN2+ detection at repeat screening when comparing baseline automated and manual cohorts | Not given | Not given |

| Miller et al. 23 | ||||

| Two consecutive cohorts. Biopsy data were used as the reference standard for ASCH+ | 82,063 manually read ThinPrep slides, 84,473 slides read with the ThinPrep Imaging System | Significant decrease in ASCUS (15.56%) in the automated cohort along with a significant increase in LSIL (37.62%) and HSIL (42.42%) | Not given | Not given |

| Davey et al. 24 | ||||

| Prospective study using split sample pairs. Histology results were obtained for discordant pairs | 55,164 split samples – ThinPrep Imaging System compared with manually read conventional slides | Significantly fewer inadequates in the automated arm (1.8% vs 3.1%). Automated detected 1.29 more cases of histologically confirmed high-grade disease per 1000 women and classified 8.6 more slides as low grade per 1000 women | Not given | Not given |

| Schledermann et al. 25 | ||||

| Comparative study with three distinct phases: manual screening, automated screening training and routine automated screening. All abnormal slides discussed with senior pathologists | 11,354 slides in total to compare ThinPrep Imaging System read slides during training and routine use with manually read LBC slides | Not given | During routine use the sensitivity of the ThinPrep Imaging System was 93.3% and the specificity 97.6% | Not given |

| Roberts et al. 26 | ||||

| Three-armed trial. The worst histopathology result within 9 months of the end of the trial was collected | 11,416 split sample ThinPrep and conventional slides. ThinPrep slides read both manually and with the ThinPrep Imaging System. Conventional slides read manually | 14 false-negatives in the ThinPrep Imaging System arm, nine in the ThinPrep manual arm and 28 in the conventional arm | Sensitivity for reporting high-grade disease = 86.8% in the ThinPrep manual arm and 81.1% in the ThinPrep Imaging System arm | No significant difference between the PPV of the ThinPrep Imaging System arm and both the ThinPrep and conventional manual arms for high-grade reports |

| Dziura et al. 27 | ||||

| Two consecutive cohorts. All available biopsy data collected for ASC-H and HSIL | 27,525 manually screened ThinPrep slides and 27,725 ThinPrep Imaging System read slides | 29% increase in ASCUS detection, 50% increase in ASC-H detection, 30.7% increase in LSIL detection and 20% increase in HSIL detection in ThinPrep Imaging System arm (all significant). Also an increase in ASC-H (11.7%) and HSIL (8.9%) samples showing HSIL on biopsy in ThinPrep Imaging System arm (not significant) | Not given | Not given |

| Bulgaresi et al. 28 | ||||

| An evaluation of rapid review of slides designated NFR as a QC procedure. ASCUS–SIL+ samples were reviewed before referral. Negative colposcopy or biopsy used as the gold standard | 24,503 slides classified as NFR by the AutoPap Primary Screening System 300 | 98.6% of slides reviewed as negative, 0.4% as inadequate, 0.4% as ASCUS-R and 0.12% (31 cases) as ASCUS–SIL+ | Not given | Estimate of 99.99% NPV for NFR based on a 51.6% compliance rate with repeat cytology and 83.3% with colposcopy referral |

| Biscotti et al. 29 sponsored by Cytyc | ||||

| Two-armed comparison. Slides received an automated read by the same member of staff 48 days after the manual read. Screeners blinded to the manual read results. Cytological truth adjudication on all non-negative and 5% of negative slides | 9550 slides included in the analysis that had been read both manually and by the ThinPrep Imaging System | Not given | Sensitivity for LSIL+ = 79.7% for manual and 79.2% for automated, for HSIL+ = 74.1% for manual and 79.9% for automated. Specificity for LSIL+ = 99.0% for manual and 99.1% for automated, for HSIL+ = 99.4% for manual and 99.6% for automated | Not given |

| Parker et al. 30 sponsored by TriPath Imaging | ||||

| Two-armed retrospective masked study. Discrepant results screened by a single cytopathologist | 1275 SurePath slides seeded with abnormals. Screened manually with 10% QC and with BD FocalPoint GS Imaging System with NFR slides classed as WNL and review slides screened and triaged to WNL or requiring full screen | 58% of HSIL+ slides ranked in Q1 and 83% in Q1 and Q2. All HSIL slides were ranked as review | Not given | Not given |

| Stevens et al. 31 | ||||

| Two-armed retrospective study. Truth was taken as a concordant diagnosis. Discrepant pairs reviewed by a discrepancy panel | 6000 conventional slides screened manually and with the AutoPap Primary Screening System using PapMaps | AutoPap identified 35 additional abnormal slides, but missed 92 (94.5% of which were low grade). The difference between low-grade detection in the two arms was significant. AutoPap was equivalent to manual for the detection of high-grade abnormalities. NFR correctly identified 975/986 slides as normal | Not given | Not given |

| Ronco et al. 32 | ||||

| Retrospective comparison, with the result of the manual read taken as the gold standard | 481 conventional slides read manually then reviewed several months later by the same cytotechnologist using PapMaps | Not given | Sensitivity of PapMaps for selecting abnormal slides = 100% for SIL and 80% for ASCUS | Not given |

| Confortini et al. 33 | ||||

| Retrospective comparison with histology obtained from punch and loop biopsies. The worst result was used as the gold standard | 14,145 conventional slides read manually then rescreened (unless classified as NFR) 3–4 days later by the same cytotechnologist using PapMaps with the AutoPap Primary Screening System | Not given | AutoPap and manual reading are equivalent in terms of sensitivity. The AutoPap had a slightly higher specificity than manual reading | Not given |

| Wilbur et al. 34 supported by TriPath Imaging | ||||

| Two-armed retrospective, masked study. Cytological truth adjudication taken as the gold standard | 1275 AutoCyte PREP slides (seeded with known abnormals) read manually and with the AutoPap system using the Slide Wizard 2 | False-positive rate was 3.8% for AutoPap and 4.4% for manual | Sensitivity of AutoPap for truth determined HSIL+ = 98.4% and manual 91.1%. Specificity of AutoPap = 96.1% and manual 95% | Not given |

| Vassilakos et al. 35 | ||||

| Two-armed comparison study using the manual reading as the gold standard | 8688 AutoCyte PREP slides read manually and compared with the AutoPap Primary Screening System’s review rankings | 47.4% of LSIL slides were in Q1, 20.8% in Q2, 10.6% in Q3, 10.1% in Q4, 5.3% in Q5 and 5.8% in NFR. 85.2% of HSIL slides were in Q1, 12.7% in Q2, 2.1% in Q3. 0% were in Q4, Q5 and NFR. 84% of all abnormalities were in the highest scoring group along with 100% of HSIL | Not given | Not given |
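The accuracy measures reported in Table 1 (sensitivity, specificity, PPV and NPV) all derive from a 2 × 2 table of test result against reference standard. The following minimal sketch shows the standard definitions; the class name `TwoByTwo` is hypothetical and the counts in the example are made up for illustration, not taken from any of the studies above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Counts of test result against the reference standard."""
    tp: int  # test positive, disease present
    fp: int  # test positive, disease absent
    fn: int  # test negative, disease present
    tn: int  # test negative, disease absent

    def sensitivity(self) -> float:
        """Proportion of diseased cases that the test detects."""
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        """Proportion of disease-free cases that the test clears."""
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        """Proportion of positive tests that are truly diseased."""
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        """Proportion of negative tests that are truly disease free."""
        return self.tn / (self.tn + self.fn)


if __name__ == "__main__":
    # Made-up counts for illustration only.
    table = TwoByTwo(tp=90, fp=30, fn=10, tn=870)
    print(f"sensitivity={table.sensitivity():.3f}")
    print(f"specificity={table.specificity():.3f}")
    print(f"PPV={table.ppv():.3f}  NPV={table.npv():.3f}")
```

Because PPV and NPV depend on disease prevalence in the population studied, values from seeded slide sets and from routine screening cohorts are not directly comparable.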

Productivity and cost-effectiveness

Automation affects the staff time spent reviewing slides in the laboratory, with the potential for cost savings, but the automated equipment also brings additional costs. The HTA programme’s systematic review concluded that there were productivity gains associated with automation when compared with manual reading with conventional cytology. 14 Studies published since, which have evaluated the cost and productivity implications associated with using the ThinPrep Imaging System and BD FocalPoint GS Imaging System, have suggested that automation results in both increased productivity and increased costs. In all studies the authors found that automation resulted in an increase in productivity of at least 50%, 25,26,29 with the largest reported increase being 56%. 32 A study based in Italy which estimated the costs associated with automated screening concluded that similar costs to manual screening could be achieved only if 60,000 samples per year were processed by the AutoPap Primary Screening System (now BD FocalPoint GS Imaging System) with a 30% NFR rate. 33

There is also a lack of rigorously evaluated data relating to the incremental cost-effectiveness of automated screening compared with manual reading. The HTA programme’s systematic review concluded that there were insufficient data to draw any conclusions regarding the cost-effectiveness of automated screening and acknowledged that the papers included in the review did not consider the effect of combining LBC with the technologies. 14

Other current trials of automated screening

Becton Dickinson FocalPoint Guided Screener Imaging System

Currently, there are two ongoing evaluations in the UK involving the BD FocalPoint GS Imaging System. Cervical Screening Wales began an evaluation in 2006 to assess the utility of the BD FocalPoint GS Imaging System for QC by comparing the 10 FOVs with the current manual QC method. The technology has been used as an additional QC tool. All slides were then manually primary screened. This evaluation has since been extended to include four laboratories across Wales, and was due to be completed by March 2010. A similar evaluation was also undertaken at Derby City Hospital. This study was completed in early November 2009 and included over 40,000 slides. In both studies the slides were sent to Source Bioscience’s (formerly Medical Solutions) laboratory in Nottingham for scanning, with the images being read remotely at the trial sites (Wilma Anderson, Source Bioscience Plc., 2010, personal communication).

ThinPrep Imaging System

The Scottish Government Health Department has commissioned a feasibility study of the ThinPrep Imaging System which began in 2008 and aims to compare 40,000 manually read ThinPrep LBC slides with 40,000 ThinPrep Imaging System read slides. The trial has been running in six laboratories – two laboratories processing and reviewing ThinPrep Imaging System slides plus four remote reviewing laboratories. 36 The analysis of the first two phases of the study showed that the ThinPrep Imaging System performed as well as manual screening. 37 The results of phase 3 of the study involving the Review Scope Plus are described in Chapter 4. There are three further feasibility studies taking place in England: one based in Ashford and a second based in Taunton; a QC evaluation study is also taking place in Northampton General Hospital (Glenn Weatherley, Hologic, 2009, personal communication).

The characteristics of further studies involving the ThinPrep Imaging System that are ongoing worldwide are summarised in Table 2.

| Site | Sample size | Type of study | Control | Intervention |

|---|---|---|---|---|

| Rheinland Pfalz and Saarland, Germany | 20,000 | Clinical trial | Manually screened ThinPrep LBC slides | ThinPrep Imaging System |

| Cologne, Germany | 984,509 | Retrospective study | 890,090 conventional Pap tests | 94,419 ThinPrep LBC slides read with the ThinPrep Imaging System |

| Cerba Laboratories, France | Not known | Internal evaluation | Not known | ThinPrep Imaging System |

| Ieper, Belgium | c.18,000 in first year of study | Evaluation study | Manually screened ThinPrep LBC slides | ThinPrep Imaging System |

| Abruzzo, Italy | Not known | Clinical trial | Conventional Pap tests | ThinPrep Imaging System and BD FocalPoint Imaging System |

Human papillomavirus testing

Epidemiology of human papillomavirus

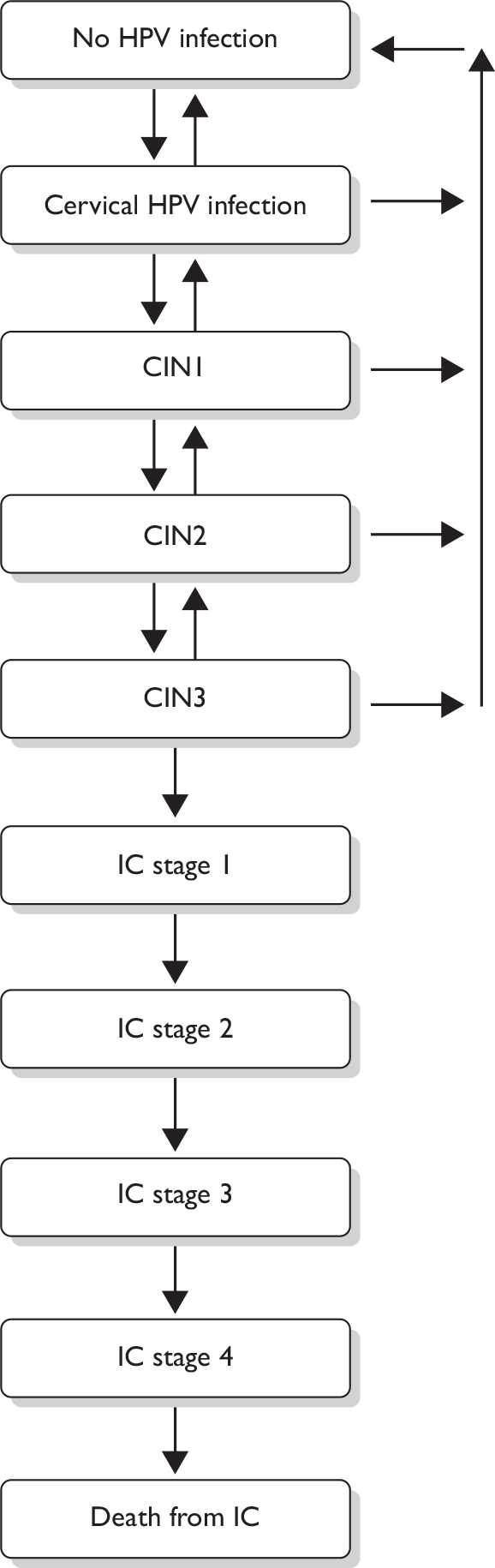

It is now universally accepted that HPV infection by so-called ‘high risk’ types is essential for the process of cervical carcinogenesis. 38 There are > 100 different HPV types based on differences in genetic sequences. Of these, > 20 oncogenic types are associated with cervical cancer and, of these, type 16 alone is thought to be responsible for up to two-thirds of all cases. 39 Types 16, 18, 31, 33 and 45 are probably responsible for almost 90% of cervical cancers. 40 HPV including all high-risk types is considered to be responsible for virtually 100% of cervical cancer. 38 There are two crucial implications from this. The first is that prevention of high-risk HPV infection will prevent the chain of events that leads to cervical cancer, which has resulted in the production of prophylactic vaccines based on virus-like particles. 41,42 Beginning in 2008, a prophylactic vaccination programme against types 16 and 18 was established across the UK, aimed at girls aged 12–13 years, with a one-off catch-up programme over 3 years to vaccinate girls aged 14–18 years. The second has been the development of HPV tests which can be used diagnostically. The rationale for these is that women who test HPV-negative are not at risk of cervical neoplasia, and so HPV testing can be used to distinguish HPV-positive women who are at risk from the HPV-negative women who are not.

Current technologies

The first HPV deoxyribonucleic acid (DNA) test to receive FDA approval was the Digene high-risk HPV Hybrid Capture® 2 (HC2) test (Qiagen, Crawley, UK), which tests for a cocktail of 13 high-risk types, can be used with the liquid cytology medium and does not require a polymerase chain reaction (PCR) step to amplify the viral DNA. This test has become the current standard against which emerging tests need to be compared in terms of sensitivity and specificity. It is the test currently used in the NHSCSP sentinel sites protocol both for triage and for test of cure for cervical intraepithelial neoplasia (CIN)-treated women, and was adopted for the MAVARIC study (see Triage and test of cure). New tests have been developed and others are under development. The new tests rely on PCR and most test for DNA, but two test for ribonucleic acid, believed by the manufacturers to achieve greater clinical specificity. Another feature of several new tests is the ability to genotype HPV, with the intention of adding specificity to clinical testing by identifying types such as type 16 which are most strongly associated with high-grade CIN. Some testing kits will combine generic testing for a mixture of high-risk types, with restricted genotyping. Others will rely solely on genotyping. The full potential of HPV testing for cervical screening has yet to be realised.

Triage and test of cure

Triage was employed to achieve maximal detection of underlying CIN2+ in the MAVARIC trial. The use of HPV testing to triage women with low-grade cervical cytology has already been referred to above. Various studies have demonstrated the value of HPV triage in terms of avoiding the need for colposcopy for HPV-negative women as well as increasing the relative sensitivity for detecting CIN2+ compared with repeated cytology. 43–45 These benefits of HPV triage were demonstrated in the NHSCSP pilot study, although it did result in an increase in rates of colposcopy referral. 46 These benefits included immediate colposcopy referral, avoiding failure by women to comply with repeat cytology, and increased rates of CIN2+ suggesting that either CIN was being diagnosed more rapidly or triage was more sensitive than repeat cytology, or indeed an element of both.

Test of cure is a term coined for HPV testing following treatment of CIN. A process of long-term cytological surveillance has evolved which has resulted in 10-year annual cytological follow-up in England for treated women found to have CIN2+. Test of cure using HPV testing exploits its high negative predictive value (NPV) to identify the large majority of women who are HPV negative following treatment (and who are therefore at very low risk) and allow them to be returned to routine recall. An assessment of HPV testing as test of cure in the NHS system was undertaken in a recently published study of 900 treated women. 47 The incidence of cytological abnormality over 2 years among women who were cytology negative and HPV negative at 6 months was sufficiently low to recommend return to routine recall. This would save many thousands of women multiple annual follow-up cytology tests, and this approach has been incorporated into the current sentinel sites protocol. Some samples in MAVARIC underwent HPV test of cure as part of this protocol.

Primary screening

Primary screening using HPV testing is not relevant to the MAVARIC study, which is based on primary screening by cytology. Nonetheless, there is a strong rationale for considering a move to HPV testing in the future based on three considerations:

- greater sensitivity than cytology
- the potential for increased screening intervals
- greater throughput efficiency than cytology.

It should be recognised that the NHSCSP is extremely effective, based as it currently is on cytology. In the future, however, the majority of screened women will have been vaccinated, strengthening the rationale for HPV as the initial test. Published randomised trials indicate that HPV and cytology combined do not increase the overall detection of CIN2+ and CIN grade III (CIN3) or worse (CIN3+) over two successive rounds of screening,48–50 but HPV as a single initial test could be a cost-effective means of screening if suitable strategies can be developed to manage HPV-positive women. Such strategies could combine reflex cytology, HPV genotyping and biomarkers.

Chapter 2 Study design and methods

Aims and objectives of the MAVARIC study

The principal aim of MAVARIC was to compare ‘automation-assisted’ reading with manual reading in cervical screening in terms of effectiveness and cost-effectiveness in the detection of CIN2+, which defines lesions which are treated in the prevention of cervical cancer. This necessitated a randomised design in order to achieve an unbiased comparison and to allow all primary cytology to be read manually as this is the current standard. The first objective required cytology staff to be unaware of whether they were reading a slide which would be read only manually or by automation-assisted reading backed up by manual reading. The second objective was therefore to create a framework for initial reporting by one method blinded to the result of the other method. The third objective was to accommodate both LBC platforms being used in the NHSCSP: ThinPrep and SurePath. Each of these uses different automated technology – ThinPrep LBC uses the ThinPrep Imaging System and BD SurePath LBC uses the BD FocalPoint GS Imaging System. The fourth objective was to ensure that cytology randomised between manual and automation, and that assessment by the BD FocalPoint GS Imaging System and the ThinPrep Imaging System was comparable in terms of abnormality rates; to achieve this the general practices generating the cytology were stratified by the Townsend Index of Deprivation. A fifth objective was to be able to achieve as rapid and complete a confirmation of clinical outcomes as possible. HPV triage was used to select women with low-grade cytology for colposcopy referral in order to avoid the delays and failure to comply associated with repeat cytology which could lead to non-detection of underlying CIN.

The primary outcome was the relative sensitivity of screening by automated or manually read cytology to detect CIN2+. The relative sensitivity to detect CIN3+ was also determined.
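As an illustration of the primary outcome, relative sensitivity in a paired comparison can be sketched as the ratio of CIN2+ cases detected by automated reading to those detected by manual reading. The text does not spell out the estimator, so this ratio is an assumption, and the function name and the counts below are hypothetical rather than trial data.

```python
def relative_sensitivity(both: int, auto_only: int, manual_only: int) -> float:
    """Ratio of CIN2+ cases detected by automated reading to those
    detected by manual reading (assumed estimator, not from the source)."""
    detected_auto = both + auto_only      # cases flagged by the automated read
    detected_manual = both + manual_only  # cases flagged by the manual read
    if detected_manual == 0:
        raise ValueError("no cases detected by manual reading")
    return detected_auto / detected_manual


# Hypothetical counts for illustration only.
print(relative_sensitivity(both=80, auto_only=5, manual_only=15))
```

A value below 1 would indicate that automated reading detected fewer CIN2+ cases than manual reading among the same slides.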

Other outcomes – clinical:

- The detection rates of CIN2+ and CIN3+ in the manual-only and paired arms.
- The detection rates [positive predictive values (PPVs)] for each category of cytology including the threshold of borderline or greater and mild dyskaryosis or greater following HPV triage.
- Relative specificity of screening by automated and manual reading.
- All of the above comparing the BD FocalPoint GS Imaging System with the ThinPrep Imaging System using BD SurePath LBC and ThinPrep LBC respectively.
- The reliability of NFR in the BD FocalPoint GS Imaging System in terms of NPV using manual reading in the paired reading as the reference standard.
- To determine the inadequate rates with both technologies.
- To determine how automated reading compares with manual reading when used in conjunction with HPV triage of low-grade abnormalities.
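The predictive values named in the list above can be sketched as simple proportions. This is a minimal illustration assuming the standard definitions; the function names and example counts are hypothetical, and the denominators are taken to be slides with a known outcome or a known manual result.

```python
def ppv(cin2_plus_cases: int, slides_in_category: int) -> float:
    """Proportion of slides in a cytology category that proved to be CIN2+."""
    if slides_in_category <= 0:
        raise ValueError("category must contain at least one slide")
    return cin2_plus_cases / slides_in_category


def npv_of_nfr(nfr_manual_negative: int, nfr_slides: int) -> float:
    """Proportion of NFR slides that manual reading (the reference
    standard here) also reported as negative."""
    if nfr_slides <= 0:
        raise ValueError("there must be at least one NFR slide")
    return nfr_manual_negative / nfr_slides


# Hypothetical counts for illustration only.
print(ppv(30, 200), npv_of_nfr(990, 1000))
```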

Other outcomes – economics and organisational:

- Comparative throughput and reporting times (for each stage of screening).
- Detailed cost estimates of the total cost of processing samples at the laboratory and total cost per sample including consideration of inadequate rates and using NFR at different cut-off levels.
- Estimate of the comparative cost-effectiveness of automated versus manually read cytology using trial data and modelled lifetime costs and effects.
- Assessment of cytoscreeners’ experience and satisfaction with automated systems and the organisational changes that automation would require in implementation.

Trial design

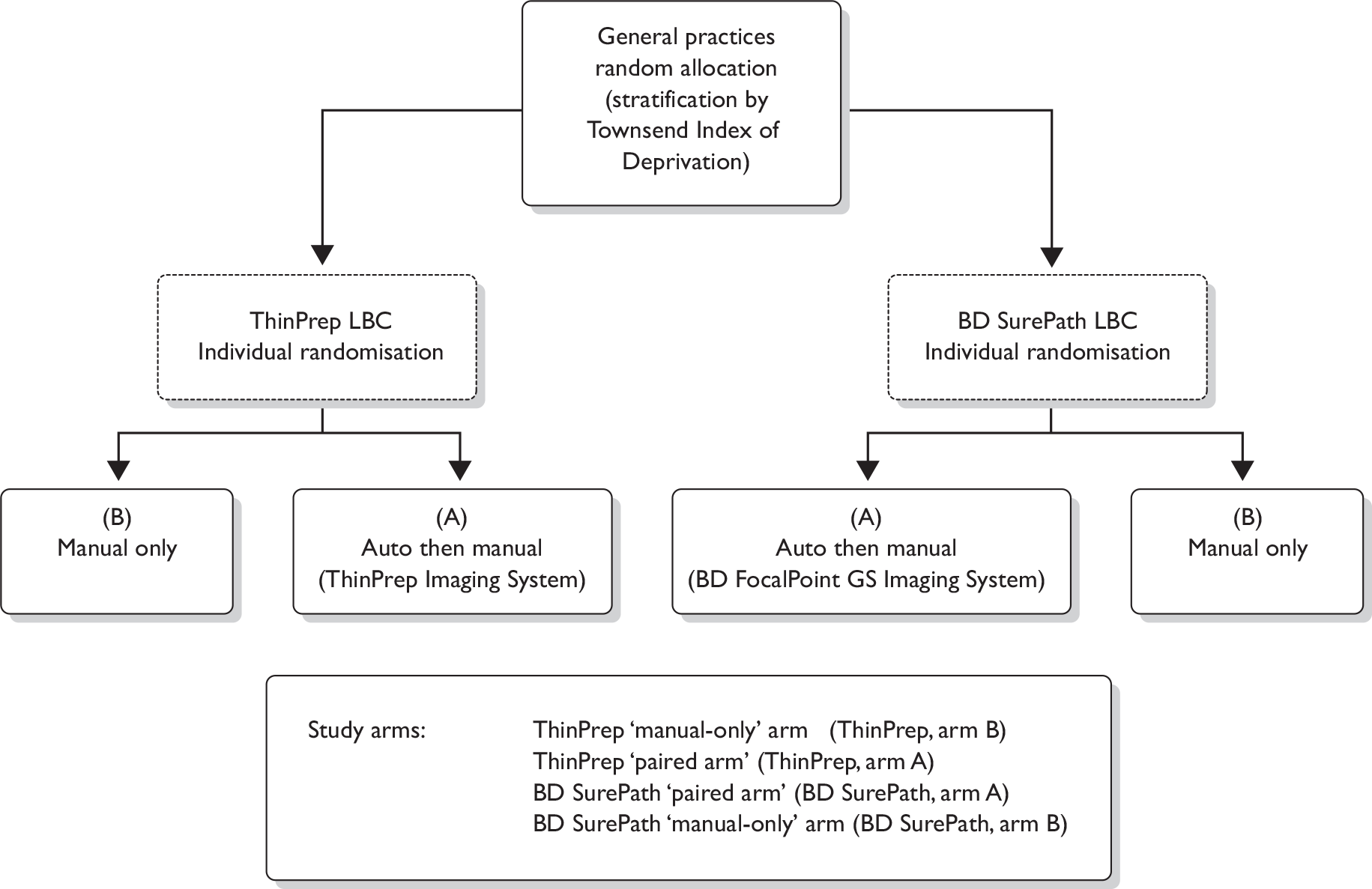

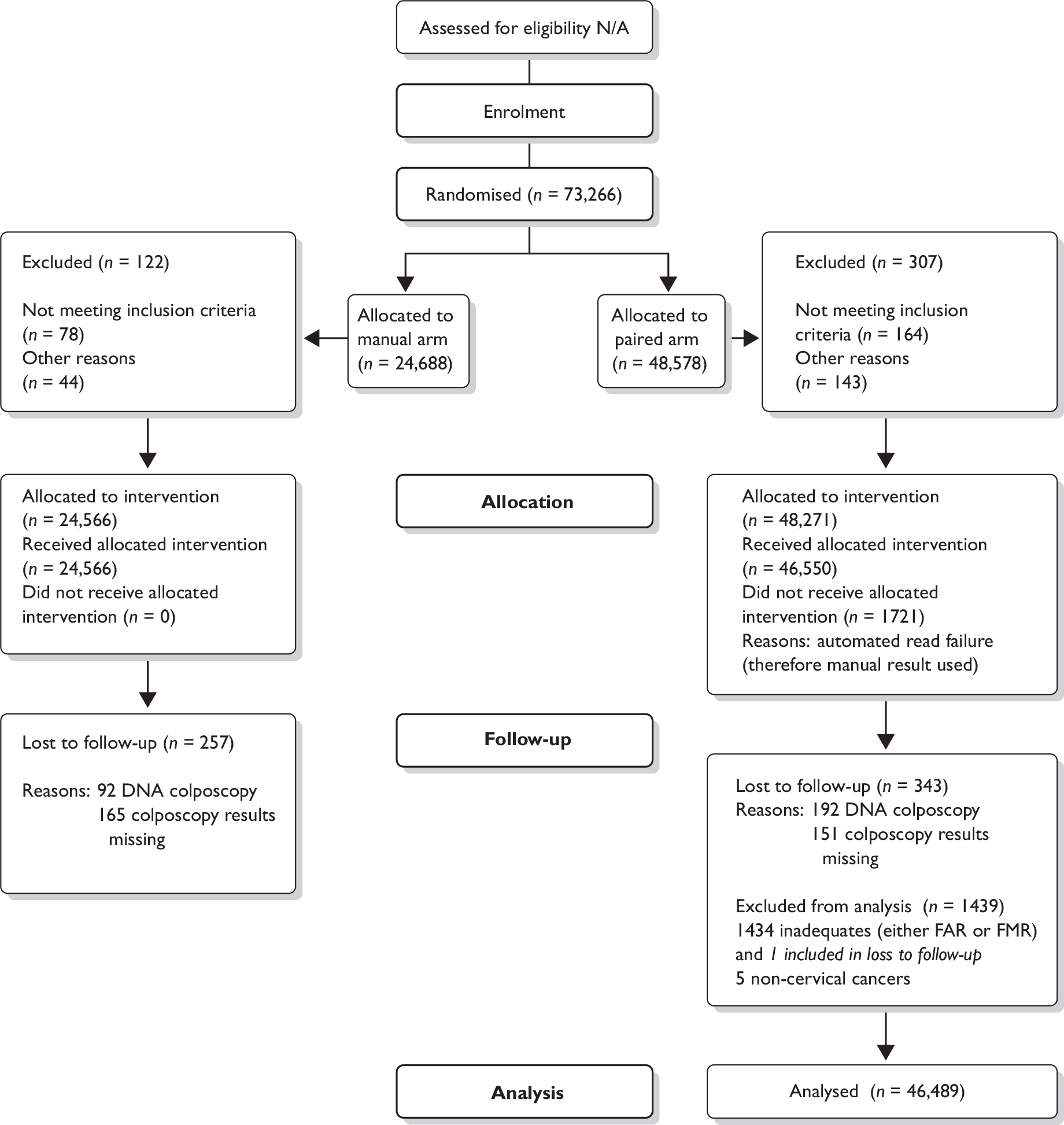

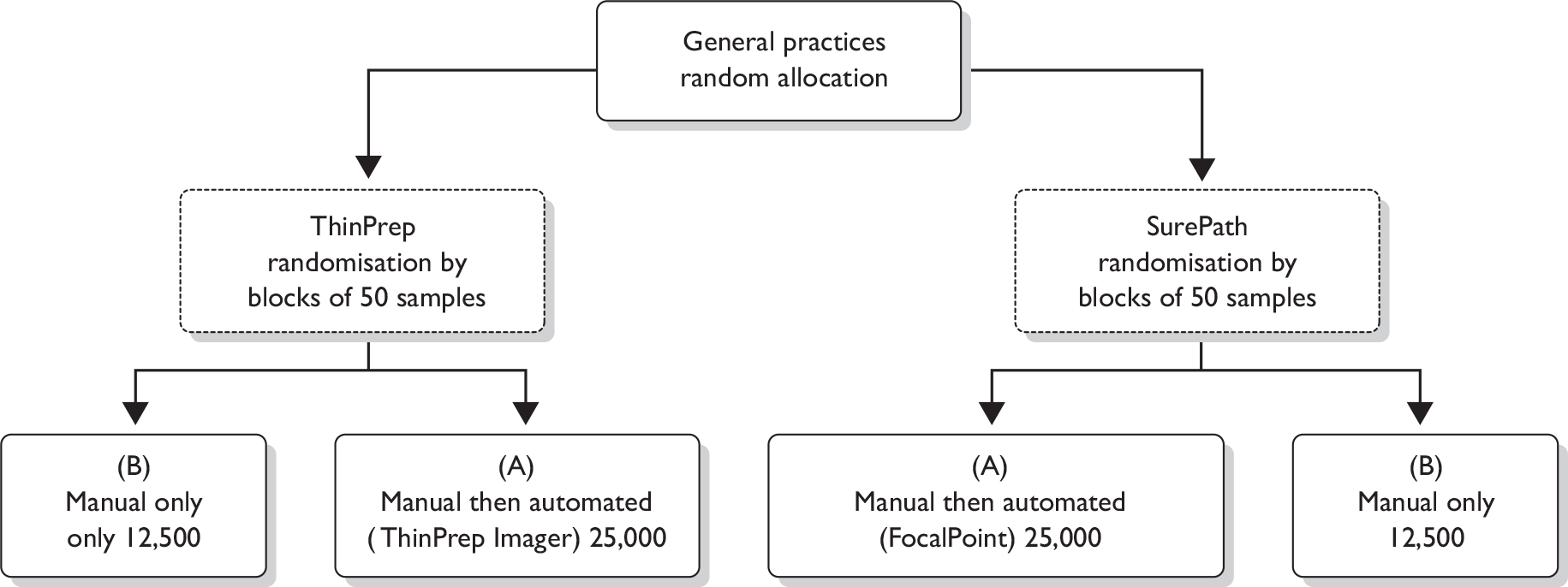

Randomisation of technologies

Initial cluster randomisation between technologies was performed at the general practice level (Figure 1) because it was not feasible for both cytology systems to be used within a single practice. The overall aim of this randomisation was to ensure, as far as possible, that sources allocated to the two systems should have similar population numbers and include women with similar underlying risk. Randomisation was stratified by PCT to take account primarily of variation in Townsend Deprivation Score, but also in ethnic minority composition and screening interval. Sources within each PCT were assumed to have closer levels of these risk indicators. Community clinics were included as a separate stratum. There were a total of nine PCT strata; seven consisted of one PCT where the PCT was expected to contribute large numbers and two strata consisted of more than one PCT (grouped by high or low deprivation) for PCTs where only a small number of women were expected to contribute [i.e. contributing fewer general practitioner (GP) practices]. Owing to the variation in population size, the sources were ordered by decreasing size (number of women) within each PCT and block randomisation of four sources at a time ensured that similar numbers from each PCT were allocated to each of the two techniques.

FIGURE 1. Randomisation flow chart.

The numbers 1–6 in Table 3 show the various possible allocations of two As and two Bs within a block of four where A coded for ThinPrep LBC and B for BD SurePath LBC.

| Block number | Source allocation |
|---|---|
| 1 | AABB |
| 2 | ABAB |
| 3 | ABBA |
| 4 | BBAA |
| 5 | BABA |
| 6 | BAAB |

For each PCT stratum the sources were therefore ordered by decreasing size and a series of random digits were generated, with each digit giving the randomisation for a block of four of the sources. For example, in a series of random digits such as 21234, the first number, 2, allocated the first four sources in the PCT to ABAB, the number 1 the next four to AABB and so on until all the sources in the PCT had been allocated to A (ThinPrep LBC) or B (BD SurePath LBC).
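The allocation procedure described above can be sketched as follows. The block patterns come from Table 3; the function name, the source names and the sizes are hypothetical. How digits outside 1–6 were handled is not stated in the text, so this sketch simply expects digits in that range.

```python
# Table 3: digit -> allocation pattern for a block of four sources.
BLOCKS = {1: "AABB", 2: "ABAB", 3: "ABBA", 4: "BBAA", 5: "BABA", 6: "BAAB"}
SYSTEMS = {"A": "ThinPrep LBC", "B": "BD SurePath LBC"}


def allocate_stratum(sources, digits):
    """Allocate the sources of one PCT stratum to A or B.

    sources: list of (name, number_of_women) tuples.
    digits:  sequence of integers in 1..6, one per block of four.
    """
    # Order sources by decreasing size, as described in the text.
    ordered = sorted(sources, key=lambda s: s[1], reverse=True)
    digit_iter = iter(digits)
    allocation = {}
    for start in range(0, len(ordered), 4):
        pattern = BLOCKS[next(digit_iter)]  # one random digit per block
        block = ordered[start:start + 4]    # the last block may be short
        for (name, _size), code in zip(block, pattern):
            allocation[name] = code
    return allocation


# Hypothetical stratum of eight practices; digits 2 then 1, as in the example.
practices = [(f"P{i}", 1000 - 10 * i) for i in range(8)]
result = allocate_stratum(practices, [2, 1])
for name, code in result.items():
    print(name, SYSTEMS[code])
```

With the digits 2 and 1, the first four sources receive ABAB and the next four AABB, matching the worked example in the text.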

Randomisation of slides

The Cancer Screening Evaluation Unit (CSEU) provided two spreadsheets, one for each system, with unique numbers allocated to either the manual-only arm or the paired comparison arm. In the laboratory a query was set up to run on the CliniSys information technology system to pick up all samples eligible for the study and populate the appropriate randomisation spreadsheet. Laboratory randomisation lists were prepared by the laboratory trial co-ordinator and placed alongside the appropriate slides ready for screening.

Inclusion and exclusion criteria

All samples from women attending for screening within the randomised general practices, family planning clinics and colposcopy clinics were initially eligible for randomisation. The inclusion criteria for HPV triage changed part-way through the trial to include only samples from women aged > 25 years who were on routine recall to bring the triage protocol into line with the NHSCSP’s sentinel sites project.

Analysis of the trial was by intention to treat; however, there were some instances where slides were randomised in error and had to be excluded from the analysis. Slides were excluded for the following reasons:

- Vault samples taken from hysterectomised women who were no longer part of the cervical screening programme.
- Subsequent slides randomised from the same woman on early repeat screening.
- Slides that had to be removed from the automated reading arm because the results were required urgently.

In some instances slides were reported as an automated read failure (ARF) by the imaging systems. When this occurred the final manual result (FMR) was taken as the final automated result (FAR) (see definitions on page 24) for analysis purposes as this reflects what would happen to slides failing an automatic read in real-life practice.
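The handling of read failures for analysis can be sketched as a simple fallback rule. The function and argument names are hypothetical; only the rule itself (FMR substitutes for FAR after an ARF) comes from the text.

```python
from typing import Optional


def final_automated_result(automated: Optional[str], manual: str,
                           read_failed: bool) -> str:
    """Return the final automated result (FAR) used for analysis.

    When the imaging system reports an automated read failure (ARF),
    the final manual result (FMR) is used in its place.
    """
    if read_failed or automated is None:
        return manual
    return automated


print(final_automated_result(None, "negative", read_failed=True))
```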

Human papillomavirus triage

It had originally been intended to use the Amplicor™ HPV microwell plate (MWP) test (Roche, Basel, Switzerland) because of certain theoretical advantages including increased sensitivity of HPV detection. 49,51,52 Early comparison with the Amplicor HPV MWP test revealed a number of problems, particularly a higher proportion of HPV-positive tests with ThinPrep LBC compared with BD SurePath LBC. In addition, a significant proportion of BD SurePath LBC samples gave inadequate results (see Appendix 5). It was therefore decided to revert to the HC2 DNA test which had been validated by the company for use with both ThinPrep LBC and BD SurePath LBC samples.

Settings and ethics approval

Ethical approval was initially received from Central Manchester Local Research Ethics Committee (LREC) in December 2005 – project reference number 04/Q1407/318 – based on a need for individual signed consent, which was required for HPV triage, which was not at that time part of NHSCSP standard practice.

Research and development approval was received from Central Manchester and Manchester Children’s University Hospital NHS Trust, Ashton, Leigh and Wigan PCT, Bury PCT, Heywood, Middleton and Rochdale PCT, Manchester PCT, Oldham PCT, Salford PCT, Tameside and Glossop PCT, Trafford PCT, St Helens PCT, Salford Royal Hospitals NHS Trust and NHS Lothian.

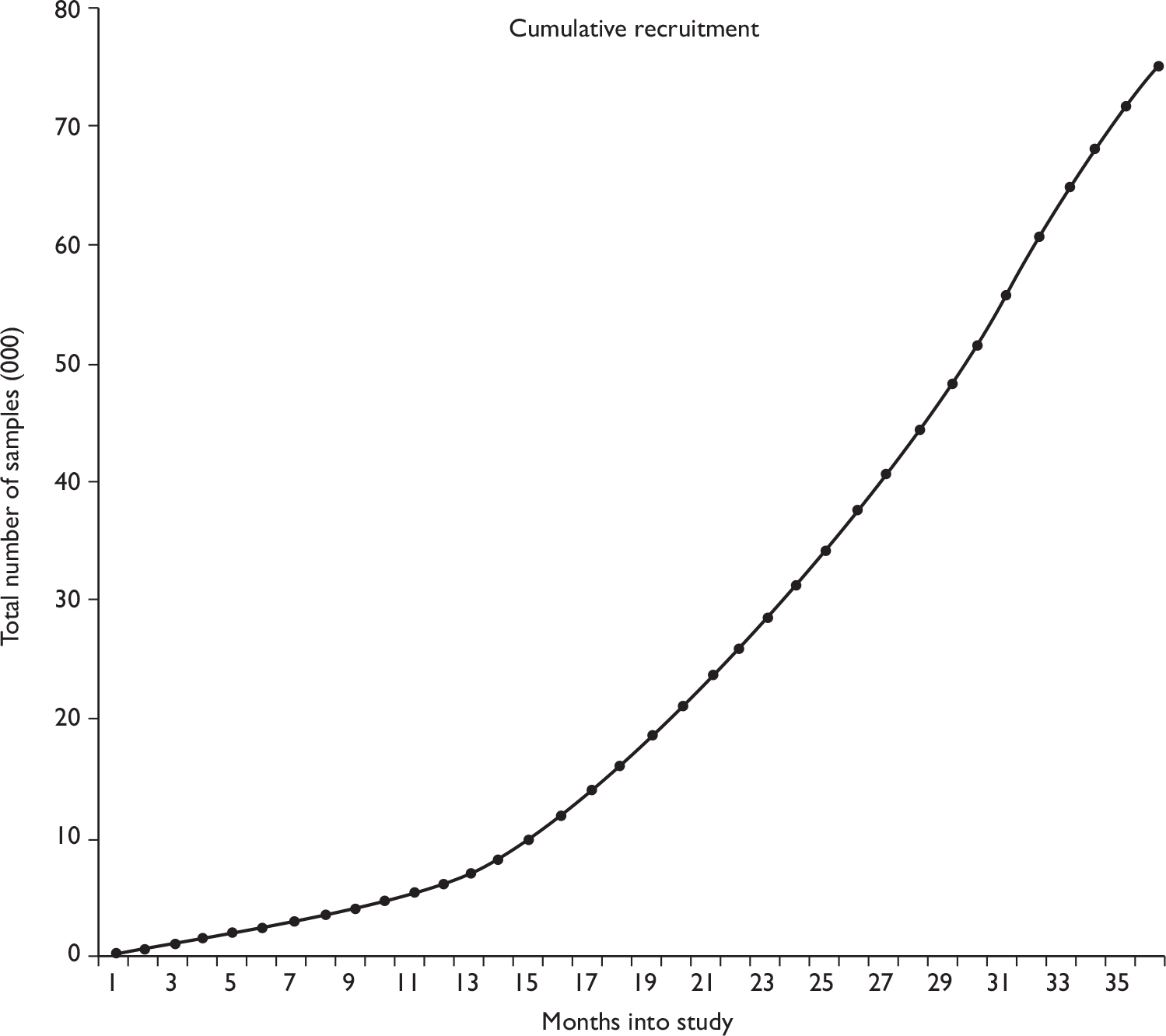

In August 2005, information was sent to randomised general practices and family planning clinics to introduce the trial. Two study sessions were held in 2006 for general practice and family planning staff where they were given the opportunity to put questions to the chief investigator. The trial opened to recruitment on 1 March 2006 in Salford and Trafford, Tameside and Glossop, Oldham and Manchester PCTs. Ashton, Leigh and Wigan PCTs began recruitment in 2007. Women were sent copies of the patient information sheet with their invitation for screening by the local call/recall agencies and surgeries were supplied with copies to give to women who presented opportunistically.

Initial recruitment was slow. Many GPs were unable to recruit women into the trial and gain their consent owing to time constraints within their surgeries and the lack of financial reimbursement. Nurses also reported finding the opt-out system of consenting difficult to work with. Patients were asked to sign an opt-out form to decline either participation in the trial as a whole or to decline a reflex HPV test in the event of a low-grade cytological abnormality. This decision was communicated to the cytology laboratory on the cervical cytology request form which accompanied the sample. Signed opt-out forms were also returned to the laboratory.

Incorporation of trial into the NHS Cervical Screening Programme sentinel sites protocol

In September 2006 the Manchester Cytology Centre agreed to become one of the NHSCSP’s sentinel sites for HPV triage, making reflex HPV testing (triage) of low-grade cytological abnormalities routine for all NHS cervical screening samples received at the laboratory. This removed the need for the option to opt out of HPV testing and the LREC agreed that women need no longer be given the opportunity to opt out of the trial. Randomised practices began working to the sentinel site protocol from mid-2007 after consultation with the local Cervical Screening Steering Groups.

Monitoring

The trial was monitored by the HTA programme in July 2007 and by Central Manchester University Hospitals NHS Foundation Trust R&D Office in March 2009, receiving a satisfactory report on both occasions.

Logistical considerations

Processing of samples for cytology testing

The cytology samples were received in the Manchester Cytology Centre in either BD SurePath or ThinPrep LBC vials depending on the system to which the surgery had been randomised. On receipt in the cytology laboratory all samples were allocated a unique identifying number. The ThinPrep samples were processed using the ThinPrep 3000 Processor to produce slides with a printed 14-digit number including the unique identifying number which, after staining with ThinPrep Imaging System stain, were ready to be read on the ThinPrep Imaging System. The use of acetic acid to remove blood from heavily blood-stained ThinPrep LBC samples had to be discontinued as this procedure could affect the validity of the HPV result.

The BD SurePath LBC samples were processed using the BD PrepStain™ Slide Processor to produce slides ready to be read by the BD FocalPoint GS Imaging System. Prior to processing the samples on the BD PrepStain Slide Processor a paper label containing a barcode with the unique identifying number was placed onto the appropriate slide.

All slides were left overnight to dry before being placed into the appropriate imaging system. Both systems produced a print-out of the number of samples processed with any errors incurred during processing; however, the print-out from the BD FocalPoint GS Imaging System could be run only after 120 slides had been processed. The print-outs from both systems were passed to the laboratory co-ordinator to check for errors.

Transporting samples for human papillomavirus testing

The vials from the LBC samples showing low-grade abnormalities were collated at the Manchester Cytology Centre for dispatch to the Specialist Virology Centre in Edinburgh. The samples were anonymised prior to sending by removing the woman’s name, date of birth and NHS number. The identifier used for subsequent interaction between Manchester and Edinburgh was the sample number assigned by the Manchester laboratory.
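The anonymisation step can be sketched as dropping the three identifiers and keeping the laboratory sample number. The field names and the example record are hypothetical; the text names only the items removed and the identifier retained.

```python
# Hypothetical field names for the identifiers removed before dispatch.
IDENTIFIERS = ("name", "date_of_birth", "nhs_number")


def anonymise(record: dict) -> dict:
    """Return a copy of the record without personal identifiers."""
    return {key: value for key, value in record.items()
            if key not in IDENTIFIERS}


sample = {
    "sample_number": "M000123",  # assigned by the Manchester laboratory
    "name": "Jane Example",
    "date_of_birth": "1970-01-01",
    "nhs_number": "0000000000",
    "lbc_type": "ThinPrep",
}
print(anonymise(sample))
```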

The transfer of samples was performed according to the United Nations’ (UN’s) regulations governing the packaging of diagnostic and infectious samples UN3373 (packing instruction 650). CitySprint (www.citysprint.co.uk) was the designated courier. The samples were sent on Monday to arrive in Edinburgh on Tuesday and the results of the test sent back to the Manchester Cytology Centre within 4 days. An electronic sheet was sent to Edinburgh with the unique identifying number, date and type of sample.

Processing of samples for human papillomavirus testing

In Edinburgh, samples were accorded an internal sample number for HPV testing. A MAVARIC trial sample identification worksheet and laboratory checklist were completed in the laboratory throughout the testing process. Sample information was entered into a password-protected, bespoke Microsoft Access (Microsoft Corporation, Redmond, WA, USA) database.

For the Amplicor HPV MWP test, nucleic acids were extracted from a 1-ml aliquot using a Qiagen BioRobot 9604 in conjunction with the QIAamp 96 DNA Swab BioRobot Kit and a protocol validated in Edinburgh for use with ThinPrep LBC medium. 53 Where weekly sample numbers were small (< 22), nucleic acids were extracted manually using the Roche Diagnostics AmpliLute™ Liquid Media Extraction Kit.

For the HC2 test both ThinPrep LBC and BD SurePath LBC samples were processed according to the manufacturer’s instructions. Initial sample preparation involved denaturation with sodium hydroxide rather than nucleic acid extraction. HC2 is a solution hybridisation assay for the qualitative detection of high-risk HPV DNA (types 16/18/31/33/35/39/45/51/52/56/58/59/68) in cervical samples. It uses an oligonucleotide probe cocktail of 13 probes. Hybrids are captured on the wells of a microtitre plate and detected with an amplified chemiluminescent signal. This assay is FDA approved and CE marked.

A positive sample, i.e. indicating the presence of high-risk HPV DNA sequences, was reported, where a relative light unit/cut-off (RLU/CO) measurement was ≥ 3.0. From 19 February 2008, the protocol was changed to report a positive sample with an RLU/CO ratio ≥ 2.0 to be in line with the NHSCSP sentinel sites protocol. Both these cut-off values deviate from the manufacturer’s recommendation of 1.0 RLU/CO, values below which indicate that the HPV DNA levels were below the detection limit of the assay or absent. The reason for the higher cut-off was to achieve additional specificity without significant loss of sensitivity based on data from the ARTISTIC (A Randomised Trial In Screening To Improve Cytology) trial. 54 From 2 March 2009 any remaining HPV testing was performed in the Manchester virology department along with triage samples from the NHSCSP sentinel sites.
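The reporting rule described above can be sketched as a date-dependent threshold on the RLU/CO ratio. The cut-off values and the change date come from the text; the function names are hypothetical, and keying the rule on the test date (rather than, say, the sample date) is an assumption.

```python
from datetime import date

# From 19 February 2008 the positive cut-off moved from 3.0 to 2.0 RLU/CO.
CUTOFF_CHANGE = date(2008, 2, 19)


def hc2_cutoff(test_date: date) -> float:
    """RLU/CO threshold in force on the given date."""
    return 2.0 if test_date >= CUTOFF_CHANGE else 3.0


def hc2_positive(rlu_co: float, test_date: date) -> bool:
    """True if the sample would be reported as high-risk HPV positive."""
    return rlu_co >= hc2_cutoff(test_date)


# A ratio of 2.5 is negative before the change and positive after it.
print(hc2_positive(2.5, date(2008, 2, 18)), hc2_positive(2.5, date(2008, 2, 19)))
```

Only samples with a ratio between 2 and 3 are classified differently under the two thresholds.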

Test data were entered into the local database and results returned to the Manchester Cytology Centre electronically as a Microsoft Excel (Microsoft Corporation) password-protected file after each batch run.

Summary of significant changes to the protocol during the course of the study

Significant changes that were made to the protocol throughout the course of the trial are summarised in Table 4. The major changes have been described fully in Statistical analysis, including statistical considerations and Processing of samples for human papillomavirus testing. The original trial protocol has been included as an appendix (see Appendix 15).

| Change to protocol | Months into study | Impact |
|---|---|---|
| Two colposcopy clinics (with similar number of referrals) were allocated either ThinPrep or SurePath LBC and were invited to have their samples processed as part of the study | 2 | Increased the amount of abnormal cytology (and underlying CIN2+) in both arms |
| Recruitment methods changed to allow staff at GP surgeries to hand women a patient information sheet if they had not received one with their invitation | 5 | More women were informed about the trial and were able to participate |
| Manchester Cytology Centre becomes one of the NHSCSP’s sentinel sites for HPV triage | 16 | HPV triage protocol is aligned with the NHSCSP’s protocol (i.e. only first borderline and milds triaged). This allowed the need for an opt-out system of consent to be removed as HPV triage had become standard practice and resulted in a more rapid accrual of samples |
| HPV testing changed to HC2 | 18 | Resolved initial problems with the Roche Amplicor which were resulting in a number of invalid tests on BD SurePath samples |
| Sample size reduced to 75,000 | 18 | The number of samples in the manual-only arm was reduced to allow the study to finish on time while still achieving the pre-specified number of samples in the paired arm |
| HC2-positive cut-off changed from ≥ 3.0 RLU/CO to ≥ 2.0 RLU/CO to align the HPV triage protocol with the NHSCSP’s sentinel sites protocol | 24 | This was not thought to have any significant impact on the trial as only 1% of triage samples had an RLU/CO value between 2 and 3 |
| Randomisation ratio changed from 1 : 1 to 3 : 1 | 24 | The randomisation ratio was changed in favour of the paired arm to ensure the number of samples specified in the power calculation was achieved. The reduced number of samples entering the manual-only arm remained sufficient to blind the cytoscreeners to the randomised allocation of the samples |

Automated cytology methods

Machine set-up

Both companies, Hologic and BD Diagnostics, assessed the site prior to installing the imaging machines. Several changes to the layout of the preparation laboratory and the screening room had to be made to accommodate the installation of the machines.

Training

Staff with varying levels of LBC experience were selected to receive automated screening training. Both companies performed the training, further details of which are provided in Appendix 6. Eight medical laboratory assistants (MLAs) were trained in the handling and maintenance of the imaging systems. Eight cytoscreeners and one chief biomedical scientist (BMS) were trained in the use of the automated microscopes and cell morphology recognition. The laboratory trial co-ordinator and two cytopathologists were trained in the handling and maintenance of the imaging systems, the use of the automated microscopes and cell morphology recognition for both systems.

Staining

The Becton Dickinson SurePath staining parameters were changed slightly for the study (an additional water wash was added to the process to comply with company recommendations). For the ThinPrep LBC slides, the routine laboratory Papanicolaou (Pap) stain had to be changed to the ThinPrep Imaging System formulation and the Hologic staining schedule had to be followed. The ThinPrep Imaging System formulation stains the cells darker than conventional formulations. The initial proposal was to stain only the trial slides; however, it was recognised that this could cause bias by (a) indicating to the screeners which slides were being read by the automated systems and (b) one of the stains being advantageous in terms of detection of abnormalities. It was therefore necessary to stain all ThinPrep LBC slides received in the laboratory with the ThinPrep Imaging System stain to prevent such bias occurring.

ThinPrep Imaging System stain validation process

In order to validate the ThinPrep Imaging System stain, 100 slides stained with the department’s routine Pap stain (of which 25% were abnormal) were screened. A second slide from each of the 100 samples was made and stained using the ThinPrep Imaging System stain. The slides were then processed by the ThinPrep Imaging System and the 22 FOVs were reviewed using the Hologic automated microscopes. The results of the slides read by the ThinPrep Imaging System were compared with the original diagnoses. The reviewers were blinded to the original diagnoses throughout the validation process. Both Hologic and the departmental validators (two cytopathologists and the laboratory trial co-ordinator) classed the ThinPrep Imaging System stain as not significantly different from the routine Pap stain.

All levels of screening staff manually screened 100 ThinPrep Imaging System stained slides to ensure that they had become accustomed to the new staining process. Slides stained with the ThinPrep Imaging System had to pass the Regional Technical External Quality Assurance. Slides were fed into the first available round and achieved an acceptable result on assessment.

Screening of cytology samples

The slides for automated screening were screened using the review scopes; no marks were made on the slides to indicate any abnormal cells, and the results were entered onto the randomisation list. The list and slides were then passed to another screener for rapid review. The list was removed and passed to the laboratory co-ordinator prior to the slides being placed back into the routine screening in numerical order, thus ensuring that the manual screener was blinded to the result of the automated read. Manual screening (in both arms of the trial) was carried out according to routine laboratory protocols, including the practice of marking areas of interest on the slide. In the paired arm the automated reading was undertaken first, followed by the manual read, and the woman’s management was based on whichever reading was the greater in terms of abnormality.
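The paired-arm management rule (act on whichever reading shows the greater abnormality) can be sketched with an explicit severity ordering. The ordering below covers only the squamous grades and is an assumption; the text does not say how inadequate or glandular results were ranked, so they are left out and raise an error here.

```python
# Assumed severity ordering, least to most abnormal (squamous grades only).
SEVERITY = [
    "negative",
    "borderline",
    "mild dyskaryosis",
    "moderate dyskaryosis",
    "severe dyskaryosis",
    "severe dyskaryosis ?invasive",
]
RANK = {grade: position for position, grade in enumerate(SEVERITY)}


def management_grade(automated: str, manual: str) -> str:
    """Return the more abnormal of the two readings (KeyError if unranked)."""
    return max(automated, manual, key=RANK.__getitem__)


print(management_grade("negative", "mild dyskaryosis"))
```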

Blinding procedures

One of the principal reasons for the manual reading-only arm was to blind the screener to whether or not slides had received an automated read. The other main reason for the manual-only arm was to provide a comparison with manual reading in the paired arm in order to be able to demonstrate that manual reading in the paired arm was neither superior nor inferior in terms of sensitivity to that in the manual-only arm. Manual screening was performed in the routine laboratory flow of work by a mixture of auto-trained and non-auto-trained cytoscreeners. This created the potential for the same screener to read the slide both manually and on the automated system; however, owing to the large pool of cytoscreeners performing manual screening the chance of this happening was low.

In order to blind the manual screener to knowledge of which slides had been screened using the automated review scopes, no marks were made on the slides during the automated screen. Routinely in the cytology department any abnormal cells found are highlighted by marking the slide above and below the abnormal cells with a coloured marker pen. The Hologic ThinPrep Imaging System utilises a marker pen on the review scope to mark the FOV after the automated screen has been performed; this pen was removed so that no marks could be made. The automated screener could add electronic marks as these could be viewed only when using the automated review scopes.

Once the automated read had been performed the result was added to the randomisation sheet, and the sheet and the slides were then passed to another screener to perform a rapid screen. The rapid screener then passed the randomisation sheet and slides to the laboratory co-ordinator, who removed the sheets and placed the slides back into the routine screening in numerical order, again helping to blind the manual screeners to which slides had been screened on the automated system.

Review of discordant pairs

Discordant pairs are defined in Table 5. A list of eligible discordant pairs was produced by the CSEU for the cytology laboratory. A review of the discordant pairs with a known clinical outcome of CIN2+ was undertaken to assess whether or not the discrepant results were due to a location error by either of the imaging systems or an interpretation error by the cytoscreener assessing the FOVs. Two cytopathologists and the laboratory trial co-ordinator reviewed the FOVs (blinded to both the automated and manual results) and recorded their findings on a mismatch proforma (see Appendix 7). A random sample of 10 known CIN2+ concordant pairs was added as a control in order to provide blinding as to whether or not slides were from discordant pairs.

| Automation result | Manual result |
|---|---|
| High grade (moderate/severe dyskaryosis or worse) | Negative/Inadequate |
| Negative/Inadequate | High grade (moderate/severe dyskaryosis or worse) |
| Low-grade (borderline or mild dyskaryosis) HPV positive | Negative/Inadequate |
| Negative/Inadequate | Low-grade (borderline or mild dyskaryosis) HPV positive |
| NFR | Inadequate, borderline or worse |

When the results of the review had been recorded on the proforma, the results of the initial and final automated and manual reads plus any histology were entered on to the form. A majority view determined the outcome of each discordant pair. The review of the discordant pairs was to determine whether the FOVs were showing the significant cells. The cytological consensus resolved the discordant results and was used to determine whether or not the slide had been interpreted incorrectly on either the automated or the manual reading. In cases where the reviewers agreed with the negative automated reading it was agreed that the machine had not presented any abnormal cells in the FOVs.
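The definition of a discordant pair in Table 5 and the majority-view rule can be sketched as below. Results are reduced to coarse categories ("negative", "inadequate", "low", "high", "NFR"), which is a simplification made for this example; the function names are hypothetical, and returning `None` when no strict majority exists is an assumption, since the text does not say how a three-way split was handled.

```python
from collections import Counter

NEGATIVE_OR_INADEQUATE = {"negative", "inadequate"}


def is_discordant(auto: str, manual: str, hpv_positive: bool = False) -> bool:
    """Apply the Table 5 rules to one automated/manual pair."""
    if auto == "NFR":
        # NFR is discordant with inadequate, borderline or worse.
        return manual in {"inadequate", "low", "high"}
    if auto == "high" and manual in NEGATIVE_OR_INADEQUATE:
        return True
    if manual == "high" and auto in NEGATIVE_OR_INADEQUATE:
        return True
    # Low-grade results count only when the HPV triage test was positive.
    if hpv_positive and auto == "low" and manual in NEGATIVE_OR_INADEQUATE:
        return True
    if hpv_positive and manual == "low" and auto in NEGATIVE_OR_INADEQUATE:
        return True
    return False


def majority_view(opinions):
    """Return the opinion held by more than half of the reviewers, else None."""
    label, count = Counter(opinions).most_common(1)[0]
    return label if count > len(opinions) / 2 else None


print(is_discordant("high", "negative"))
print(majority_view(["location error", "location error", "interpretation error"]))
```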

Clinical management

Cytology management

All samples were initially reported as per the departmental/NHSCSP protocols for manual reading, but not authorised. The laboratory co-ordinator then recorded the results of the automated screening (which were recorded on separate proformas to blind the manual screening process) onto the laboratory computer system. In the event of a discordant result the samples were taken to peer review meetings for discussion after being reviewed on the automated system by a checker/BMS and a consensus report produced. All results were reported using the British Society for Clinical Cytology 1986 classification (Table 6). 55 Final reports were issued as described in Table 7.

| BSCC 1986 55 | Bethesda System 2001 56 | Definition |
|---|---|---|
| Negative | Negative for intraepithelial lesion or malignancy | Normal cytology |
| Inadequate | Unsatisfactory for evaluation | |
| Borderline nuclear change (includes koilocytosis) | | Low-grade cytology (PPV for CIN2+ generally in the range of 15%–20%) |
| Mild dyskaryosis | LSIL | |
| Moderate dyskaryosis | HSIL | High-grade cytology (PPV of CIN2+ generally in the range of 69%–85%) |
| Severe dyskaryosis | HSIL | |
| Severe dyskaryosis query invasive | Squamous cell carcinoma | |
| Query glandular neoplasia | Endocervical; Endometrial; Extrauterine; NOS | |

| Manual | Automatic | Reported by |
|---|---|---|
| Negative | Negative | Screener/Checker/Senior BMS/Chief BMS |
| Negative | Abnormal | Medic/Advanced BMS practitioner |
| Abnormal | Negative | Medic/Advanced BMS practitioner |
| Abnormal | Abnormal | Medic/Advanced BMS practitioner |

Colposcopy management

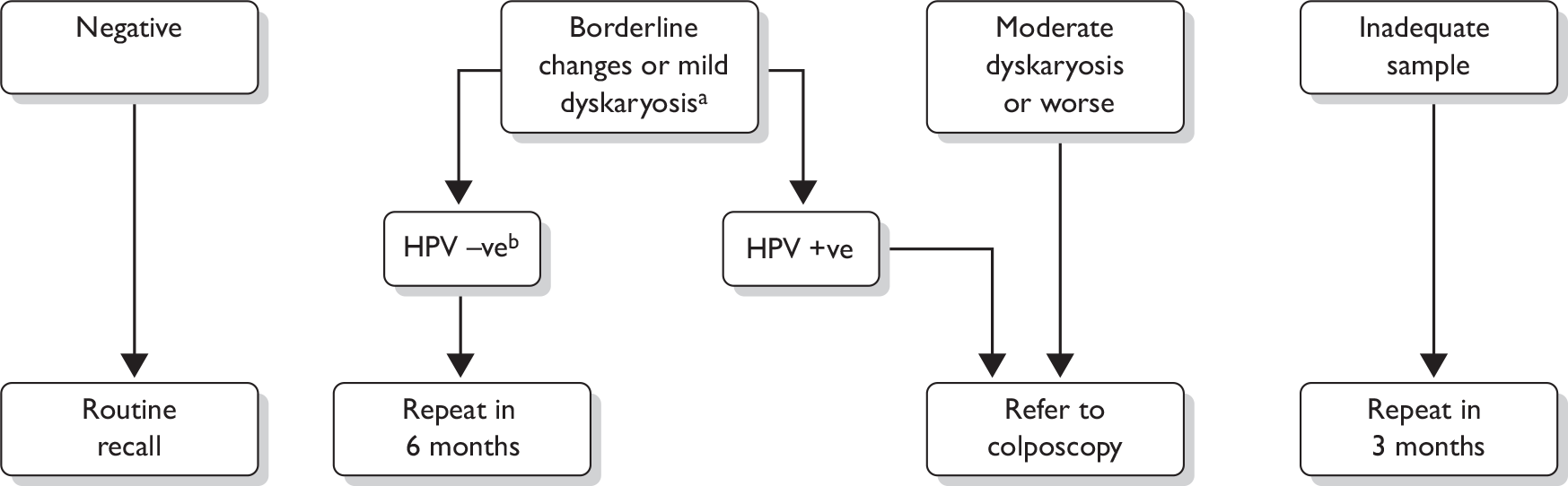

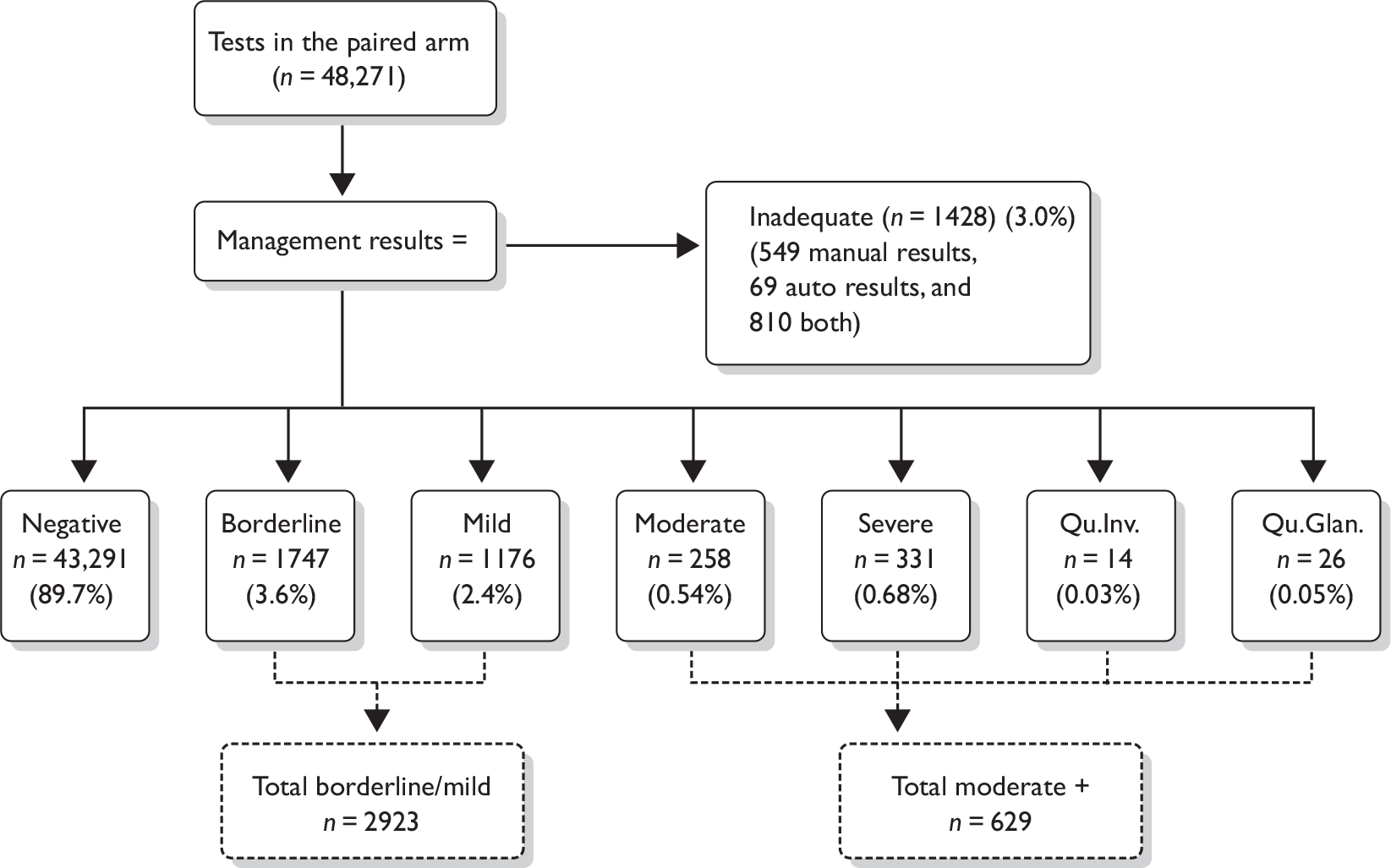

The management of abnormal cytology is shown in Figure 2. Colposcopy was undertaken according to national Cervical Screening Programme clinical practice guidelines. Women with high-grade cytology (moderate dyskaryosis or worse) underwent either a targeted biopsy with subsequent treatment for CIN2+ or an immediate ‘see and treat’ loop excision. Women with borderline or mild dyskaryosis were referred for colposcopy if they were HPV positive. If they were HPV negative they were returned to routine recall. In triaged cases, a biopsy was not mandated in the presence of normal satisfactory colposcopy. CIN2+ was treated by excision, usually loop excision, and CIN grade I (CIN1) would usually be managed conservatively. The study biopsy result was the higher grade in the event of both a targeted biopsy and subsequent loop excision. All histology was read with the pathologist unaware of the trial arm or LBC type and was reported using the World Health Organization (WHO) and the International Society of Gynecological Pathologists CIN classification system. The pathologist was aware of the grade of cytology. The definitions applied to the colposcopy and histology outcomes for the analysis are given in Table 8.
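The referral rules in this paragraph can be sketched as a small decision function. It covers only the high-grade and low-grade pathways stated in the text; grades worse than severe dyskaryosis, negative and inadequate results, and women outside routine recall are deliberately not handled, and the function and label names are hypothetical.

```python
from typing import Optional

HIGH_GRADE = {"moderate dyskaryosis", "severe dyskaryosis"}
LOW_GRADE = {"borderline", "mild dyskaryosis"}


def next_step(cytology: str, hpv_positive: Optional[bool] = None) -> str:
    """Return the management step for a cytology result after HPV triage."""
    if cytology in HIGH_GRADE:
        return "colposcopy"  # HPV result is not needed for high-grade cytology
    if cytology in LOW_GRADE:
        if hpv_positive is None:
            raise ValueError("low-grade cytology needs an HPV triage result")
        return "colposcopy" if hpv_positive else "routine recall"
    raise ValueError(f"not covered by this sketch: {cytology!r}")


print(next_step("borderline", hpv_positive=False))
```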

FIGURE 2. Final management cytology protocol. a, After adoption of the Sentinel Sites protocol only women who were on routine recall were eligible for triage. Women on early repeats and follow-up were managed according to national guidelines. b, After adoption of the Sentinel Sites protocol women who had a negative HPV test were returned to routine recall as amendments had been made to the national screening database to permit this management recommendation.

| Colposcopy and histology outcomes | Definitions |
|---|---|
| Other cancer | A non-cervical cancer found during further investigations |
| Adenocarcinoma/squamous cell carcinoma stage 1a+ | Invasive cervical squamous cell carcinoma or adenocarcinoma reported as stage 1a or greater according to the FIGO system |
| CIN3 (squamous cell carcinoma in situ) and CGIN | High-grade pre-cancerous squamous or glandular cell changes on colposcopically directed biopsy |
| CIN2 | |
| CIN1 | Low-grade pre-cancerous squamous cell changes on colposcopically directed biopsy |
| No CIN/HPV only | No pre-cancerous abnormalities detected on colposcopically directed biopsy |
| Colposcopy NAD | No abnormalities seen during colposcopic examination |

Data collection

Transferring data

Data were transferred to the CSEU from a number of sources. Cytological and histological data stored in the Manchester Cytology Centre database (CliniSys Labcentre Laboratory Information System, Chertsey, UK) were downloaded to either a plain text file or a Microsoft Excel spreadsheet. The file was compressed and encrypted to AES 256 standard using WinZip version 11 (WinZip Computing, Mansfield, CT, USA). Finally, the encrypted file was sent to the CSEU by secure file transfer protocol (FTP) data transfer. Randomisation data were also sent from Manchester by the same method.

Human papillomavirus results were sent from Edinburgh by secure FTP, but without encryption. Data on exact ranking and quintile for each slide relating to the BD FocalPoint GS Imaging System were stored on hard disk and also backed up on tape. The hard disk was accessed via the internet by BD Diagnostics and archived. The data on tape were also sent to the company by post. From Erembodegem, the unprocessed data files were passed on to CSEU by e-mail, again without encryption. Encryption was thought unnecessary for these later transfers because the files did not contain personal identifiers.

Database development

At the CSEU all the data were stored and processed on a secure Microsoft Access database. The database was under the control of the investigators and there was no involvement by either BD Diagnostics or Hologic in the conduct of the study or analysis of the results.

Recording cytology and human papillomavirus results

The data received from the cytology laboratory consisted of the manual reading results, the automated reading results and the final management result (MR). The final MR was the result that determined clinical management (routine recall, triage by HPV test or direct colposcopy referral). The results of the manual readings included up to five readings [the first reading, the rapid review for negative and inadequate first readings, the second reading if required, and further readings by a checker or pathologist/advanced BMS practitioner (AP) for samples with positive cytology]. For each reading, the data received included the test cytology result, whether the screen was full or rapid and the cytoscreener classification (cytoscreener, trainee cytoscreener/BMS, checker, BMS, medic or AP). The data related to the automated readings included the results of the first automated (auto) reading and of the auto rapid review if the first auto reading was negative or inadequate. The data also indicated whether the auto result was used to help determine the final result.

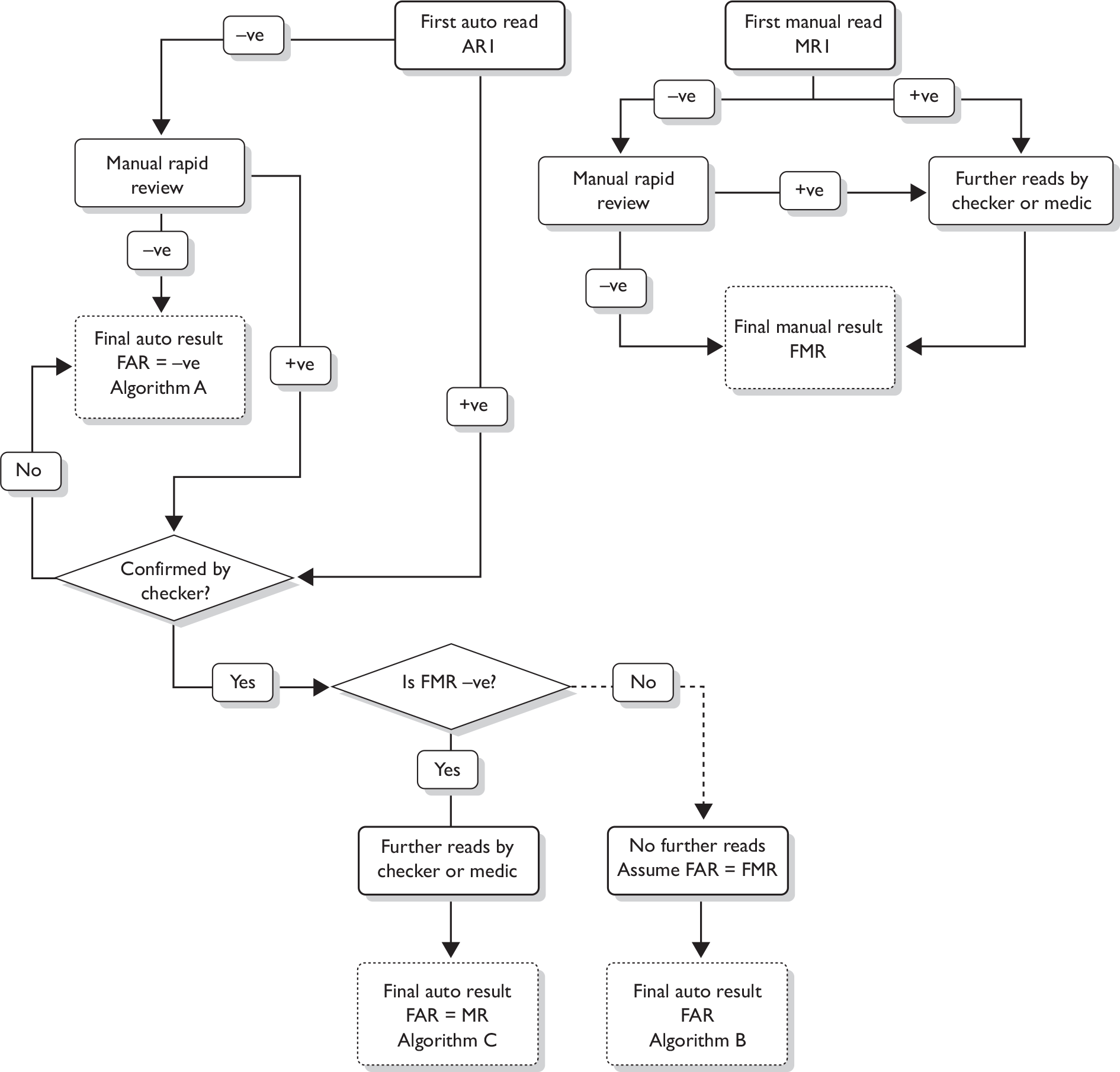

The protocol for determining the FMR and FAR is shown in Figure 3.

FIGURE 3.

Pathways based on actual laboratory application of study.

The following definitions are used:

- The first manual result pre (MR1) and post (MR2) rapid review.

- The FMR, defined as the result of the first reading by a medic or AP, or the result of the last reading that led to the report being signed off if the slide was not seen by a medic or AP (usually a negative finding signed off by a checker or screener as part of the manual process).

- The auto result pre (AR1) and post (AR2) rapid review.

- The FAR, defined as the first medic or AP result from a slide considered as abnormal after the first auto reading or abnormal from the rapid review (post checker), or any negative or inadequate result after the rapid review of negative and inadequate samples that was signed off without being seen by a medic or AP. The three possible pathways are shown in Figure 3. Algorithm A was where the automatic read AR1 was negative and the subsequent manual rapid review was negative; the FAR was therefore also negative. Algorithm B occurred where the auto result after confirmation by a checker was positive, but the FMR was also positive and the auto result was therefore not considered further. Under such circumstances it is assumed that in the real-life situation the slide would have proceeded to be seen by a checker and/or medic and the best estimate of the FAR was therefore assumed to be the same as the FMR. Algorithm C was where the auto result (after confirmation by a checker) was positive, but the FMR was negative. Under these circumstances the slide was reviewed again and the FAR was that recorded after this further review, which was also the MR as recorded on the Manchester system.

- The MR was based on the manual result and/or the auto result, whichever was worse, and this determined the woman’s management.
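The three pathways for the FAR can be sketched as a small decision function. This is only an illustration of the definitions above, not the laboratory's actual software: the function name, argument names and string labels are hypothetical, and the `auto_positive` flag is assumed to mean "abnormal on the first auto reading or on rapid review, after confirmation by a checker".

```python
from typing import Optional, Tuple


def final_auto_result(
    auto_positive: bool,
    fmr_positive: bool,
    fmr: str,
    ar2: str,
    further_review: Optional[str] = None,
) -> Tuple[str, str]:
    """Return (algorithm label, FAR) for one slide in the paired arm.

    auto_positive  -- assumed flag: auto result positive after checker confirmation
    fmr_positive   -- whether the final manual result (FMR) was positive
    fmr            -- the FMR itself
    ar2            -- auto result after rapid review (negative or inadequate)
    further_review -- result of the extra review, needed only for Algorithm C
    """
    if not auto_positive:
        # Algorithm A: auto reading and rapid review both negative/inadequate,
        # so the FAR is the signed-off post-rapid-review result.
        return "A", ar2
    if fmr_positive:
        # Algorithm B: auto positive and FMR positive; the FAR is taken
        # to be the same as the FMR.
        return "B", fmr
    if further_review is None:
        raise ValueError("Algorithm C needs the result of the further review")
    # Algorithm C: auto positive but FMR negative; the FAR is the result
    # recorded after the slide was reviewed again.
    return "C", further_review
```

For example, `final_auto_result(True, False, "negative", "negative", "mild")` follows Algorithm C and returns the further-review result as the FAR.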

In the paired arm, the further reads by a checker or a medic applied to both the manual and auto; hence, the discordant pairs could arise only from a negative or inadequate read or from NFR.

Collecting histology data

Histology results were linked to the cytology results using patient identifiers from the Manchester Cytology Centre database and dates. The histology result was considered to be related to the cytology if the histology date was between 3 weeks and 12 months after the cytology date. In the case of more than one histology result being recorded during that time period, the highest grade abnormality was used. For samples taken at colposcopy clinic visits, the histology result from that visit was used unless superseded by a further result.
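The linkage rule can be illustrated with a short sketch. Several details here are assumptions made for the example rather than taken from the study database: "3 weeks" is treated as 21 days and "12 months" as 365 days, both bounds are inclusive, and the grade labels and their severity ranking (`SEVERITY`) are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable, Optional, Tuple

# Hypothetical severity ranking, lowest to highest (an assumption for illustration).
SEVERITY = {"No CIN": 0, "CIN1": 1, "CIN2": 2, "CIN3": 3, "Cancer": 4}


def linked_histology(
    cytology_date: date,
    histology: Iterable[Tuple[date, str]],
) -> Optional[str]:
    """Return the highest-grade histology result dated between 3 weeks
    and 12 months after the cytology sample, or None if there is none."""
    earliest = cytology_date + timedelta(days=21)   # assumed: 3 weeks = 21 days
    latest = cytology_date + timedelta(days=365)    # assumed: 12 months = 365 days
    in_window = [grade for d, grade in histology if earliest <= d <= latest]
    if not in_window:
        return None
    # More than one result in the window: use the highest grade abnormality.
    return max(in_window, key=lambda grade: SEVERITY[grade])
```

A result dated only 9 days after the cytology sample, or 14 months after it, would fall outside the window and be ignored under these assumptions.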

Missing data

Data were missing or unobtainable for the reasons given in Table 9.

| Data type | Reasons why data were unavailable |

|---|---|

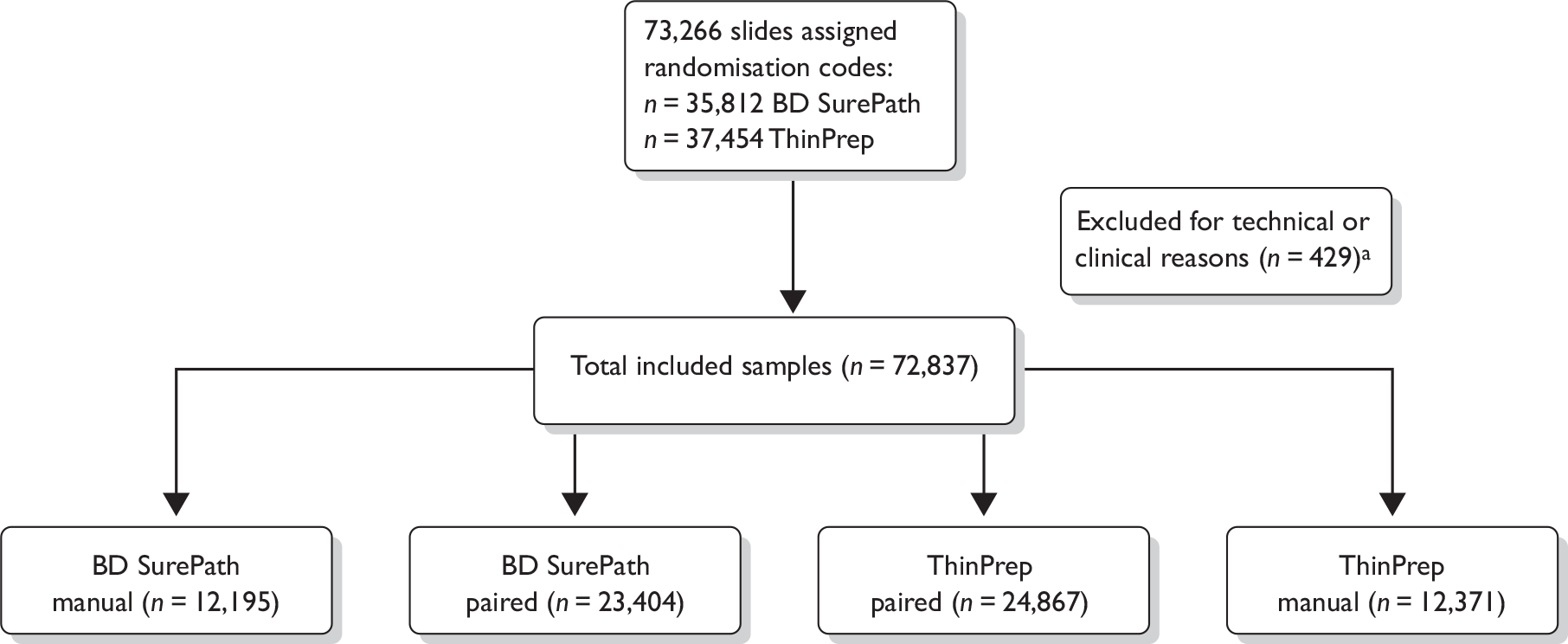

| Cytology | Apart from those samples excluded for technical or clinical reasons (as detailed in Figure 6), all cytology results were obtained |

| Randomisation | None missing – all cytology samples were associated with a valid randomisation code |

| Colposcopy/histologya |

Inadequate biopsy Failed to attend colposcopy Woman left GP or practice area Colposcopy delayed for known reason Follow-up search inconclusive |

| HPV data |

Sample was spoiled before assay HPV test failed No HPV test performed on samples taken at colposcopy clinic No HPV test performed on samples from subjects aged ≤ 24 years |

| BD FocalPoint GS Imaging System ranking data (quintile information) | Data could not be retrieved by BD Diagnostics from either the BD FocalPoint GS Imaging System via the internet or the backup tapes |

Statistical analysis, including statistical considerations

The sample size calculations were based on a test of non-inferiority of the automated technology in terms of its sensitivity (relative to that of the manual reading) based only on data from the paired observations. Inclusion of the unpaired data increases statistical power, but we chose a conservative approach based solely on the paired comparisons. Sample sizes for the paired comparison were determined by the numbers of CIN2+ outcomes (see Table 10) needed to evaluate relative true-positive rates (TPRs). When the number of CIN2+ outcomes is about 630, a paired test with a 0.025 one-sided significance level has an 80% power to reject the null hypothesis that the sensitivities are not equivalent [the difference in sensitivities (TPRs) is 0.050 or further from 0 in the same direction] when the expected difference in proportions is 0, assuming that the proportion of discordant pairs is 0.200 (nQuery Advisor, Version 3, Statistical Solutions, Saugus, MA, USA). The sample size estimation is sensitive to the assumed value for the proportion of discordant pairs. It was thought that 0.2 was likely to be the upper limit. The power would increase to about 95% if the proportion of discordant pairs were actually 0.1; in this case the study would have about 70% power to exclude a difference in the TPRs of 0.03 or further from 0 in the same direction. If the proportion of women who are CIN2+ in the population is about 3% we needed to obtain a total of about 46,000 participants in the paired arm to have a probability of 0.975 that it contained at least 630 CIN2+ outcomes. We chose a conservative estimate of 50,000 samples for the paired comparison, and an equal number of unpaired samples (hence a total of 2 × 50,000 = 100,000 samples in the trial overall).

The above absolute difference of 5% in sensitivity defining non-equivalence between manual and automated reading would require a relative difference in sensitivity of at least 6.5%, assuming a sensitivity for LBC (to detect CIN2+) of 79%. 57

| | FAR positive | FAR negative |
|---|---|---|
| FMR positive | A | B |
| FMR negative | C | [D] |

Owing to accrual problems in the early part of the study, the design was later changed to increase the proportion of samples allocated to the paired arm, in order to ensure that the primary analysis was adequately powered. In June 2007 the sample size for the manual-only arm was reduced from 50,000 to 25,000, reducing the total requirement from 100,000 to 75,000 samples to complete the study. The original design, based on the accrual of 100,000 samples, required 1 : 1 randomisation; the later design, which required only 75,000 samples, changed the randomisation to 3 : 1 to achieve the required numbers, with a final paired–manual ratio of 2 : 1. This change retained equal numbers of ThinPrep and SurePath in each arm. The purpose of the manual arm was to ensure that manual reading was reported as it would be if no automated reading was taking place. The distribution of manual reading cytology grades in the manual and paired arms was compared for the two periods before and after the change in randomisation in order to determine whether this change had any impact.

The analysis compares the FMR with the FAR including the results of HPV triage. A ‘positive’ test was one that led to the woman being referred directly to colposcopy (moderate or worse or a result of borderline/mild dyskaryosis accompanied by a positive HPV test). A ‘negative’ test was a result of negative or borderline/mild dyskaryosis with a negative HPV test. The FAR was defined as positive if the cytology result was moderate or severe, or if the cytology result was borderline or mild with a positive HPV test. An FAR of borderline or mild with negative HPV was considered as negative. For borderline/mild samples where the HPV status was not known, the result was taken as positive if the woman was referred to colposcopy. The same applied to the FMR. The main analysis was conducted for each of the ThinPrep Imaging System and BD FocalPoint GS Imaging System arms, based on cytological and histological findings. Tables 10 and 11 show the final analysis of the paired data. Table 10 analyses the disease-positive outcomes (defined as CIN2+, essentially all cases requiring treatment). Table 11 includes histological outcomes that are CIN1 or less (CIN1–), essentially all cases not requiring treatment. The outcome of colposcopy was taken to be the gold standard, available only for those women who were referred to colposcopy. Note that in Tables 10 and 11, numbers in enclosed brackets ([D] and [H]) are those that, from the nature of the design, cannot be directly observed, because women who were negative on both the manual and automated reading were not referred to colposcopy.

| | FAR positive | FAR negative |
|---|---|---|
| FMR positive | E | F |
| FMR negative | G | [H] |
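The definition of a ‘positive’ and ‘negative’ test used for both the FMR and the FAR can be written as a small classification function. This is a sketch under stated assumptions: the grade labels are hypothetical strings, grades worse than severe are omitted for brevity, and results other than those named in the definition (for example inadequate samples) are deliberately rejected because their handling is not specified here.

```python
from typing import Optional

# Hypothetical labels; grades worse than "severe" would also belong in HIGH_GRADE.
HIGH_GRADE = {"moderate", "severe"}
LOW_GRADE = {"borderline", "mild"}


def is_positive(cytology: str, hpv_positive: Optional[bool], referred: bool = False) -> bool:
    """Classify a final result (FMR or FAR) as test positive or negative.

    cytology     -- cytology grade of the final result
    hpv_positive -- HPV triage result, or None if the HPV status is not known
    referred     -- whether the woman was referred to colposcopy
    """
    if cytology in HIGH_GRADE:
        # Moderate or worse leads to direct referral to colposcopy.
        return True
    if cytology in LOW_GRADE:
        if hpv_positive is None:
            # HPV status unknown: positive only if the woman was referred.
            return referred
        return hpv_positive
    if cytology == "negative":
        return False
    raise ValueError(f"classification not defined for result: {cytology!r}")
```

Applying the same function to the FMR and the FAR of each paired sample gives the row and column of the 2 × 2 tables in which that sample is counted.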

We estimated the relative sensitivity of automated screening against manually read cytology outcomes to detect both CIN2+ and CIN3+. CIN2+ represents the threshold for treatment and was used to determine true-positives. However, detection of CIN3+ was also used as a clinical outcome in the analysis.

Estimating the relative sensitivity using CIN2+ as disease positive

The sensitivity of the FAR from Table 10 = (A + C)/(A + B + C + [D]).

The sensitivity of the FMR from Table 10 = (A + B)/(A + B + C + [D]).

Although D is unknown and the absolute sensitivity cannot be calculated, the relative sensitivity can be calculated as R = (A + C)/(A + B).

The 95% confidence interval (CI) is calculated as [R/y,R × y], where

A calculation for the relative sensitivity was undertaken for both the BD FocalPoint GS Imaging System and the ThinPrep Imaging System in the paired arm.
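As an illustration, the relative sensitivity and an interval of the form [R/y, R × y] can be computed from the cells of Table 10 as follows. The expression used for y is an assumption: it is the log-scale variance commonly used for the ratio of two sensitivities in a paired design, Var(log R) = (B + C)/[(A + B)(A + C)], and may not be the exact formula applied in the study. The function name and the default z = 1.96 are likewise illustrative.

```python
import math
from typing import Tuple


def relative_sensitivity(a: int, b: int, c: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Return (R, lower, upper) for automated versus manual reading.

    a -- CIN2+ cases positive on both FMR and FAR
    b -- CIN2+ cases positive on FMR only
    c -- CIN2+ cases positive on FAR only
    """
    r = (a + c) / (a + b)  # R = (A + C)/(A + B); the unknown D cancels
    # Assumed standard error of log(R) for paired data (not taken from the report).
    se_log_r = math.sqrt((b + c) / ((a + b) * (a + c)))
    y = math.exp(z * se_log_r)
    return r, r / y, r * y
```

Because the interval is built on the log scale, it is symmetric around R in ratio terms: the product of the lower and upper limits equals R squared.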

Relative specificity rates of screening by automated and manual reading

The relative specificity was calculated in a similar manner to that for the relative sensitivity using Table 11.

H is unknown – but a very close estimate can be achieved by assuming that D (CIN2+ not detected by either manual or auto) is 0 so that H = N – [E + F + G + A + B + C], where N is the total number of samples.

The calculations of relative sensitivity and specificity were undertaken for both the BD FocalPoint GS Imaging System and the ThinPrep Imaging System separately.
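A minimal sketch of the relative specificity calculation is given below. It assumes that "in a similar manner" means taking the ratio of the FAR-negative to the FMR-negative counts among women without CIN2+, that is (F + H)/(G + H), with H estimated as described above by setting D to 0. The function name and return format are hypothetical.

```python
from typing import Tuple


def relative_specificity(
    n: int, a: int, b: int, c: int, e: int, f: int, g: int
) -> Tuple[int, float]:
    """Return (estimated H, relative specificity of automated versus manual reading).

    n       -- total number of samples
    a, b, c -- observed cells of Table 10 (CIN2+)
    e, f, g -- observed cells of Table 11 (CIN1 or less)
    """
    # H = N - [E + F + G + A + B + C], assuming D = 0.
    h = n - (e + f + g + a + b + c)
    # FAR negative among non-diseased: F + H; FMR negative among non-diseased: G + H.
    return h, (f + h) / (g + h)
```

Because H is very large relative to F and G, the ratio will typically be close to 1, and small differences between F and G drive the result.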

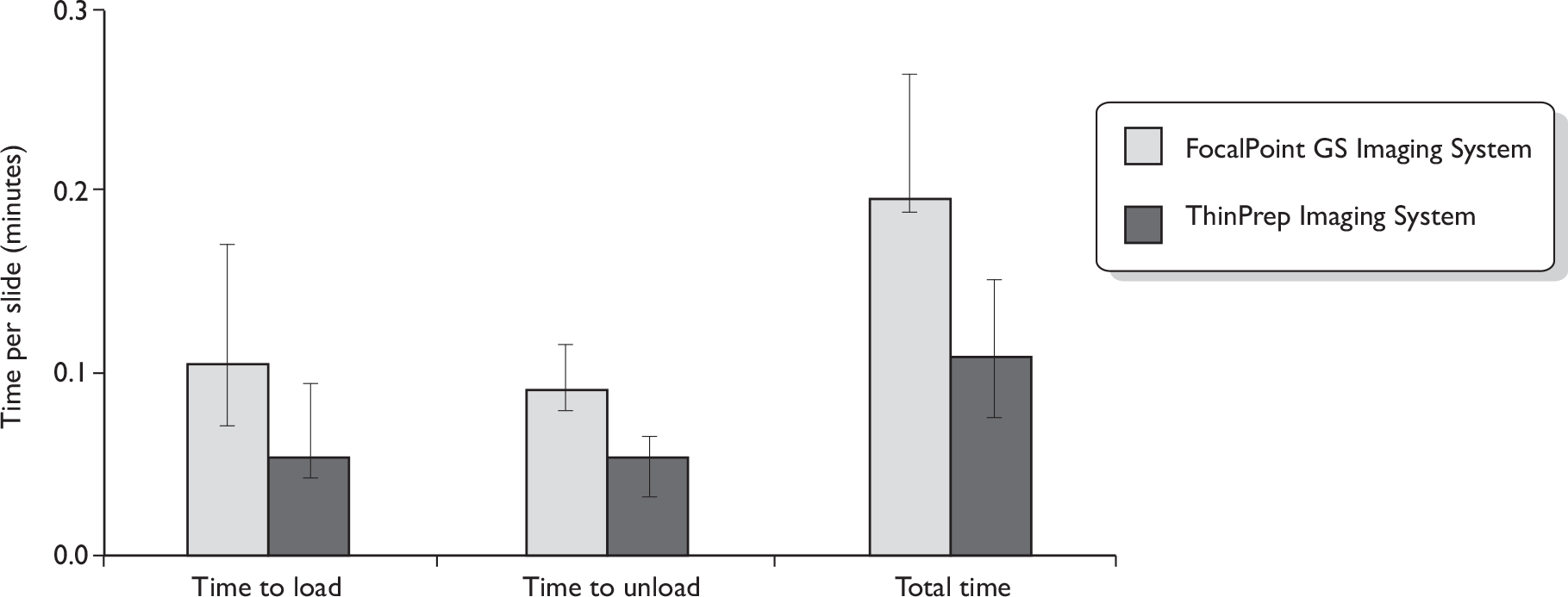

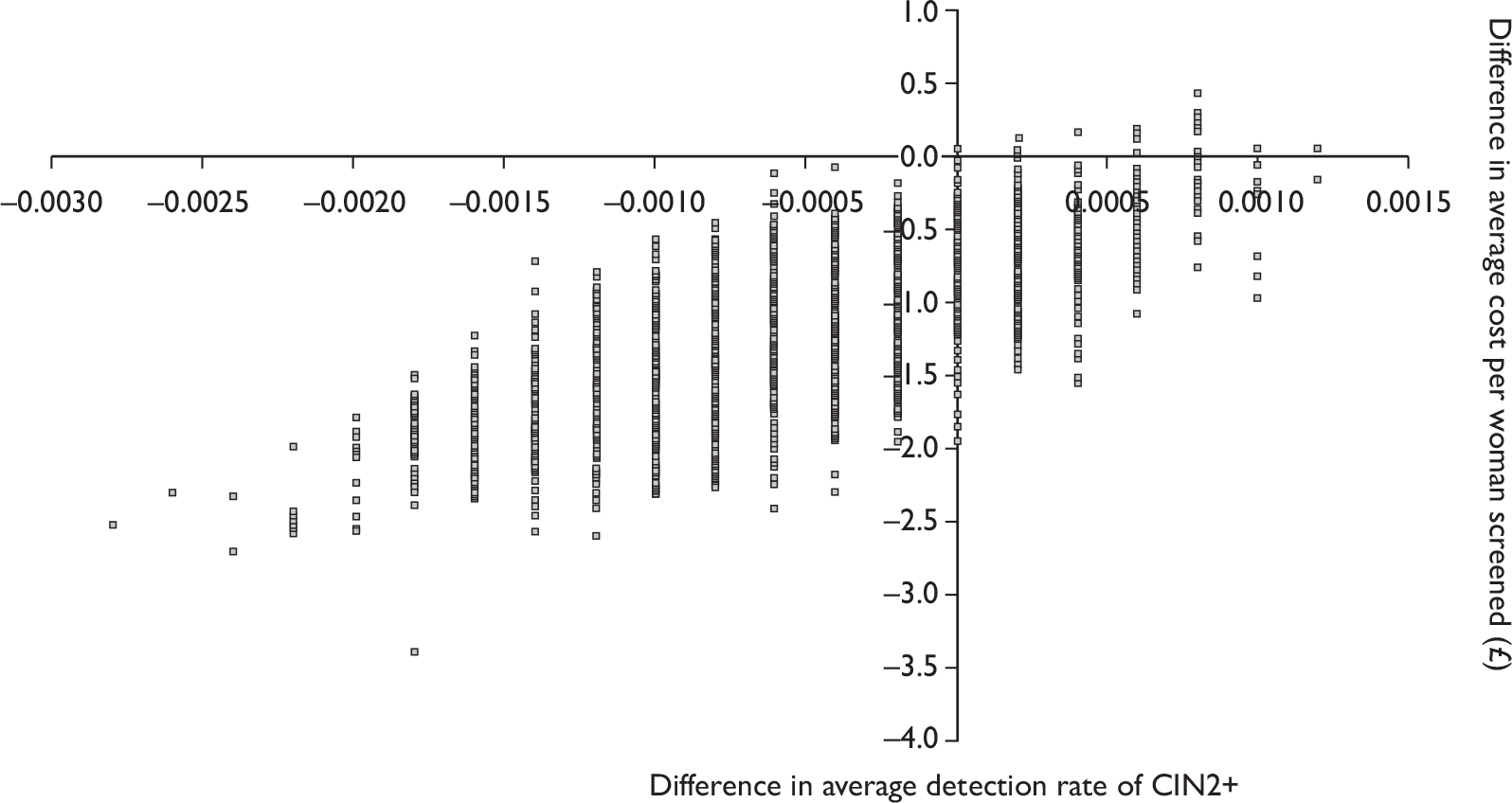

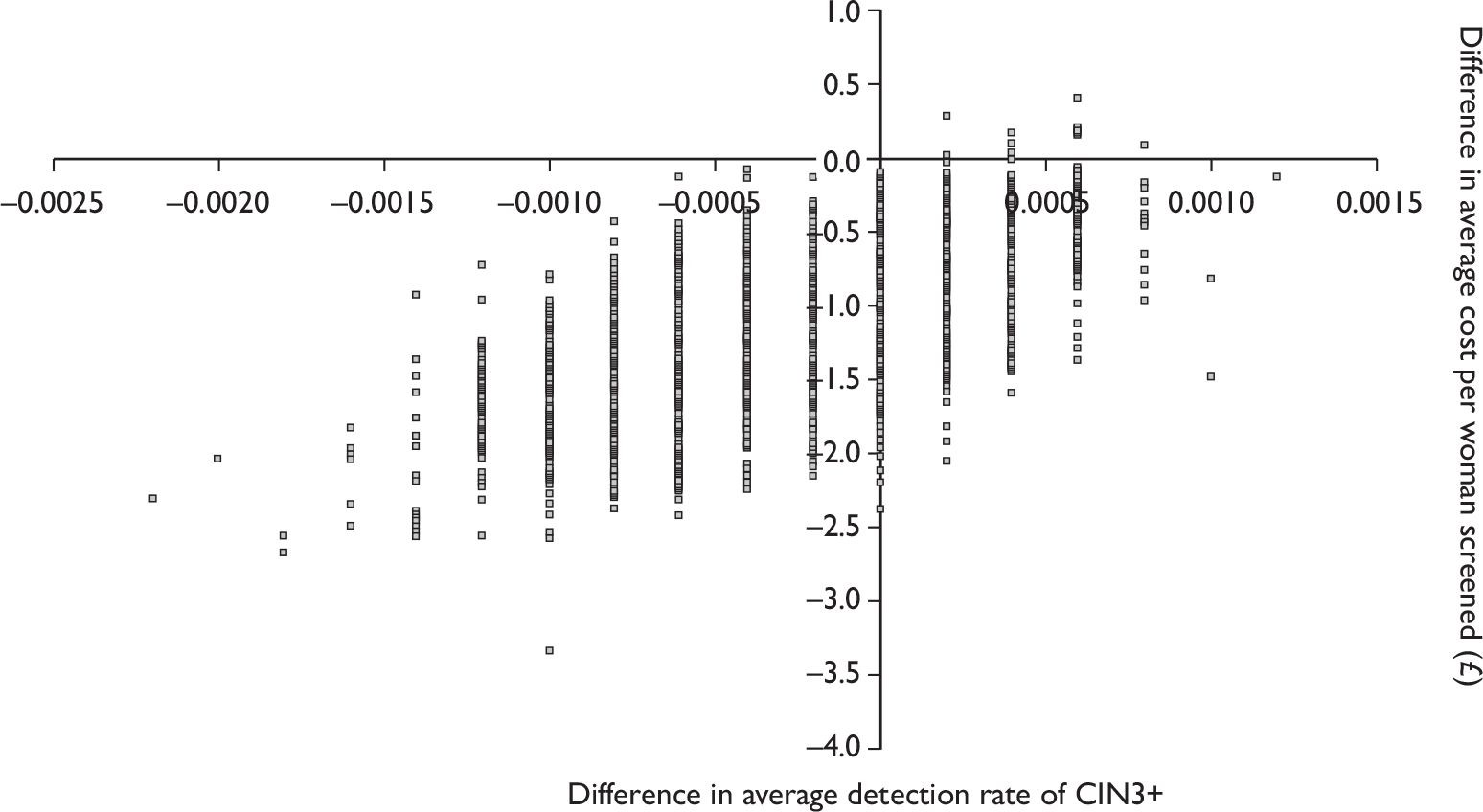

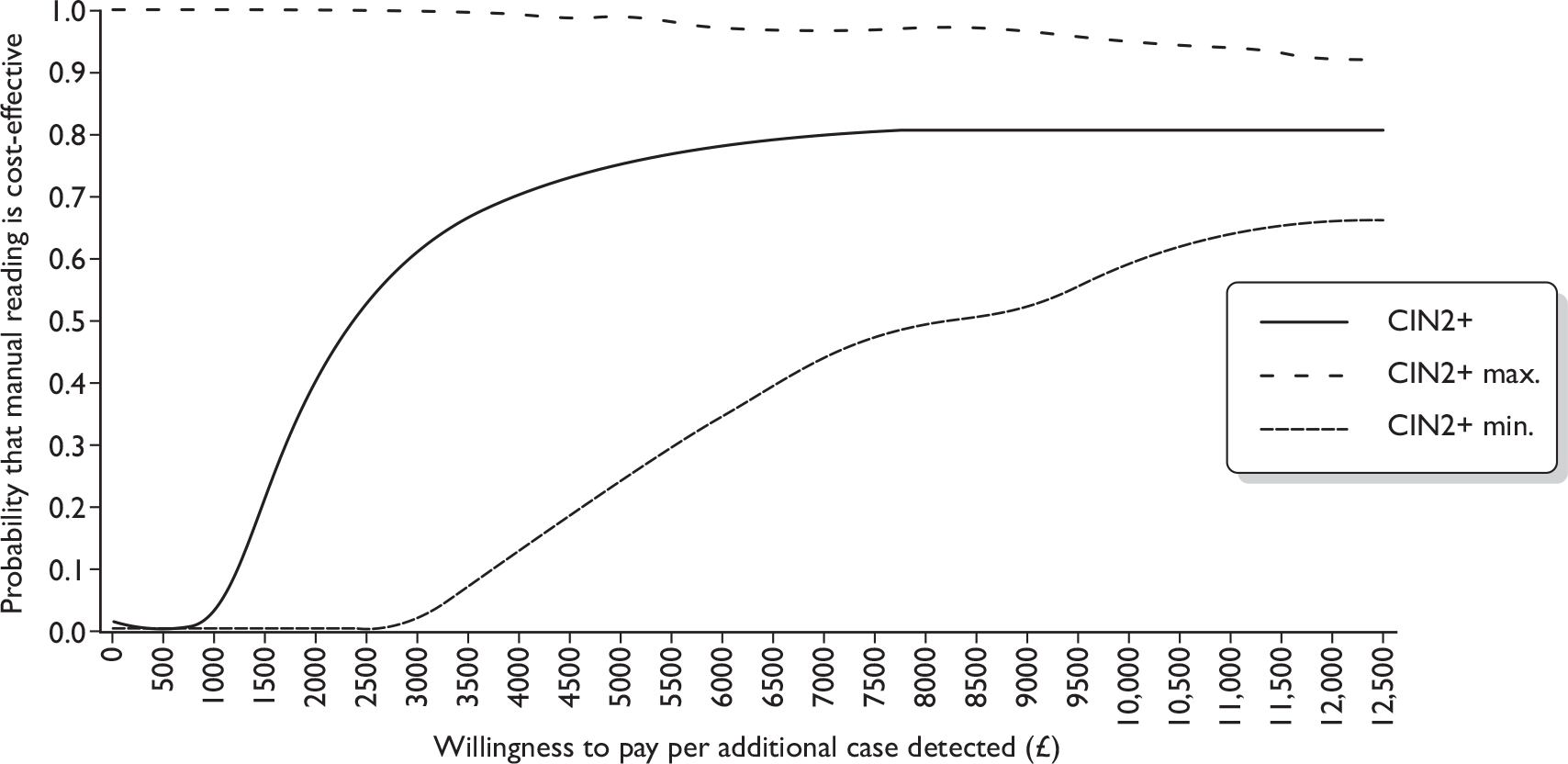

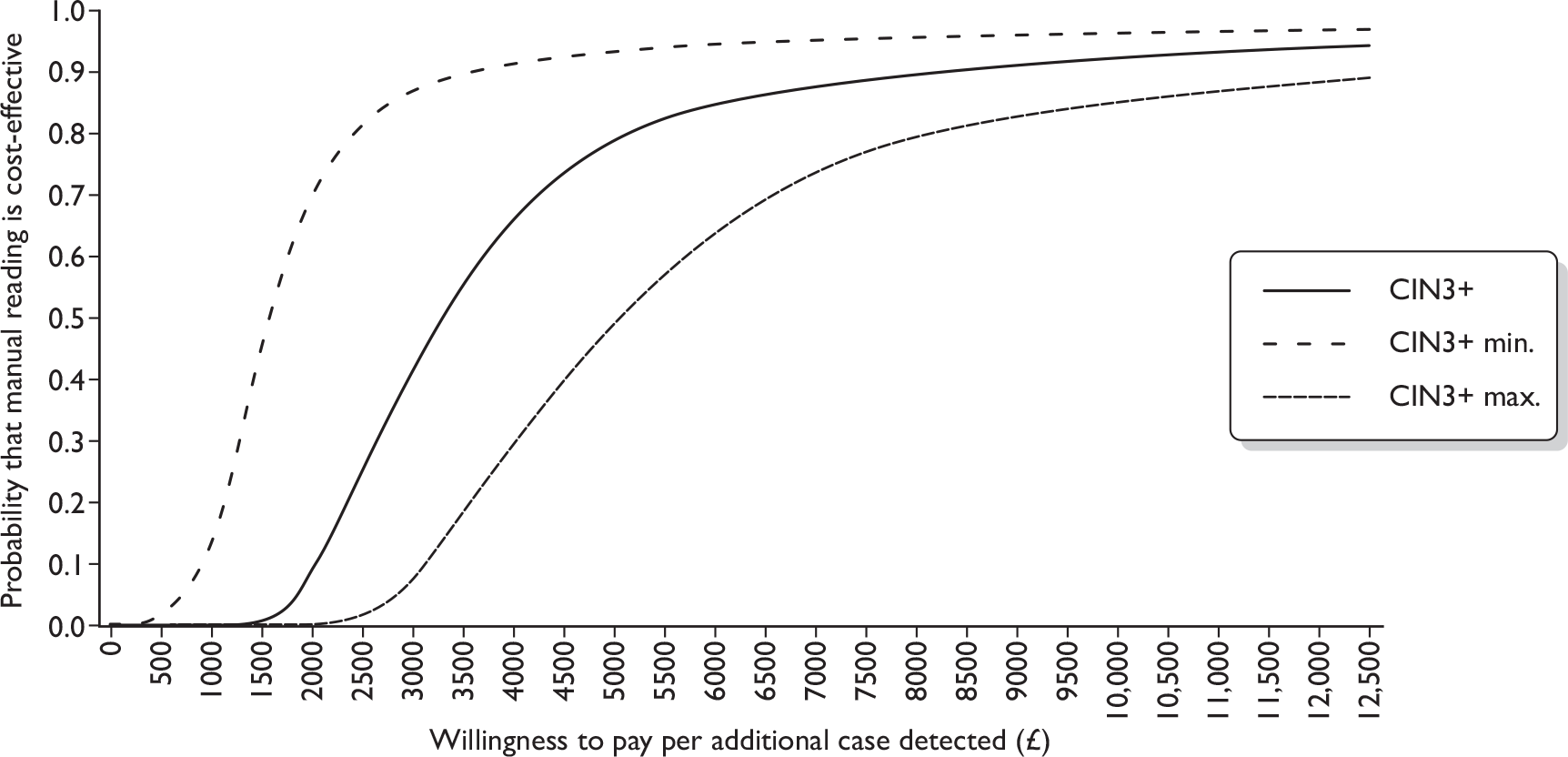

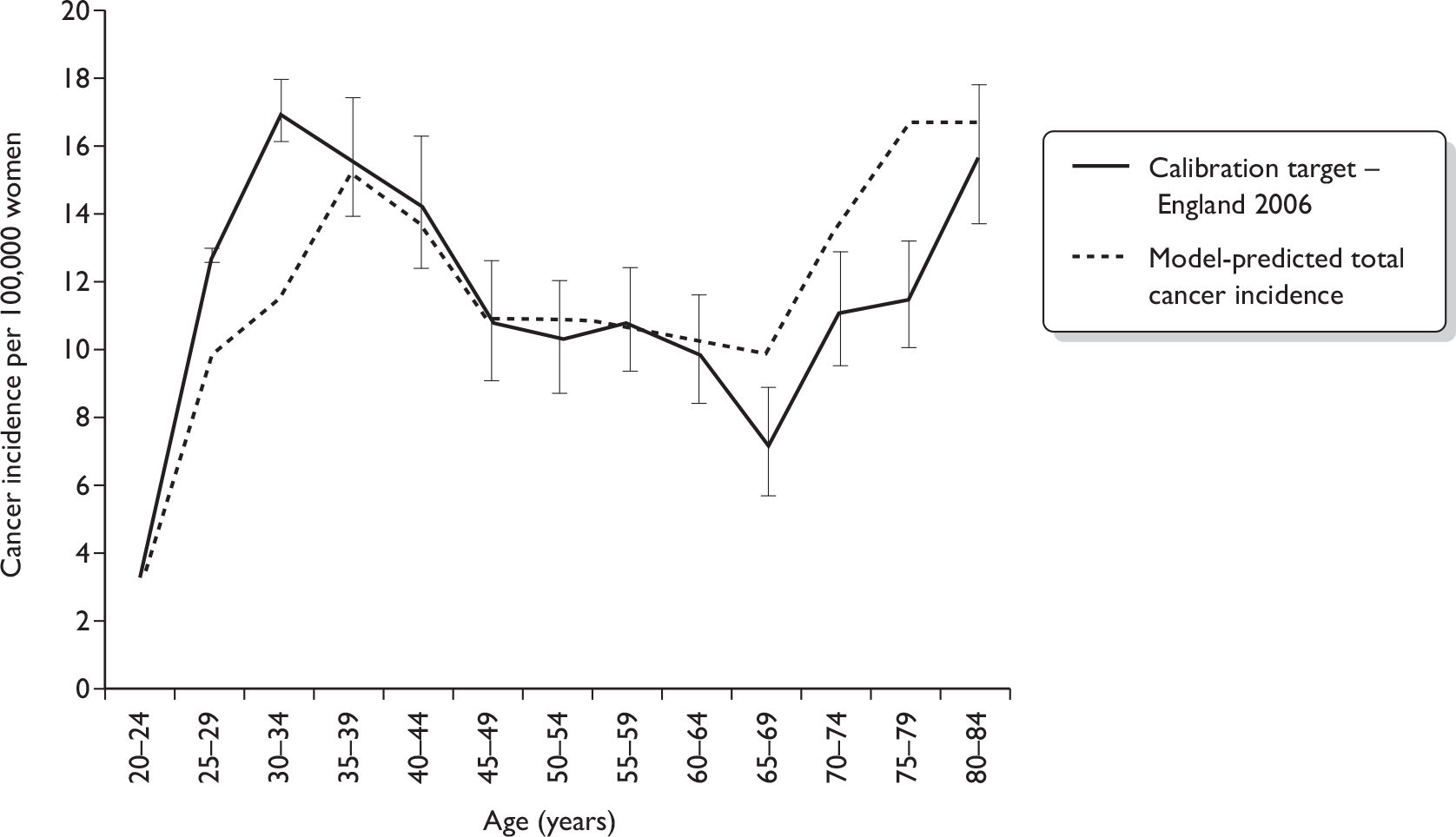

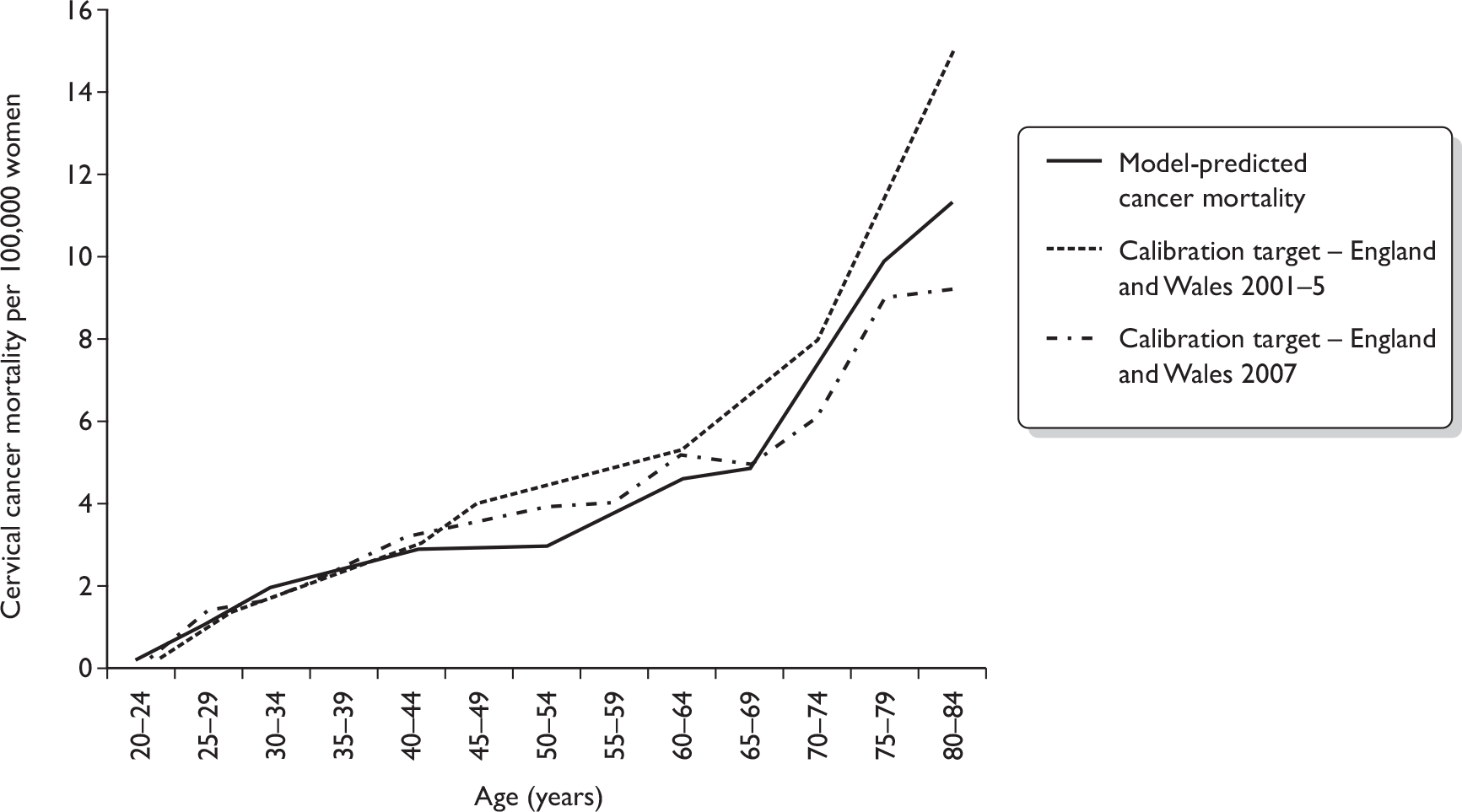

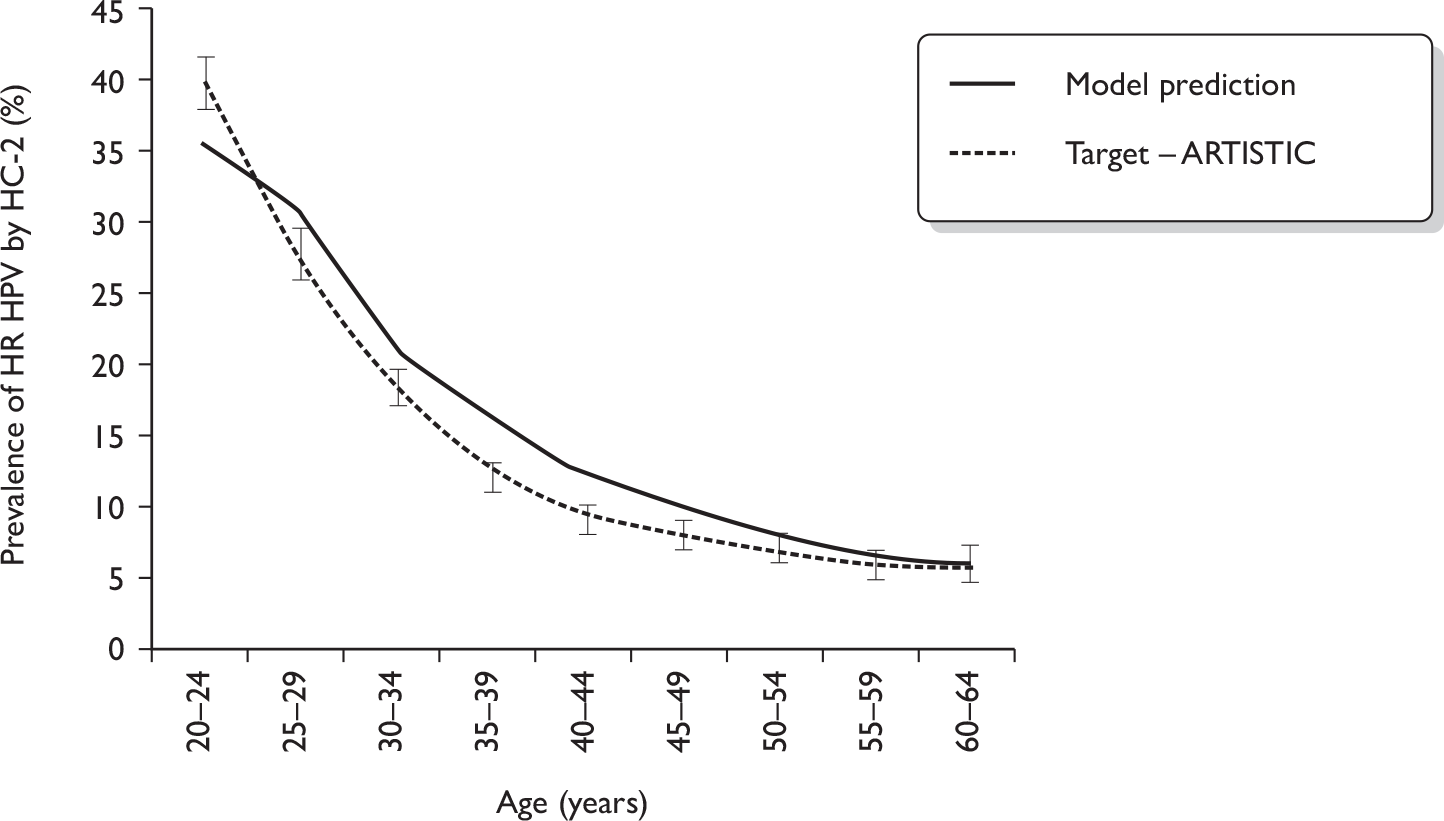

Further analysis for Becton Dickinson FocalPoint Guided Screener Imaging System and ThinPrep Imaging System involving unpaired arms data and specific data for the Becton Dickinson FocalPoint Guided Screener Imaging System