Notes

Article history paragraph text

The research reported in this issue of the journal was funded by the HTA programme as project number 06/98/01. The contractual start date was in October 2010. The draft report began editorial review in April 2012 and was accepted for publication in August 2012. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2014. This work was produced by Cook et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Scientific summary

Background

The randomised controlled trial (RCT) is widely considered to be the gold standard study for comparing the effectiveness of health interventions. Central to the design and validity of a RCT is a calculation of the number of participants needed (the sample size). This provides reassurance that the trial will identify a difference of a particular magnitude if such a difference exists. The value used to determine the sample size can be considered the ‘target difference’. From both a scientific and an ethical standpoint, selecting an appropriate target difference is of crucial importance. Specifying too small a target difference could be a wasteful (and unethical) use of data and resources. Conversely, too large a target difference could lead to an important difference being easily overlooked because the study is too small. Furthermore, an undersized study may not usefully contribute to the knowledge base and could potentially have a detrimental impact on decision-making.

Determination of the target difference, as opposed to statistical approaches to calculating the sample size, has been greatly neglected. A variety of approaches have been proposed for formally specifying what an important difference should be [such as the ‘minimal clinically important difference (MCID)’], although the current state of the evidence is unclear, particularly with regard to informing RCT design by specifying a target difference.

Aim

The aim was to provide an overview of the current evidence on methods for specifying the target difference in a RCT sample size calculation.

Objectives

-

To conduct a systematic review of methods for specifying a target difference.

-

To evaluate current practice by surveying triallists.

-

To develop guidance on specifying the target difference for a RCT.

-

To identify future research needs.

Methods

The study comprised three interlinked components.

Systematic review of methods for specifying a target difference for a randomised controlled trial

A comprehensive search of both biomedical and some non-biomedical databases was undertaken. Additionally, clinical trial textbooks and guidelines were reviewed. To be included, a study had to report a formal method that could potentially be used to specify a target difference. The biomedical and social science databases searched were MEDLINE, MEDLINE In-Process & Other Non-Indexed Citations, EMBASE, Cochrane Central Register of Controlled Trials (CENTRAL), Cochrane Methodology Register, PsycINFO, Science Citation Index, EconLit, Education Resources Information Center (ERIC) and Scopus for in-press publications. All were searched from 1966 or the earliest date of the database coverage and searches were undertaken between November 2010 and January 2011.

Identification of triallists’ current practice

This involved two surveys:

-

Members of the Society for Clinical Trials (SCT) were sent an invitation (followed by a reminder) to complete an online survey through the society’s email distribution list. Respondents were asked about their awareness and use of, and willingness to recommend, methods for determining a target difference in a RCT.

-

Survey of leading UK- and Ireland-based triallists. The survey was sent to UK Clinical Research Collaboration (UKCRC)-registered Clinical Trials Units (CTUs), Medical Research Council (MRC) UK Hubs for Trials Methodology Research and National Institute for Health Research (NIHR) Research Design Services (RDS). One response per triallist group was invited. In addition to the information collected in the SCT survey, this survey included questions on the approach used for the most recent trial developed. The initial request was personalised and sent by post, followed by two reminders.

Production of guidance on specifying the target difference for a randomised controlled trial

The draft guidance was developed by the project steering and advisory groups utilising the results of the systematic review and surveys. Findings were circulated and presented to members of the combined group at a face-to-face meeting along with a proposed outline of the guidance document structure and list of recommendations. Both the structure and main recommendations were agreed at this meeting. The guidance was subsequently drafted and circulated for further comment.

Results

Systematic review of methods for specifying a target difference for a randomised controlled trial

The search identified 11,485 potentially relevant studies, of which 1434 were selected for full-text assessment, with 777 included in the review. Fifteen clinical trial textbooks and the International Conference on Harmonisation (ICH) tripartite guidelines were also reviewed. Seven methods were identified – anchor, distribution, health economic, opinion-seeking, pilot study, review of evidence base (RoEB) and standardised effect size (SES) – each with important variations. The most frequently identified methods used to determine an important difference were the anchor, distribution and SES methods. No new methods were identified by this review beyond the seven pre-identified methods described earlier; however, substantial variations in the implementation of each method were detected. It is critical when specifying a target difference to decide whether the focus is to determine an important and/or a realistic difference as the appropriate methods vary accordingly. Some methods for determining an important difference within an observational study are not appropriate for specifying a target difference in a RCT (e.g. statistical hypothesis testing approach). Multiple methods for determining an important difference were used in some studies although the combinations varied, as did the extent to which results were triangulated.

Identification of triallists’ current practice

The two surveys regarding formal methods to determine the target difference in a RCT provided insight into current practice among clinical triallists.

Society for Clinical Trials survey

Of the 1182 members on the SCT membership email distribution list, 180 responses were received (15%). Awareness ranged from 69 (38%) for the health economic method to 162 (90%) for the pilot study method. Usage was lower than awareness and ranged from 16 (9%) for the health economic method to 133 (74%) for the pilot study method. The highest level of willingness to recommend was for the RoEB method (n = 132, 73%) and the lowest was for the health economic method (n = 28, 16%). Willingness to recommend among those who had used a particular method was substantially higher than across all respondents: the lowest level was for the opinion-seeking method (n = 40, 56%) and the highest level was for the RoEB method (n = 118, 89%).

UK- and Ireland-based triallist survey

Of the 61 surveys sent out, 34 (56%) responses were received. Awareness of methods ranged from 97% (n = 33) for the RoEB and pilot methods to only 41% (n = 14) for the distribution method. All respondents were aware of at least one of the different formal methods for determining the target difference. Usage ranged from 24% (n = 8) for both the distribution and health economic methods to 94% (n = 32) for the RoEB method. Usage was substantially less than awareness for all methods except for the pilot study, RoEB and SES methods. The highest level of willingness to recommend was for the RoEB method (76%, n = 26) followed by the SES method (65%, n = 22), with the distribution method having the lowest level of willingness to recommend (26%, n = 9). Based on the most recent trial (n = 33), all bar three groups (91%, n = 30) used a formal method. The vast majority (91%, n = 30) stated that the target difference was one that was viewed as important by a stakeholder group. Just over half (61%, n = 20) stated that the basis for determining the target difference was to achieve a realistic difference given the interventions under evaluation.

Guidance on specifying the target difference in a randomised controlled trial

Guidance was developed for specifying the target difference in a RCT. Additionally, guidance on reporting the sample size calculation was developed which includes a minimum set of items for reporting the specification of the target difference in the trial protocol and main results paper. A minimum set of items for reporting the specification of the target difference in the trial protocol and main results paper was developed.

Conclusions

The specification of the target difference is a key component of a RCT design. There is a clear need for greater use of formal methods to determine the target difference and for better reporting of its specification. Although no single method provides a perfect solution to a difficult question, methods are available to inform specification of the target difference and should be used whenever feasible. Raising the standard of RCT sample size calculations and the corresponding reporting of them would aid health professionals, patients, researchers and funders in judging the strength of the evidence and ensure better use of scarce resources.

Further research priorities

-

A comprehensive review of observed effects in different clinical areas, populations and outcomes is needed to assess the generalisability of the Cohen’s interpretation for continuous outcomes, and to provide guidance for binary and survival (time-to-event) measures. To achieve this, an accessible database of SESs should be set up and maintained. This would aid the prioritisation of research and help researchers, funders, patients and health-care professional assess the impact of interventions.

-

Prospective comparison of formal methods for specifying the target difference is needed in the design of RCTs to assess the relative impact of different methods.

-

Practical use of the health economic approach is needed; the possibility of developing a decision model structure that reflects the view of a particular funder (e.g. the Health Technology Assessment programme) and incorporates all relevant aspects, should be explored.

-

Further exploration of the implementation of the opinion-seeking approach in particular is needed. The reliability of a suggested target difference that would lead to a change in practice should be explored. Additionally, the impact of eliciting the opinion of different stakeholders should also be evaluated.

-

The value of the pilot study for estimating parameters (e.g. control group event proportion) for a definitive study should be further explored by comparing pilot study estimates with the resultant definitive trial results.

-

Qualitative research on the process of specifying a target difference in the context of developing a RCT should be carried out to explore the determining factors and interplay of influences.

Funding

The Medical Research Council UK and the National Institute for Health Research Joint Methodology Research Programme.

Chapter 1 Introduction and background

Introduction

The randomised controlled trial (RCT) is widely considered to be the best method for comparing the effectiveness of health interventions. 1 But simply detecting any difference in the effectiveness of interventions may not be sufficient or useful: if the interventions differ to a degree or in a manner that is of little consequence in patient, clinical or economic (or other meaningful) terms, then the two interventions might be considered equal. If RCTs are to produce useful information that can help patients, clinicians and planners make decisions about health care, it is essential that they are designed to detect differences between the interventions that are meaningful.

Specifying the ‘target difference’, the difference that a trial sets out to detect, is also necessary for calculating the number of participants who need to be involved. It is an essential component of an a priori sample size calculation. Performed before the trial starts, this calculation determines the number of participants needed for the trial to reliably detect a difference of predetermined magnitude between interventions. Assuming that the trial manages to recruit the number of participants determined by the sample size calculation, the sample size calculation provides reassurance that the trial is likely to detect such a difference to a predefined level of statistical precision.

From both a scientific and ethical standpoint, selecting an appropriate target difference is of crucial importance. If a small target difference is determined as the appropriate measure of a meaningful difference between one intervention and another, the sample size calculation will usually indicate that a large number of participants are needed for a trial. Selecting a target difference that is too small to be meaningful may result in a large study identifying a difference between interventions that may have limited patient, clinical or economic significance. Conversely, when a larger difference is targeted, fewer participants will be required, but if the target difference is too large then this may lead to a small study incapable of confirming a smaller important difference. Either would be a wasteful (and unethical) use of data and resources. Furthermore, an undersized study may not usefully contribute to the knowledge base and could detrimentally impact on decision-making. 2 The importance and impact of the target difference chosen can be demonstrated using two motivating examples (Table 1).

| Trial | Target differencea | Result | Triallists’ interpretation |

|---|---|---|---|

| Norwegian Spine Study3 | Mean difference of 10 points in the Oswestry Disability Index (SD 18 points) | −8.4 points, 95% CI −13.2 to −6.6 points | ‘As there is no consensus based agreement of how large a difference between groups must be to be of clinical importance it is impossible to conclude whether the effect found in our study is of clinical importance’ |

| Full-thickness macular hole and Internal Limiting Membrane peeling study (FILMS)4 | Mean difference of six letters in distance visual acuity (SD 12 letters) | 4.8 letters, 95% CI −0.3 to 9.8 letters | ‘There was no evidence of a difference in distance visual acuity after the internal limiting membrane peeling and no-internal limiting membrane peeling techniques. An important benefit in favor of no-internal limiting membrane peeling was ruled out’ |

The Norwegian Spine Study was a RCT comparison between surgery with disc prosthesis and conservative non-surgical treatment (multidisciplinary rehabilitation) for patients with chronic lower back pain. 3 Sample size calculations indicated that 180 participants were needed for the study to reliably detect a difference at 2 years of 10 points in the primary outcome measure, the Oswestry Disability Index. Ultimately, 179 participants were recruited and the analysis demonstrated a statistically significant difference of −8.4 points between the treatments in favour of surgery, less than the prespecified value although such a magnitude was comfortably within a plausible range of uncertainty [95% confidence interval (CI) −13.2 to −6.6 points]. Should clinical practice now change given the study’s finding? Was the observed difference of a sufficient magnitude to warrant the risk that surgery entails over the conservative treatment? The study investigators concluded that ‘As there is no consensus on how large a difference between groups must be to be of clinical importance it is impossible to conclude whether the effect found in our study is of clinical importance . . . our study underlines the need for such a consensus agreement.’ Thus, the study demonstrated a statistically significant difference between the interventions, but not one that met the authors’ own predefined target difference. Because the investigators had opted for a target difference that was difficult to justify, an otherwise well-conducted study did not produce a clear answer to the clinical question. Had a clear and defendable decision been reached before the start of the trial on what difference in Oswestry Disability Index can be considered clinically important, the research could have contributed more effectively to treatment decision-making.

The Full-thickness macular hole and Internal Limiting Membrane peeling Study (FILMS) compared peeling, or not, of the internal limiting membrane as part of surgery for a macular hole. 4 The primary outcome was visual acuity and the study was designed to detect a difference of six letters on an eye chart, although no clear justification for this choice was reported. Once completed and analysed, there was a mean difference of five letters (95% CI −0.3 to 9.8) between groups in favour of peeling although this was (just) not statistically significant at the 5% level. Five letters actually corresponds to one additional line on the eye chart and carries intuitive appeal and arguably should have been the target difference. Interpreting their findings in light of their selected target difference the authors concluded that ‘There was no [statistical] evidence of a difference in distance visual acuity after the internal limiting membrane peeling and no-internal limiting membrane peeling techniques. An important benefit in favor of no internal limiting membrane peeling was ruled out.’ Although the study did partially answer the research question, the findings were not as definitive as they could, and perhaps should, have been if a smaller and more widely accepted target difference had been used.

This study also included an economic evaluation, the primary outcome of which was the incremental cost per quality-adjusted life-year (QALY) saved. The evaluation found that, on average, internal limiting membrane peeling was less costly and more effective, although neither difference was statistically significant. Moreover, there was a 90% probability that internal limiting membrane peeling would be considered cost-effective compared with no internal limiting membrane peeling at a threshold that the UK NHS would generally consider acceptable. 5 Although such findings suggest that the balance of evidence favours internal limiting membrane peeling, interpretation of the results based on the chosen target difference suggests an inconclusive result.

Both of the examples above illustrate that the statistical evidence alone is an insufficient basis on which to interpret the findings of a study, which is ultimately dependent on how the study was designed and the wider context. The target difference is the difference that the study is designed to reliably detect. For example, a trial of nutritional supplementation was designed to detect a reduction from 50% to 25% in reported infections for critically ill patients receiving parenteral nutrition. 6 The trial seeks to inform a decision (do we adopt the new treatment or stay with the existing treatment?) and this involves not only identifying the differences for single measures but also weighing up the benefits, harms and (resource) costs of an alternative course of action.

Surprisingly, given its critical impact, the determination of the target difference, as opposed to statistical approaches to calculating the sample size, has been greatly neglected. 7,8 A variety of approaches have been proposed for formally determining what an important difference should be [such as the ‘minimum clinically important difference (MCID)’]; however, the relative merits of the available options for informing RCT design are uncertain.

Project summary

The Difference ELicitation in TriAls (DELTA) project described in this monograph aimed to address this gap in the evidence base. The study was prompted by the observation that a number of methods have been proposed that could potentially be used. Beyond the medical area, it is possible that there are further methods that might also be adopted. Reviews of subsets of methods have been conducted,2,9 but there is a need for a comprehensive review of the methods available that considers their use explicitly for the design of RCTs. There is also uncertainty about current practice among the clinical trials community and about the usage of methods in practice. The aim of this research project was to provide an overview of the current evidence through three main components:

-

A systematic review of potential methods for identifying a target difference developed within and outside the health field to assess their usefulness.

-

Identification of current ‘best’ trial practice using two surveys of triallists [members of the international Society for Clinical Trials (SCT) and leading UK clinical trial researchers from UK Clinical Research Collaboration (UKCRC) Clinical Trials Units (CTUs)].

-

Production of a structured guidance document to aid the design of future trials.

The research was commissioned and funded by the UK Medical Research Council (MRC) Methodology Research Programme (MRC005885 and G0902147 respectively) and was undertaken by a collaborative group in which the majority of members have extensive experience of the design and conduct of RCTs (both as investigators and as independent committee members) and have conducted methodological research related to RCTs (e.g. quality-of-life measurement, statistical methodology, reporting, surgical trials and economic evaluation).

The project website can be found at www.abdn.ac.uk/hsru/research/assessment/methodological/delta/.

Background

Why seek an important difference?

The RCT examples in the previous section illustrate the difference between statistical evidence of a difference and what might be described as an important difference. It is worth considering why there should be a distinction between the two. Conventional RCT sample size calculations provide reassurance that RCTs will be able to detect a difference of a particular magnitude while also controlling for the risk of falsely concluding a difference when there is none. In statistical hypothesis testing terminology, this is controlling the possibility of falsely accepting that there is a difference when there is not (a type I error) and failing to reject the hypothesis of no difference when there is a genuine difference (a type II error). The risk of such errors exists because of the variability in outcome and the need for sufficient data to achieve statistical precision. However, if these errors are controlled, why would we not act on the basis of an observed statistical difference? The reason lies in the consequences of actions. Changing from one treatment to another can result in benefits or harms, or in costs, to the patient or to the care provider. Returning to the Norwegian Spine Study, all major surgical procedures incur a very small risk of serious morbidity or mortality related to anaesthesia. From the perspective of patients and clinicians, is this risk warranted for the anticipated benefit gained? Health-care providers wish to know that the not insubstantial costs (resource costs as much as financial costs) related to surgery are worthwhile. This underlying issue has a long history in the statistical literature. Gosset,10 the statistician of ‘t-test’ fame, wrote as early as 1939:

When I first reported on the subject, I thought that perhaps there might be some degree of probability which is conventionally treated as sufficient in such work as ours and I advised that some outside authority should be consulted as to what certainty is required to aim at in large scale work. However it would appear that in such work as ours the degree of certainty to be aimed at must depend on the pecuniary advantage to be gained by following the result of the experiment, compared with the increased cost of the new method, if any, and the cost of each experiment [emphasis added].

The ‘advantage’ and associated costs drive our desire for a particular degree of certainty in decision-making; in Gosset’s case, working for Guinness, it was with regards to the brewing industry. The context should drive how certain we need to be that we have come to the correct conclusion and therefore there is no universal value that can be applied in all scenarios. If ‘a lot’ is at stake then we wish to be more certain than when the consequences of an incorrect decision are minor. The parallel with regards to health care is clear, although in this setting ‘advantage’ and ‘costs’ can be broadened to include not just economic definitions (based on the use of resources and profit or loss) but also benefits and harms for patients; this broadening of scope is needed to allow an informed decision about treatment to be made. It can be argued that a full answer to the question will therefore require all relevant benefits, harms and costs to be considered simultaneously. This naturally leads to decision modelling approaches (see How can an important difference be determined? and Chapter 2 for further details) that seek to link both the current evidence and the decision (including potential consequences for costs and health of making the wrong choice). The desire for an (clinically) ‘important’ difference can be viewed as a middle ground seeking to ensure that any harms and costs are incurred for a good reason: the patient receives spinal surgery over alternatives because we can realistically expect benefits to justify the associated risks and costs. Focusing on a benefit (or harm) as the most important outcome is a natural and intuitive, if imperfect, way to guide our decision.

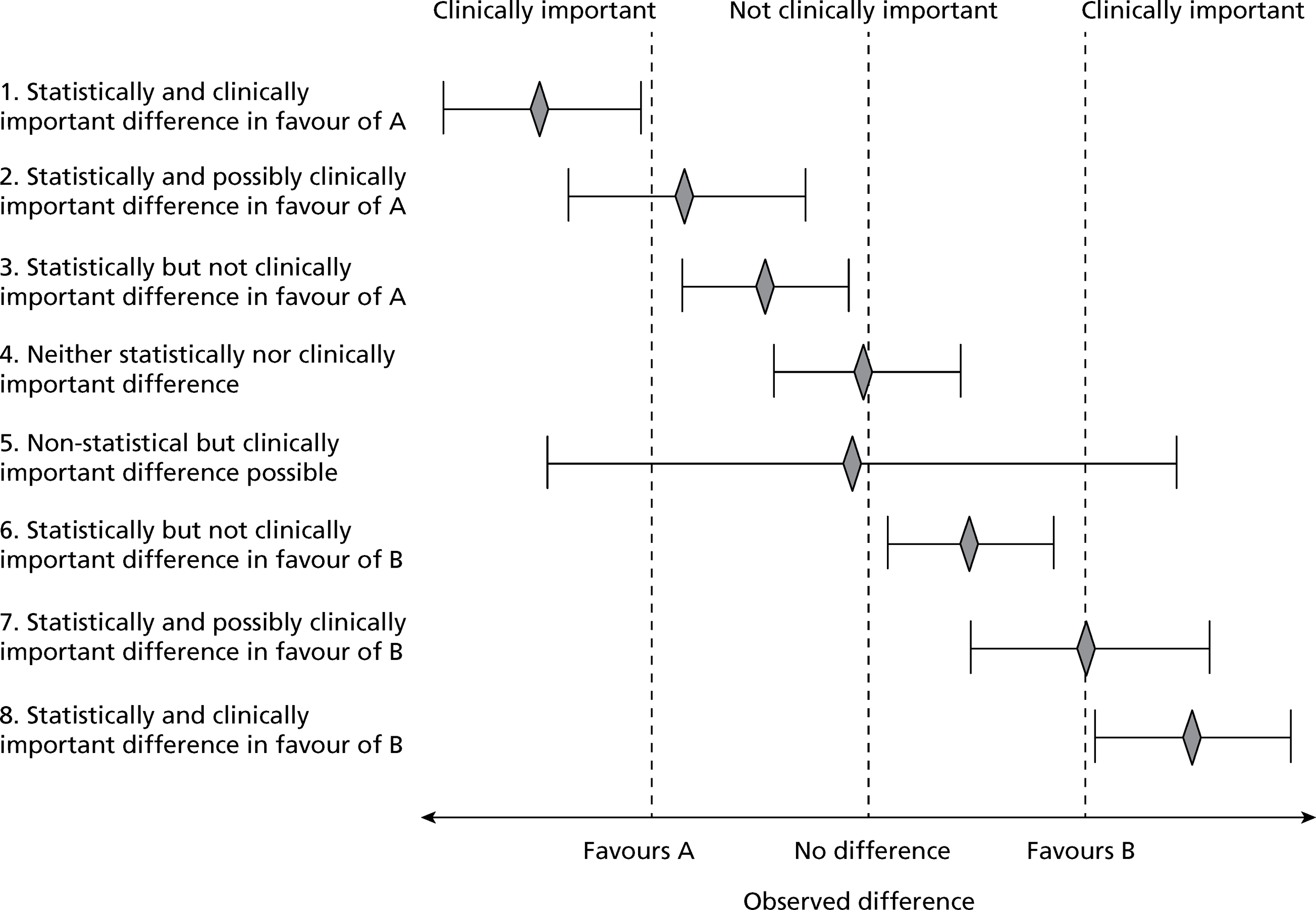

The implication of imposing an interpretation of clinical importance onto the statistical result (observed difference) can be seen in Figure 1. Eight possible (although not exhaustive) scenarios, with regards to interpretation when comparing two interventions, are shown; they reflect possible combinations of statistical and clinical importance. Drawing an interval beyond which clinical importance is determined is obviously a simplification: in reality, a gradation in merit is more likely. Clearly, this approach does not provide a full answer as it is focused on a single outcome (ignoring impact on other outcomes) and is a simplification even for that outcome; however, it is still a useful and intuitive way to interpret the result. Determining what constitutes an important difference, however, is not as straightforward as it seems. A number of factors come into play. These include the potential for improvement (beyond the control intervention in a RCT setting) in the anticipated population as well as the type of outcome and whether the focus is restricted to this outcome alone or whether the wider impacts (e.g. benefits, harms and costs) of the interventions are to be considered. Expectations about what can be viewed as a realistic difference may also be brought into consideration [e.g. the performance of a similar intervention against the same (control) intervention]. Specification of an important difference is a particular challenge for certain types of outcomes.

FIGURE 1.

Statistically and clinically important difference.

Inherent meaning versus constructed scales

The difficulty of interpreting outcomes varies. Some outcomes have an inherent and tangible meaning, whereas others do not and are much more difficult to interpret. 11 If spine surgery were to lead to an additional 10 in every 100 people being able to walk as opposed to being bedridden without surgery, we would be comfortable in noting its benefit (at least in terms of mobility). We would most likely view any genuine difference in mortality, however small, as being important. However, it would be valuable to know the impact of the benefits or harms associated with spine surgery on the health of the living by considering an array of less dramatic outcomes. Many medical conditions are chronic and not life-threatening, although they clearly impact on the health and well-being of an individual. The impact of treatment is not likely to be ‘miraculous’ and gains are typically small or moderate and may not be readily detected or summarised by function or symptom measurement. How can we evaluate this impact on health? Health-related quality-of-life instruments address this need by generating latent scores that represent well-being or quality of life. Typically, multiple responses by a patient to a series of carefully designed and tested questions are used to generate a score for a particular dimension or construct of health, such as social well-being or ability to perform everyday tasks. However, the range of possible values for such dimensions is dependent on the framing of the individual scores and on the calculation of the weights used to generate the overall score. As a result, the impact in real terms of a difference in 1 point on the scale is unclear, as is the interpretation of any of the possible scores. Although undoubtedly useful for measurement and comparison of otherwise unmeasurable health-related outcomes, determining the difference in these scores that represents meaningful benefit or harm in real terms is often uncertain; this difficulty of interpreting quality-of-life measures is well known. 12–14

Along with seeking to validate and assess the inherent reliability of such a measure, there is a need for some assessment of its ability to detect a meaningful or important difference. Evaluating this (often described as measuring responsiveness) is now a recognised part of the process of validating a quality-of-life instrument. 14 Interpreting scales that purport to measure latent constructs which cannot be directly measured, such as generic and condition-specific health, is particularly challenging. Determining an important difference in such a score for a sample size calculation presents a particular challenge.

How can an important difference be determined?

An influential development has been the concept of the MCID as a rationale to define an important difference. Originally, this was defined as the ‘the smallest difference . . . which patients perceive as beneficial and which would mandate, in the absence of troublesome side effects and excessive cost, a change in the patient’s management’,15 but has also been referred to as the ‘minimum difference that is important to a patient’. 16 Variations have been suggested such as the ‘minimally clinically important improvement’, the ‘patient acceptable symptom state’ and the ‘sufficiently important difference’,16 which seeks to adopt a wider perspective by taking into account cost, risk and harms. 17–19 A variety of economic approaches have also been suggested from both a conventional and a Bayesian perspective (see Bayesian approaches to the design and analysis of randomised controlled trials) that explicitly explore the trades-offs between benefits, harms and cost. 18,20 With respect to defining an important difference most work has been carried out on patient-reported outcomes, reflecting the belief that patients find it more difficult than clinicians to specify an important difference and also the challenge of interpreting constructed quality-of-life measures. 15,21 All seek to ascertain a cut-point for a scale (whether directly measurable or latent) on which an ‘important’ difference or change can be separated from an ‘unimportant’ one.

How important differences relate to the design of randomised controlled trials

Conventional (Neyman–Pearson) approach

Randomised controlled trials allow the direct comparison of alternatives. They are overwhelmingly what can be described as Phase III (to use the pharmacological terminology) or confirmatory trials, which seek to answer the research question based on assessing ‘real’ outcomes in a substantial sample of people. Under the most common design, patients are randomly allocated to one of two treatments and statistical evidence of a difference between groups is determined (a superiority trial). Alternative designs in which the aim of the study is to demonstrate that a new treatment is equivalent or not inferior to another treatment (described in the literature as equivalence or non-inferiority trials respectively), are also possible. Irrespective of the design question, an a priori sample size calculation is required to provide reassurance that the study will provide a meaningful answer to the research question. The vast majority of trials adopt the same basic (sometimes called the Neyman–Pearson or statistical hypothesis testing) approach to calculating the sample size. 22–24 This general approach for RCTs is outlined below.

To calculate the sample size for a trial, the researcher must strike a balance between the possibility of being misled by chance and the risk of not identifying a genuine difference. Standard practice is to pick one (often the most important outcome for a key stakeholder) or a small number of primary outcomes for which the sample size is conducted. 23,25 A null hypothesis that the two treatments have equal effects is defined for a superiority trial. It is this null hypothesis that the trial is attempting to assess evidence against. Rejecting the null hypothesis when it is true (type I error) would lead to a concluding that one treatment is superior to another when in reality there is no real difference in treatment effect. The significance level of the test (α) is the probability of the occurrence of a type I error, that is, falsely concluding that there is a difference when there is not. Failing to reject the null hypothesis when it is false (type II error) would lead to a trial concluding that one treatment is not superior to another when in reality one treatment is superior. The probability of the occurrence of a such an error is β, which is equal to 1 minus the (statistical) power of the test. The smaller the specified difference to be detected the lower the power for a given sample size and significance level.

Once these two criteria are set, and the statistical tests to be conducted during the analysis stage are chosen, the sample size is determined depending on the magnitude of difference to be detected. This ‘target’ difference is the magnitude of difference that the RCT is designed to reliably investigate. It is worth noting that in practice the value used as a target difference might be one that is considered important or arrived at from another basis, such as what would be a realistic difference26 as observed in previous studies or a difference that leads to an achievable sample size. Different methods can be used to determine this target difference (Box 1; see also Chapter 2 for details). This issue is considered in more depth in Chapter 4 (see Specifying the target difference).

-

Anchor: Under such an approach, the outcome of interest is ‘anchored’ by using either a patient’s or a health professional’s judgement to define an important difference. This may be achieved by comparing before and after-treatment and then linking this change to participants who had an improvement/deterioration. Alternatively, a contrast between patients can be made to determine a meaningful difference.

-

Distribution: This covers approaches that determine a value based on distributional variation. A common approach is to use a value that is larger than the inherent imprecision in the measurement and therefore likely to represent a minimal level for a meaningful difference.

-

Health economic: This covers approaches that make use of the principles of economic evaluation and typically involves defining a threshold value for the cost of a unit of health effect that a decision-maker is willing to pay and using data on the differences in costs, effects and harms to make an estimate of relative efficiency. This can be based on a net benefit or value of information approach that seeks to take into account all relevant aspects of the decision and can be viewed as implicitly specifying a target difference.

-

Standardised effect size: Under such an approach, the magnitude of the effect on a standardised scale is used to define the value of the difference. For a continuous outcome, the standardised difference (most commonly expressed as Cohen’s d ‘effect size’) can be used. Cohen’s cut-offs of 0.2, 0.5 and 0.8 for small, medium and large effects, respectively, are often used. Binary or survival (time-to-event) outcome metrics (e.g. an odds, risk or hazard ratio) can be utilised in a similar manner although no widely recognised cut-offs exist. Cohen’s cut-offs approximate to odds ratios of 1.44, 2.48 and 4.27 respectively. Corresponding risk ratio values vary according to the control group event proportion.

-

Pilot study: A pilot (or preliminary) study may be carried out when there is little evidence, or even experience, to guide expectations and determine an appropriate target difference for the trial. The planned definitive study can be carried out in miniature to inform the design of the future study. In a similar manner, a Phase II trial could be used to inform a Phase III trial.

-

Opinion-seeking: This includes formal approaches for specifying the target difference on the basis of eliciting opinion (often a health professional’s although it can be a patient’s or other’s). Possible approaches include forming a panel of experts, surveying the membership of a professional or patient body or interviewing individuals. This elicitation process can be explicitly framed within a trial context.

-

Review of evidence base: The target difference can be derived using current evidence on the research question. Ideally, this would be based on a systematic review of RCTs, and possibly meta-analysis, of the outcome of interest, which directly addresses the research question at hand. In the absence of randomised evidence, evidence from observational studies could be used in a similar manner. An alternative approach is to undertake a review of studies in which an important difference was determined.

For an equivalence (or non-inferiority) trial, as opposed to a superiority trial, a range of values around zero will be required within which the interventions are deemed to be effectively equivalent (or not inferior) in order to establish the magnitude of difference that the RCT is designed to investigate. The limits of this range are points at which the differences between treatments are believed to become important and one of the treatments is considered superior; the difference between one of these points and zero can be viewed as defining a minimum difference between treatments that would be important and which the study is designed to reliably investigate. The sample size calculation can also incorporate the possibility of an expected ‘real’, although non-important, difference into the calculation, reflecting that two treatments are very unlikely to have a (statistically) identical effect. A hybrid approach exists between the superiority and the equivalence/non-inferiority designs, sometimes called ‘as good as or better’. Under this approach a ‘closed’ testing (i.e. ordered) procedure is used that allows both an inferiority and a superiority question to be potentially answered in a single study. 23 As with the standard superiority and equivalence/non-inferiority designs, a definition of the difference to be detected is still needed.

Once the target difference, or for an equivalence/non-inferiority trial the limit(s) of equivalence, is determined then the method of estimating the sample size will depend on the proposed statistical analysis, trial design (e.g. cluster or individually randomised trial) and statistical properties specified (e.g. agreement for paired data). The general approach is similar across studies under the Neyman–Pearson approach. Implicitly, the study is designed as a ‘stand-alone’ evaluation (without the resort to any external data) to answer the research question.

Other statistical approaches to defining the required sample size are Fisherian, Bayesian and decision-theoretic Bayesian approaches, along with a hybrid of both the Bayesian and the Neyman–Pearson approaches. 22 Economic-based methods tend to follow a decision-theoretic approach, with the types of benefits, harms and costs included reflecting the perspective of the research funder and the values used and the way that they are combined (typically a net benefit approach) reflecting the context of the study. Despite the existence of these different methods a recent review of RCT sample size calculations identified only the Neyman–Pearson approach in widespread usage. 22 Regardless of the statistical method used the key issue is what magnitude of a difference is of practical interest and one that the study should be designed to detect. As will be described in The relevance of decision theory-based models, the intent of the economic-based approaches can be different as they tend to focus on maximising some measure of efficiency. Prior evidence may be informally guide the process though explicit incorporation of prior evidence in the sample size calculation for a RCT is also possible. 27,28

Bayesian approaches to the design and analysis of randomised controlled trials

Much has been written about the benefits of Bayesian approaches to the design and analysis of RCTs. 24 They allow incorporation of current evidence (represented as a prior distribution) with new evidence to produce a summary of the overall evidence (posterior distribution). With substantive computing power now readily available, their use has grown because of the flexibility of the approach and intuitive appeal. One area in which the value of using Bayesian methods has been highlighted is for sample size determination of RCTs. Standard sample size calculations assume that the imputed values are ‘known’ (i.e. without any uncertainty); in reality, inputs such as event proportions or variances are estimates of what is anticipated to happen in the trial. A Bayesian framework provides a natural framework in which uncertainty about the inputs can be incorporated (as a prior distribution) and the impact of uncertainty on the estimates can be included to evaluate a predictive distribution of power. 24 Following such an approach still requires specification of the difference to be detected. The use of Bayesian methods for design and analyses does not avoid the need for interpretation of the importance of the results. Although they allow a more comprehensive, although also complex, representation of evidence through posterior distributions, consideration of the relevance of the findings is still necessary. To state that the outcome (posterior) distribution differs between treatments, or that the distribution of the mean difference excludes zero, does not clarify whether we should act on the basis of the result. Correspondingly, ‘Bayesian p-values’ (e.g. the posterior predictive probability that the outcome is better for treatment A than for treatment B) are similar to frequentist p-values in that for the same reasons (as noted above) they are an insufficient basis on which to make a decision. One approach to this issue is to define an ‘indifference zone’ in the outcome distribution in a similar manner to that adopted in equivalence trials. 29 The CHART trial30 provides an example of using clinicians’ opinion (both a range of equivalence and expectations regarding a realistic difference) regarding 2-year survival for trial design and data monitoring; this approach could be readily used a priori to determine the corresponding sample size within a Bayesian framework. A Bayesian approach can naturally be extended into a formal decision model, which enables consequences of the decision to be formally incorporated into the analysis.

Adaptive designs

Adaptive designs are studies that are set up to formally modify the trial design, according to accumulated data. They are used most commonly within the pharmaceutical setting for Phase II studies in which the primary outcome can be assessed in the short term after randomisation. A decision about adapting the trial design can therefore be made while the study is still recruiting. Such adaptive designs can be viewed as a form of decision model. Typically, they need specification of what is viewed as important (e.g. outcome values that would lead to the intervention not being worthwhile to pursue) at the outset to control the process of adaptation. Common types of adaptation are discarding a treatment arm as it is unlikely to achieve the desired or optimal effect (e.g. Phase II dose-finding study)31 or stopping a trial if benefit/futility has been shown (sometimes called group sequential trial). 29,32 Although the latter may have a decision rule that involves only a statistical rule of precision (e.g. p-value or Bayesian posterior probability), decision-making of Data Monitoring Committees and study investigators will involve more than the pure statistical results of accumulated data, with consideration of the consequences of making a decision such as stopping early. Some Phase II designs adapt based on a maximum allowable sample size and/or decision rules based on Bayesian posterior probability. 29 Trend analysis models have been proposed that adjust the sample size according to the trend in the current data. 33 They are not commonly used because of the risk of bias (similar to play-the-winner designs)34 with regards to inflated type I and type II errors and because knowledge of the adaptation reveals the direction, and possibly magnitude, of the observed difference at that time point. In contrast, Phase III trials will typically be designed to detect or exclude a particular (target) difference.

The relevance of decision theory-based models

As highlighted earlier in Why seek an important difference?, the decision that the study is designed to inform, such as treating patients with lower back pain with surgery or conservative treatment, should guide the design of the study. Decision theory-based models allow the decision and corresponding losses (consequences and costs) to be quantified and incorporated in an extended model that incorporates the clinical evidence and any other relevant data. 35 The underlying rationale is that any decision should be made to maximise utility (value) given current evidence. There has been much discussion about whether RCT results should be analysed on their own or whether a decision modelling approach should be adopted that incorporates the decision (and corresponding consequences) which the study is seeking to inform. 36 For example, should the spinal surgical treatment (from the Norwegian Spine Study) be analysed on its own or should prior evidence be incorporated into the model, all with estimation of the impact of changing the treatment. It has been noted that investigators who design and carry out a study are generally not the same as those who make decisions. 24 As such, there is a practical challenge in implementing such an approach. However, models have been proposed that specifically address the design of RCTs: they seek to determine the optimal sample size given current evidence and the estimated consequences of the actions. 37 Although clearly of a different nature to other approaches, such as the MICID and variants, these models may be viewed as informing the same decision (but taking into account other factors); as such, they are also considered in this monograph.

Summary

In practice, specification of the target difference is often not based on any formal concepts and in many cases (at least from trial reports) appears to be determined according to convenience or some other informal basis. 26 A variety of methods have been proposed to formally determine a target difference (including those for the MCID and its variants). 16,18 These methods take different approaches to determining the target difference: some seek to find the most realistic estimate of the effect based on current evidence, some focus on an important difference, whereas others seek to incorporate the cost and consequences into a single analysis. 27,28,37

Chapter 2 Systematic review of methods for specifying a target difference

Introduction

The aim of the systematic review was to identify methods for specifying a target difference for a RCT. In this chapter, the methods and results of the systematic review are described. For each method, important variations in approach, a summary of the included studies and practical considerations with respect to its use, are described. The findings across all methods are summarised in the discussion section of this chapter.

Methodology of the review

Search strategy

Both medical and non-medical literature were searched given that the underlying issue is relevant to both areas and useful methods could be reported only in the non-medical literature. Search strategies and databases searched were informed by preliminary scoping work. Full details of the databases searched and search strategies used can be found in Appendix 2. The biomedical and social science databases searched were MEDLINE, MEDLINE In-Process & Other Non-Indexed Citations, EMBASE, Cochrane Central Register of Controlled Trials (CENTRAL), Cochrane Methodology Register, PsycINFO, Science Citation Index, American Economic Association’s electronic bibliography (EconLit), Education Resources Information Center (ERIC) and Scopus for in-press publications. All were searched from 1966 or the earliest date of the database coverage and searches were undertaken between November 2010 and January 2011. There was no language restriction.

The search strategies aimed to be sensitive but, because of the paucity of relevant subject indexing terms available in all databases, relied mostly on text word and phrase searching using appropriate synonyms. It was anticipated that reporting of methods in the titles and abstracts would be of variable quality and that, therefore, a reliance on text word searching would be inadvisable. Consequently, several other methods were used to complement the electronic searching including checking of reference lists, citation searching for key papers using Scopus and Web of Science and contacting experts in the field.

In addition, textbooks covering methodological aspects of clinical trials were consulted. These were identified by searching the integrated catalogue of the British Library as well as the catalogues of publishers of statistical books, including Wiley, Open University Press and Chapman & Hall, for relevant books published in the last 5 years (2006 onwards). Additionally, key clinical trial textbooks as determined by the steering group were reviewed along with the International Conference on Harmonisation (ICH) tripartite clinical trials guidelines. The corresponding references are given in Appendix 2.

Inclusion and exclusion criteria

Studies reporting a method that could potentially be used to specify the target difference were included in this review. Methods may seek to determine an important and/or a realistic difference. All study designs were eligible for inclusion as it was considered unnecessary and potentially restrictive to limit them. In addition, studies using methods in hypothetical scenarios were eligible for inclusion, as were studies implicitly specifying a target difference by determining an optimal study sample size. All included studies were required to base their assessment on at least one outcome of relevance to a clinical trial or an outcome that could be used for this purpose.

Exclusion criteria were:

-

studies failing to report a method for specifying a target difference (or equivalent)

-

systematic reviews of methods for specifying the target difference

-

studies reporting only on sample size statistical considerations (e.g. a new formula for sample size calculation)

-

studies considering a metric (e.g. risk ratio or number needed to treat) without reference to how a difference could be determined.

Full-text papers were obtained for all titles and abstracts identified by the search strategy that were determined to be potentially relevant to the review. One of four reviewers (JH, TG, KH and TA) screened abstracts and data extracted information from the full-text papers of relevant studies. The reviewers undertook a practice sample of abstracts to ensure consistency in the screening process. When there was uncertainty regarding whether or not an article should be requested for full-text assessment or included in the review, the opinion of another member of the team (JC) was sought and the article was discussed until consensus on inclusion or exclusion was reached.

The titles and abstracts of all studies requested for further full-text assessment were provisionally categorised according to the known methods for eliciting a target difference. This categorisation process used the information provided in the abstracts of each study that was requested for further full-text assessment, to provisionally determine which known method (or methods) it might use. Based on the full-text assessment, final classification of the articles according to the methods used was carried out. A register of studies meeting the inclusion criteria was organised using Reference Manager bibliographic software version 12 (Thomson ResearchSoft, San Francisco, CA, USA), using the keyword facility to classify articles by type, reference source and methodology.

Data extraction

Data were extracted from all included studies to help summarise the variation and range of applicability of each method. Data extracted included (when reported):

-

what is being measured (e.g. a single measure of clinical effectiveness or safety, a composite measure of clinical effectiveness and/or safety, a measure of overall or disease-specific health or a cost/cost-effectiveness measure)

-

the type of outcome measure (e.g. binary, ordinal, continuous or survival outcome)

-

the relevant summary measure reported (e.g. mean difference, risk ratio or absolute risk difference)

-

the size of the sample used to elicit a value for the difference

-

the perspective used to define the difference (e.g. patients’ or clinicians’).

Data were also extracted on the following details (when reported):

-

the context in which the difference was elicited (e.g. real or hypothetical RCT)

-

methodological details and noteworthy features (e.g. unique variations).

Some data items were relevant only to certain methods. Additional data were extracted on specific information relevant to each particular method. No generic data extraction form was used across all methods.

Method of analysis

A narrative summary description of each method found was produced by reporting extracted details from the data, categorising the key characteristics of each method and assessing the strengths and weaknesses of each method to aid the development of guidance.

Results

Search results

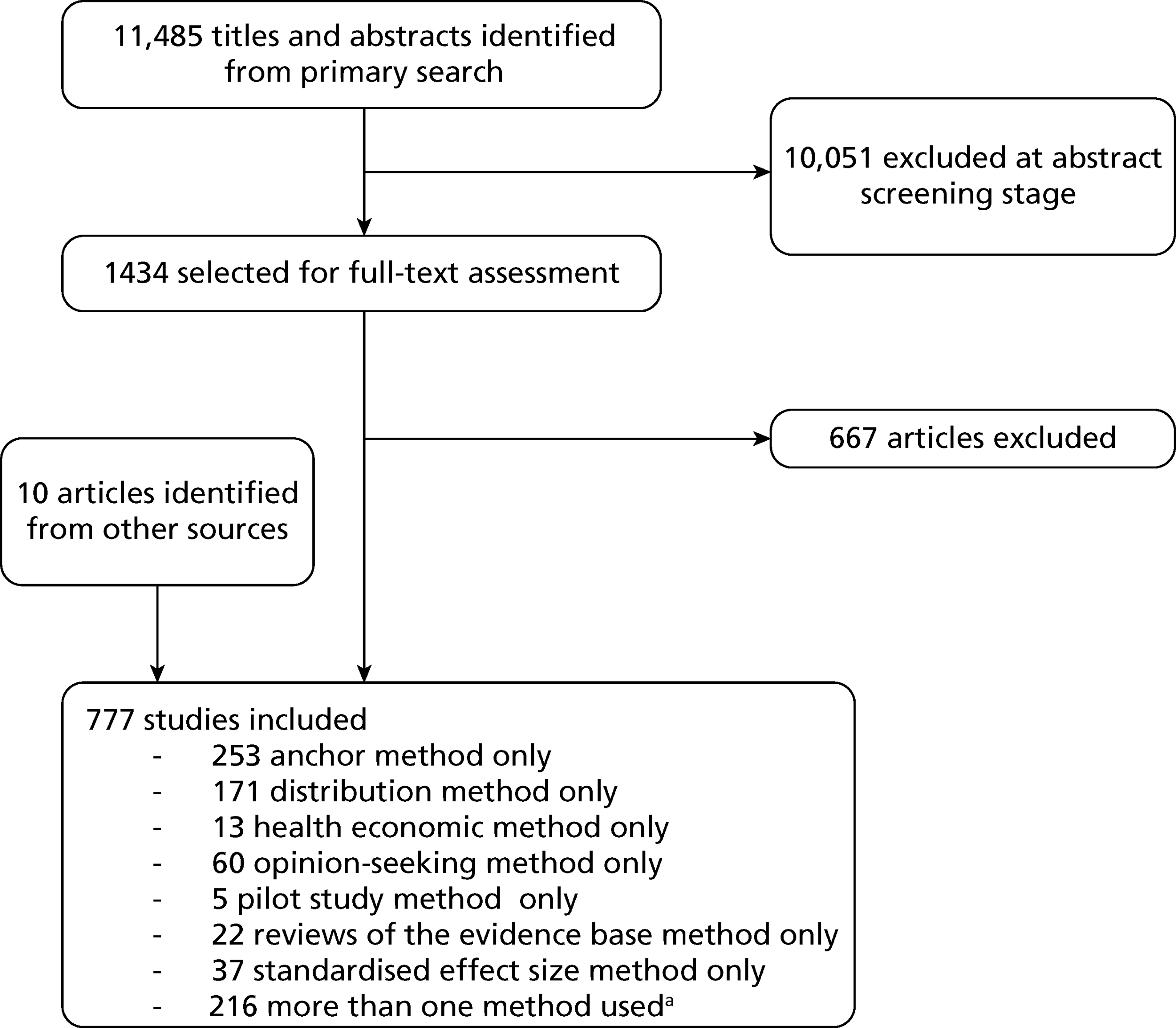

The search of databases identified 11,485 potentially relevant studies after deduplication. The number of studies obtained from each database is provided in Table 2. A depiction of the screening process itself and the number of studies pertaining to each method at key stages of the process is provided in Figure 2.

| Database | Number of titles and abstracts identified by the search strategya |

|---|---|

| MEDLINE/MEDLINE In-Process & Other Non-Indexed Citations/EMBASE | 7189 |

| CENTRAL | 487 |

| Science Citation Index | 1898 |

| EconLit | 255 |

| PsycINFO | 1367 |

| Cochrane Methodology Register | 10 |

| ERIC | 158 |

| Scopus | 121 |

| Total | 11,485 |

FIGURE 2.

The screening process. a, For a breakdown of studies in which more than one method was used in combination, please see Combination of methods.

Following the screening of titles and abstracts, a total of 1434 articles were retained for further full-text assessment and were provisionally categorised based on the different methods used, using the information available in the title and/or abstract. After full-text assessment and the identification of additional studies from other sources, including citations and consultation with experts, a total of 777 studies were included in the review. The full list of included studies is available in Appendix 3.

Hand-searching of specific journals was not undertaken. Fifteen clinical trials textbooks along with the ICH clinical trials guidelines were hand-searched (see Appendix 2). The full-text papers of five identified systematic reviews of methods for determining an important difference were retained to provide additional key references for the review. The output of one author related to a particular approach (decision-analytic economic evaluation framework) (Andy Willan, SickKids Institute, Toronto, ON, Canada) was searched after the main search strategy identified several articles on this method. 37,38 Another researcher (Robert Gatchel, University of Texas, Arlington, TX, USA) contacted the principal investigators of this project following a letter published by the principal investigators on this subject. 39 The titles and abstracts of the relevant articles by this expert had already been identified by the search strategy and requested by the reviewers for full-text assessment. One of these articles was subsequently included in the review. 40 In addition, a newly published study was forwarded to the principal investigators by a colleague who thought that it would be relevant for inclusion. 41 Eight further studies were identified from citations of full-text studies screened or were sent for assessment by members of the steering group. 30,42–48 The included studies were categorised as using one (or more) of seven methods: anchor, distribution, health economic, opinion-seeking, pilot study, review of evidence base (RoEB) or standardised effect size (SES).

There was substantial variation in terminology across and within methods even when the same concept was being addressed. In particular, various terms were used to describe the concept of the M(C)ID. The terminology varied among (and sometimes within) papers, particularly for older papers. Common variations in terminology include studies identifying a ‘difference’ or a ‘change’, and whether this was ‘meaningful’, ‘significant’, ‘perceivable’ or ‘important’. Some studies specifically referred to ‘clinical’ importance or significance, whereas others did not. In one instance, justification was given for not including a reference to ‘clinically’ important difference [e.g. minimal important difference (MID)], arguing that the word ‘clinically’ should no longer be part of the term as it puts the focus on more objective ‘clinical’ measures of change rather than measures that are important to patients. 49 The words ‘minimum’, ‘minimal’ and ‘minimally’ were also added in many cases to the terms used, with minimal being the most common. The definition of an ‘important’ difference also varied. Piva and colleagues50 defined the minimum detectable change (MDC) as ‘the amount of change needed to be certain, within a defined level of statistical confidence, that the change that occurred was beyond that which would be the result of measurement error’. 51,52 Cousens and colleagues53 defined the ‘clinically important change’ as a change ‘that, by consensus, is deemed sufficiently large to impact on the patient’s clinical status’.

Anchor method

Brief description of the anchor method

In its most basic form, the anchor method evaluates the minimum (clinically) important change in score for a particular instrument. This is established by calculating the mean change score (post minus pre) for that instrument among a group of patients for whom it is indicated (using an additional measurement – the ‘anchor’) that a minimum clinically important change (or difference) has occurred. The word ‘minimum’ reflects the separation of patients according to the amount of change, with the smallest amount of change viewed as ‘important’ calculated. In evaluating a patient’s progress (e.g. before and after a given treatment), a clinician could use this value as an indication of the expected change in score of an instrument among patients who have indicated on the anchor measurement that they consider themselves (or are considered by someone who completes the anchor measurement on their behalf) to have undergone an improvement within a particular time frame.

Variations on the anchor method

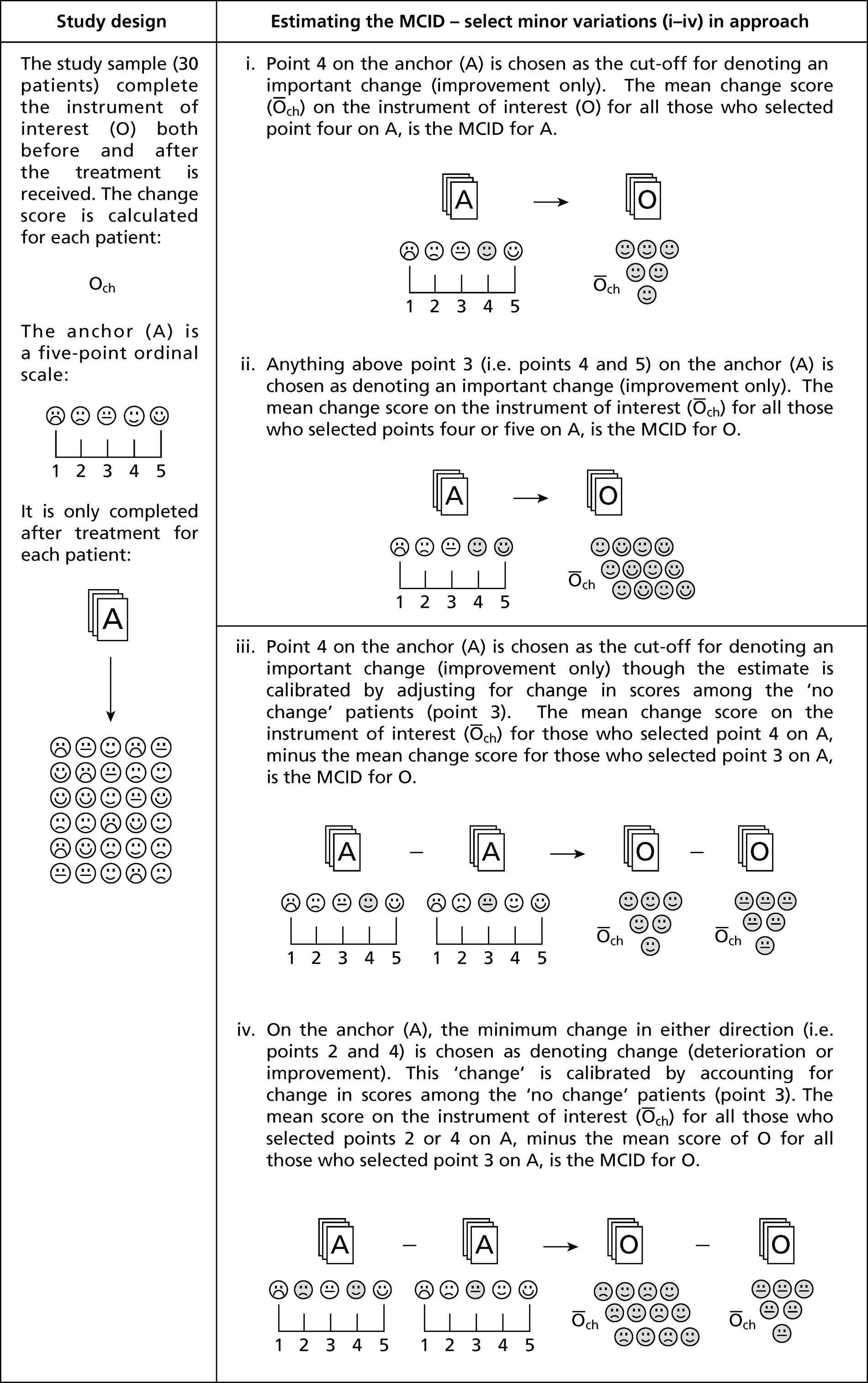

An anchor method example is given in Box 2. However, various implementation approaches of the anchor method have been used (i.e. varying the number of points and labels attached) along with variations in how an anchor is used to determine an important difference [typically the M(C)ID]. One of the most common variations is not only to consider the mean change score for improved patients but also to take account of the relative change compared with another subset of those undergoing assessment (who have been tested using both the same instrument and the anchor) who reported no change over time. 54–58 The results for both groups can be compared, potentially allowing the calibration of a resulting MCID value by adjusting for the mean value found for the ‘no change’ group (i.e. variation in score that is unimportant). Yalcin and colleagues59 refer to the original method as ‘within-patient’ change and this variation as ‘between-patient’ change. However, these terms are not solely used for these variations and can therefore be confusing. The variations are depicted in Figure 3. Another very common variation is to consider the percentage change score in the instrument under consideration,60 rather than simply using the absolute change score. Including the percentage change score as well as the absolute score has been justified as possibly reducing variation, thereby ensuring that the MCID values obtained are more robust. Other variations reported used the median change rather than the mean change since baseline. 61–63

Please rate the change in the patient’s health from before they received treatment to now by circling one of the following options:

Options:

-

marked improvement

-

moderate improvement

-

minimal improvement

-

unchanged

-

minimal worsening

-

moderate worsening

-

marked worsening.

FIGURE 3.

Anchor method illustrative example.

Diagnostic accuracy methodology has also been used to define the MCID with an anchor method. The anchor response is used as the reference standard, and the sensitivity and specificity of a cut-point difference in the instrument under consideration is assessed against the anchor definition. Use of the cutoff that optimised together sensitivity (proportion of those who had experienced an important difference correctly identified) and specificity (proportion of those who did not have an important difference correctly identified) was almost universal. In a few cases this was not carried out, for example using a cut-off to maximise sensitivity over specificity, unless the point nearest the left-hand corner of the sensitivity versus (1-specificity) plot gave a particularly low value for sensitivity,64 or using an 80% specificity rule. 65,66 In other studies, a receiver operating characteristic (ROC) curve analysis was undertaken but was not used to calculate the MCID. 67,68 In other instances, diagnostic accuracy data were reported but a ROC curve approach was not used to determine the estimate. 69 When multiple MCID estimates were generated (e.g. from different anchor definitions or by using different anchors), an average or triangulation of the results was sometimes used. 70–72 Other less commonly used methods to derive a MCID value from an anchor-based method included various types of regression models [e.g. analysis of variance (ANOVA), logistic regression, Rasch analysis, classification and regression trees (CART) analysis, mixed-effects models and linear discriminant analysis]. 73,77 Harman and colleagues78 developed a logistic regression model for the probability of experiencing a negative life event based on prior mental health levels. A substantially different approach was proposed by Redelmeier and colleagues79–83 in which other patients were used as anchors on which a patient could rate his or her own health (or health improvement) in comparison to others.

Aside from variation in the method used to elicit a MCID value, there was variation in the anchor itself and in the ‘transition question’ used to determine whether patients had improved or not. A 15-point Likert scale was often used as the anchor,84–86 based on the method established by Jaeschke and colleagues,87 although five-point69,88,89 and seven-point54,90–92 scales were also widely used. A visual analogue scale (VAS) can be used (e.g. with < 10 mm considered ‘unchanged’ and > 30 mm considered more extreme than minimal change). 93 Existing instrument questions were also adapted, including the Short Form questionnaire-36 items (SF-36) global change score, Disabilities of the Arm, Shoulder and Hand (DASH) and the (modified) Rankin score. 77,94–97 For example, Khanna and colleagues95 used multiple anchors, including the SF-36 global change score and four additional items from the SF-36 relating to walking and climbing stairs. Several studies utilised an item from the instrument that was being assessed for MCID values as the anchor question for other items within the same instrument. 74,98–100 For example, Colwell and colleagues74 used the pain frequency and pain severity items within the Gout Assessment Questionnaire (GAQ) as anchors by which to gauge the MCID values for other items within this outcome.

In many cases, the original anchor categories were later merged because of sample constraints (e.g. poor literacy among respondents or an insufficient number of patients in a particular category of improvement to enable analysis to be undertaken separately for that category). 101,102 In some cases, if the anchor considered deteriorating states as well as improvement, the sign on the deterioration point of the Likert scale was changed and its participants were merged with the improving patients to give a larger number of patients in the anchor groups (although this makes the strong assumption that MCID values will be identical for improvement and deterioration). 103 For ROC curve analysis to be used within the anchor method, the anchor question must be dichotomous (those experiencing a MCID vs. those who did not experience a MCID). There is therefore a need to split the anchor in some way. This often requires an arbitrary decision about what points on the Likert scale constitute important change in comparison with no real change. Consensus between two colleagues was used in one instance to derive a definition for important change, along with the Rasch method to find ‘natural’ cut-points for the data. 75

The anchor question was most often posed to patients alone63,104 although in some cases the clinicians’ views alone were used, even in instances when the instrument under consideration was a patient-reported outcome. The opinion of a clinician was also used instead of a patient-reported outcome,105 or in conjunction with a patient’s score, either to determine the extent of agreement between patient and clinician scores or to average the score (e.g. to reduce potential patient biases) by incorporating both patient and clinician views. 69,71 Parents’ views were also occasionally used, especially for studies of paediatric outcomes. 106–109 Anchors do not necessarily have to be based on individuals’ opinions; ‘objective’ measures of improvement can be used (e.g. > 5 mm healthy toenail growth). 110 Other studies used retrospective analysis, for example the mean change score among those who were later hospitalised compared with those who were not later hospitalised. Readmission and/or death was also considered in this way78,111,112 If a treatment of known efficacy was the intervention that patients received, the mean change score was used as patients were expected to show clinically important improvements over time. 113 It is worth noting that the anchor method was not always successful in deriving values for interpreting an important difference. 114,115

Summary of anchor studies

A total of 447 studies were found that used the anchor method; 194 of these also used another method (see Combination of methods for details). Several studies had split their sample into separate cohorts (e.g. if the data came from two separate clinical trials). 76,116 In most instances, a rationale for calculating the MCID was not explicitly given. As many studies looked at instrument development, calculation of the MCID often formed part of a wider array of reliability and validity testing of new instruments; other studies merely noted that for a particular instrument (or use of an established instrument for a particular disease/condition) the MCID had yet to be established. Future sample size calculation was very rarely cited in the rationale for calculating the MCID. 116

Across all its different possible forms, the anchor method was used in a wide range of specialties including, but not limited to, surgery,117 orthopaedics,70 paediatrics,109 rheumatology,69 stroke rehabilitation,118 cancer,119 urology,120 respiratory medicine,75 mental health67 and emergency medicine. 121 Almost all cases looked at quality-of-life measures, particularly with regard to pain,85 function and mobility. 122,123 The method is not used exclusively for establishing the MCID for new or existing quality-of-life tools; non-scale-based outcomes were also considered. These usually related to patient physical functioning (in many cases in conjunction with a wide range of instruments for a particular condition being assessed at the same time), for example a 5-minute walk test, 1-minute stair climbing,94 comfortable gait speed77 and (more unusually) the number of palms of your hand it would take to cover up all of the psoriasis on your body. 61 The method can be used for acute124 or chronic125 conditions.

Practical considerations for use of the anchor method

A number of common issues with regard to applying the anchor method were highlighted. In particular, the generalisability of a determined MCID value was often queried. In some instances this was simply in relation to the size of the sample,75,94,105,118,121,122 which in itself is not an problem exclusive to studies determining important difference values. However, in other instances the range of applicability of the resulting MCID value may be compromised. 49,94,118,121,126 For example, a MCID value for a chronic progressive illness may not be applicable to a newly diagnosed population if the duration of illness among most participants surveyed to determine the MCID value is far longer. 116,127–130 Although it is, in principle, possible to account for this in any future anchor method studies (e.g. by providing a breakdown of MCID values for subgroups based on duration of illness), in practice there may be more complex confounding issues. Long-term sufferers of a chronic condition may have different expectations of change than a newly diagnosed population. In addition, severity of illness may not necessarily be directly correlated with duration, and this may be a more important aspect to consider. In fact, the effect of severity of illness on identified MCID values was often examined by study authors; however, not all studies found a statistically significant effect. 63,71,105,127,131

Several studies that had considered separately MCID estimates for improvement and deterioration noted differences in the values derived for these groups. 75,88,95,121,126 Although this, as with other generalisability concerns, may be related to participants’ expectations or regression to the mean, it is important to consider whether an assumption of linearity, with no change centred on zero difference, is appropriate. The decision to merge both improvement and deterioration groups (e.g. because of a small sample size) to derive one singular ‘change’ value instead may be inappropriate if the size of an important change is different for improving participants and deteriorating participants. 49,54,95,122

The validity of the anchor used was also cited as being potentially problematic from a methodological point of view. Likert scales were most commonly used as anchors to derive the MCID value, but there was considerable variation in the number of point options provided within the Likert scale. Differing sizes of anchor scales may be appropriate as the change experienced by participants, and their ability to differentiate, will vary between contexts and study populations. In addition, there may be other factors that influence the size of the Likert scale used. For example, one study noted that, if the population of interest has poor literacy, a Likert scale with a large number of options may be less suitable than one with fewer points for respondents to consider. 132 Several studies using Likert scales with a large number of options later had to merge multiple options together because so few participants had selected each option, thereby retrospectively creating a smaller Likert scale that might have been more suitable in the first place.

A more fundamental consideration when using the anchor method is how to decide on an appropriate cut-off point to apply to the anchor responses. Although any formal approach for determining this cut-off was seldom mentioned as part of the study methods, several study authors pointed out the difficulty of choosing a particular value. 59,80,88,110,116 For anchor studies using a ROC curve approach, choosing a cut-off point on the Likert scale to dichotomise the sample into those who did and those who did not experience change is essential, but the choice of cut-off is arbitrary and may compromise the extent to which an anchor method detects or fails to detect important change. In some instances, multiple cut-offs are analysed, or mean change values are reported for every different point on the Likert scale. This is useful as it allows those wishing to utilise MCID values to use the value most suitable for their own needs. Conversely, it could be argued that, by not choosing an appropriate cut-off and instead providing every possible value of clinical importance from the anchor responses, studies fail to provide guidance for which MCID values are more likely to be realistic overall. Some papers focused on only one choice of definition; this was particularly the case for papers that had used a ROC approach. Reporting of anchor methodology was sometimes insufficient to allow reanalysis of the data if an alternative anchor definition of important change is desired.

More generally, the validity of both the outcome under consideration and the anchor tool being used to define its MCID were noted as important. 85,88,122,126,133 Validity issues apply more widely to the development of any new outcome for health measurement. For example, given an ambulatory population, the outcomes used must be able to detect small changes and be free from ceiling effects, whereas for a population with severe disease, the possibility of foor effects could be more relevant. 69,127 Information on a outcome’s ability to detect change in the first place complements MCID values and helps determine whether or not a point estimate of change is suitably relevant. 63,110 The inability to achieve a high level of discrimination (e.g. good diagnostic performance if a ROC curve approach is used) would suggest that a MCID for a particular outcome cannot be reliably determined using this anchor. Possible reasons for poor performance include a disconnection between the anchor and the outcome (e.g. difference in perspective) as well as the properties of the outcome itself (e.g. low reliability).

With regard to the population of interest, the generalisability of the study population is not the only aspect of the study conduct that requires methodological consideration. One commonly cited difficulty in using the anchor method relates to the potential for participants to exhibit recall bias over the course of the study period. 70,75,85,126,129 Although this concern is not exclusive to the calculation of a MCID, it is relevant to consider the possible impact on the study estimate, particularly in instances in which there may be additional concerns about study methodology (e.g. variation in length of participant follow-up). 76,116,129,132 There are examples of methods used to counteract this bias. For example, Wyrwich and colleagues,134–137 at each data collection session, asked participants what they were doing that day. At the following session, to aid their memory, their answer from the previous session was relayed to them as a reference point to help them recall how they had felt at the previous point in time. Response shift may also be problematic, as participants’ perceptions of what amount of improvement they would be satisfied with may change during the course of their treatment, and their expectations may not be consistent over time. 138