Notes

Article history

This issue of the Health Technology Assessment journal series contains a project commissioned by the MRC–NIHR Methodology Research Programme (MRP). MRP aims to improve efficiency, quality and impact across the entire spectrum of biomedical and health-related research. In addition to the MRC and NIHR funding partners, MRP takes into account the needs of other stakeholders including the devolved administrations, industry R&D, and regulatory/advisory agencies and other public bodies. MRP supports investigator-led methodology research from across the UK that maximises benefits for researchers, patients and the general population – improving the methods available to ensure health research, decisions and policy are built on the best possible evidence.

To improve availability and uptake of methodological innovation, MRC and NIHR jointly supported a series of workshops to develop guidance in specified areas of methodological controversy or uncertainty (Methodology State-of-the-Art Workshop Programme). Workshops were commissioned by open calls for applications led by UK-based researchers. Workshop outputs are incorporated into this report, and MRC and NIHR endorse the methodological recommendations as state-of-the-art guidance at time of publication.

The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Lisa V Hampson is an employee of Novartis Pharma AG (Basel, Switzerland) and reports grants from the Medical Research Council (MRC). Catherine Hewitt is a member of the National Institute for Health Research (NIHR) Health Technology Assessment (HTA) Commissioning Board since 2015. Jesse A Berlin is an employee of Johnson & Johnson (New Brunswick, NJ, USA) and holds shares in this company. Richard Emsley is a member of the NIHR HTA Clinical Trials Board since 2018. Deborah Ashby is a member of the HTA Commissioning Board, HTA Funding Boards Policy Group, HTA Mental Psychological and Occupational Health Methods Group, HTA Prioritisation Group and the HTA Remit and Competitiveness Group from January 2016 to December 2018. Stephen J Walters declares his department has contracts and/or research grants with the Department of Health and Social Care, NIHR, MRC and the National Institute for Health and Care Excellence. He also declares book royalties from John Wiley & Sons, Inc. (Hoboken, NJ, USA), as well as a grant from the MRC and personal fees for external examining. Louise Brown is a member of the NIHR Efficacy and Mechanism Evaluation Board since 2014. Craig R Ramsay is a member of the NIHR HTA General Board since 2017. Andrew Cook is a member of the NIHR HTA Interventional Procedures Methods Group, HTA Intellectual Property Panel, HTA Prioritisation Group, Public Health Research (PHR) Research Funding Board, Public Health Research Prioritisation Group and the PHR Programme Advisory Board.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2019. This work was produced by Cook et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

2019 Queen’s Printer and Controller of HMSO

Chapter 1 Introduction

Synopsis

The aim of this document is to provide practical help on the choice of target difference used in the sample size calculation of a randomised controlled trial (RCT). Advice is provided with a definitive trial, that is, one that seeks to provide a useful answer, in mind and not those of a more exploratory nature. The term ‘target difference’ is taken throughout to refer to the difference that is used in the sample size calculation (the one that the study formally ‘targets’). Please see the Glossary for definitions and clarification with regard to other relevant concepts. To address the specification of the target difference, it is appropriate, and to some degree necessary, to touch on related statistical aspects of conducting a sample size calculation. Generally, the discussion of other aspects and more technical details is kept to a minimum, with more technical aspects covered in the appendices and referencing of relevant sources provided for further reading.

The main body of this report assumes a standard RCT design is used; formally, this can be described as a two-arm parallel-group trial. Most RCTs test for superiority of the interventions, that is whether or not one of the interventions is superior to the other (Box 1 provides a formal definition of superiority and of the two most common alternative approaches). A rationale for the report is provided in Rationale for this report and a summary of the research stages that were used to inform this report is provided in Development of the DELTA2 advice and recommendations. Appendices 1 and 2 provide fuller details, which have also been published elsewhere. 9 The conventional approach to sample size calculations is discussed along with other relevant topics in Appendix 3. Additionally, it is assumed in the main body of the text that the conventional (Neyman–Pearson) approach to the sample size calculation of a RCT is being used. Other approaches (Bayesian, precision and value of information) are briefly considered in Appendix 4, with reference to the specification of the target difference. Some of the more common alternative trial designs to a two-arm parallel-group superiority trial are considered in Appendix 5.

In a superiority trial with a continuous primary outcome, the objective is to determine whether or not there is evidence of a difference in the desired outcome between intervention A and intervention B, with mean response µA and µB, respectively. 1 The null (H0) and alternative (H1) hypotheses typically under consideration are:

H0, the means of the two intervention groups are not different (i.e. µA = µB).

H1, the means of the two intervention groups are different (i.e. µA ≠ µB).

For a superiority trial, the null hypothesis can be rejected if µA > µB or if µA < µB based on a statistically significant test result. 1,2 This leads to the possibility of making a type I error when the null hypothesis is true (i.e. there is no difference between the interventions). The statistical test is referred to as a two-tailed test, with each tail allocated an equal amount of the type I error (α/2, typically set at 2.5%). The null hypothesis can be rejected if the test of µA < µB is statistically significant at the 2.5% level or the test of µA > µB is statistically significant at the 2.5% level. The sample size is calculated on the basis of applying such a statistical test, given the magnitude of a difference that is desired to be detected (the target difference), and the desired type I error rate and statistical power. Consideration of a difference in only one direction (one-sided test) is also possible.

Equivalence trialThe objective of an equivalence trial is not to demonstrate the superiority of one treatment over another, but to show that two interventions have no clinically meaningful difference, that is they are clinically equivalent (or not different). 3 The corresponding hypotheses for an equivalence trial (continuous primary outcome) take the following form.

H0, there is a difference between the means of the two groups (i.e. they are not ‘equivalent’):

or

H1: there is a no difference between the means of the two groups (i.e. they are ‘equivalent’):

where dE equates to the largest difference that would be acceptable while still being able to conclude that there is no difference between interventions. It is often called the equivalence margin. µA and µB are defined as before.

To conclude equivalence, both components of the null hypothesis need to be rejected. One approach to performing an equivalence trial is to test both components, which is called the TOST procedure. 1,3 This can be operationally the same as constructing a (1 – α)100% CI and concluding equivalence if the CI falls completely within the interval (–dE, dE). For example, dE could be set to 10 (on the scale of interest). After conducting the trial, a 95% CI for the difference between interventions could be (–3 to 7). As the CI is wholly contained within (–10 to 10), the two interventions can be considered to be equivalent.

Non-inferiority trialA non-inferiority trial can be considered a special case of an equivalence trial. The objective is to demonstrate that a new treatment is not clinically inferior to an established one. This can be formally stated under null (H0) and alternative (H1) hypotheses for a non-inferiority trial (continuous primary outcome) that take the form:

H0, treatment A is inferior to B in terms of the mean response µB – µA > dNI,

H1, treatment A is non-inferior to B in terms of the mean response µB – µA ≤ dNI,

where dNI is defined as the difference that is clinically acceptable for us to conclude that there is no difference between interventions, and a higher score on the outcome is a better outcome. Non-inferiority trials reduce to a simple one-sided hypothesis and test, and correspondingly are usually operationalised by constructing a one-sided (1 – α/2)100% CI. Non-inferiority can be concluded if the upper end of this CI is not greater than dNI. No restriction is made regarding whether the new intervention is the same as or better than the other intervention. A mean difference far from dNI, in the positive direction, is not a negative finding, whereas for an equivalence trial it could rule out equivalence.

Equivalence and non-inferiority marginsThe setting of an equivalence (and non-inferiority) margin, or limit, is a controversial topic. There are regulatory guidelines on the topic, although practice has varied. 4,5 It has been defined more tightly, and arguably appropriately, as the ‘largest difference that is clinically acceptable, so that a difference bigger than this would matter in practice’. 6 A natural approach would be for dE to be just smaller than the MCID (see Chapter 3, Methods for specifying the target difference). In the context of replacement pharmaceuticals, the margin has been suggested to ‘[be no] greater than the smallest effect size that the active (control) drug would be reliably expected to have when compared with placebo in the setting of the planned trial’. 7 An acceptable margin can therefore be chosen via a retrospective comparison with placebo that shows that the new treatment is non-inferior to the standard treatment, and thereby indirectly shows that the new treatment is superior to placebo. 8 It may also be desirable to demonstrate no substantive non-inferiority, leading to a narrower margin, similar to the approach above.

CI, confidence interval; MCID, minimum clinically important difference; TOST, two one-sided test.

Rationale for this report

A RCT is widely considered to be the optimal study design to assess the comparative clinical efficacy and effectiveness along with the cost implications of health interventions. 1 RCTs are routinely used to assess the use of new drugs prior to, and in order to secure, approval for releasing new drugs to market. More generally, they have also been widely used to evaluate a range of interventions and have been successfully used in a variety of health-care settings. An a priori sample size calculation ensures that the study has a reasonable chance of achieving its prespecified objectives. 10

A number of statistical approaches exist for calculating the required sample size. 1,11,12 However, a recent review of 215 RCTs in leading medical journals identified only the conventional (Neyman–Pearson) approach in use. 13 This approach requires establishment of the statistical significance level (type I error rate) and power (1 minus the type II error rate), alongside the target difference (‘effect size’). Setting the statistical significance level and power represents a compromise between the possibility of being misled by chance, when there is no true difference between the interventions, and the risk of not identifying a difference, when one of the interventions is truly superior, whereas the target difference is the magnitude of difference to be detected between sample sets. The required sample size is very sensitive to the target difference. Halving it roughly quadruples the sample size for the standard RCT design. 1

A comprehensive review conducted by the original Difference ELicitation in TriAls (DELTA) group14,15 highlighted the available methods for specifying the target difference. Despite there being many different approaches available, few appear to be in regular use. 16 Much of the work on identifying important differences has been carried out on patient-reported outcomes, specifically those seeking to measure health-related quality of life. 17,18 In practice, the target difference often appears not to be formally based on these concepts and in many cases appears, at least from trial reports, to be determined based on convenience or some other informal basis. 19 Recent surveys among researchers involved in clinical trials demonstrated that the practice is more sophisticated than trial reports suggest. 16 The original DELTA group developed initial advice, but this was restricted to a standard superiority two-arm parallel-group trial design and limited consideration of related issues. 20 It did not provide recommendations for practice. Accordingly, there is a gap in the literature to address this and thereby help improve current practice, which this report seeks to address. The Medical Research Council (MRC)/National Institute for Health Research (NIHR) Methodology Research Panel in the UK commissioned a workshop to produce advice on this choice of ‘effect size’ in RCT sample size calculations. From this resulting DELTA project, this report was produced. The process of its development is described in Development of the DELTA2 advice and recommendations.

Development of the DELTA2 advice and recommendations

The DELTA2 project had five components: systematic literature review of recent methodological developments (stage 1); literature review of existing funder advice (stage 2); a Delphi study (stage 3); a 2-day consensus meeting bringing together researchers, funders and patient representatives (stage 4); and the preparation and dissemination of an advice and recommendations document (stage 5). Full details of the methods and findings are provided in Appendices 1 and 2 and are summarised here.

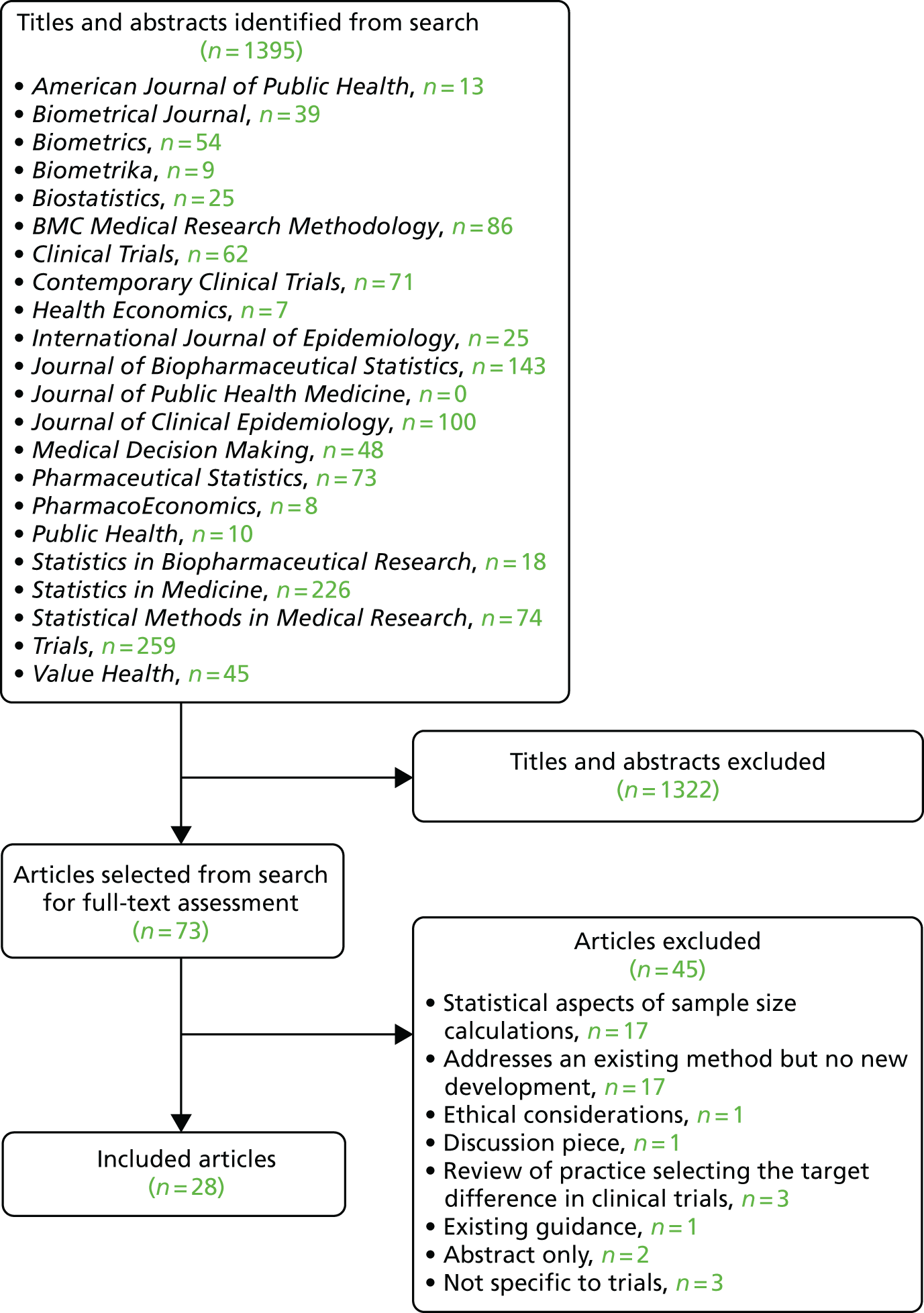

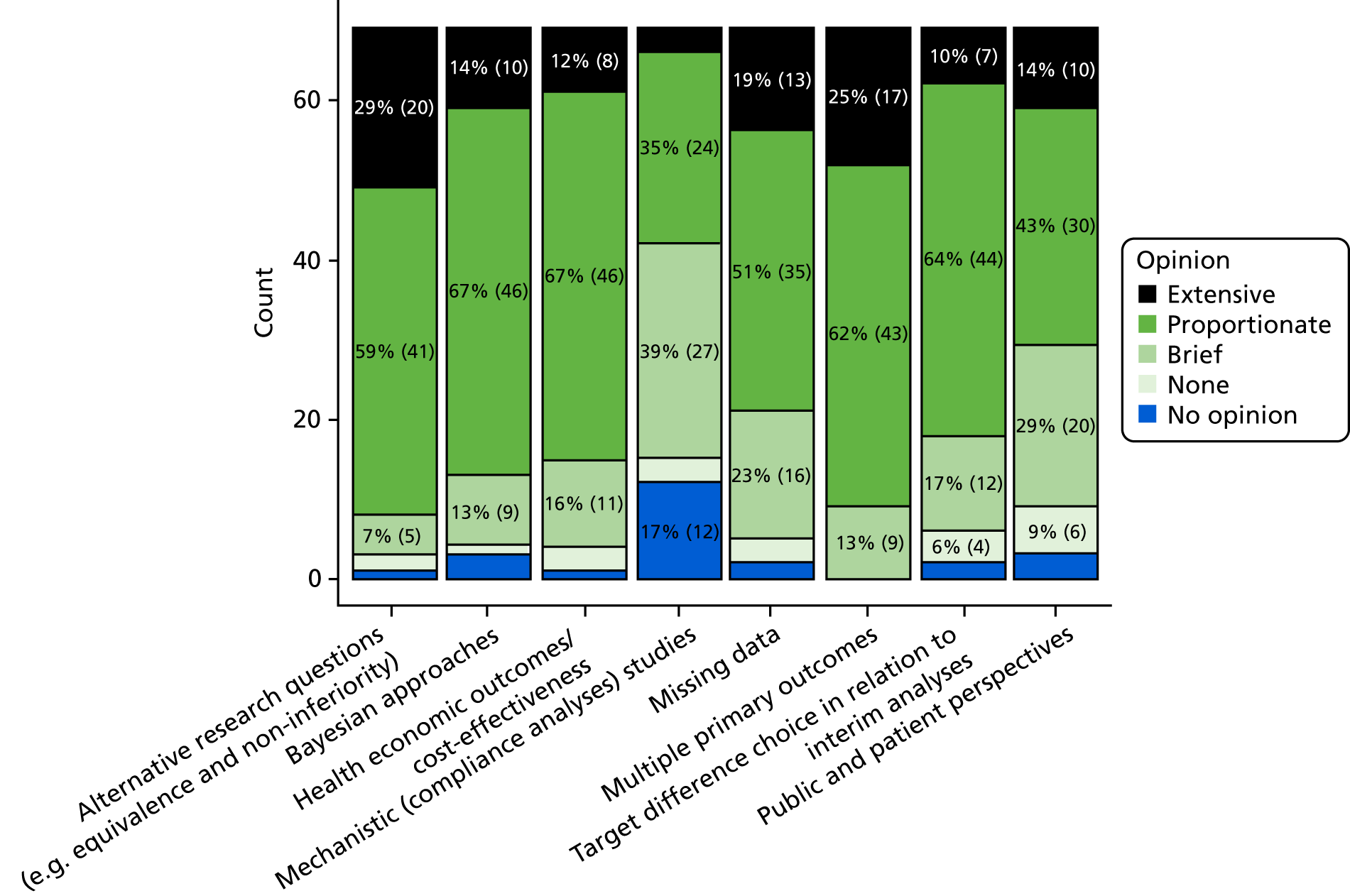

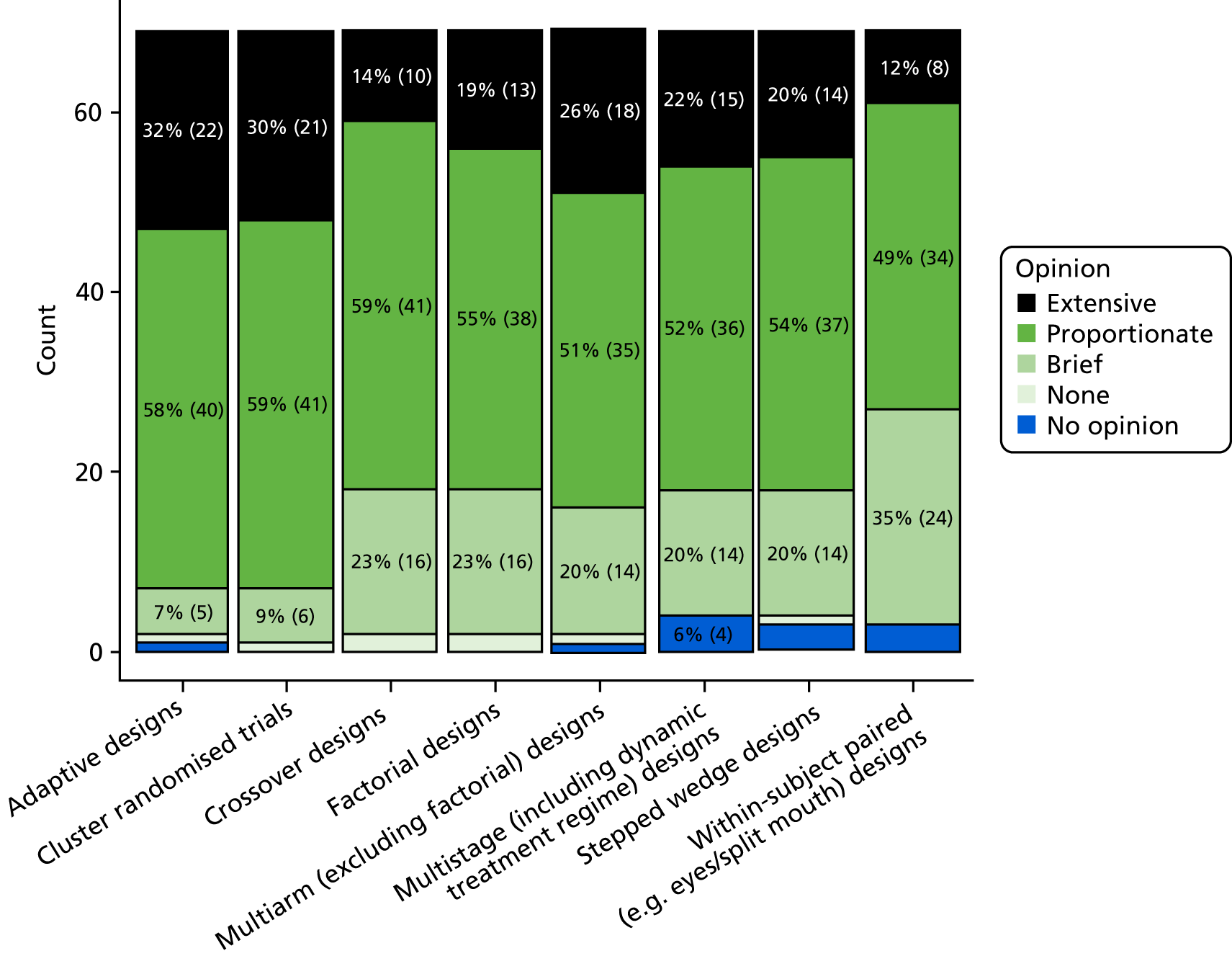

The project started in April 2016. A search for relevant documentation on the websites of 15 trial-funding advisory bodies was performed (see Appendix 1, Methodology of the literature reviews, Delphi study, consensus meeting, stakeholder engagement and finalisation of advice and recommendations). However, there was little specific advice provided to assist researchers in specifying the target difference. The literature search for methodological developments identified 28 articles of methodological developments relevant to a method for specifying a target difference. A Delphi study involving two stages and 69 participants was conducted. The first round focused mainly on topics of interest and was conducted between 11 August 2016 and 10 October 2016. In the second round, which took place between 1 September 2017 and 12 November 2017, participants were provided with a draft copy of the document and feedback was invited.

The 2-day workshop was held in Oxford on 27 and 28 September 2016, and involved 25 participants, including clinical trials unit (CTU) directors, study investigators, project funder representatives, funding panel members, researchers, experts in sample size methods, senior trial statisticians and patient and public involvement (PPI) representatives. At this workshop, the structure and general content of the document was agreed; it was subsequently drafted by members of the project team and participants from the workshop. Further engagement sessions were held at the Society for Clinical Trials (SCT), Statisticians in the Pharmaceutical Industry (PSI) and Joint Statistical Meetings (JSM) conferences on 16 May 2016, 17 May 2017 and 1 August 2017, respectively. The main text was finalised on 18 April 2018, after revision informed by feedback gathered from the second round of the Delphi study, the aforementioned engagement sessions and from funder representatives. Minor revisions in the light of editorial and referee feedback were made prior to finalising this report.

Chapter 2 General considerations for specifying the target difference

Target differences and the analysis of interest

Randomised controlled trial design begins with clarifying the research question and then developing the required design to address it. Commonly the population, intervention, control, outcome and time frame (PICOT) framework has been used for this purpose. 21 All of the relevant aspects of trial design (PICOT) should reflect the research questions of interest. The process of determining the design needs to be informed by the perspective(s) of relevant stakeholders, which are discussed in the following section (see Perspectives on the target difference of interest). A key step in the process is the selection of the primary outcome, which is considered in The primary outcome of a randomised controlled trial, given its key role in trial design and its relationship with the target difference. This section focuses on the need for clarity about how the design and intended analysis address the trial objectives.

The need for greater clarity in trial objectives with respect to the design and analysis of a RCT has been noted. 22 This reflects greater recognition of the existence of multiple intervention (or treatment) effects of potential interest, even for the same outcome. For example, we may be interested in the typical benefit a patient received if they are given a treatment, but also the benefit a patient receives if they comply fully with the treatment (e.g. they take their medication as prescribed for the full treatment period, with use of additional treatments). Treatment effects can differ subtly in the population of interest, the role for additional treatment or ‘rescue’ medication, and how the effect is expressed. The concept of estimands has been proposed as a way to bring such distinctions to the fore. An estimand is a more specific formulation of the comparison of interest being addressed. This thinking is reflected in a recent addendum to international regulatory guidelines for clinical trials of pharmaceuticals. Five main strategies are proposed. 23 Of particular note is the treatment policy strategy, which is consistent with what has often been described as an intention-to-treat (ITT)-based analysis. 22–25 That is, the ITT analysis addresses the difference between a policy of offering treatment with a given therapy and the policy of offering treatment with a different therapy, regardless of which treatments are received. Different stakeholders can have somewhat differing perspectives on the comparison of interest and therefore the estimand of primary interest. 22 Corresponding methods of analyses to address estimands that deviate from traditional conventional analyses are an active area of interest26 (see Appendix 3, Other topics of interest for a brief consideration of causal inference methods for dealing with non-compliance).

The target difference used in the sample size calculation should be one that at least addresses the trial’s primary objective and, therefore, the intended estimand of primary interest (with the corresponding implications for the handling of the receipt of treatment and population of interest). In some cases, it may be appropriate to ensure that the sample size is sufficient for more than one estimand, which might imply multiple target differences to address all key objectives. Different estimands may focus on different populations or subpopulations. Estimands will differ in their implications for the magnitude of missing data anticipated (see Appendix 3, Dealing with missing data for binary and continuous outcomes for how missing data can be taken into account in the sample size calculation in simple scenarios). Whatever the estimand of interest, the target difference is a key input into the sample size calculation.

Perspectives on the target difference of interest

Governmental/charity funder

Funders vary in the degree to which they will specify the research question. The primary concern is that the study provides value for money, by addressing a key research question in a robust manner and at reasonable cost to the funder’s stakeholders. This is typically an implicit consideration when the sample size and the target difference are determined. However, a very different approach, value of information (see Appendix 4), allows such wider considerations to be formally incorporated. The sample size calculation and the target difference, if well specified, provide reassurance that the trial will provide an answer to the primary research question, at least in terms of comparing the primary outcome between interventions. The specific criteria that proposals are invited to address, and are assessed against, vary among funders and individual schemes within a funder, as does the degree to which the research question may be a priori specified by the funder.

One particular aspect that varies substantially among funding schemes and funders is the extent to which they take into account the cost and cost-effectiveness of the interventions under consideration. Some funding schemes require the consideration of costs to come from a particular perspective; this might be the society as a whole or the health system alone. Alternatively, other schemes focus solely on clinical and patient perspectives, to greater or lesser extents.

All funders expect a RCT to have a sample size justification. 27 Typically, although not necessarily, this would be via a sample size calculation, most commonly based on the specification of a target difference. The specified target difference would be expected to be one that is of interest to their stakeholders; this is typically patients and health professionals, and sometimes the likely funder of the health care (e.g. the NHS in the UK). For industry-funded trials, the considerations are different and these are outlined in the next section (see Industry, payers and regulator).

The practical implications of an overly large trial are perhaps mostly financial (the funder has paid more than necessary to get an answer to the research question and thus there is less available for other trials). However, it is also ethically important to avoid more patients than necessary possibly receiving a suboptimal treatment, or simply to avoid unnecessary burden on further individuals and to avoid losing the opportunity to devote scarce resource funds to other desirable research. What is and is not sufficient in statistical and more general terms is often very difficult to differentiate, except in extreme scenarios. A trial that is too small is at risk of missing an effect. The funder could also later use the target difference in the context of evaluating (formally or informally) whether or not to close a study due to the probability (or lack thereof) of providing a useful answer in the face of substantially slower progression partway through a trial’s recruitment period.

Industry, payers and regulator

Industry-funded trials are typically (but not always) conducted as part of a regulatory submission for a new drug or medical device, or to widen the indications of an existing drug or device. Generally, an active intervention is compared with a placebo control, as this addresses the regulatory question of whether or not the intervention ‘works’. The main exception would be situations in which a new drug is intended to replace an established effective drug, in which case the established drug would be the control. An example is the evaluation of the newer oral anticoagulants, which have been compared with active comparators, such as warfarin or low-molecular-weight heparin, in the submissions for approval.

From an industry perspective, the target difference is often one chosen so that it is important to regulators and health-care commissioners. The key aspects of interest tend to be safety, including tolerability of treatment and consideration of side effects, whether or not the treatment is stopped due to a lack of effect and the effect within those who complete treatment. This has corresponding implications for the estimand(s) of interest. 22,23 Increasingly, payers (health insurance companies and governmental reimbursement agencies) are interested in comparisons with other active therapies, reflecting the need to inform treatment choices in actual clinical practice and considerations of affordability and cost-effectiveness. A new product will be more likely to be reimbursed if there are clinical advantages over existing therapies, in terms of either efficacy or adverse effect profiles, which are provided at an ‘acceptable’ cost. When an intervention is compared with an active control, the treatment effect between them will almost certainly be smaller and the sample size larger than for a placebo-controlled trial, all other things being equal. One common distinguishing feature between a definitive trial (e.g. Phase III) conducted in an industry setting, compared with an academic one, is that all of the evidence pertinent to planning such a trial of a new drug agent will often be readily available within the same company. It is also likely that at least some of the individuals involved will have been involved in a related earlier phase trial of the same drug.

Patient, service users, carers and the public

From the perspective of patients, service users, carers and the public,28 when a formal sample size calculation is performed, the target difference should be one that would be viewed as important by a key stakeholder group (such as health professionals, regulators, health-care funders and preferably patients). A specific point of interest, for those who serve as PPI contributors on research boards, who make funding recommendations and/or assess trial proposals, is likely to be ensuring that the study has considered the most patient-relevant outcome (e.g. a patient-reported outcome), even if it is not the primary outcome. In some situations, the most appropriate primary outcome may be a patient-reported outcome (e.g. comparing treatments for osteoarthritis, in which pain and function are the key measures of treatment benefit). It is highly desirable that a patient, service user and carer perspective feeds into the process for choosing the primary outcome in some way and, when possible, the chosen target difference reflects one that would have a meaningful impact on patient health, according to the research question. Some funders now require at least some PPI in the development of trial proposals and this perspective forms part of the assessment process. 28 It is also increasingly part of the assessment process for assessing existing evidence. 29

Research ethics

Fundamental to the standard ethical justification for the conduct of a RCT, which is a scientific experiment on humans, is (1) that it will contribute to scientific understanding and (2) that the participant is aware of what the study entails and, whenever possible, provides consent to participate. 30,31 Commonly, a third condition, that the participant has the potential to benefit, is also appropriate; this is particularly the case when there may be some risk to the participant. Whatever the specifics of the trial in terms of population, setting, interventions and assessments, it is important that the sample size for a study is appropriate to achieve its aim. There is a need for justification of some form for the number of participants required. As noted earlier, no more participants than ‘necessary’ should be recruited, to avoid unnecessary exposure to a suboptimal treatment and/or the practical burden of participation in a research study. Such a sample size justification may take the form of informal heuristics or, more commonly, a formal sample size calculation.

Clarifying what the study is aiming to achieve and determining an appropriate target difference and sample size is very important, as the research can have a big impact not only on those directly involved as participants, but also on future patients. As far as possible, it is also relevant to consider key patient subgroups or subpopulations of individuals in terms of relevance of findings to them. This could be taken into account when undertaking the sample size calculation (see Appendix 3).

The primary outcome of a randomised controlled trial

The role of the primary outcome

The standard approach to a RCT is for one outcome to be assigned as the primary outcome. 10 This is done by considering the outcomes that should be measured in the study. 32 The outcome is ‘primary’ in the sense of it being more important than the others, at least in terms of the design of the trial, although preferably it is also the most important outcome to the stakeholders with respect to the research question being posed. The study sample size is then determined for the primary outcome. As noted earlier, it is important to consider how the primary outcome relates to the population of interest and intervention effects to be estimated (the estimand of interest). Choosing a primary outcome (and giving it prominence in the statistical analysis of the estimands of interest) performs a number of functions in terms of trial design, but it is clearly a pragmatic simplification to aid the interpretation and use of RCT findings. It provides clarification of what the study primarily aims to use to identify the intervention effects. The statistical precision with which this can be achieved is then calculated according to the analysis of interest. Additionally, it clarifies the initial basis on which to judge the study findings. Specification of the primary outcome in the study protocol (and similarly reporting it on a trial registry) helps reduce overinterpretation of findings. This arises from testing multiple outcomes and selectively reporting those that are statistically significant (irrespective of their clinical relevance). This multiple testing, or multiplicity,33,34 is particularly important, given the high likelihood of chance leading to spurious statistically significant findings when a large number of outcomes are analysed. Pre-specification of a primary outcome, along with the use of a statistical analysis plan and transparent reporting (e.g. making the trial protocol available), limits the scope for manipulating (intentionally or not) the findings of the study. This prevents post hoc shifting of the focus (e.g. in study reports) to maximise statistical significance.

Choosing the primary outcome

A variety of factors need to be considered when choosing a primary outcome. First, in principle, the primary outcome should, as noted above, be a ‘key’ outcome, such that knowledge of its result would help answer the research question. For example, in a RCT comparing treatment with eye drops to lower ocular pressure with a placebo for patients with high eye pressure (the key treatable risk factor for glaucoma, a progressive eye disease that can lead to blindness), loss of vision is a natural choice for the primary outcome. 35 However, it would clearly be important to consider other outcomes (e.g. side effects of the eye drop drug). Nevertheless, knowing that the eye drops reduced the loss of vision due to glaucoma would be a key piece of knowledge. In some circumstances, the preferable outcome will not be used because of other considerations. In this glaucoma example, a surrogate might be used (intraocular pressure, i.e. pressure in the eye) because of the time it takes to measure any change in vision noticeable to a patient and also because this may enable prevention or at least a reduction in the degree of vision loss. Indeed, intraocular pressure is sometimes the primary outcome of RCTs in this area instead of vision or the visual quality of life.

Consideration is also needed of the ability to measure the chosen primary outcome reliably and routinely within the context of the study. Missing data are a threat to the usefulness of an analysis of any study, and RCTs are no different. The optimal mode of measurement may be impractical or even unethical. The most reliable way to measure intraocular pressure is through manometry;36 however, this requires invasive eye surgery. Subjecting participants to clinically unnecessary surgery for the purpose of a RCT is ethical only with very strong mitigating circumstances, particularly as an alternative, even if less accurate, way of measuring intraocular pressure exists. Furthermore, invasive measurements may dissuade participants from consenting to take part in the RCT.

Calculating the sample size varies depending on the outcome and the intended analysis. In some situations, ensuring that the sample size is sufficient for multiple outcomes is appropriate. 37 The three most common outcome types are binary, continuous and survival (time-to-event) outcomes; they are briefly considered in Box 2 and in greater depth in Appendix 3. Other outcome types are not considered here, although it should be noted that ordinal, categorical and count outcomes can be used, although a more complex analysis and corresponding sample size calculation approach is likely to be needed. Continuous outcomes (or a transformed version of them) are typically assumed to be normally distributed, or at least ‘approximately’ so, for ease and interpretability of analysis and for the sample size calculation. This assumption may be inappropriate for some outcomes, such as operation time, hospital stay and costs, which often have very skewed distributions. From a purely statistical perspective, a continuous outcome should not be converted to a binary outcome (e.g. converting a quality-of-life score to high/low quality of life). Such a dichotomisation would result in less statistical precision and lead to a larger sample size being required. 40 If it is viewed as necessary to aid interpretability, the target difference (and corresponding analysis) used in the continuous measure can also be represented as a dichotomy, in addition to being expressed on its continuous scale. Some authors, although acknowledging that this should not be routine, would make an exception in some circumstances when a dichotomy is seen as providing a substantive gain in interpretability, even if it is at a loss of statistical precision. 41 For example, the severity of depression may be measured and analysed on a latent scale, but the proportion of individuals meeting a prespecified threshold for depression or improvement might also be reported and potentially analysed. 42

The three most common outcome types (binary, continuous and time to event) are briefly described below.

BinaryA binary outcome is one with only two possible values (e.g. cured or not, and dead or alive). In terms of trials, they are usually time-bound (i.e. whether or not a participant is alive at 6 months post randomisation). Use of the date of the change in status (e.g. time of death) would lead to a survival or time-to-event outcome. Other common trial binary outcomes are the occurrence of an adverse event (e.g. surgical complication or a pharmacological event such as dryness of mouth).

ContinuousContinuous outcomes refer to those that have a numeric scale. True continuous measures (such as blood pressure measurements) have an infinite number of possible values. For example, a value of 125.2334456 mmHg for the systolic blood pressure is theoretically possible, even if it is difficult to measure it with such precision. Ordinal outcomes (with a sufficient number of discrete values) are often analysed as if they were continuous, owing to the difficulties of both calculating the required sample size and also interpreting the result from a more formal, statistically appropriate, analysis of an ordinal outcome. This is often done when analysing quality-of-life measures,38 in which a latent summary scale is produced by applying a scoring algorithm to responses to a set of items, even though there are a fixed number of discrete states (e.g. there are 243 for the EQ-5D-3L index with values from –0.594 to 1.0, using the UK population weights). The difficulty of calculating the sample size for an ordinal variable increases quickly as the number of responses increases. 39

Time to eventTime-to-event data are often called ‘survival’ data; a common application is for recording the time to death. However, the same statistical methodology can be used to analyse the time to any event. Examples include disease progression, readmission to hospital and wound healing, and positive ones such as time to full recovery.

Time-to-event data present two special problems in their analysis and hence in sample size estimation:

-

Not all participants have an event.

-

Participants are observed for varying amounts of time.

If all participants experience an event within the follow-up period, the data could be analysed as a continuous variable. In clinical studies, including RCTs, it is natural for participants to be observed for varying lengths of time. There are two reasons for this:

-

Some participants drop out before the end of follow-up.

-

Participants are recruited at different times.

Some participants drop out before the end of follow-up because they decline to take further part in the trial or because they experience some other event that means that they can no longer be followed up. For example, in a trial in which the event of interest is death from a cardiovascular cause, a participant who died in a road traffic accident would become unavailable for further follow-up and would be censored at the time of death.

If participants are followed up from recruitment to the final analysis, some will have been observed for a much longer time than others. In most clinical studies, this is the most frequent reason for varying durations of follow-up. The varying time of follow-up is the main reason why simply analysing the proportion of participants who experience an event (i.e. analyse it as if it were a binary outcome) is not appropriate.

EQ-5D-3L, EuroQol-5 Dimensions, three-level version.

Chapter 3 Specifying the target difference

General considerations

Introduction

Despite its key role, the specification of the target difference for a RCT has received surprisingly little discussion in the literature and in existing guidelines for conducting clinical trials. 10,14 As noted above, the target difference is the difference between the interventions in the primary outcome used in the sample size calculation that the study is designed to reliably detect. If correctly specified, it provides reassurance (should the other assumptions be reasonable and the sample size met) that the study will be able to address the RCT’s main aim in terms of the primary outcome, the population of interest and the intervention effects. It can also aid interpretation of the study’s findings, particularly when justified in terms of what would be an important difference. The target difference therefore should be one that is appropriate for the planned principal analysis (i.e. the estimand that is to be estimated and the analysis method to be used to achieve this). 23,25,43,44 This is typically (for superiority trials) what is known as an ITT-based analysis (i.e. according to the randomised groups irrespective of subsequent compliance with the treatment allocation). Other analyses that address different estimands22,25,44 of interest could also inform the sample size calculation (see Appendix 3, Other topics of interest for a related topic). How the target difference can be expressed will depend also on the planned statistical analysis. A target difference for a continuous outcome could be expressed as a difference in means, medians or even as a difference in distribution. Binary outcomes could be expressed as an absolute difference in proportions or as a relative difference [e.g. odds ratio (OR) or risk ratio (RR)]. Irrespective of the outcome type, there are two main bases for specifying the target difference, one that is considered to be:

-

important to one or more stakeholder groups (e.g. health professionals or patients)

-

realistic (plausible), based on either existing evidence (e.g. seeking the best available estimates in the literature) and/or expert opinion.

Recommendations on how to go about specifying the target difference are provided in Box 3. A summary of the seven methods that can be used for specifying the target difference is provided in Methods for specifying the target difference.

The following are recommendations for specifying the target difference in a RCT’s sample size calculation when the conventional approach to the sample size calculation is used. Recommendations on the use (or not) of individual methods are made. More detailed advice on the application of the individual methods can be found elsewhere. 15

Recommendations-

Begin by searching for relevant literature to inform the specification of the target difference. Relevant literature can:

-

relate to a candidate primary outcome and/or the comparison of interest

-

inform what is an important and/or realistic difference for that outcome, comparison and population (estimand of interest).

-

-

Candidate primary outcomes should be considered in turn and the corresponding sample size explored. When multiple candidate outcomes are considered, the choice of primary outcome and target difference should be based on consideration of the views of relevant stakeholders groups (e.g. patients), as well as the practicality of undertaking such a study and the required sample size. The choice should not be based solely on which yields the minimum sample size. Ideally, the final sample size will be sufficient for all key outcomes, although this is not always practical.

-

The importance of observing a particular magnitude of a difference in an outcome, with the exception of mortality and other serious adverse events, cannot be presumed to be self-evident. Therefore, the target difference for all other outcomes requires additional justification to infer importance to a stakeholder group.

-

The target difference for a definitive (e.g. Phase III) trial should be one considered to be important to at least one key stakeholder group.

-

The target difference does not necessarily have to be the minimum value that would be considered important if a larger difference is considered a realistic possibility or would be necessary to alter practice.

-

When additional research is needed to inform what would be an important difference, the anchor and opinion-seeking methods are to be favoured. The distribution should not be used. Specifying the target difference based solely on a SES approach should be considered a last resort, although it may be helpful as a secondary approach.

-

When additional research is needed to inform what would be a realistic difference, the opinion-seeking and review of the evidence-based methods are recommended. Pilot studies are typically too small to inform what would be a realistic difference and primarily address other aspects of trial design and conduct.

-

Use existing studies to inform the value of key ‘nuisance’ parameters that are part of the sample size calculation. For example, a pilot trial can be used to inform the choice of SD value for a continuous outcome or the control group proportion for a binary outcome, along with other relevant inputs, such as the number of missing outcome data.

-

Sensitivity analyses that consider the impact of uncertainty around key inputs (e.g. the target difference and the control group proportion for a binary outcome) used in the sample size calculation should be carried out.

-

Specification of the sample size calculation, including the target difference, should be reported in accordance with the recommendations for reporting items (see Chapter 4, Figure 1) when preparing key trial documents (grant applications, protocols and result manuscripts).

SD, standard deviation; SES, standardised effect size.

A very large literature exists on defining a (clinically) important difference, particularly for quality-of-life outcomes. 45–47 Much of the focus has been on estimating the smallest value that would be considered clinically important by stakeholders [the ‘minimum clinically important difference’ (MCID)]. 45–48 In a similar manner, discussion of the relevance of estimates from existing studies are also common occurrences. It should be noted that it has been argued that a target difference should always meet both of the above criteria. 49 This would seem particularly apt for a definitive Phase III RCT. There is some confusion in the reporting of sample size calculations for trials in the literature and what the use of a particular approach justifies. For example, using data from previous studies (see Pilot studies and Review of the evidence base) cannot by itself inform the importance, or lack thereof, of a particular difference.

The subsequent sections (see Individual- versus population-level important differences and Reverse engineering) consider two special topics, individual- and population-level important difference and reverse engineering of the sample size calculation, respectively.

Individual- versus population-level important differences

In a RCT sample size calculation, the target difference between the treatment groups strictly relates to the difference at the group level. In a similar manner, the health economic consideration refers to how to manage a population of individuals in an efficient and effective manner. However, the difference in an outcome that is important to an individual is not necessarily the same difference that might be viewed as important at the population level. Rose50 grappled with the meaning and relationships between individual- and population-level differences, and their implications, in the context of disease prevention. He noted that, based on data from the Framingham Heart Study,51 an average 10-mmHg lowering of blood pressure could potentially result in a 30% reduction in attributable mortality. Although a 10-mmHg change in an individual might seem small, if a treatment could achieve that average difference, it would be very beneficial. A 10-mmHg change could therefore be justified as an appropriate and important target difference for a trial in a similar population. An individual may wish a greater impact, particularly if the intervention they are to receive is burdensome or carries some risk.

More recently, researchers in other clinical areas have also distinguished between what is ‘important’ at an individual level and what is ‘important’ at a group level for quality-of-life measures. 52–54 In a RCT sample size calculation, the parameters assumed for the outcome in the intervention groups in the sample size calculation, including the target difference, should reflect the population-level values (e.g. the mean difference in Oxford Knee Score), even though individual values can vary. 55 When considering the importance of and/or how realistic a specific difference is, the intended trial population must be borne in mind. The difference that would be considered important by patients may well vary between populations (e.g. according to the severity of osteoarthritis). 56 For example, the importance of a 5-point increase (improvement) in the Oxford Knee Score for a relatively healthy population, with a mean baseline level of 30 points (out of 48), could well differ from that of a population that has severe osteoarthritis, with a mean baseline level of 10 points. Similarly, in terms of population risk (e.g. risk of a stroke), a small reduction at a population level might be considered very important, whereas for a group of high-risk patients, a more substantial reduction may be required. 50

Work has shown that individuals differ in what magnitude of difference they consider important, at least in part due to their varying baseline levels. 18,45 This general issue has implications when selecting a target difference, as it should be a difference that reflects the analysis at the group (and intended population) level and the comparison at hand. Care is therefore needed when using values from external studies to infer an important difference.

Reverse engineering

The difference that can be detected for a given sample size is often calculated. It can be apparent that this has been done (e.g. when one sees a precise target difference and a round sample size) without any other justification. For example, a target difference of 16.98 for a trial with a pooled standard deviation (SD) of 30, statistical power of 80% at two-sided 5% significance level and two treatment groups of 100 participants has clearly been reverse engineered.

A key distinction needs to be made between calculating the target difference for a prospective trial from calculating the target difference on the basis of the recruited sample size once the trial has been completed (post hoc power calculation). The former has a useful role in the process of planning and deciding what is feasible; the latter is unhelpful and uninformative. 57

Case study 6 describes a situation in which a fixed (and complete) number of observations were expected without loss due to consent or attrition-driven subsampling, but the corresponding target difference was calculated and deemed to be an important and realistic difference to use.

Methods for specifying the target difference

The methods for specifying the target difference can be broadly grouped into seven types. These are briefly described below.

Anchor

The quantification of a target difference or effect size for a sample size calculation is not straightforward for an established end-point or outcome measure. 58 For a new outcome, especially a patient-reported health-related quality-of-life measure, it is even more difficult, as clinical experience with using the new outcome may not have been sufficiently long to evaluate what a clinically meaningful or important difference might be. Additionally, for a measure such as a quality-of-life outcome, the scale has no natural meaning and is completely a function of the scoring method (i.e. a 1-point difference does not have any naturally interpretable value).

The outcome of interest can, however, be ‘anchored’ by using someone’s judgement, typically a patient or a health professional, to define what an important difference is. 46–48 This is typically achieved by comparing a patient’s health before and after a recognised treatment, and then linking the change to participants who showed improvement and/or deterioration according to the judgement of changes (e.g. on a five-point Likert scale from ‘substantial deterioration’ through to ‘substantial improvement’). Alternatively, a more familiar outcome (for which patients or health professionals more readily agree on what amount of change constitutes an important difference) can be used. In this way, one outcome is anchored to another outcome about which more is known. Contrasts between patients (such as individuals with varying severity of a disease) can also be used to determine a meaningful difference (e.g. via patient-to-patient assessments). 20,59

The Food and Drug Administration has described a variety of methods for determining the minimum important difference, including the anchor approach. 7 Changes in quality-of-life measures can be mapped to clinically relevant and important changes in non-quality-of-life measures of treatment outcome in the condition of interest (although they may not correlate strongly). 60 There are a multitude of minor variations in the approach (e.g. the anchor question and responses, or how the responses are used), although the general principles are the same. 15,46–48

Distribution

Two distinct distribution approaches can be grouped under this heading:15,45 (1) measurement error and (2) rule of thumb. The measurement error approach determines a value that is larger than the inherent imprecision in the measurement and that is therefore likely to be consistently noticed by patients. This is often based on the standard error of measurement. The standard error of measurement can be defined in various ways, with different multiplicative factors suggested as signifying a non-trivial (important) difference. The most commonly used alternative to the standard error of measurement method (although it can be thought of as an extension of this approach) is the reliable change index proposed by Jacobson and Truax,61 which incorporates confidence around the measurement error.

The rule-of-thumb approach defines an important difference based on the distribution of the outcome, such as using a substantial fraction of the possible range without further justification. An example would be viewing a 10-mm change on a 100-mm visual analogue scale measuring symptom severity as a substantial shift in outcome response.

Measurement error and rule-of-thumb approaches are widely used in the area of measurement properties of quality of life, but do not translate straightforwardly to a RCT target difference. For measurement error approaches, this is because the assessment is typically based on test–retest (within-person) data, whereas most trials are of parallel-group (between-person) design. Additionally, measurement error is not sufficient rationale as the sole basis for determining the importance of a particular target difference. More generally, the setting and timing of data collection may also be important to the calculation of measurement error (e.g. results may vary between pre and post treatment). 62 Rule-of-thumb approaches are dependent on the outcome having inherent value (e.g. the Glasgow Coma Scale score), in which a substantial fraction of a unit change (e.g. one-third or a half) can be viewed as important. In this situation, any reduction is arguably also important and the issue is more one of research practicality (as per mortality outcome) than detecting a clinically important difference.

Distribution approaches are not recommended for use to inform the choice of the target difference, given their inherently arbitrary nature in this context.

Health economic

Approaches to using economic evaluation methodology to inform the design of RCTs have been proposed since the early 1990s. 63,64 These earlier approaches sought to identify threshold values for key determinants of cost-effectiveness and are akin to determining an important difference in clinical outcomes, albeit on a cost-effectiveness scale. However, uptake has been very low. A recent review by Hollingworth and colleagues65 identified only one study that considered cost-effectiveness in the sample size calculation. They also showed that trials powered on clinical end points were less likely to reach definitive conclusions of cost-effectiveness than on clinical effectiveness.

Despite the lack of use, further development of methods has continued. A strand in the development of these methods has been to focus on a variation in the standard frequentist approach to sample size estimation. The most recent exposition of this was by Glick. 66,67 Glick focused on a particular economic metric, the incremental net benefit (INB) statistic. A key aspect of the INB is that it monetarises a unit of health effect by multiplying it by the decision-maker’s willingness to pay for that unit of health effect. Power is taken to be the chance that the lower limit of the confidence interval (CI) calculated from the future trial exceeds 0. An important difference is then any difference in INB that is ≥ 0, and the size of the trial can be set so as to detect this. However, Glick notes that willingness to pay is not known for certain (e.g. in England, the National Institute for Health and Care Excellence68 currently specifies a range of between £20,000 and £30,000 per quality-adjusted life-year gained) and that, other things being equal, increasing the decision-maker’s willingness to pay for a unit of health effect reduces the sample size. An alternative economics-based approach, value of information, is summarised in Appendix 4.

Opinion-seeking

The opinion-seeking method determines a value, a range of plausible values, or a prior distribution for the target difference by asking one or more ‘experts’ to state their opinion on what value(s) for a particular difference would be important and/or realistic. 69,70 Eliciting opinions on the relative importance of the benefits and risks of a medicine may also be used to inform the choice of non-inferiority or equivalence margins for such trials. 71,72

The definition of an expert (e.g. clinician, patient or triallist) must be tailored to the quantity on which an opinion is sought. Various approaches can be used to identify experts (e.g. key opinion leaders, literature search, mailing list or conference attendance). Other variations include the approach used to elicit opinion (e.g. group and/or individual interviews, questionnaires, e-mail surveys or workshops),73–75 the complexity of the data elicited (from a single value76 to multiple assessments incorporating uncertainty77 and/or sensitivity to key factors, such as baseline level78) and the method used to consolidate the results into an overall value, range of values or distribution. 69

Many elicitation techniques have been developed in the context of Bayesian statistics to establish a prior distribution, quantifying an expert’s uncertainty about the true treatment difference. 69 The expert will be asked a series of questions to elicit a number of summaries of their prior distribution. The number and nature of these summaries will depend on the nature of the treatment difference (i.e. whether or not this is a difference in means, RR, etc.) and what parametric distribution (if any) will be used to model the expert’s prior. Typically, more summaries are elicited than are strictly necessary, to enable model checking. Feedback of the fitted prior is an essential part of the elicitation process to ensure that it adequately captures the expert’s beliefs. Examples of prior elicitation include the Continuous Hyperfractionated Accelerated RadioTherapy (CHART) and MYcophenolate mofetil for childhood PAN (MYPAN) trials. 77,79,80 When the opinions of several experts are elicited, several priors may be used to capture a spectrum of beliefs (e.g. sceptical, neutral or enthusiastic). Priors may be used to inform the design of a conventional trial (e.g. when setting the sample size or an early stopping rule),79,81 to ensure that the study would convince a prior sceptic. Alternatively, priors may be incorporated into the interpretation of a Bayesian trial to reduce uncertainty, which may be appropriate in cases such as rare diseases, when a conventionally powered study is infeasible. 82 Bayesian approaches to sample size calculations are discussed in more detail in Appendix 4.

An advantage of the opinion-seeking method is the relative ease with which it can be carried out in its simpler forms. 78 However, the complexity increases substantially when undertaken as a formal elicitation. 77 Whatever the approach used, it should ideally match, as closely as possible, the intended trial research question. 73,78,83 Findings will vary according to the patient population and comparison of interest. Additionally, different perspectives (e.g. patient vs. health professional) may lead to very different opinions on what is important and/or realistic. 83 The views of individuals who participate in the elicitation process may not represent those of the wider community. Furthermore, some methods for eliciting opinions have cost or feasibility constraints (e.g. those requiring face-to-face interaction). However, alternative approaches, better able to capture the views of a larger number of experts, require careful planning to ensure that questions are clearly understood. Care is needed with these approaches, as they may be subject to low response rates78 or may produce priors with limited face validity.

Pilot studies

Pilot studies come in various forms. 84 A useful distinction can be made between pilot studies, per se, and the subset of pilot studies (pilot trials) that can be defined as an attempt to pilot the study methodology prior to conducting the main trial. As such, data from a pilot trial are likely to be directly relevant to the main trial. This section therefore focuses on pilot trials, although the considerations are relevant to other pilot studies that have not been designed with a particular trial design in mind. It should be noted that some Phase II trials can be viewed in a similar manner as preparing for a Phase III trial and therefore can inform sample size calculations.

Pilot trials are not well suited to quantifying a treatment effect, as they usually have a small sample size and are not typically large enough to quantify, with much certainty, what a realistic difference would be. 85 Accordingly, avoiding conducting formal statistical testing and focusing instead on descriptive findings and interval estimation is recommended. 84,86 In terms of specifying the target difference for the main trial, pilot trials are most useful in providing estimates of the associated ‘nuisance’ parameters (e.g. SD and control group event proportion; see Chapter 5, Case study 2: the ACL-SNNAP trial, for more details). 84,87 Like any quantity, these parameters will, however, be estimated with uncertainty, which has implications for the sample size of both a pilot trial and a subsequent main trial. 88

Another use of a pilot trial is to assess the plausibility (at a less exacting level of statistical certainty than would be typically required for a main trial) of a given difference considered to be important through the calculation of a CI. 87 Pilot trial-based CIs can be considered investigative and can be used to help inform decision-making. If an effect of this size is not ruled out by the CI of the estimated effect from the pilot trial, then results could be deemed sufficiently promising to progress to the main trial. 86,89

Review of the evidence base

An alternative to conducting a pilot trial is to review existing studies to assess a realistic effect and therefore inform the choice of target difference for the main trial. 15 Pre-existing studies for a specific research question can be used (e.g. using the pooled estimate of a meta-analysis) to determine the realistic difference. 14 It has been argued strongly and persuasively that this should be routine prior to embarking on a new trial. 90 Extending this general approach, Sutton and colleagues91,92 derived a distribution for the effect of a treatment from a meta-analysis, from which they then simulated the effect of a ‘new’ study; the result of this study was added to the existing meta-analysis data, which were then reanalysed. Implicitly, this adopts a realistic difference as the basis for the target difference and therefore makes no judgement about the value of the effect should it truly exist. Using the same target difference as a previous trial, although heuristically convenient, does not provide any real justification, as it may or may not have been appropriate when used in the last study.

It is likely that existing evidence is often informally used (indeed, research funders typically require a summary of existing evidence prior to commissioning a new study), although little research has addressed how it should formally be done. Estimates identified from existing evidence may not necessarily be appropriate for the population and estimand under consideration for the trial, so the generalisability of the available studies and susceptibility to bias should be considered. Indeed, the planning of a new study implies some perceived limitation in the existing literature. Imprecision of the estimate is also an important consideration and publication bias may also be an issue if reviews of the evidence base consider only published data. If a meta-analysis of previous studies is used to inform the sample size calculation for a new trial, additional evidence published after the search used in the meta-analysis was conducted may require the updating of the sample size calculation during trial conduct, to maintain a realistic difference. The control group proportion or the SD (as well as other inputs that influence the overall sample size) can be estimated using existing evidence. An analysis that also makes use of other studies (existing or ongoing) can provide a sample size justification for what may be otherwise too small a study to provide, on its own, a useful result. 93

Standardised effect size

The magnitude of the target difference on a standardised scale [standardised effect size (SES)] is commonly used to infer the value of detecting this difference when set in comparison with other possible standardised effects. 15,85 Overwhelmingly, the practice for RCTs, and in other contexts in which the (clinical) importance of a difference is of interest, is to use the guidelines suggested by Cohen94 for Cohen’s d metric (i.e. 0.2, 0.5 and 0.8 for small, medium and large effects, respectively) as de facto justification. These values were given in the context of a continuous outcome for a between-group comparison (akin to a parallel-group trial), with the caveat that they are specific to the context of social science experiments. Despite this, due in part to having some face validity and in part to the absence of a viable or ready alternative, justification of a target difference on this basis is widespread. Colloquially, and rather imprecisely, Cohen’s d value is often described as the trial ‘effect size’.

Other SES metrics exist for continuous (e.g. Dunlap’s d), binary (e.g. OR) and survival [hazard ratio (HR)] outcomes, and a similar approach can be readily adapted for other types of outcomes. 94,95 The Cohen guidelines for small, medium and large effects can be converted into equivalent values for other binary metrics (e.g. 1.44, 2.48 and 4.27, respectively, for ORs). 96 Guidelines for other effect sizes exist (including some suggested by Cohen94). Informally, a doubling or halving of a ratio is sometimes seen as a marker of a large relative effect. However, no equivalent guideline values are in widespread use for any of the other effect sizes. In the case of relative effect metrics (such as the RR), this probably reflects the difficulty in considering a relative effect apart from the control group response level.

The main benefit of using a SES method is that it can be readily calculated and compared across different outcomes, conditions, studies, settings and people; all differences are translated into a common metric. It is also easy to calculate the SES from existing evidence if studies have reported sufficient information. When calculated, the SD (or equivalent inputs) used should reflect the intended estimand (i.e. the population and outcome).

It is important to note that SES values are not uniquely defined and different combinations of values on the original scale can produce the same SES value. For example, different combinations of mean and SD values produce the same Cohen’s d statistic SES estimate. A mean of 5 (SD 10) and a mean of 2 (SD 4) both give a standardised effect of 0.5 SDs. As a consequence, specifying the target difference as a SES alone, although sufficient in terms of sample size calculation, can be viewed as insufficient, in that it does not actually define the target difference for the outcome measure of interest in the population of interest. A further limitation of the SES is the difficulty in determining why different effect sizes are seen in different studies, for example whether these differences are due to differences in the outcome measure, intervention, settings or participants in the studies, or study methodology. This approach should be viewed as, at best, a last resort. It is perhaps more useful (for a continuous outcome) to provide a benchmark to assess the value from another method. Preferably, some idea of effect sizes for an accepted treatment in the specific clinical area of interest would be available. 97

Chapter 4 Reporting of the sample size calculation for a randomised controlled trial

The approach taken and the corresponding assumptions made in the sample size calculation should be clearly specified, as well as all inputs and formula so that the basis on which the sample size was determined is clear. This information is critical for reporting transparently, allows the sample size calculation to be replicated and clarifies the primary (statistical) aim of the study. A recommended list of reporting items for recording in key trial documents (grant applications, protocols and results paper) is provided in Figure 1, for when the conventional approach to sample size calculation has been used. When another approach has been used, appropriate items should be reported sufficient to ensure transparency and allow replication.

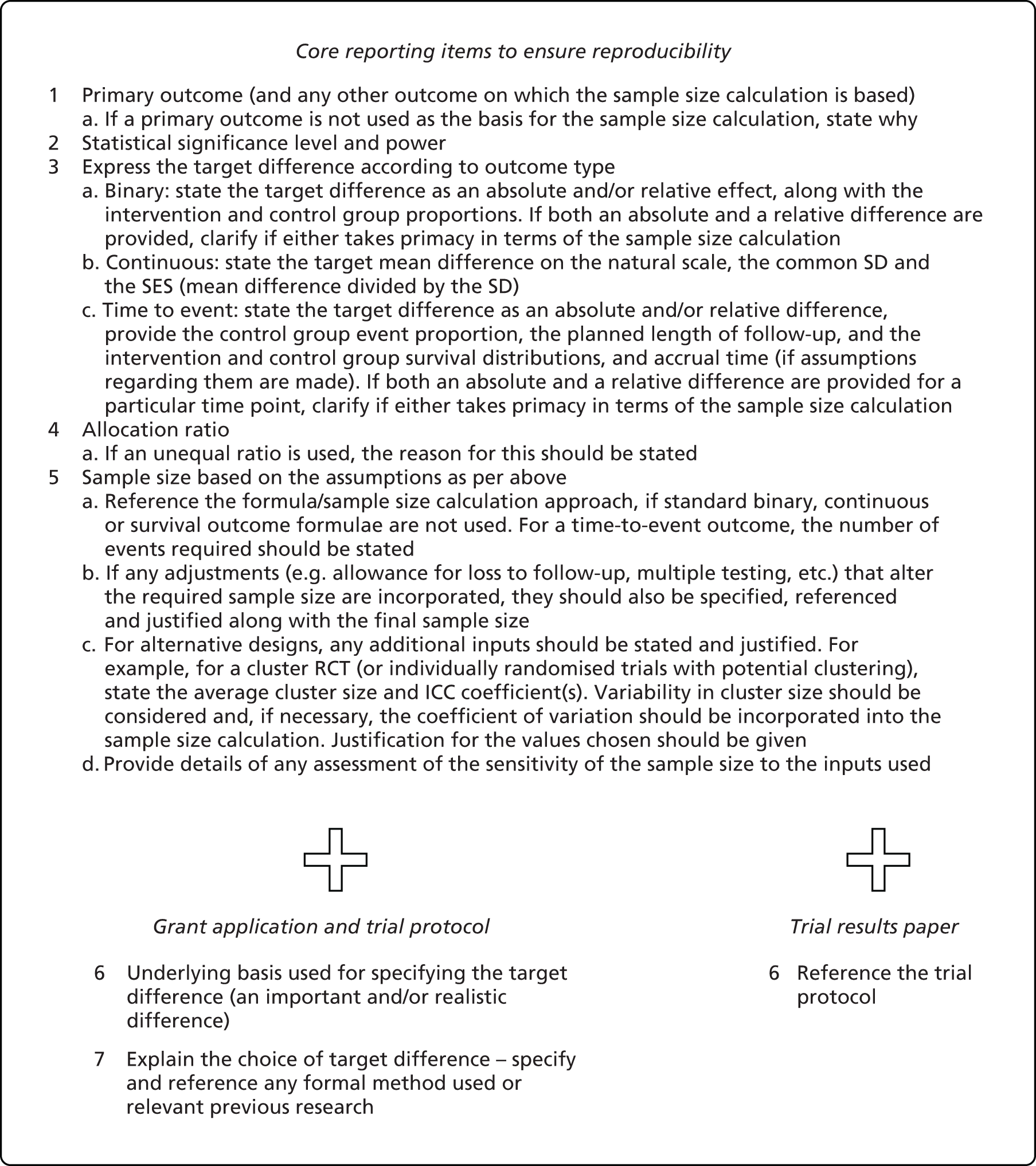

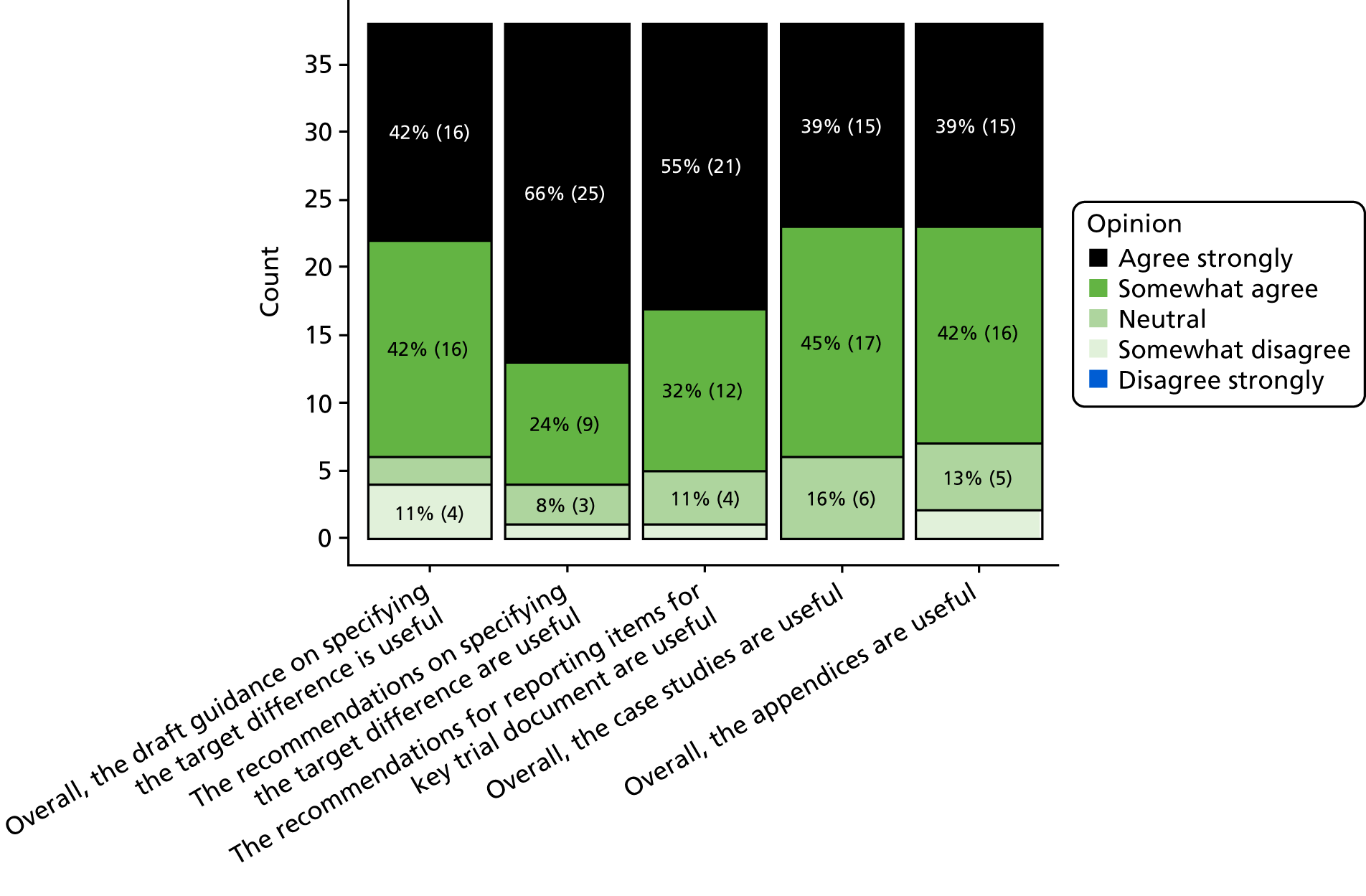

FIGURE 1.

Recommended DELTA2 reporting items for the sample size calculation of a RCT with a superiority question. ICC, intracluster correlation.

Core items sufficient to replicate the sample size calculation should be provided in all key documents. Under the conventional approach with a standard (1 : 1 allocation two-arm parallel-group) trial design and unadjusted statistical analysis, the core items that should be stated are the primary outcome; target difference, appropriately specified according to the outcome type; associated nuisance parameters; and statistical significance and power. Specification of the target difference in the sample size calculation section varies according to the type of primary outcome. The expected (predicted) width of the CI can be determined for a given target difference and sample size calculation, and can be a helpful further aid in making an informed choice about this part of a trial’s design and could also be reported. 98

When the calculation deviates from the conventional approach (see Appendix 3), whether by research question or statistical framework, this should be clearly specified. Formal adjustment of the significance level for multiple outcomes, comparisons or interim analyses should be specified. 33,34,37,99 Justification for all input values assumed should be provided.

We recommend that trial protocols and grant applications report additional information, explicitly clarifying the basis used for specifying the target difference and the methods/existing studies used to inform the specification of the target difference. Examples of a trial protocol sample size section under a conventional approach to the sample size calculation for a standard trial and unadjusted analysis are provided in Boxes 4–6 for binary, continuous and time-to-event primary outcomes, respectively. The word counts for these texts are 74–125 words, illustrating that key information can be conveyed in a limited amount of text.

The primary outcome is presence of urinary incontinence. The sample size is based on a target difference of 15% absolute difference (85% vs. 70%) at 12 months post randomisation. This magnitude of target difference was determined to be both a realistic and an important difference from discussion between clinicians and the project management group, and from inspection of the proportion of urinary continence in the trials included in a Cochrane systematic review. 101 The control group proportion (70%) is also based on the observed proportion in the RCTs in this review. Setting the statistical significance to the two-sided 5% level and seeking 90% power, 174 participants per group are required, giving a total of 348 participants. Allowing for 13% missing data leads to 200 per group (400 participants overall).

Reproduced from Cook et al. 14 Contains information licensed under the Non-Commercial Government Licence v2.0.

The primary outcome is Early Treatment Diabetic Retinopathy Study (ETDRS) distance visual acuity. 103 A target difference of a mean difference of five letters with a common SD of 12 at 6 months post surgery is assumed. Five letters is equivalent to one line on a visual acuity chart and is viewed as an important difference by patients and clinicians. The SD value is based on two previous studies – one observational comparative study104 and one RCT. 105 This target difference is equivalent to a SES of 0.42. Setting the statistical significance to the two-sided 5% level and seeking 90% power, 123 participants per group are required, giving 246 participants (274, allowing for 10% missing data) overall.

Reproduced from Cook et al. 14 Contains information licensed under the Non-Commercial Government Licence v2.0.

The primary outcome is all-cause mortality. The sample size was based on a target difference of 5% in 10-year mortality, with a control group mortality of 25%. Both the target difference and control group mortality proportions are realistic, based on a systematic review of observational (cohort) studies. 107 Setting the statistical significance to the two-sided 5% level and seeking 90% power, 1464 participants per group are required, giving a total of 2928 participants (651 events).

Reproduced from Cook et al. 14 Contains information licensed under the Non-Commercial Government Licence v2.0.

Owing to space restrictions, in many publications the main trial paper is likely to contain less detail than is desirable. Nevertheless, a minimum set of reporting items is recommended for the main trial results paper, along with full specification in the trial protocol. The trial results paper should reference the trial protocol, which should be made publicly available. The recommended list of items given in Figure 1 for the trial paper (as well as for the protocol) is more extensive than that in the Consolidated Standards of Reporting Trials (CONSORT) (including the 2010 version)108 and the Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT)109 statements.

Chapter 5 Case studies of sample size calculations

Overview of the case studies

A variety of case studies are provided for different trial designs, including varying types of primary outcomes, availability of evidence to inform the target difference and level of complexity. A short description is provided in Table 1.

| Number | Description | Trial |

|---|---|---|

| 1 | A standard (two-arm parallel-group) trial, in which the opinion-seeking and review of the evidence-based methods were used to inform the target difference for a binary outcome | MAPS100 |

| 2 | A two-arm parallel-group trial, in which the anchor and distribution methods were used to inform the target difference for a continuous quality-of-life outcome | ACL-SNNAPa |

| 3 | A crossover trial, in which the opinion-seeking and review of the evidence base methods were used to inform the target difference for a binary patient-reported outcome | OPTION-DM110 |

| 4 | A three-arm parallel-group trial, in which the review of the evidence base was used to inform the target difference for a binary clinical outcome | SUSPEND111 |

| 5 | A three-arm/two-stage parallel-group trial, in which the anchor, review of the evidence base and SES methods were used to inform an important and realistic difference in a continuous quality-of-life outcome | MACRO112,113 |

| 6 | A two-arm cluster trial, in which the opinion-seeking and review of the evidence base methods were used to inform an important and realistic difference in a continuous cluster-level process measure outcome | RAPiD114 |

Case study 1: the MAPS trial

Radical prostatectomy is carried out for men suffering from early prostate cancer. The operation is usually carried out through an open incision in the abdomen, which may damage the urinary bladder sphincter, its nerve supply and other pelvic structures. Urinary incontinence occurs in around 90% of men initially, but the long-term prognosis varies from 2% to 60%, depending on how incontinence is measured and time after surgery. Successive Cochrane systematic reviews101 have shown that, although conservative treatment based on pelvic floor muscle training may be offered to men with urinary incontinence after prostate surgery, there is insufficient evidence to evaluate its effectiveness and cost-effectiveness. Men After Prostate Surgery (MAPS)100 was a multicentre RCT that aimed to assess the clinical effectiveness (primarily by looking at the presence of urinary incontinence post treatment) and cost-effectiveness of active conservative treatment delivered by a specialist continence physiotherapist or a specialist continence nurse, compared with standard management, in men receiving a radical prostatectomy at 12 months after surgery.