Notes

Article history

The research reported in this issue of the journal was funded by the HTA programme as project number 15/141/09. The contractual start date was in September 2014. The draft report began editorial review in May 2019 and was accepted for publication in November 2019. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

none

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2020. This work was produced by Hernández Alava et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

2020 Queen’s Printer and Controller of HMSO

Chapter 1 Introduction

In many health-care systems across the globe, decisions about the types of health technologies, strategies and service delivery options provided are informed by some form of economic evaluation. This entails making comparisons between competing options in terms of their costs and benefits. The type of economic evaluation in most widespread use is referred to as cost-effectiveness analysis (CEA). In particular, health benefits are expressed as quality-adjusted life-years (QALYs). The QALY is a metric that combines concerns for both length of life and quality of life into a single numeraire. It does so by rating health states on a scale anchored around the values of one (a year spent in full health) and zero (states equivalent to death).

The value that any specific health state can take on this scale is limited to a maximum of one, but can take negative values if it is considered to be so severe that it is deemed worse than being dead. These health state valuations, sometimes referred to as health state utilities, can be estimated using many different methods. They can also be estimated from different samples of respondents. For example, there is significant debate about whether health state utilities should reflect the values of patients with experience of the condition or the values of the general public. Each of these options leads to different valuations of health states and are important areas of research, though they are not the focus of this report.

Our aim is to present new research on a specific approach to estimating health state utilities that has become increasingly prevalent in recent years. This is the approach referred to variously as ‘mapping’, ‘cross-walking’ or ‘transfer to utility’. We use the term ‘mapping’ throughout this report.

Mapping refers to a two-stage process to estimate the utilities for the health states required for a CEA. In the first stage, the relationship between the health state utilities of interest and some set of explanatory variables is estimated using data from a sample of patients with the same clinical condition but not necessarily related to a study of the health technology that is the focus of the CEA. The explanatory variables may be some form of clinical measure, patient-reported outcome measures (PROMs) or other sociodemographic information. In the second stage, the estimated model is used to predict the health state utilities for health states required in the cost-effectiveness model.

This report provides more detail of what mapping is, how it has been performed previously and the challenges that are faced when conducting a mapping study (see Chapter 2). In Chapter 3 we introduce a series of flexible statistical methods for mapping that are designed to overcome many of these challenges. We describe how they can be used and how the results from different model types can be compared in a meaningful way. Chapter 4 presents results from a series of case studies that test and compare the performance of different mapping models as well as a separate case study of the specific case of mapping between two preference-based measures (PBMs). In Chapter 5 we present some preliminary analyses on a number of methodological issues that we have identified in the course of our research. Finally, we summarise our overall findings and provide recommendations for future research based on those findings in Chapter 6.

Chapter 2 Background

Preference-based measures

To calculate QALYs, there is a requirement not only to describe the impact of health technologies on quality of life but also for that quality of life to be valued on the appropriate scale, that is, based on the preferences of an appropriate sample and anchored around the values of one for full health and zero for states equivalent to being dead.

One option for achieving this is to ask patients with the condition in question and experiencing the health states that are relevant to the CEA to value their own health. The main methods for undertaking such valuations take the form of thought experiments and are known as time trade-off (TTO) and standard gamble (SG), though a range of other valuation methods can also be used, sometimes in combination. TTO requires respondents to indicate the length of life in full health that they would consider equivalent to some other length of life in their impaired state. They are therefore asked to directly trade off length of life for improved quality of life. SG asks respondents to trade off uncertainty (between full health and death) for health. Specifically, respondents are asked to choose between a certain option in impaired health and an option associated with a return to full health with probability p, but also a risk of death with probability 1 – p.

However, these approaches are time-consuming to administer, relatively costly and place a substantial burden on both study participants and researchers. In the context of a clinical study, these approaches would often be considered not feasible. In addition to these practical constraints on direct utility elicitation, the prevailing view of most health technology assessment (HTA) agencies is that the preferences of the general public, rather than of patients only, are those most appropriate for informing investment decisions in a publicly funded health-care system. Instead of obtaining values directly from patients, the values for health states that are then used to calculate QALYs predominantly come from generic PBMs, which are based on the preferences of some sample of the general public.

Most PBMs are intended to be applicable to a wide range of different disease areas and health technologies and hence are termed ‘generic’. These measures have become core features of the standard approach to measuring and valuing health and key inputs to the calculation of QALYs. A PBM comprises two elements: a survey instrument that is used to describe health and a valuation set that provides an ‘off-the-shelf’ set of values for each of the health states that can be described by the survey instrument. These sets of values have been calculated using methods consistent with economic theory, such as TTO and SG, in large scale samples of the general population. Thus, the practical difficulties associated with the administration of these methods in patient samples are avoided.

Several such generic PBM instruments have been developed. The most widely used examples are the EuroQoL-5 Dimensions (EQ-5D),1 of which there are two main versions [the EuroQoL-5 Dimensions, three-level version (EQ-5D-3L), and the EuroQoL-5 Dimensions, five-level version (EQ-5D-5L)]; the Short Form questionnaire-6 Dimensions (SF-6D),2 which is based on the Short Form questionnaire-36 items (SF-36);3 and the eight-dimensional Health Utility Index Mark 3 (HUI3). 4 Table 1 summarises these example measures.

| Instrument | Dimensions | Levels | Number of health states | Valuation method | Range of health utilitiesa |

|---|---|---|---|---|---|

| EQ-5D-3L | Five dimensions: mobility, self-care, usual activities, pain/discomfort and anxiety/depression | Three levels: no/some/extreme problems | 243 | TTO | –0.594 to 1 |

| EQ-5D-5L | Five dimensions: mobility, self-care, usual activities, pain/discomfort and anxiety/depression | Five levels: no/slight/moderate/severe/extreme problems | 3125 | TTO/DCE | –0.285 to 1 |

| SF-6D | Six dimensions: physical functioning, role limitations, social functioning, pain, mental health and vitality | Between four and six levels in each dimension | 18,000 | SG | 0.301 to 1 |

| HUI3 | Eight dimensions: vision, hearing, speech, ambulation, dexterity, emotion, cognition and pain | Between five and six levels in each dimension | 972,000 | SG and VAS | –0.359 to 1 |

Both the health classification systems and the response samples and analytical methods that underpin the valuation sets differ for each of these PBMs. Effectively, this means that PBMs cannot be treated as if they were interchangeable: they do not generate the same values for the same health states. 9–14 This has important implications for policy-makers seeking consistency across the health-care allocation decisions they are required to make.

The EQ-5D-3L is one of the most widely used PBMs and can be used to illustrate how PBMs are constructed and used. Its descriptive system comprises five dimensions: mobility, self-care, usual activities, pain/discomfort and anxiety/depression. Respondents are asked to indicate their health status on each dimension at one of three levels: no problems, some problems and extreme problems. In total, 243 different health states can be specified by this descriptive system. 5 In the UK, the values attached to these health states range from –0.594 for the worst health state (extreme problems in all dimensions) to 1 for full health (no problems in any dimension). Values for 42 of the 243 health states were elicited using TTO from a final sample of 3395 UK members of the general public. 5 To obtain values for all health states described by the EQ-5D-3L, a regression model was used on these data to estimate the value of all 243 health states.

Many jurisdictions recommend the use of PBMs for economic evaluation, such as those of England and Wales,15 Spain,16 France,17 Thailand,18 Finland,19 Sweden,20 Poland,21 New Zealand,22 Canada,23 Colombia24 and the Netherlands. 25 Some recommend the use of a specific instrument, usually the EQ-5D. 26

To use PBMs to facilitate the estimation of QALYs for CEA, the ideal situation in many cases would see the PBM administered to patients as a PROM, at multiple time points, in clinical studies of the health technology of interest. The patient responses to the descriptive system of the PBM would then be attached to the pre-existing, off-the-shelf values by the analyst, who would calculate the health gain, in QALY terms, associated with the health technology compared with the alternative treatment in the clinical study.

However, there are many settings where either this ideal situation does not exist or it is insufficient for the needs of the economic analysis. First, there may have been no PBM administered as part of the relevant clinical studies. PBMs are largely required for the economic analysis, yet in many situations the design of clinical studies focuses exclusively on establishing clinical effectiveness. Although HTA agencies may increasingly encourage the use of PBMs, the impact of this varies and it is only one of many different considerations for those funding and designing clinical studies. Second, there may have been a PBM administered in the relevant clinical studies but it is not the one that is recommended for the jurisdiction in which the economic evaluation is to be undertaken. This frequently occurs in cases of multinational clinical studies in which decisions about which PBM may be taken is based on judgements about the needs of the countries in which the clinical study is taking place, or commercial judgements about the most important markets. It can also be the case that, as new versions of PBMs are developed, the HTA agency recommendations change. Third, although the appropriate PBM may have been administered in clinical studies, this may be insufficient for the needs of the economic evaluation. Often, economic models are required to extrapolate the differences in costs and benefits beyond the limited time horizon of the clinical study, and/or to different populations. There may be several studies that need to be combined, and multiple comparators not included in the key studies need to be modelled. The clinical studies may not provide sufficiently large patient samples, patients may be healthier than patients in real clinical practice and there may be few observations of rare but important adverse events of disease complications. All of these factors mean that there is insufficient information on health utilities from the clinical studies.

In all of these cases there is a deficiency in the evidence base that makes it insufficient to furnish the requirements of the economic evaluation. ‘Mapping’ is one method that can be used to try to overcome this deficiency.

Mapping: what is it and why is it used?

Mapping, also referred to as ‘cross-walking’ or ‘transfer to utility’,27 is a method used to predict what the value of a health state would have been, perhaps conditional on many other factors, had it been recorded directly with the PBM of choice. Mapping requires the identification of a suitable external reference data set (meaning a different data set to the clinical studies that are deficient in some way) that contains both the PBM required for the economic evaluation and the measure(s) that have been used in the clinical study or that otherwise define the health states of interest.

The data from the mapping data set can then be used to estimate the relationship between the PBM and the clinical outcome measures, thereby providing the means to bridge the gap between the evidence available in the clinical studies and the requirements of the economic evaluation. The mapping is used to impute the utility of health states from non-utility-based information about those health states. Note that the mapping data set does not need to be from a study of the technology in question, nor does it need to be derived from a randomised clinical study, because the mapping estimation itself does not entail the estimation of a treatment effect.

The estimated statistical relationship may then be used to infer the missing PBM in the clinical study so that it can be incorporated in the economic evaluation. If y denotes health utility and x the vector of conditioning variables used in the mapping model (for example, the clinical outcome measure age or gender), the estimated mapping model gives us an estimate of the conditional distribution of the utilities, f(y|x). We can use the mapping model to do either of the below:

-

Simulate the distribution of utilities across patients using the full conditional distribution, f(y|x). This use could be appropriate for a patient-based simulation model, or an economic evaluation alongside a clinical trial, where the analyst needs to use the full distribution of patient utilities.

-

Predict the missing utility using the conditional expectation, E(y|x), as would be required for a typical cohort-based decision model.

These two alternative uses of the mapping model are related to important concepts often confused in the mapping literature. The unconditional distribution of utilities across patients, f(y), describes the distribution of health state utilities. This distribution is bounded at the top by one, the value of full health, and at the bottom by the lowest utility value for the particular instrument used (see Table 1). For a given set of conditioning variables, x = X, the conditional distribution, f(y|X), describes the distribution of utilities in the subpopulation of patients for whom the conditioning variables take the combination of values X; individuals with the same observable characteristics X differ in their observed utilities owing to an unobserved random component. The conditional expectation, E(y|X), is the mean of the conditional distribution, f(y|X); individuals with the same observable characteristics X share the same mean. Therefore, the variation in the distribution of the conditional means, E(y|X), is due to the variation in the combinations of values X in the population of interest. The conditional distribution, f(y|x), includes, in addition, the unexplained variation of utility around the conditional means. Hence, the distribution of the conditional means differs from the conditional distribution. In particular, the distribution of the conditional means will always have less variation than the conditional distribution. 28 We outline this issue in more detail in Chapter 3, Predictions: mean versus distribution. It is worth noting at this point that estimating the entire conditional probability structure, f(y|X), has the advantage of allowing the mapping model to be used for both (1) and (2) above, as the conditional means can be derived from it.

Mapping is in widespread use in HTA. A database of mapping studies updated in 2019 [Health Economics Research Centre (HERC) database of mapping studies]29 contains 182 published studies. Many more are undertaken for specific economic evaluations but are never separately published. A review of 79 National Institute for Health and Care Excellence (NICE) appraisals in 201330 found that 22% were reliant on mapping, either from published sources or mapping estimated specifically for the appraisal.

Mapping is an area in which guidance on some specific areas of good practice has been published, intended to reflect existing research evidence31,32 or provide recommendations on reporting standards. 33

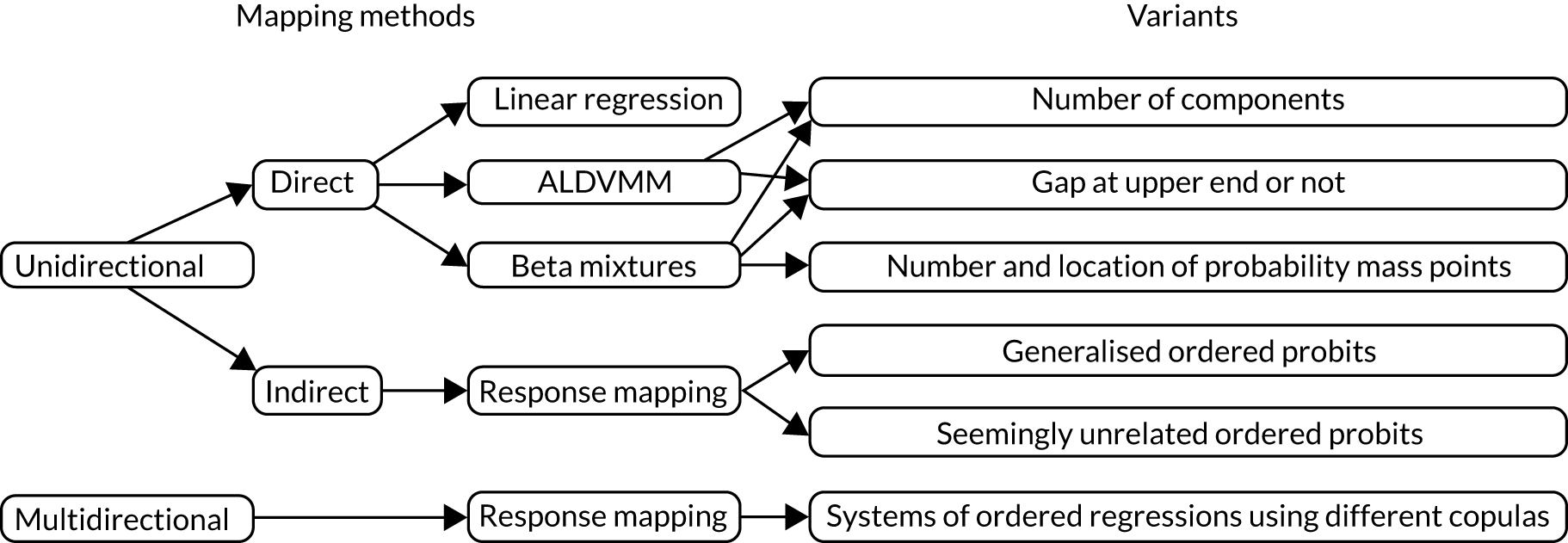

Overview of different mapping approaches

There is a longer history of studies examining alternative statistical models for cost data in the published literature than health utility data. 34,35 Cost data are characterised by non-negative values, heteroscedasticity and high kurtosis because of large proportions of respondents incurring zero costs (the lower limit). Much less attention has been given to the challenges associated with modelling health utility data until more recently. This is surprising for two reasons. First, the challenges associated with utility data are more numerous than those associated with costs. Second, the incremental benefits appear in the denominator of the incremental cost-effectiveness ratio (ICER) and are typically very small, such that apparently trivial changes arising from differences in estimation methods often lead to far from trivial differences in estimates of cost-effectiveness.

In general terms, there are two types of methods for mapping. The first is a one-step process that models the health utility values directly. Although potentially simpler, this means that the resultant mapping model is specific to the value set for which the model was estimated; it cannot be used for other countries where a different value set is relevant. This approach also discards the more detailed information contained in the responses to the individual dimensions. In some cases, this information may be quite useful.

The second set of mapping methods can be labelled as indirect mapping approaches (also referred to as ‘response mapping’), which use a two-step process. In the first step, the discrete responses to the descriptive system of the instrument are modelled. For example, in cases in which the EQ-5D-3L is the target of the mapping model, five (typically independent) discrete data models (such as ordered probits/logits, multinomial logits) are used. The models estimate the probability of the health state of the individual being at levels one, two and three for each dimension in the descriptive system. Once these models have been estimated, they can be used to calculate the expected health utility as a second, separate step, using the estimated distribution of responses together with a value set. Because it is only the second step that is value-set specific, the same first-stage mapping model can be used for any country. However, the indirect approach also needs enough responses at all levels in each dimension, otherwise the mapping model is unable to predict a full conditional probability distribution across all health states. Mappings to PBMs with a larger number of levels in each dimension are more likely to encounter this problem. 36,37

Direct methods

Modelling health utilities directly is not straightforward because such data are characterised by several challenging features. Health utility data are bounded: limited at the top at one (the value of full health) and at the bottom by the value of the worst health state described by the instrument. The location of this lower boundary differs by PBM and by the country-specific value set, but they all must have a lower bound (see Table 1). Most data sets exhibit a significant mass of observations at the upper boundary of one, immediately followed below by a relatively large gap in the distribution before the next feasible utility value. The rest of the distribution is usually characterised by multimodality and/or skewness. The degree to which these features are apparent varies by instrument, disease area and patient sample severity.

Given these distributional features, it is perhaps surprising to find the widespread use of linear regression for mapping. The HERC database29 reports the first identified mapping study as using a linear regression for modelling the relationship between EQ-5D-3L and other disease-specific outcome measures in a sample of patients with rheumatoid arthritis (RA). 38 Linear regressions are the most commonly used direct mapping model, usually estimated using ordinary least squares (OLS), with a smaller number of more recent studies using robust MM estimators (the MM estimators is a class of robust estimators for the linear model introduced by Yohai39). A recent systematic review of mapping studies to PBMs found that linear regression estimated using OLS was still the most common approach, used at least 75% of the time in each of the PBMs covered in the review. 40

Modelling PBMs using a linear regression is problematic because of the features that are typical of health utility data.

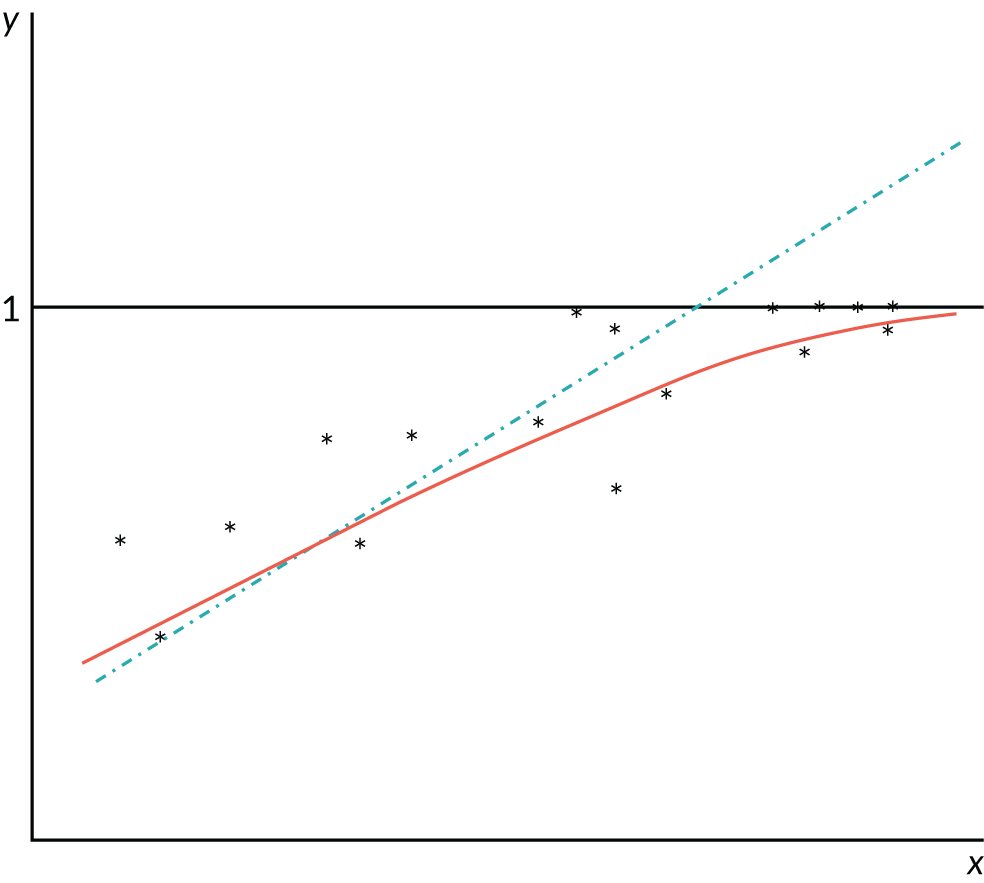

The first issue, which has received surprisingly little attention in this area, relates to the bounded nature of PBMs. Linear regression is built on the assumption that the regression function is linear. In other words, the expected value of health utility, conditional on a predictor variable, looks like a straight line when plotted against the value of the predictor variable. Figure 1 shows why that linearity assumption causes problems for regression prediction. As health utility can never exceed one, its expected value conditional on the predictor x can also never exceed one. It will generally lie below one and approach it as an asymptote as x increases (assuming x and y are positively related). Thus, the true regression relationship (the solid red line in Figure 1) must be non-linear in general. Mistakenly fitting a straight line (the dashed blue line in Figure 1) then tends to overpredict health utility for cases with health utility likely to be at or close to one. Linear regression will also tend to underpredict health utility in cases of very poor health, because the slope of the regression line is too large.

FIGURE 1.

Example of incorrect prediction by misspecified linear regression for health utilities limited at 1.

A possible response to this problem would be to use non-linear regression – in other words, fit a curve like the solid curve (solid red line) in Figure 1. This type of regression is robust in the sense that it gives approximately unbiased estimates even if the usual assumption of normally distributed and constant-variance residuals are invalid. Unbiasedness requires that the non-linear form fitted is linear in parameters; if this is not so, the non-linear regression estimator is consistent rather than unbiased, and gives good results in large samples (subject to mild regularity conditions). However, some of the standard inferences about the model [hypothesis tests, confidence intervals (CIs), etc.] will be invalid unless the constant-variance (homoskedasticity) assumption is correct. Unfortunately, a limited dependent variable model cannot have a constant residual variance: as the regression curve approaches the ceiling of one, the variance must decline towards zero, as illustrated in Figure 1. In any case, even if homoskedasticity were a tenable assumption, standard tests and CI formulas may be poor approximations in small samples, given the non-normal features of skewness, discontinuity and multimodality that characterise health utility data.

Published evidence repeatedly demonstrates the poor performance of the linear regression. Most studies illustrate this with reference to the conditional means and show that the models tend to understimate mean health utility at the upper end of the distribution, where patients are in good health, and overestimate it at the bottom end, where patients are in poorer health as expected given the discussion above. Chapter 3, Model comparisons, illustrates with an example the type of systematic underpredictions and overpredictions that one is likely to see when using linear regressions as opposed to other models that can account for all of the features of the data.

In cost-effectiveness models that assess the value of clinically-effective treatments, the use of these mapping models will bias results. They tend to underestimate the true value of health gain, resulting in lower QALYs and higher cost-effectiveness ratios. Even though the magnitude of the bias seems small, it is large relative to the range of PBMs, and, in economic models, those biases accumulate over the individual’s lifetime. Furthermore, cost-effectiveness ratios are unstable quantities usually portraying large numbers on the numerator divided by very small numbers. Small changes to the denominator typically result in large changes in the cost-effectiveness ratio that have the potential to change reimbursement decisions solely because of the mapping model used. 41 Alternative models have been considered, on the basis that they may be more suitable to some aspects of the utility distibution. For example, tobit, censored least absolute deviation and two-part models had been used in the literature to capture the large proportion of observations at full health.

The use of the tobit model in mapping has been subject to discussion in published literature in relation to its potential appropriateness or otherwise for health utility data. In particular, it has been argued that the tobit model is inappropriate for modelling health utility data because it is designed for the analysis of censored data: health utility data are not censored because values of health > 1 are not possible. 42 This criticism stems from an apparent confusion between two different applications of the tobit model that lead to the same statistical model. The tobit model can arise from true data censoring where the variable of interest is not fully observed. It is in the context of data censoring that the tobit model is most typically used in economics at present. A typical example of this is modelling data on income using data from surveys that, to encourage responses at higher levels of income, use an upper limit above which respondents need to indicate only if their income is above that limit. The data are thus observed to lie within a particular range only, which causes a pile-up of observations in that category. However, the variable of interest is still underlying income. The same tobit model can also arise from a corner solution of choice, as in Tobin’s original article. 43 Tobin developed his original model to deal with variables that are limited, not censored, such as expenditure on durable goods. Expenditure can only be positive or zero, and the variable is referred to as limited at zero. At very disaggregated levels of expenditure or for luxury goods, pile-ups at the ‘corner’ of zero are likely. There is no censoring in this case; the entire range of the variable is observed. In fact, the word ‘censored’ is completely absent from Tobin’s original paper. Health utility data are analogous to this second case. The variable of interest is the actual response variable and the pile-up at one represents a corner solution. This distinction is important for prediction purposes. The linear prediction is appropriate if the pile-up is due to censoring, but the (non-linear) prediction of the response variable should be used in the case of a corner solution.

The range of alternative methods, including the use of the tobit model, have tended to not improve over the poor fit of the linear regression. This is because these methods address only some of the challenging distributional features of health utility data. Only models that are able to address all features are true contenders for a properly specified mapping model. Worryingly, this has led to a widespread claim that mapping is fundamentally unreliable. 44 This belief has been shown to be misguided with the application of more flexible statistical methods in many studies. Austin and Escobar45 proposed the use of finite mixture models to estimate PBMs and applied it to the HUI3. Mixtures of normal distributions are very flexible and can approximate functional forms that are difficult to model using a single distribution. Their use is often linked to the presence of multimodality, but they can also approximate unimodal, highly skewed or kurtotic distributions. Austin and Escobar45 use a degenerate normal distribution with a mean of one and a very small standard deviation to account for the mass of observations at one. Mixtures of normal distributions have been used to model other PBMs, such as the EQ-5D-3L,46–49 SF-6D46 and HUI3. 48 Hernández Alava et al. 50 introduced a finite mixture model specifically developed to deal with the idiosyncrasies of EQ-5D-3L data. The model is based on underlying distributions analogous to the tobit model (corner solution), extended to allow for the gap between one and the immediately previous value encountered in health utility data. The model has been applied successfully to different disease areas51–54 as well as different PBMs. 37

Simultaneously, a separate strand of the methodological literature introduced the use of beta distributions. Beta distributions are very useful for modelling health utilities data because they are bounded and are able to accommodate a number of different shapes. Similarly, fractional response models are very useful in modelling bounded data, but, unlike models based on beta distributions, provide only estimates of the conditional means, not the conditional distribution of utilities. Basu and Manca55 proposed the use of two-part beta regressions to account for bounded data, skewness and the spike of observations at the value of full health. Some studies have suggested that beta regression is not appropriate in cases where the PBM displays negative values56 and, in some cases, researchers have converted all negative values to zero ad hoc purely for convenience,57,58 inadvertently creating a potential problem given the sensitivity of beta regressions to observations at the boundaries. Standard transformations exist and are regularly used with beta regressions in other applications. 59 The beta regression approach has been extended to the use of mixtures. 47 The development of methods based on beta mixtures is described further in Chapter 3, Flexible modelling methods for mapping, Direct methods: the beta mixture model.

Indirect methods

Fewer applications of indirect methods have been documented, which may be because of the requirement for data that spans all response categories. The first reported indirect mapping model we are aware of comprised a set of independent multinomial logit models. 60 Gray et al. 61 also estimated a set of independent logit models and coined the term ‘response mapping’, which has been adopted more widely. This approach reflects the two-stage data generating process of health state data: it separates the modelling of the responses that individuals give using the descriptive system of a quality of life instrument from the valuations of those health states.

Response mapping methods have been developed to use models that reflect the ordered nature of the response data using ordered probits/logits and generalised ordered probits. 51,53,62 Others relax the independence assumption through the use of multivariate ordered probits. 63 The multivariate model was shown to outperform competing independent dimensions models and set the ground for future avenues of research in the indirect mapping literature. However, estimating models involving high-dimensional ordinal variables remains an onerous task. The development of methods based on response mapping is described in Chapter 3, Flexible modelling methods for mapping, Indirect methods: systems of ordinal regressions using copulas.

Chapter 3 Development of methods for mapping

The characteristics of PBM data (bounded, with large numbers of observations at one boundary; non-standard distributions with gaps) as well as the small number of covariates often available for statistical modelling mean that flexible models are often required to avoid biases in the parameter estimates. This chapter summarises some of the methodological developments achieved during this project, concentrating specifically on the development of appropriate models for mapping and examining issues around model comparisons. Additional methodological developments are left to Chapter 5, in which a number of issues not directly related to model development are presented.

Flexible modelling methods for mapping describes two new flexible statistical models. Although they have been developed specifically for mapping, they can be applied outside this area in cases in which flexible models are needed. Predictions: mean versus distribution clarifies concepts often confused in the mapping literature and that have sometimes been used to dismiss mapping as a useful approach. These concepts are not exclusive to mapping; they apply equally to any statistical model. Model comparisons presents some graphical approaches to comparing mapping models to aid model selection. The advantage of these graphical approaches is that they have been designed with awareness of the role of mapping as an input into cost-effectiveness analyses.

Flexible modelling methods for mapping

Two mapping models, one based on the direct approach and one based on the indirect approach, were developed during this project to provide researchers with alternative flexible models when mapping. The direct model is based on finite mixture distributions that have already been shown to be successful in this area. It is similar to the adjusted limited dependent variable mixture model (ALDVMM),50 a model developed specifically for mapping that replaces the underlying normal distributions with beta distributions. One potential advantage of the beta distribution over the normal distribution is that its range is limited and can, therefore, easily accommodate variables such as PBMs, which have natural boundaries, although problems arise when there are large numbers of observations at those boundaries. A second potential advantage relates to the variety of shapes that it can generate with a single distribution, in contrast to the bell shape of the normal distribution. The model is briefly presented in Direct methods: the beta mixture model.

In most cases, mapping is unidirectional; we are interested in converting one measure to another, for example a disease-specific measure to a PBM, but not the other way around. The indirect model presented in this chapter (see Indirect methods: systems of ordinal regressions using copulas) has been specifically developed for cases in which the mapping model might need to be used multidirectionally. It was designed specifically for the case of mapping between two similar PBMs, EQ-5D-3L and EQ-5D-5L, but the general statistical model can be applied to other measures and extended to more than two PBMs. It is a multiequation model of ordinal regressions estimated jointly using copulas to capture the dependencies between the EQ-5D-3L and EQ-5D-5L dimensions. It also incorporates finite mixtures to allow for non-normality of the errors. The model is more challenging to estimate than unidirectional models and this complexity is not necessary for more standard uses of mapping.

Direct methods: the beta mixture model

The beta mixture model is described fully in a paper describing ,64 a Stata® versions 14 and 15 (StataCorp LP, College Station, TX, USA) community-contributed command developed to facilitate estimation of this model; its first application in the mapping literature can be found in Gray et al. 37 The following sections give some important background information about mixture models before summarising the beta mixture model.

Mixture models: background

Finite mixture models provide a very flexible statistical framework. Instead of assuming that a single distribution is enough to model a dependent variable, a number of individual component distributions are used. These distributions are mixed according to a probability structure. 65 Mixture models arise naturally when there is discrete heterogeneity in the population because the different mixture components (also known as classes) can be used to represent the different groups in the population. Although finite mixtures tend to be introduced in this context, they have another important use. Because any continuous distribution can be approximated by a finite mixture of normal densities, mixture models provide a convenient semiparametric framework to model distributional shapes that cannot be easily accommodated by standard distributions. They are parametric because each of the component distributions have a parametric form but have nonparametric features as the number of individual components is allowed to increase. Therefore, they possess a lot of the flexibility associated with nonparametric approaches as well as maintaining some of the benefits of parametric models. This flexibility is the key to their usefulness for direct modelling approaches in the mapping literature.

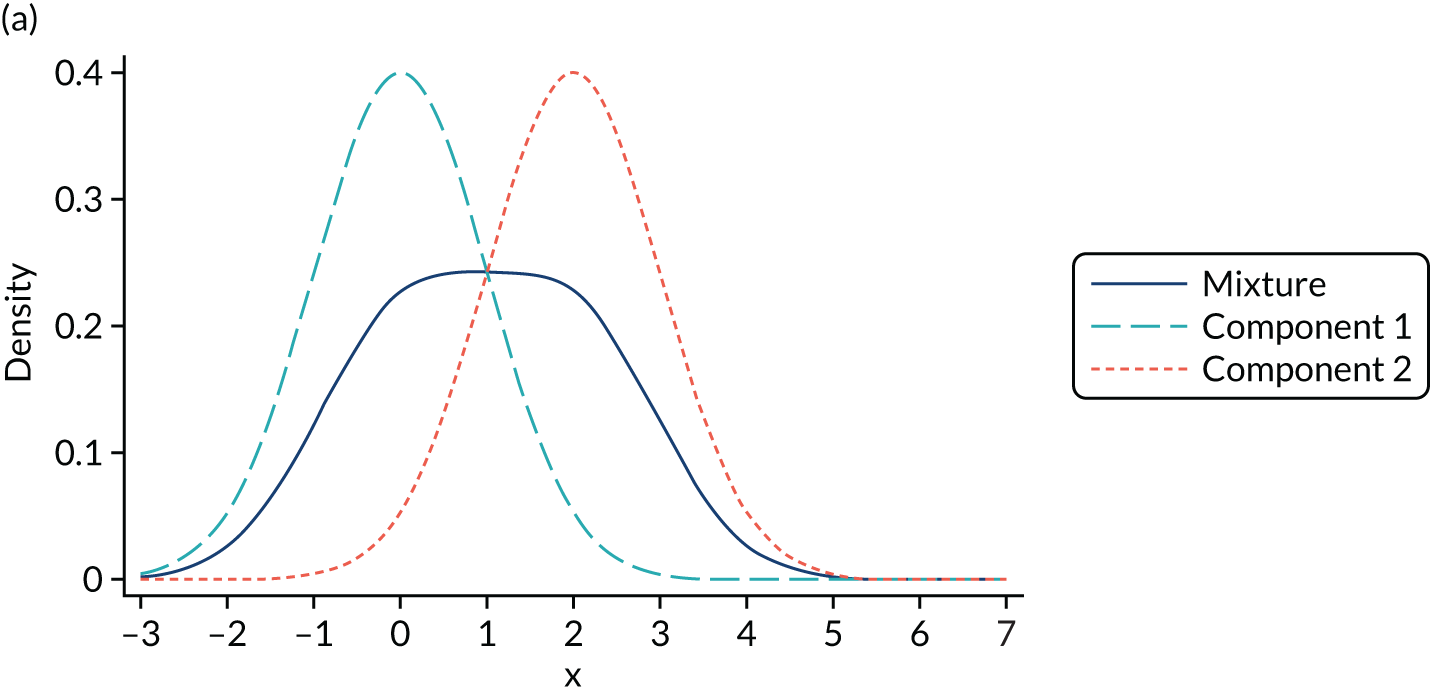

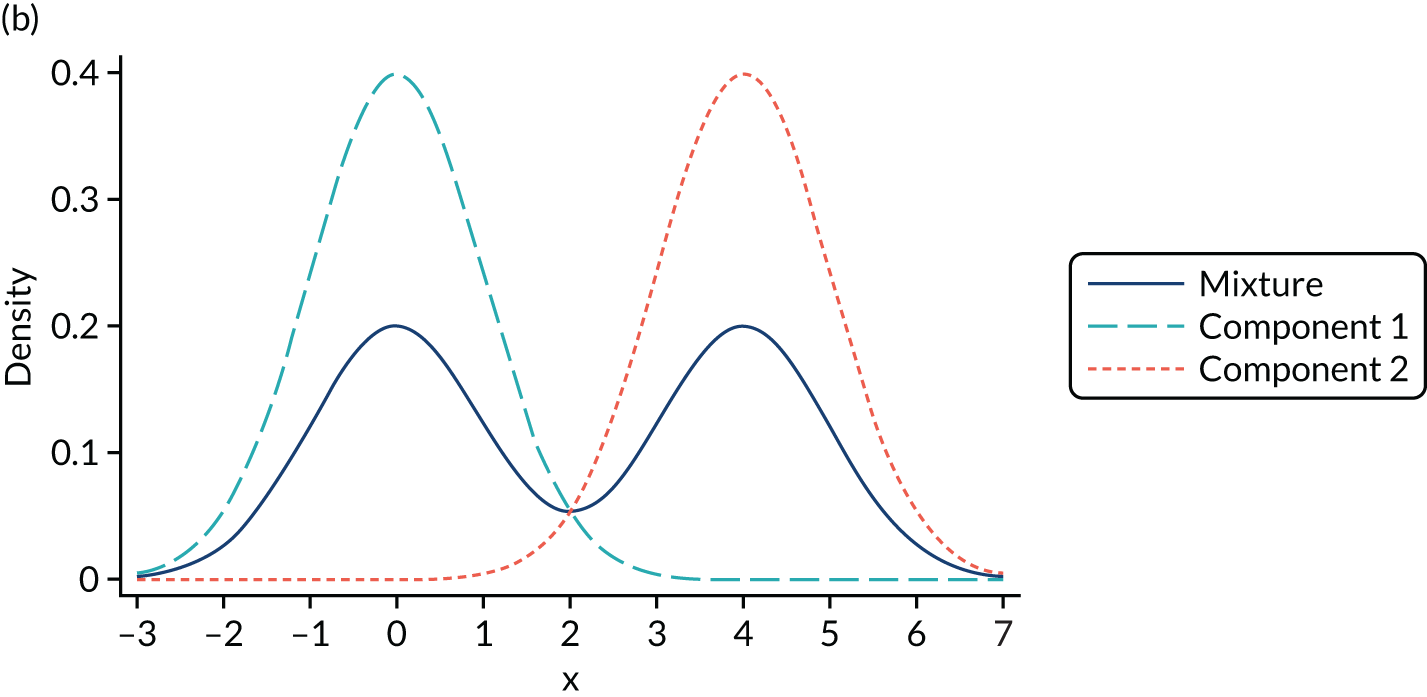

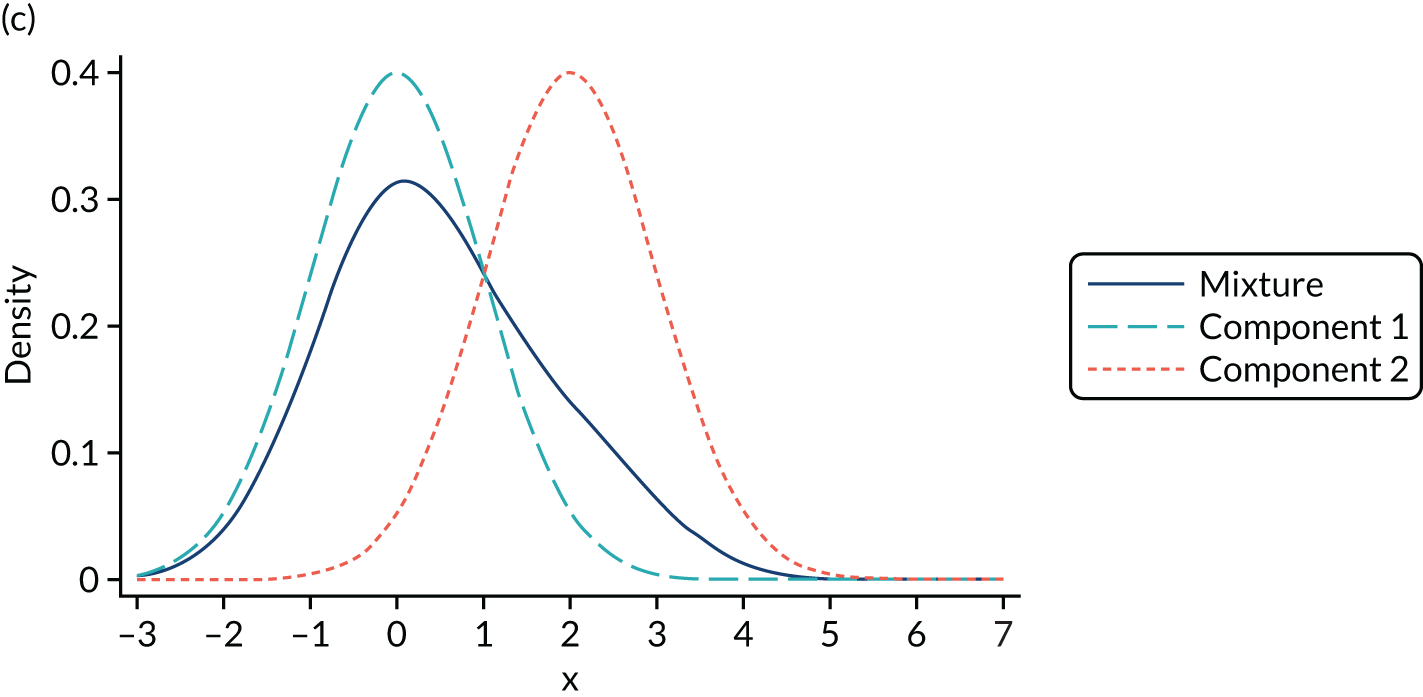

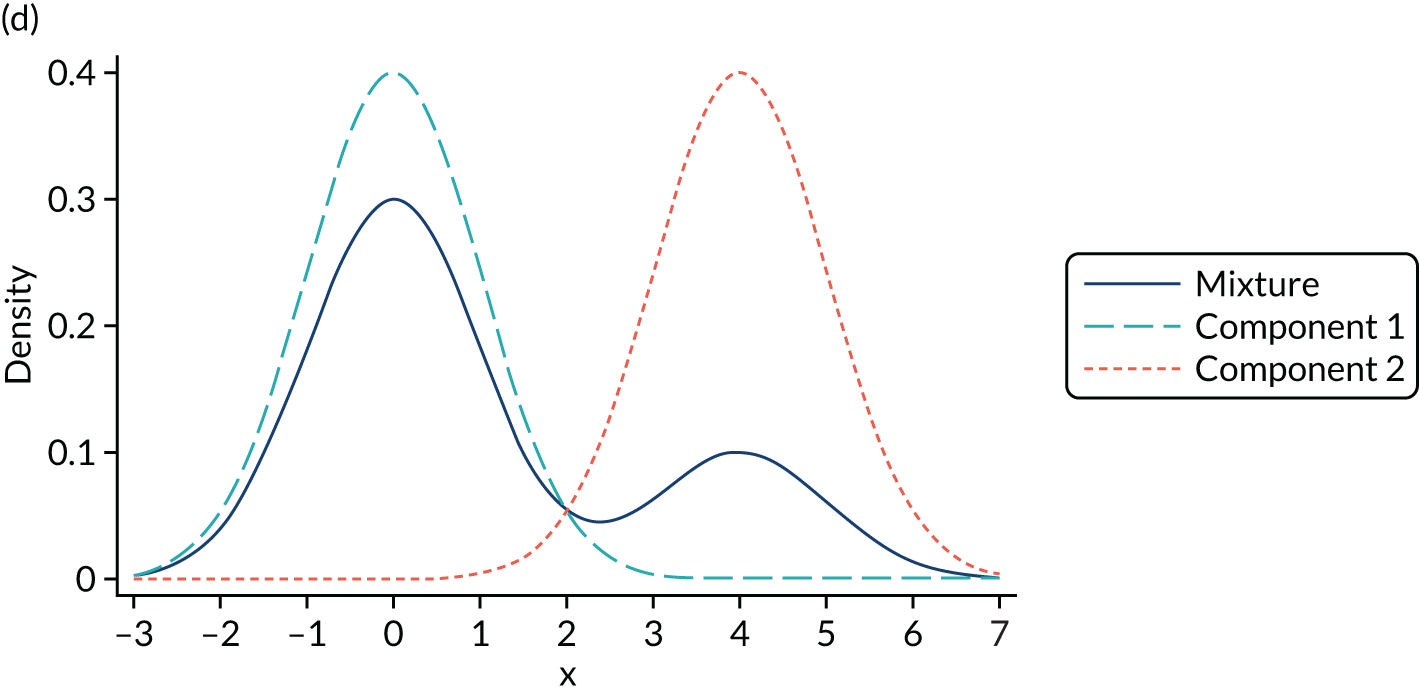

Mixture distributions are often used when distributions are multimodal as a way of capturing this feature, but it is important to understand that they can also approximate other features such as skewness, kurtosis and heteroscedasticity. Figure 2 illustrates the different shapes a mixture can take using a simple example of only two equal variance components. Figure 2a shows two normal components of variance equal to one. The first component has a mean of zero, the second component is located towards its right with a mean of two and they are mixed with equal probabilities. This produces a wide distribution with a flat top. Figure 2b differs in that the second component is now moved further to the right, having a mean of four. By allowing for a relatively larger separation between the components, a bimodal distribution is produced. Figures 2c and 2d depict the same two distributions used in Figures 2a and 2b respectively, but now the components are mixed with different probabilities. Component one has a probability of 0.75 and the second component a probability of 0.25. The wide and top flat distribution of Figure 2a turns into a skewed distribution in Figure 2c, whereas the bimodal distribution in Figure 2b is still bimodal but the modes have a different height in Figure 2d. An even larger number of shapes can be generated by also allowing the variances of the distributions to differ and by increasing the number of components.

FIGURE 2.

Examples of mixtures of two normal components with equal variance (σ2 = 1) and mean of the first component (µ1 = 0). (a) Mean µ2 = 2, mixing probability π = 0.5; (b) mean µ2 = 4, mixing probability π = 0.5; (c) mean µ2 = 2, mixing probabilities π1 = 0.75 and π2 = 0.25; and (d) mean µ2 = 4, mixing probabilities π1 = 0.75 and π2 = 0.25.

It is important to stress that the presence of two modes in a distribution does not imply that exactly two components are needed. We modelled conditional distributions, and some bimodality of the conditional distribution may be accommodated by the conditioning variables. In the case of mapping, the number of conditioning variables is typically small and, in our experience, not large enough to accommodate the extreme bimodality arising from the value set present in, for example, EQ-5D-3L UK utilities. In addition, it might be the case that more than two components are needed to accommodate a bimodal distribution if, for example, the two modes are also skewed. In such circumstances, we might need two or more components to accommodate this shape per mode in the distribution, which implies that four or more components are needed to model the distribution successfully.

It is also important to note that a mixture model is not the same as a piecewise model. The latter has also been used in mapping and splits the dependent variable in fixed ad hoc intervals and then assumes a different model distribution in each part. 49

The flexibility of mixture distributions comes at the cost of more involved estimation. The analyst needs to make judgements about (1) where to include the conditioning variables and (2) the number of components. Conditioning variables might affect the mean of each component or the probability of component membership or both. In addition, choosing the appropriate number of components involves estimating models with increasingly larger numbers of components until an acceptable model has been found. Thus, a considerable number of possible models need to be thoroughly investigated. There are practical challenges associated with fitting mixture models estimated using maximum likelihood. One of the problems relates to the presence of several local maxima in the likelihood function. Convergence to a solution does not imply that the consistent solution has been found because the optimisation algorithm could have converged to a local maximum. To identify the global maximum one can use a global optimisation algorithm such as simulated annealing. However, global optimisation algorithms are slow in comparison. Alternatively, the model can be estimated using a large number of random starting values and selecting the model with the largest likelihood. Both methods have advantages and disadvantages. A second problem arises when estimating mixture models of normal distributions with different component variances. In such circumstances, the likelihood becomes unbounded as the variance of one of the components tends to zero. As Aitkin points out, this is not a ‘real’ problem;66 it arises because the normal distribution cannot represent the likelihood when the variance tends to zero. Essentially, the component becomes a probability mass but the likelihood contribution of the density of that component becomes infinite. Provided that certain regularity conditions are met, the consistent solution will correspond to a local maximiser. 65

The first mixture model developed specifically to deal with the idiosyncrasies of PBMs was the ALDVMM. 50 The model was based on mixtures of densities similar to those underlying the tobit model but allowing for a gap between one (full health) and the next value in the PBM. It was originally developed with the characteristics of the EQ-5D-3L UK value set in mind. Here, we have generalised the ALDVMM to accommodate the boundaries of any PBMs. The model is also able to reflect the gap between full health and the next feasible health state, according to the characteristics of whichever PBM or value set is of interest, and has the additional option of not reflecting any gap at all but treating the distribution as continuous between the upper and lower bounds. All of these developments are encompassed in the freely available Stata command ALDVMM. 67

The beta mixture model

The development of the beta mixture model followed from, on the one hand, the proven advantages of the ALDVMM in modelling EQ-5D-3L and, on the other hand, the development in a different strand of the literature of models based on the beta distribution. 55 The beta distribution is very convenient when it comes to modelling PBMs; it is a bounded distribution, as are all PBMs, and can accommodate a number of different shapes: by varying its mean and precision parameter, the distribution can be symmetric or asymmetric and bell-, J-, or U-shaped. Although the beta distribution is bounded in the interval (0,1), a standard transformation can be used to change its support to any finite interval such as those covered by different PBMs. The disadvantage of the beta distribution is that the boundaries are outside its region of support. In some cases, a small amount of noise is added to the observations on the boundary to pull them inside the support region. This solution can work but only as long as the number of observations on the boundaries is relatively small. It has been shown that the beta distribution is very sensitive to large numbers of observations at the boundaries59 and the solution above might severely distort the distribution. In those cases, one can mechanically add mass points at the boundaries, but the problem then lies in the interpretation of these probability masses. In some cases it might be easy to justify the presence of a separate mass point on the boundary, but in other cases it is mere statistical convenience.

Although the beta distribution can take many shapes, bimodality is one characteristic that it cannot reproduce. It follows that augmenting the model with mixtures provides additional flexibility as well as the ability to cope with multimodality. In the area of mapping, mixtures of beta regressions have been used to model EQ-5D-3L. 47 We modify the standard mixture model to account for the gap between full health and the next feasible values as well as allowing for different approaches to model the observations on the boundaries. Details of the model are presented in full in Gray et al. 64 The model structure is presented here briefly.

It is assumed that utility for individual i, yi, is defined at full health (1) and in the interval [τ, b], with τ denoting the highest utility value below full health and τ > b. The conditional density of yi can be written as:

where x1i, x2i, x3i are vectors of covariates affecting the mean of each component, the probabilities of component membership and the probability of a boundary value, respectively. The probabilities P(yi|xi3) are defined using the following multinomial logit model:

where γk are the vectors of corresponding coefficients. The probability density function h(.) is a mixture of C-component beta distributions:

with

and the probability of latent class membership defined by a multinomial logit model with coefficients δc:

The likelihood of this particular beta mixture model is given by:

Compared with the ALDVMM, this model generates observations at full health and those below using two different processes (two-part model). The bounded nature of the data are handled naturally by a bounded distribution. These boundaries are b, the lowest value for the PBM, and τ, the highest utility value below full health, because the mass of observations at one is being handled by the other part of the model. However, values on the boundary create a problem for this distribution, which cannot handle them without further adjustment. Small numbers of observations at the boundaries (the lowest health state value and the value of the health state immediately below full health) can usually be handled by adding a small amount of noise to those observations so that they fall just inside the boundaries. However, a substantial amount of observations at the boundaries needs to be handled by adding a mass point at either or both of the boundaries to avoid distortion. Although this adaptation makes the approach appropriate for the features of the typical health utility distribution, it will increase the number of model parameters. By contrast, ad hoc mass points are not necessary when using the ALDVMM because observations can be generated at those boundary values.

Indirect methods: systems of ordinal regressions using copulas

As research and our understanding of PBMs progresses, it is inevitable that new versions of PBMs are developed. Concerns regarding the lack of sensitivity of the three-level version of the EQ-5D and the usual pile-ups of observations at full health owing to its coarse structure led to the development of a new five-level version: the EQ-5D-5L. The model introduced here was developed specifically for the purpose of testing the consistency between the responses to the EQ-5D-3L and EQ-5D-5L and to assess the likely impacts for economic evaluation of moving from the original three-level version to the newer five-level version. The NICE methods guide that was published in 201315 suggests that either version of the EQ-5D could be used in appraisal submissions once a valuation set for the EQ-5D-5L became available. However, the consequences of using different versions of the EQ-5D could not be explored until a preliminary version of the EQ-5D-5L valuation was published in 2016,68 with a final version published in 2018. 6

The standard mapping case needs to be performed in only one direction, mapping from some set of clinical or other non-PBMs to the PBM of interest. In this case, to examine the similarities and differences between the different versions of the EQ-5D, mapping needs to be bidirectional, permitting the analyst to map from the EQ-5D-3L to EQ-5D-5L and vice versa in a consistent way. This consistency cannot be achieved by estimating two separate models, one for each direction, because this ignores the relationships between the parameters in both models.

The aim is to test the hypothesis that the three-level and five-level versions of the EQ-5D instrument are mutually consistent descriptors of health states and, consequently, can be used interchangeably. The model needs to be as flexible as possible to avoid imposing unnecessary restrictions that could lead to inconsistent estimates. Several important features are introduced that build on more basic indirect mapping models described in Chapter 2, Overview of different mapping approaches, Indirect methods. First, the model needs to map from the EQ-5D-3L to EQ-5D-5L and vice versa in a consistent way. For this reason, we developed a joint model of the responses to 10 ordinal regressions (five for each dimension of each EQ-5D version). Second, we allowed the structural parts of the model to differ between the EQ-5D-3L to EQ-5D-5L so that we can test the assumption that EQ-5D-3L and EQ-5D-5L share the same underlying concept but that the five-level version involves more detailed categorisation. Third, the model captures the strong association between the same dimensions in the three-level and five-level versions using a copula representation,69 thus allowing different strengths of association across the health distribution and different patterns of association across the different dimensions of the EQ-5D. Fourth, the assumption of normality underlying the ordinal equations is generalised using two component normal mixtures (see Mixture models: background) to avoid misspecification of the distribution of the errors, which could lead to inconsistent estimates. Fifth, a random latent factor affecting all responses is introduced to reflect individual-specific effects that will manifest as dependence across all dimensions in both EQ-5D versions.

A full technical account of the model can be found in Hernández-Alava and Pudney70 and in the associated Stata command, ,71 which calculates predictions based on several estimated mapping models. The command can predict from the EQ-5D-3L to EQ-5D-5L and vice versa using either the individual-level responses or a mean utility value. The model is briefly summarised below.

The model is a system of 10 latent regressions arranged in five groups, one for each EQ-5D dimension d as follows:

where X is a matrix of covariates, β3d and β5d are column vector of coefficients and U3d and U5d are unobserved residuals, as detailed below. The observed variables Y3d and Y5d are generated by the following threshold-crossing condition:

where Qk = 3 or 5 is the number of EQ-5D levels and Γkqd are threshold parameters with Γk1d = –∞ and Γk(Qk + 1)d = +∞. The residual for individual, Ukid, is decomposed into an individual effect, Vi, which induces correlations across the responses of an individual and a specific residual, εkid, correlated within dimensions but not between:

where ψkd is a set of 10 different parameters. We make the usual assumptions that, conditional on X, Vi is independent of all εkid and all εkid are mutually independent, with the exception that within each dimension d, ε3id and ε5id can be dependent. To allow for departures from normality, Vi and all εkids are assumed to have a two-component finite mixture of normal distributions. The mixture for the errors εkid is written as follows:

where π is the mixing parameter; the location (µ1, µ2) and dispersion (σ1, σ2) parameters are constrained to satisfy the usual mean and variance normalisations, which in the case of the mixture above are:

and:

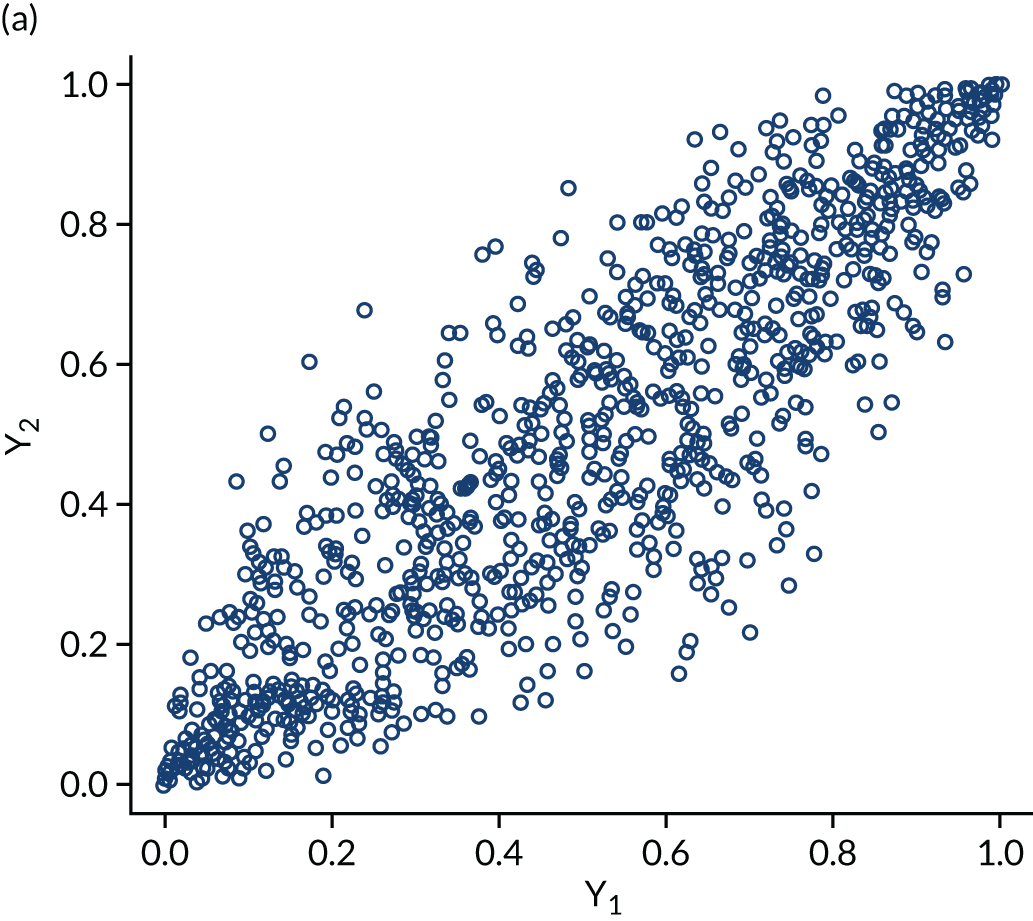

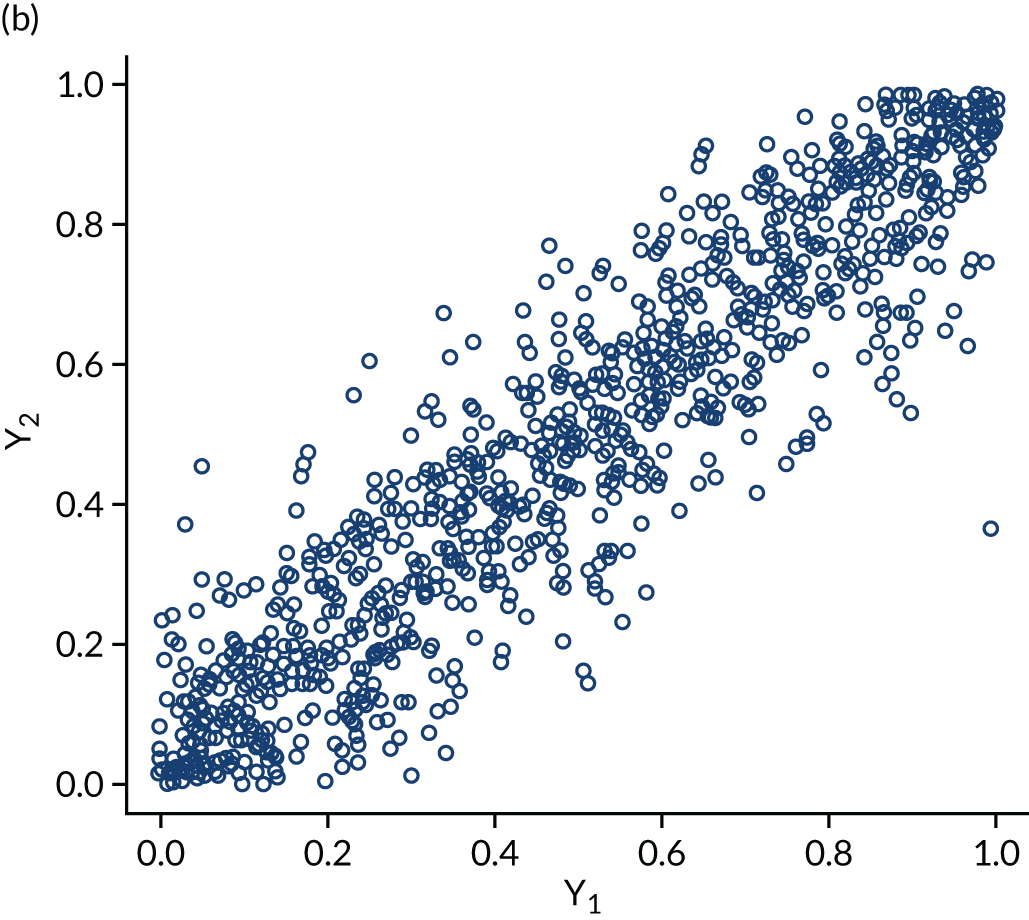

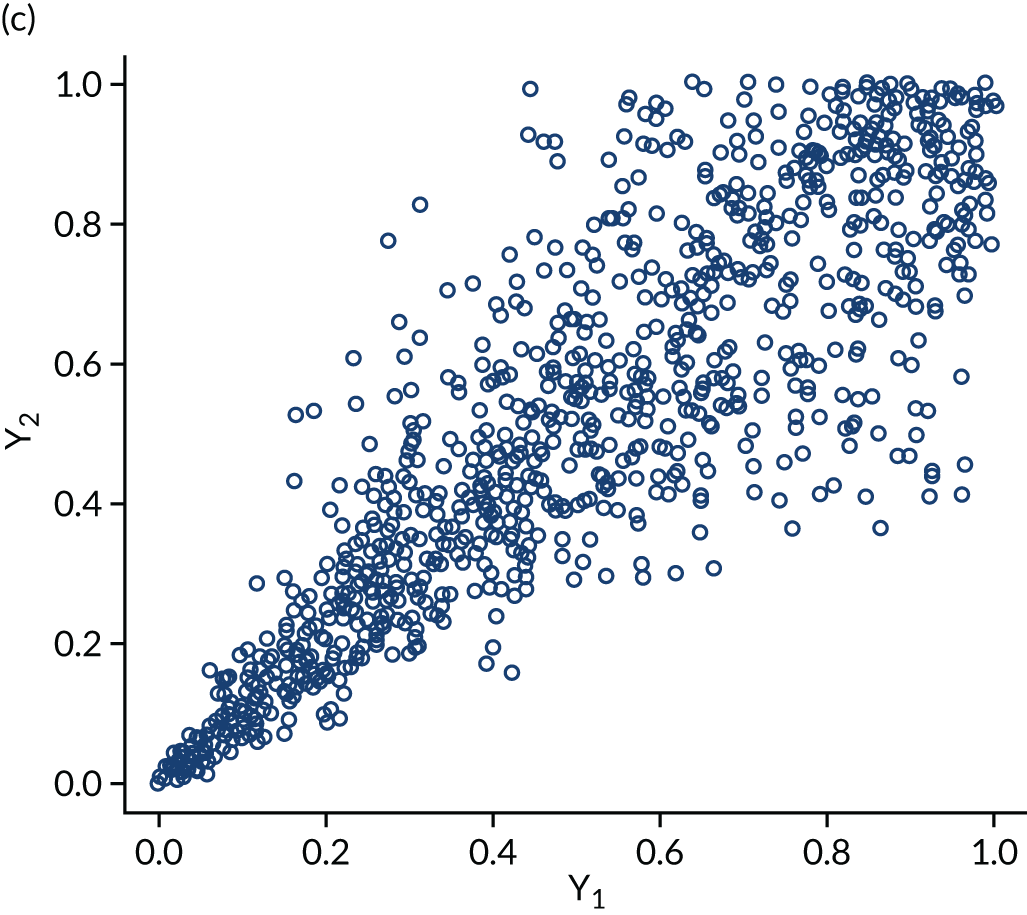

The within-domain dependency between ε3id and ε5id is captured using a copula specification66 to derive the joint distribution from the marginal distributions assumed. Copulas are very useful not only because they can be used to derive difficult joint distributions from marginals but also because they can represent a number of different dependence structures. The copulas we considered in the empirical application are independent, Clayton, Gumbel, Frank and Joe. Figure 3 shows scatterplots of samples generated by Monte Carlo simulation from three bivariate copulas, Gaussian, Frank and Clayton, all specified with a common Kendall’s τ ≈ 0.7 to illustrate the copulas’ dependence patterns. The Gaussian and the Frank copulas can exhibit positive and negative dependence, and the pattern of dependence is symmetric in both tails. However, compared with the Gaussian copula, the Frank copula exhibits weaker dependence in the tails, and dependence is strongest in the middle of the distribution. This is clearly seen in Figure 3, in which the points on the tails of the Gaussian copula are closer together than those on the tails of the Frank copula. However, in the middle of the distribution the points are closer together in the Frank copula than in the Gaussian. By contrast, the Clayton, Gumbel and Joe copulas do not allow for negative dependence, and dependence in the tails is asymmetric. The Clayton copula exhibits strong left-tail dependence (see Figure 3c, in which the points on the left-hand side are close together) and relatively weak right-tail dependence. Thus, if two variables are strongly correlated at low values but not so correlated at high values, then the Clayton copula is a good choice. The Gumbel and Joe copulas display the opposite pattern, with weak left-tail dependence and strong right-tail dependence. The right-tail dependence is stronger in the Joe copula than in the Gumbel, and thus the Joe copula is closer to the opposite of the Clayton copula.

FIGURE 3.

Scatterplots of pseudorandom samples drawn from three different bivariate copulas. (a) Gaussian copula; (b) Frank copula; and (c) Clayton copula.

An additional Stata command, ,72 developed during this project, allows analysts to estimate a simplified version of the model of the five bivariate ordinal regressions for each dimension separately. This version of the model may be used in cases where the full multivariate model is not needed; it still preserves the flexibility of the copulas for joining a pair of dimensions but does not allow for correlations across dimensions.

Predictions: mean versus distribution

Little attention has been paid to how to interpret, assess and use the results from mapping studies in economic evaluations. In particular, there has been confusion about methods for the reflection of uncertainty and variability from mapping studies.

Some authors73–77 have stated that mapping underestimates uncertainty because the sample variance of the data is always larger than the variance of the in-sample predictions. When using the expected value to predict, the sample variance of the predictions will always be smaller than the variance of the sample data because the mean predictor can only predict the variation in the utilities owing to the observed covariates. If the economic evaluation requires only the mean utility, using the mean predictor presents no problems because QALYs are a linear function of the profile of utilities over time. If, for some reason, an estimator of the variance of utilities is needed, a consistent estimator can be obtained by calculating the variance of the predictive distribution of the utilities as long as the mapping model is correctly specified; the variance of the predictions is not an appropriate estimator and should not be used. 51,70

The following sections illustrate these points using a simple case study, with data drawn from patients with RA. In brief, the data are drawn from FORWARD (the National Databank for Rheumatic Diseases)78 and have been the subject of a detailed mapping study in which the data were fully described. 51 A brief description can be found in Chapter 4, Case study data set: FORWARD. Purely for the sake of simplicity, we will consider a linear regression model that estimates health utilities using the EQ-5D-3L UK tariff as a function of the Health Assessment Questionnaire (HAQ), which is a commonly used measure of functional disability ranging from 0 (no functional disability) to 3 (maximum functional disability). In practice, there are reasons why a linear regression is unlikely to be appropriate in this situation (see Chapter 2, Overview of different mapping approaches, Direct methods), and additional covariates should be used. 50,51 Everything illustrated here applies equally to other model types, irrespective of the number of covariates and the health utility values being used as the dependent variable.

Predictions from mapping models

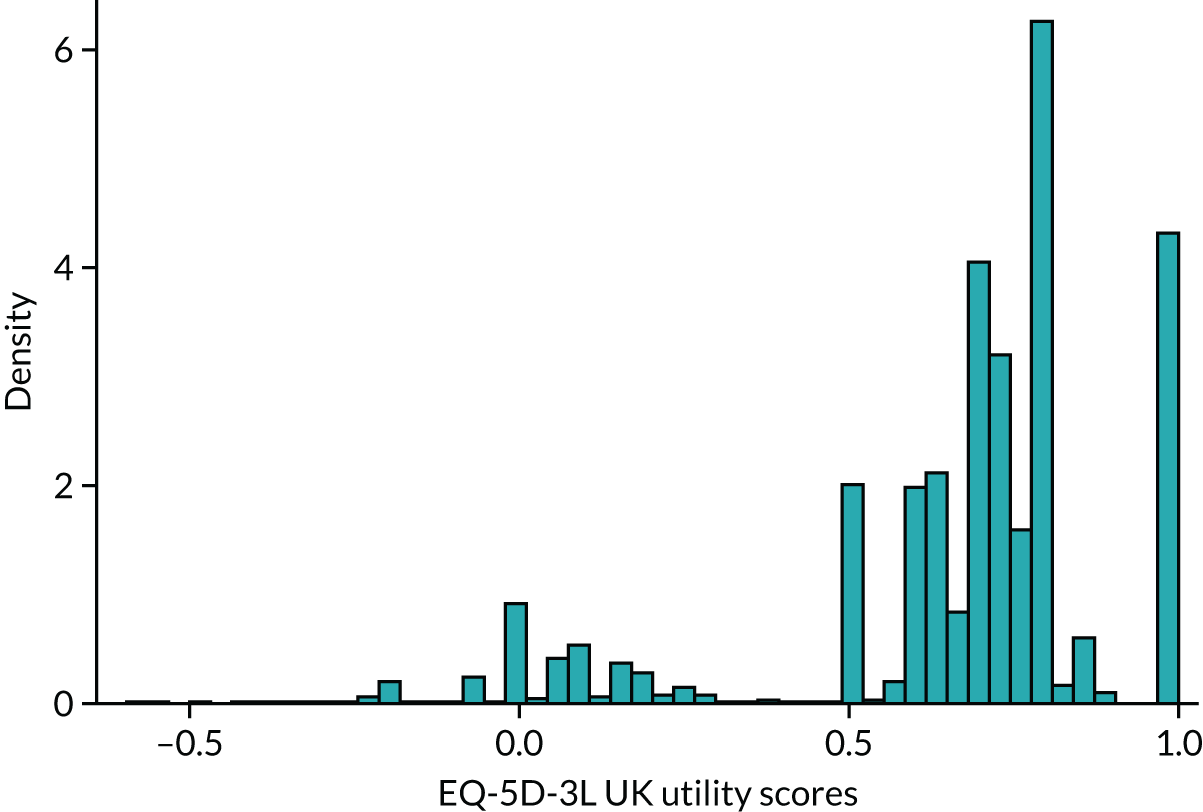

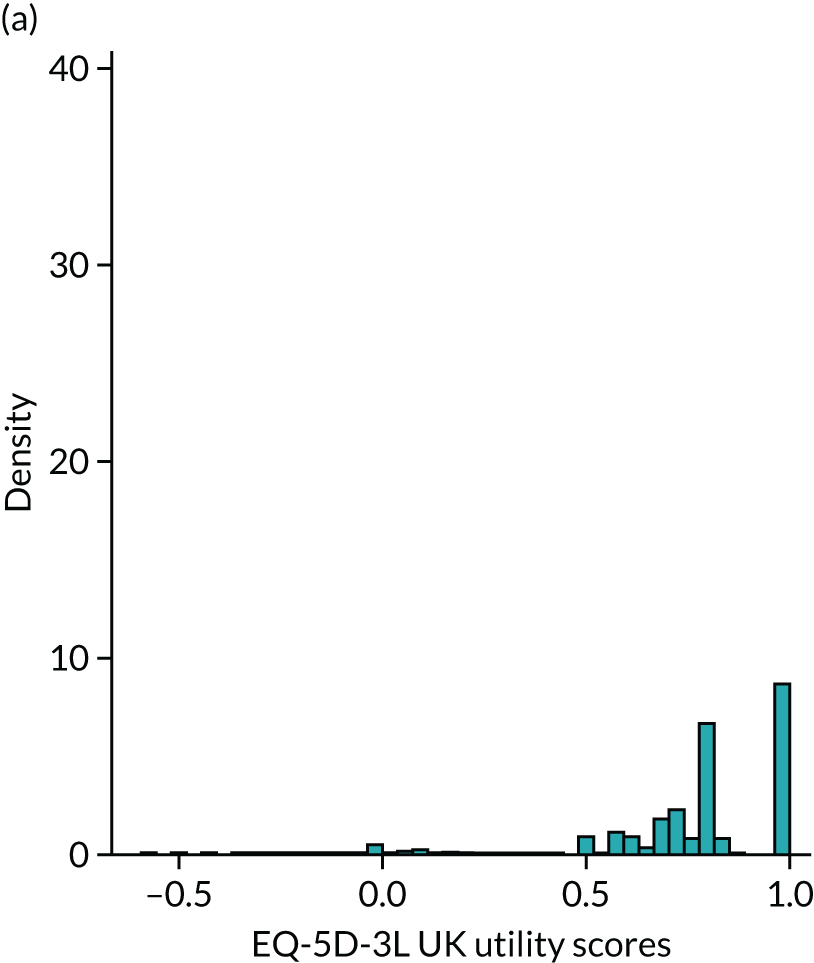

Figure 4 provides a plot of the distribution of EQ-5D-3L (UK valuation) scores from all 100,398 observations in the sample that have both EQ-5D-3L and HAQ data. It shows that the health utilities for individual patients in the sample span the whole feasible range of the EQ-5D-3L: from –0.594 (the worst heath state described by the EQ-5D-3L) to 1 (full health). The HAQ scores for these patients also vary: all the way from the least degree of functional disability (0) to the maximum (3). It is also the case that in the sample there are patients with the same level of HAQ but different levels of EQ-5D-3L.

FIGURE 4.

Distribution of EQ-5D-3L UK valuation in FORWARD (n = 100,398).

If we model the EQ-5D-3L using a simple linear regression with the HAQ as the sole explanatory variable then we are assuming that, at the population level, there is a relationship between the EQ-5D-3L and HAQ (the population regression line):

where the εi are independent and identically distributed error terms assumed (for simplicity) N(0,σ2) for individual i. This model implies that, conditional on the HAQ, the EQ-5D-3L has a normal distribution with mean:

and variance:

This means that one can never predict EQ-5D-3L values perfectly for every individual in the sample using the mean predictor because, even if α and β were known with complete certainty, for each and every value of the HAQ there is an entire distribution of values of the EQ-5D-3L in the population. In the statistical model this is reflected by the presence of the latent variable ε, which, being random itself, imparts randomness to the EQ-5D-3L. The latent variable ε exists at the population level. It does not disappear as the sample tends to the population. In other words, the model reflects the fact that individuals are different from each other. Not every person with the same HAQ value has the same EQ-5D-3L value in the data or in the population and the statistical model does not assume that they do. Indeed, individual-level data exhibit a much greater range of variation compared with aggregate-level data. This is because there are many factors unique to individuals, such as their own tastes and preferences, which cannot be observed. These factors are therefore included in ε, typically making σ2 a large component of the variation in the dependent variable. This is one of the reasons why summary measures of fit based on differences between the data and the predictions (an estimate of ε for the individual), such as R2, mean absolute error (MAE) and root mean squared error (RMSE), are quite insensitive to model specification changes (see Model comparisons).

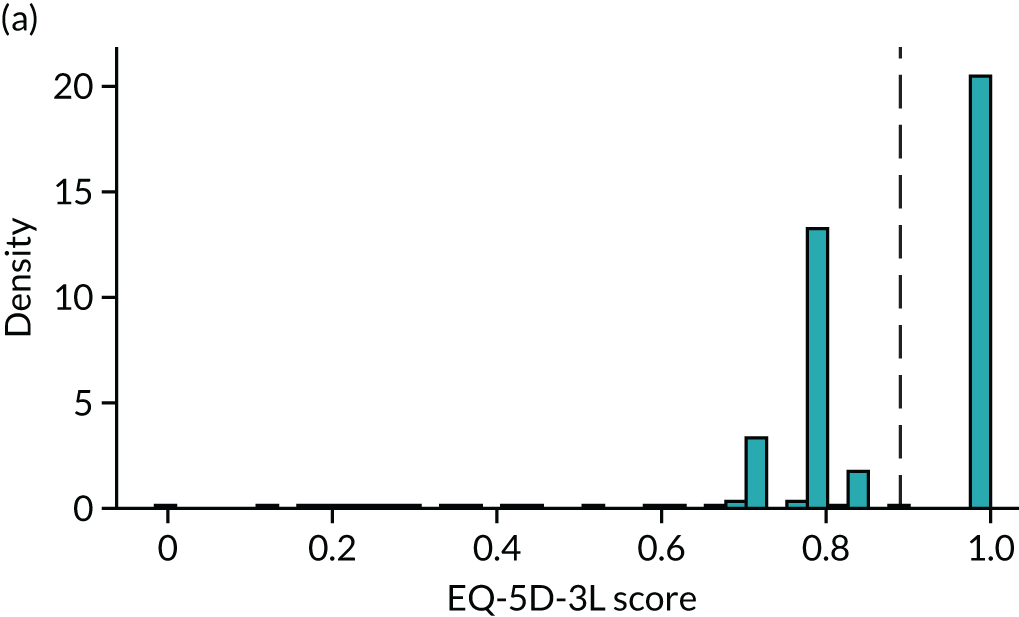

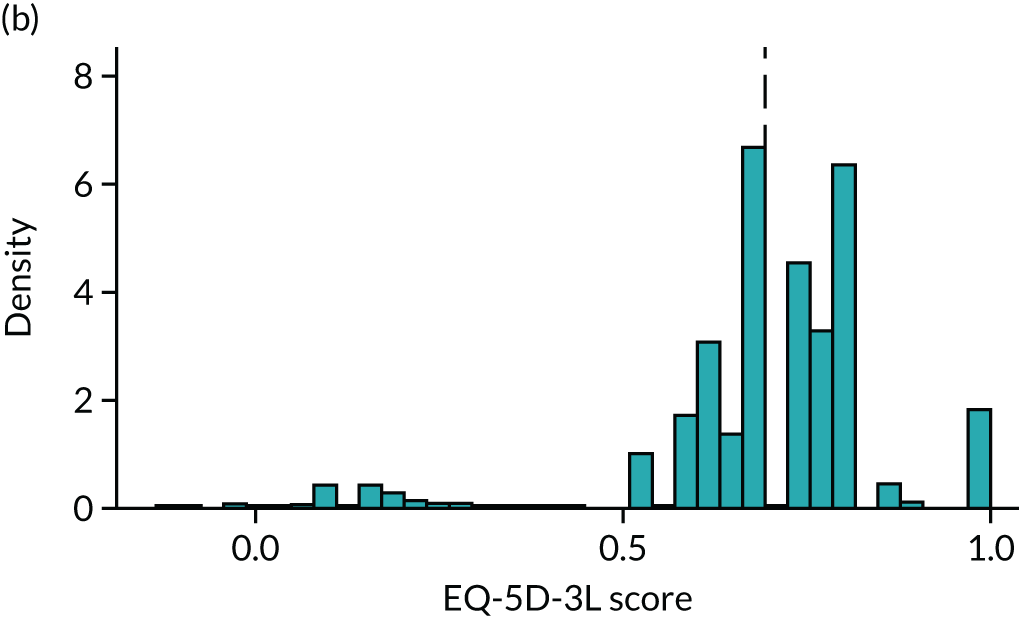

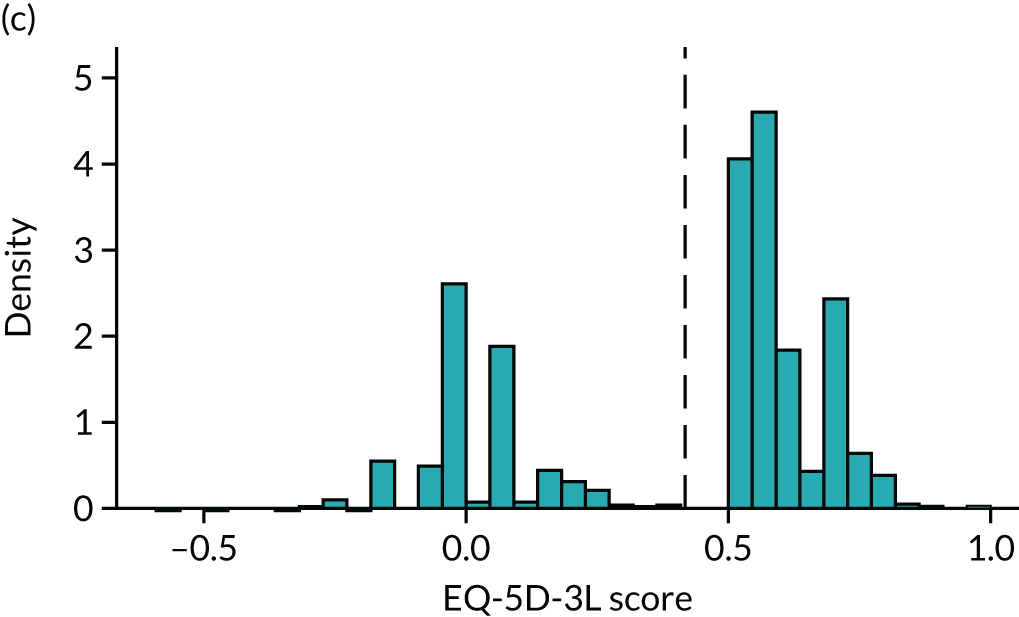

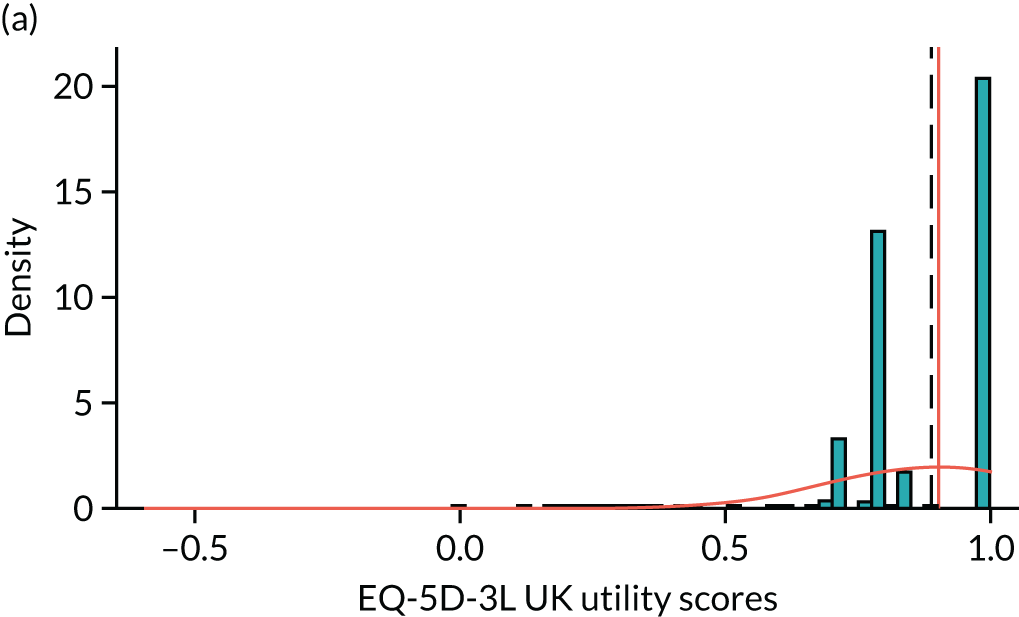

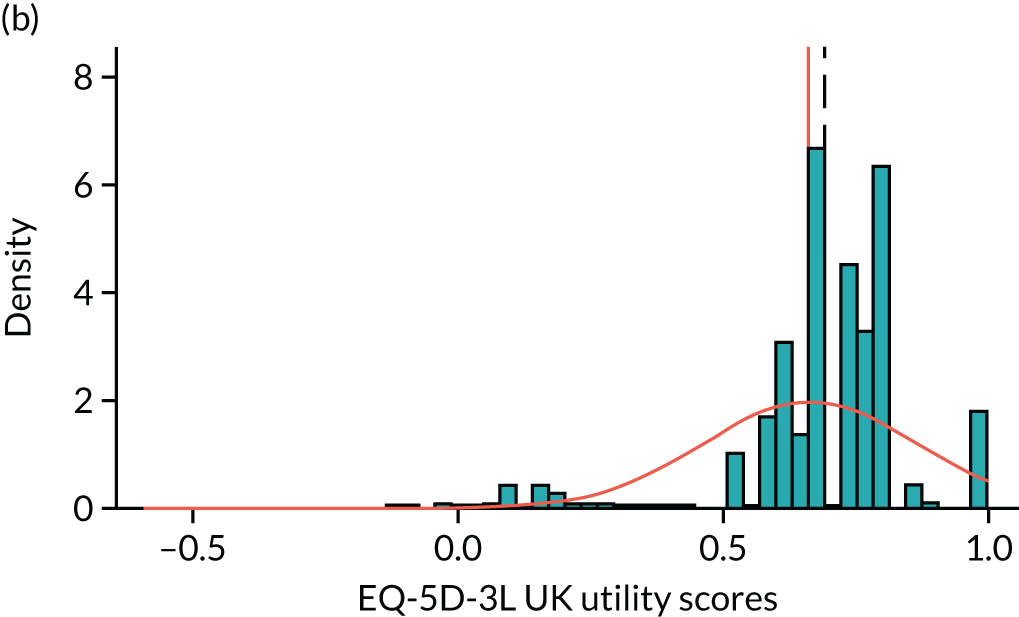

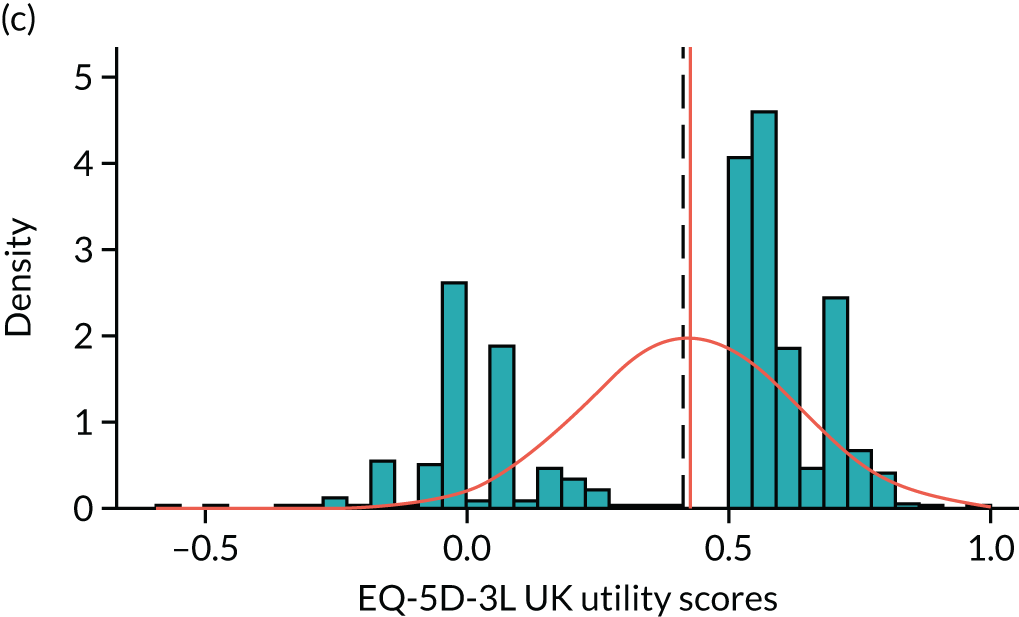

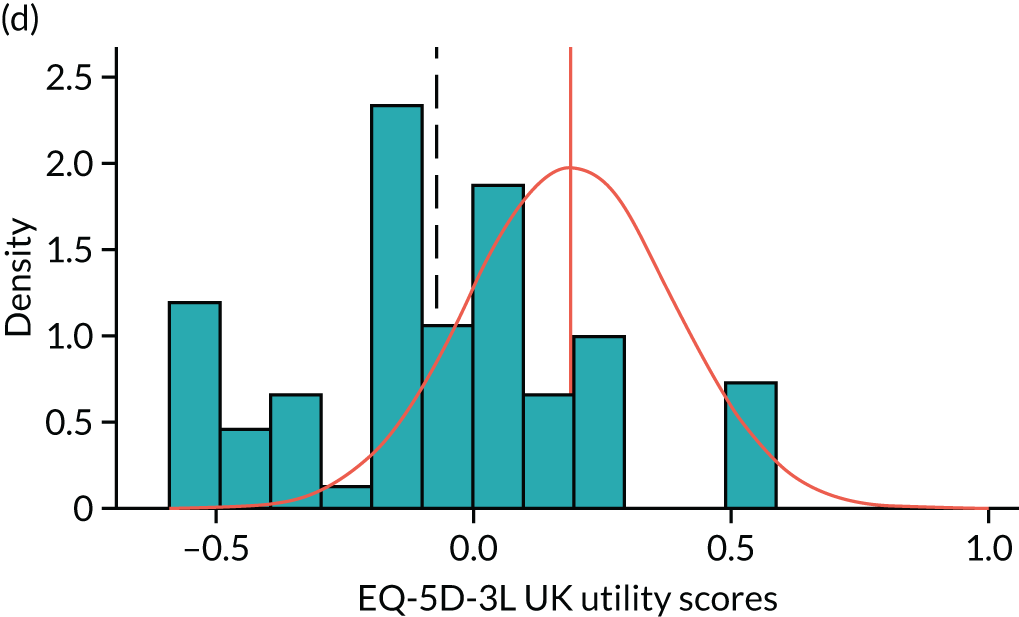

To expand on this issue: Figure 5 takes four example HAQ values (0, 1, 2 and 3) and separately plots the distribution of EQ-5D-3L health utility values for all the patients in the sample data that have these HAQ scores.

FIGURE 5.

Distribution of EQ-5D-3L scores at four different values of HAQ (FORWARD). (a) HAQ = 0; (b) HAQ = 1; (c) HAQ = 2; and (d) HAQ = 3. Vertical dashed lines indicate the mean health utility of those four sample groups (conditional distributions).

Suppose we want to use our statistical model to predict EQ-5D-3L scores conditional on HAQ scores, as might typically be the case for a cost-effectiveness model that had health states defined by these HAQ scores. If α^, β^ and σ^2 denote the estimated parameters from the linear regression above, then we can find our estimates of Equations 14 and 15 using:

and σ^2, respectively. Equation 16 is our prediction, that is, our best guess of what the mean of the conditional distribution (see Equation 14) is. These predictions must by definition be equal for every individual with the same covariate(s): they yield the expected EQ-5D-3L value conditional on HAQ value. Our prediction differs from the actual EQ-5D-3L value for any individual for two reasons. First, Equations 14 and 16 will differ because of sampling error (assuming that the functional form is right). α^ and β^ are estimated parameters associated with uncertainty because they are drawn from a finite sample of patients. The degree of that uncertainty will reduce with larger samples. Second, for each HAQ level, EQ-5D-3L differs from its mean by εi owing to variability between individuals in the population. This variability is not reduced by increasing the sample size.

To this point, it would seem reasonable that we would want a mapping model to be able to predict accurately, that is, we want the predictions from the model (the expected value conditional on covariates) to be close to the sample means for patients with the same covariates, or at least to assess the reasons why they are not close. What is not reasonable is to compare the distribution of the predicted values with the distribution of the original data. Individual patients’ utility values exhibit marked variability, even after conditioning on covariates, as illustrated in Figure 5. Predicted values from a mapping regression model simply give the average values for a set of covariates, stripping out the variability.

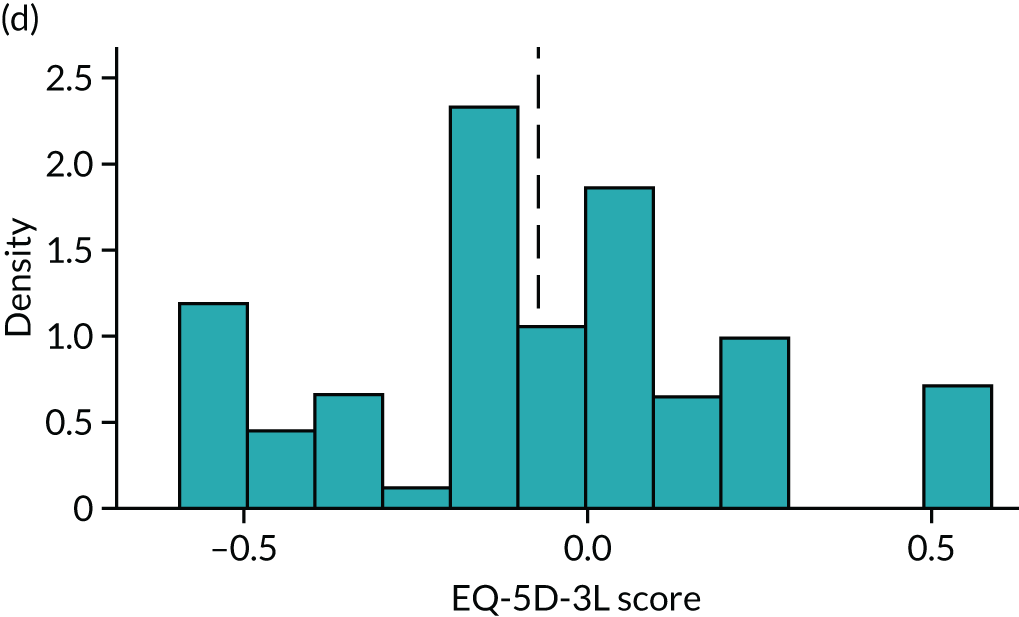

Figure 6 illustrates this for the HAQ example. Figure 6a reproduces the original data from those patients in the sample that had a HAQ of either 0, 1, 2 or 3. Figure 6b is the plot of the four predicted values conditional on HAQ. These distributions are very different, as they should be. The fitted values are conditional means, and therefore fit only four EQ-5D-3L values to each of the four HAQ values. The raw data include patient-level variability that the predictions do not. Note, for example, that no prediction yields a EQ-5D-3L value of 1 (full health) whereas in the original data there is a mass of observations at 1, typical of the EQ-5D-3L. Even for the group of patients who indicated that they have no functional disability (HAQ = 0), their mean EQ-5D-3L utility value is not 1. A utility value of 1 (full health) could be the mean for any set of patients with the same HAQ score only if every single one of them had that EQ-5D-3L value of 1.

FIGURE 6.

Comparison of (a) the sample distribution and (b) the distribution of predictions for the FORWARD data with HAQ values of 0, 1, 2 and 3.

It would be valid, and indeed an important assessment of fit, to compare the predictions and the means in the data (see the vertical dashed lines in Figure 5). In this example these predictions are 0.902, 0.664, 0.426 and 0.189 for HAQ values of 0, 1, 2 and 3, respectively. The sample means for these same groups are 0.890, 0.692, 0.414 and –0.073, respectively: the point estimate of the mean EQ-5D-3L for patients with a very high HAQ (extreme functional disability) is higher than the sample average for that group by a substantial degree.

It is not valid to compare the distribution of the data to the distribution of the predictions. As discussed in Predictions: mean versus distribution, it has often been claimed that mapping underestimates uncertainty, meaning that the variance of the predictions is smaller than the variance of the sample data or that the range of the predictions is smaller than the range of the data. Figure 6 shows that not only is the shape of the distribution different between the predicted values and the sample data but the variance is indeed also substantially lower in the former, as it should be. In fact, in this case, it is approximately half that of the original data. But these two measures of ‘variance’ refer to two different concepts and are not comparable. In fact, the variance of EQ-5D-3L in the sample is made up of two components: one relates to the variability explained by the HAQ (i.e. the predictions) and the other relates to the variability of ε (i.e. σ2). Thus, the variance of the original data is always higher than the variance of the predictions.

Table 2 further illustrates this point with some examples from mappings that we have conducted. The examples cover a range of models, PBMs and sample sizes. In all cases, the difference between the variance of the sample data and the estimated variance from the model is negligible as expected. Of course, the variance from the predicted values, which does not reflect the patient level variability, is substantially lower.

| Case study | Data | Linear | Mixture | Response | |

|---|---|---|---|---|---|

| ALDVMM | Beta | ||||

| Rheumatoid arthritis: EQ-5D-3L51 | |||||

| Sample/estimated variance | 0.070 | 0.068 | 0.066 | 0.065 | |

| Variance of the predictions | 0.036 | 0.038 | 0.038 | ||

| Ankylosing spondylitis: EQ-5D-3L53 | |||||

| Sample/estimated variance | 0.098 | 0.088 | 0.097 | 0.091 | |

| Variance of the predictions | 0.043 | 0.053 | 0.055 | ||

| Heart disease: EQ-5D-5La | |||||

| Sample/estimated variance | 0.043 | 0.043 | 0.043 | ||

| Variance of the predictions | 0.026 | 0.026 | |||

| Heart disease: SF-6Da | |||||

| Sample/estimated variance | 0.018 | 0.017 | 0.018 | ||

| Variance of the predictions | 0.012 | 0.012 | |||

| Varicose veins: EQ-5D-3La | |||||

| Sample/estimated varianceb | 0.053 | 0.053 | 0.053 | ||

| Variance of the predictionsb | 0.010 | 0.009 | |||

| Sample/estimated variancec | 0.048 | 0.051 | 0.051 | ||

| Variance of the predictionsc | 0.013 | 0.012 | |||

In the case of mapping for CEA, good practice requires a reflection of the uncertainty around the predicted values. The information with which to do this is contained in the variances (and covariances) of the estimated regression coefficients, which are α and β in the linear regression HAQ example. For non-linear models such as the ALDVMM, the covariance matrix of all the estimated parameters is needed. This includes all the regression coefficients as well as all the variances of the error terms because predictions for a non-linear model depend on all the model parameters. Repeated sampling of the coefficients, drawing on the variance–covariance matrix, and using them in recalculating the predictions generates the conditional distribution of mean EQ-5D-3L. This is a standard method used to capture parameter uncertainty using probabilistic sensitivity analysis. 79

Using mapping models to simulate data

The use of predicted values from mapping, with appropriate assessment of uncertainty, is clearly important for economic evaluation. A standard cohort-based decision model would require exactly these inputs. However, in some situations the cost-effectiveness analyst’s requirements will extend further. Often there will be a need to not only estimate the mean health utility value for a health state defined by the covariates in the mapping model but also impute actual data at the patient level. This would be the case where an individual patient level simulation model is being used. Indeed, often this type of model is used precisely because of the need to reflect patient variability to obtain an unbiased estimate of cost-effectiveness. 80 Alternatively, where a CEA is being undertaken alongside a clinical trial that has not collected health utility information, mapping is used to impute missing data for each patient and, as for all types of missing data, the analyst may not wish to simply impute the conditional mean value.

We described in Predictions: mean versus distribution, Predictions from mapping models, how the error term, ε, for the linear regression allows the statistical model to reflect variability at the individual level. The distribution of EQ-5D-3L conditional on HAQ will be normally distributed with mean equal to the prediction (α^+β^HAQi) and variance σ^2. So we can simulate data from the regression outputs using these assumptions about the error term to reflect the estimated degree of variability.

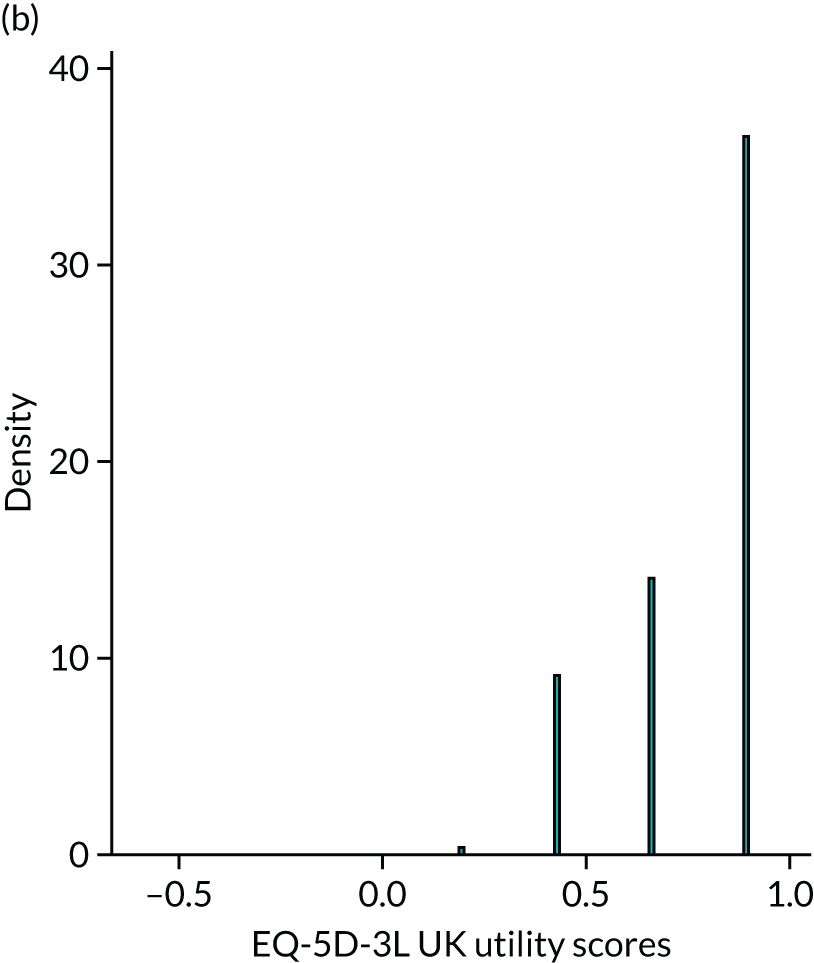

Figure 7 shows these predicted conditional distributions for our example HAQ values of 0, 1, 2 and 3 and superimposes them on the corresponding conditional distributions from the original data (as in Figure 5). For our linear regression model we can see that the mean from the simulated values is close to the sample means for each HAQ group, except those with a HAQ value of 3. The shape of that distribution does not match very closely to the original data and it is not constrained to the feasible range of the EQ-5D-3L. These features are important for assessing the credibility and suitability for potential uses of a mapping model in subsequent cost-effectiveness analyses. Reporting of results in this way has helped to demonstrate the performance of other model types previously. 50,51,62

FIGURE 7.

Distributions of EQ-5D-3L by HAQ group: sample vs model. (a) HAQ = 0; (b) HAQ = 1; (c) HAQ = 2; and (d) HAQ = 3. The dashed line represents the sample mean; the red line represents the mean prediction based on the model.

Model comparisons

The primary purpose of mapping models is to serve as an input to cost-effectiveness analyses. Therefore, any model comparisons need to take into account the possible ways in which a mapping model could be used in this context.

By far the most widely used criteria to select the appropriate model in the existing mapping literature have been measures of goodness of fit of the model such as R2/R¯2, and measures of predictive accuracy such as mean error (ME), MAE and RMSE. There are several problems when using these measures as model selection criteria in mapping. Mapping models are estimated using individual-level data and, typically, relatively few covariates of potential relevance are available in the mapping data set and/or have any realistic prospect of being used in the CEA. At the individual level there is substantial variation and factors which are typically unobserved might determine the response variables. These unobserved factors are often relatively large and, consequently, the data tend to be quite noisy, affecting measures of goodness of fit (R2/R¯2), which will tend to be relatively low compared with studies using more aggregated data (see Predictions: mean versus distribution). Furthermore, the scale of the dependent variable, health state utility, is small. Using the UK/England valuation sets, the length of the range intervals for the EQ-5D-3L, EQ-5D-5L, SF-6D and HUI3 is 1.594, 1.285, 0.699 and 1.359, respectively (see Table 1). The small range of values coupled with the relatively large contribution of unobserved factors results in relatively insensitive measures of predictive accuracy (MAE and RMSE). Often, differences between alternative mapping models will be detected only at the third or fourth decimal place of these measures. Without recognising the constrained scale of health utility, it may be tempting to conclude that model differences are slight and unimportant. However, even small differences can have serious consequences for the CEA because the incremental benefit of an intervention appears in the denominator of the ICER. Incremental benefits are typically quite small, so the ICER is very sensitive to very small differences between mapping functions. Furthermore, MAE and RMSE are aggregate measures that might conceal systematic patterns in the predictions. Systematic biases in the conditional means of the models may be a sign of model misspecification.

Some studies report the ME. Where this is close to zero, as will be the case for a linear regression estimated using OLS, this is used as support for the credibility of the mapping model despite the concerns about its suitability raised in Chapter 2, Overview of different mapping approaches, Direct methods. It is important to note that estimators such as OLS, which minimise the residual sum of squares, essentially ensure that, as long as there is a constant term in the linear regression, the mean of the predictions equals the mean of the dependent variable in the sample. Thus, the ME will be effectively zero with slight departures owing to the numerical precision used in the calculation. In fact, one could estimate a linear regression model without any covariates and find that just the inclusion of a constant will yield a ME of approximately zero. This illustrates how this criterion for model selection is not fit for the purpose of selecting a mapping model.

More recently, information criteria such as the Akaike information criterion (AIC) and the Bayesian information criterion (BIC) have also been used. One complication of these information criteria, which also applies to measures of goodness of fit such as such as R2/R¯2, is that not all models that can be used for mapping can be compared on this basis because models must have the same dependent variable. In particular, direct and indirect approaches model different dependent variables and therefore their AIC and BIC cannot be compared directly. Models based on the beta distribution need rescaling of the dependent variable to the range of the health state value set, invalidating comparisons of AIC and BIC even with other models that use direct approaches.

Another important issue is that often these criteria select different models. MAE and RMSE are based on different scoring functions that are computed at the individual level and then averaged to give a summary measure of predictive accuracy. MAE and RMSE are similar in that all individual error measures are positive, unlike ME, in which positive and negative errors can cancel each other out. RMSE gives a higher weight to large errors owing to the squared terms and thus is sensitive to large outliers. Some researchers prefer MAE whereas other researchers prefer RMSE because large errors are seen as undesirable. In our case, this is not straightforward. Because mapping feeds into a cost-effectiveness model, what is important will depend on the specific cost-effectiveness application. If the feature that matters most for the specific CEA is not having any large errors, RMSE might be more useful. However, in other cases MAE might be more appropriate to select the model. Therefore, without knowledge of the specific CEA and the consequences of large versus small errors in utility estimation, it is not possible to be prescriptive about which measure of predictive accuracy is better in various scenarios. It is important, however, that as much information as possible is provided in an accessible way so that the economic analysts using the results of the mapping can make a judgement about its suitability for each specific application.

Both AIC and BIC may also lead to different models being selected. Both models are based on penalised likelihoods but the penalty imposed for model complexity by BIC is higher than that for AIC; thus, BIC will tend to choose models with less parameters than AIC. This issue always arises when estimating mixture models with different numbers of components. Both AIC and BIC first decrease and later increase with the number of components as the increasing number of parameters starts to outweigh the benefits in terms of increased likelihood. The inflexion point tends to occur at a lower number of components for BIC than for AIC owing to its higher penalty.

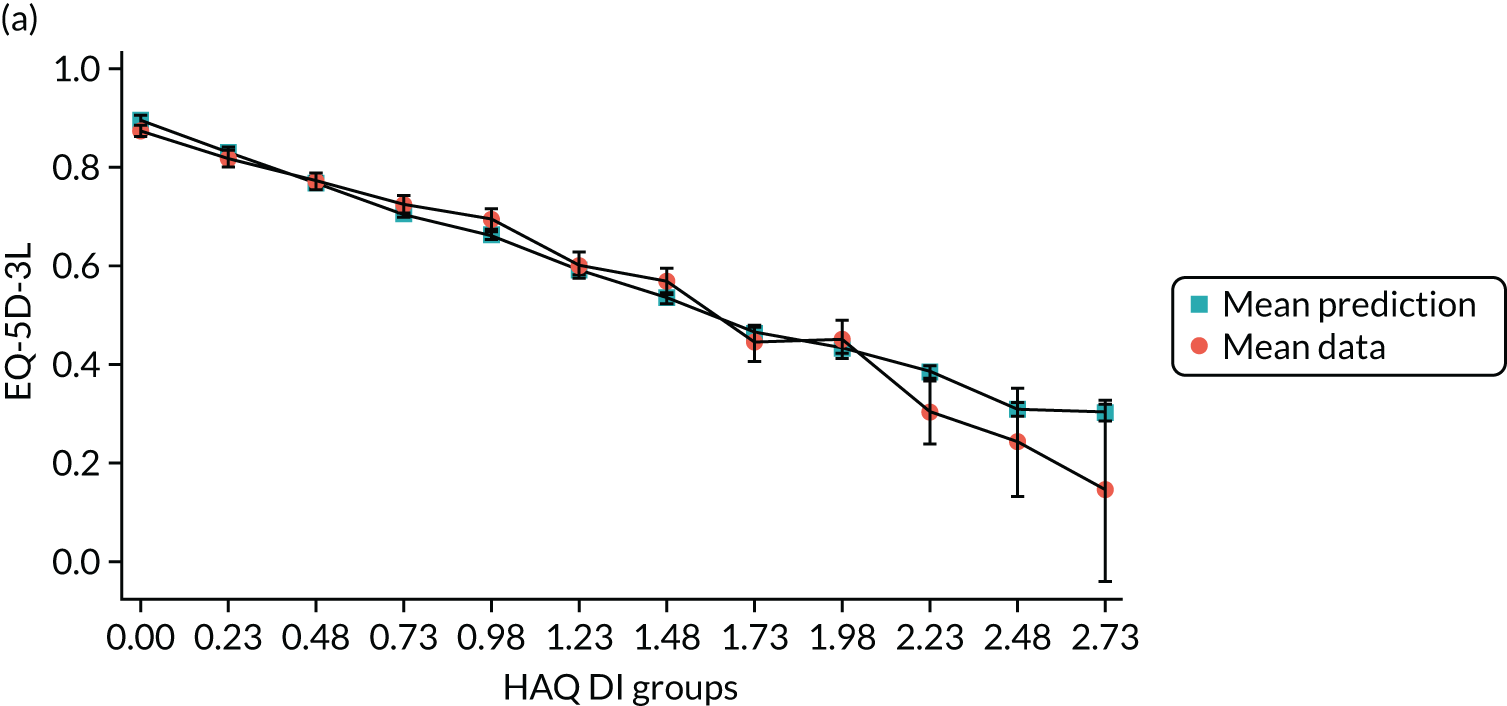

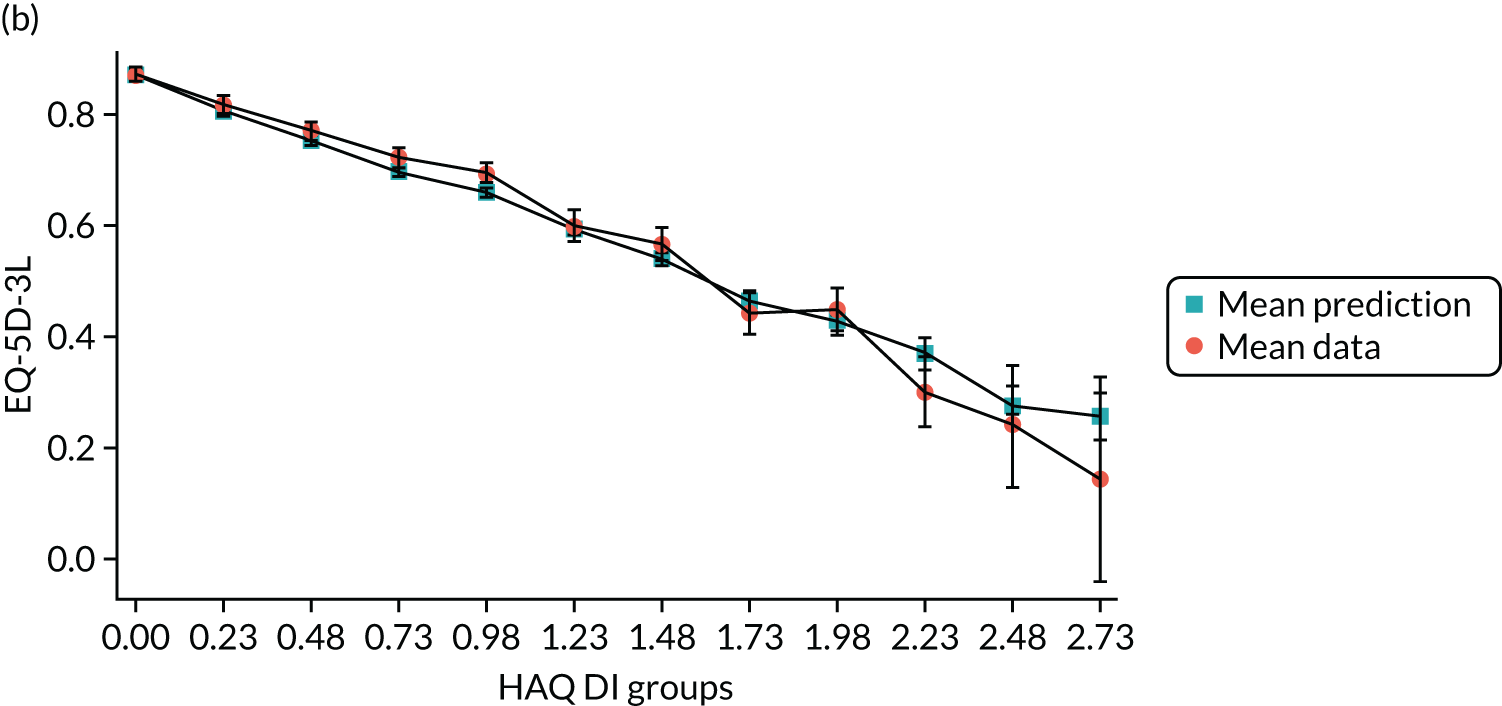

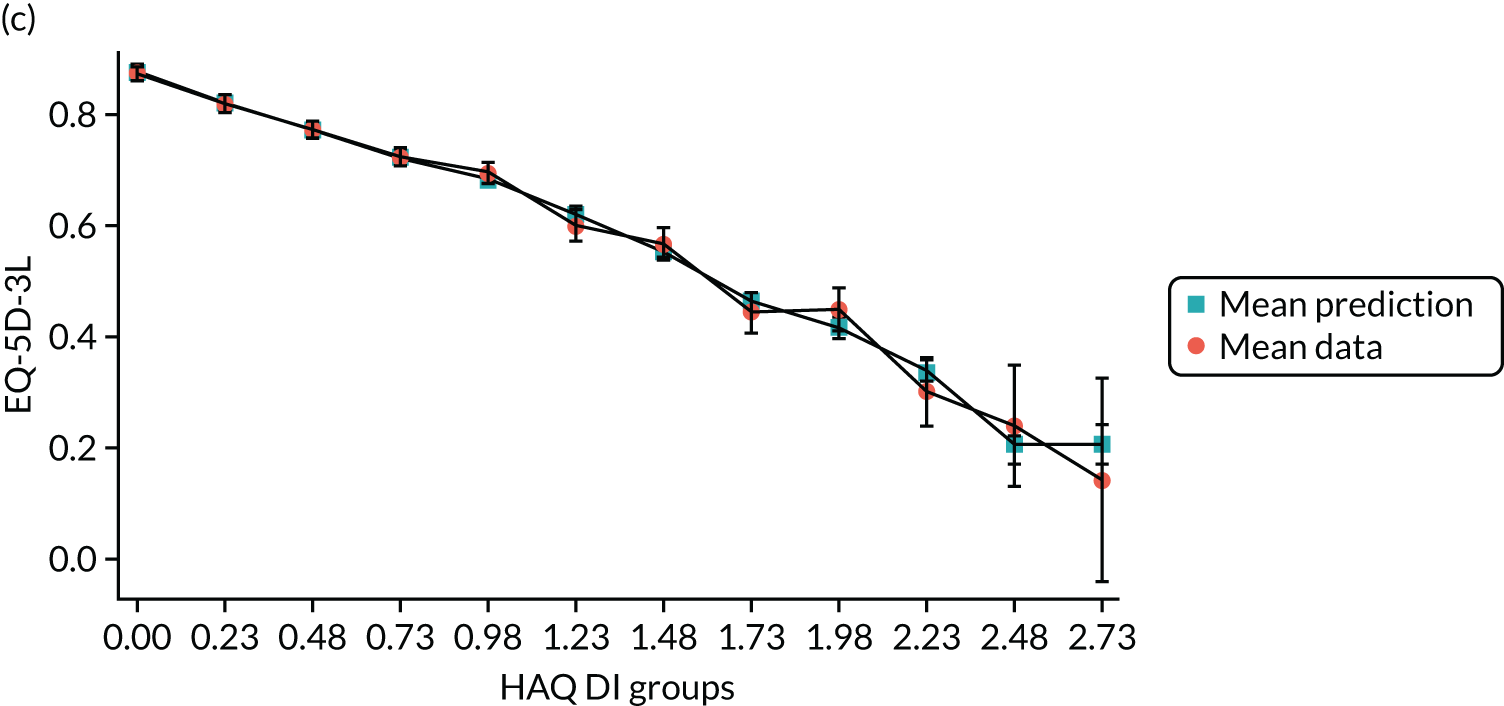

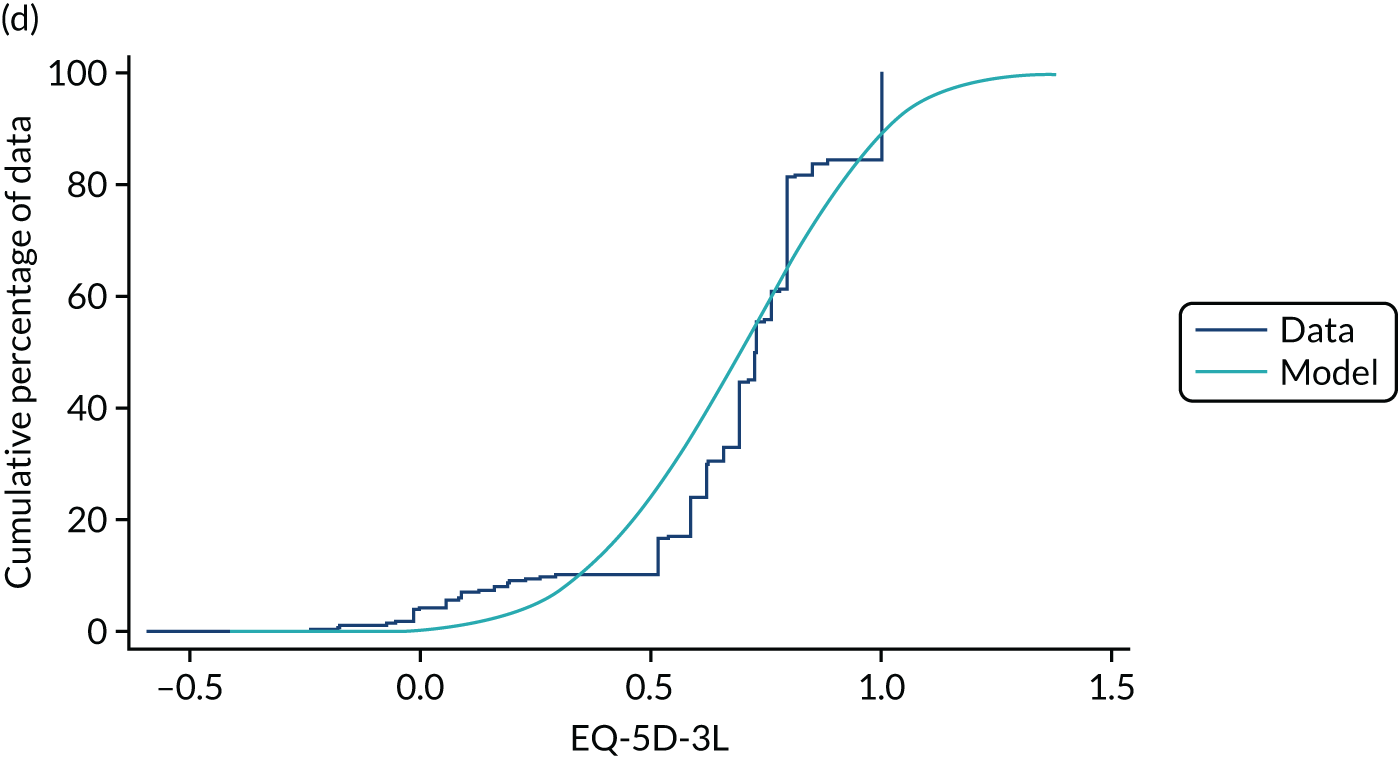

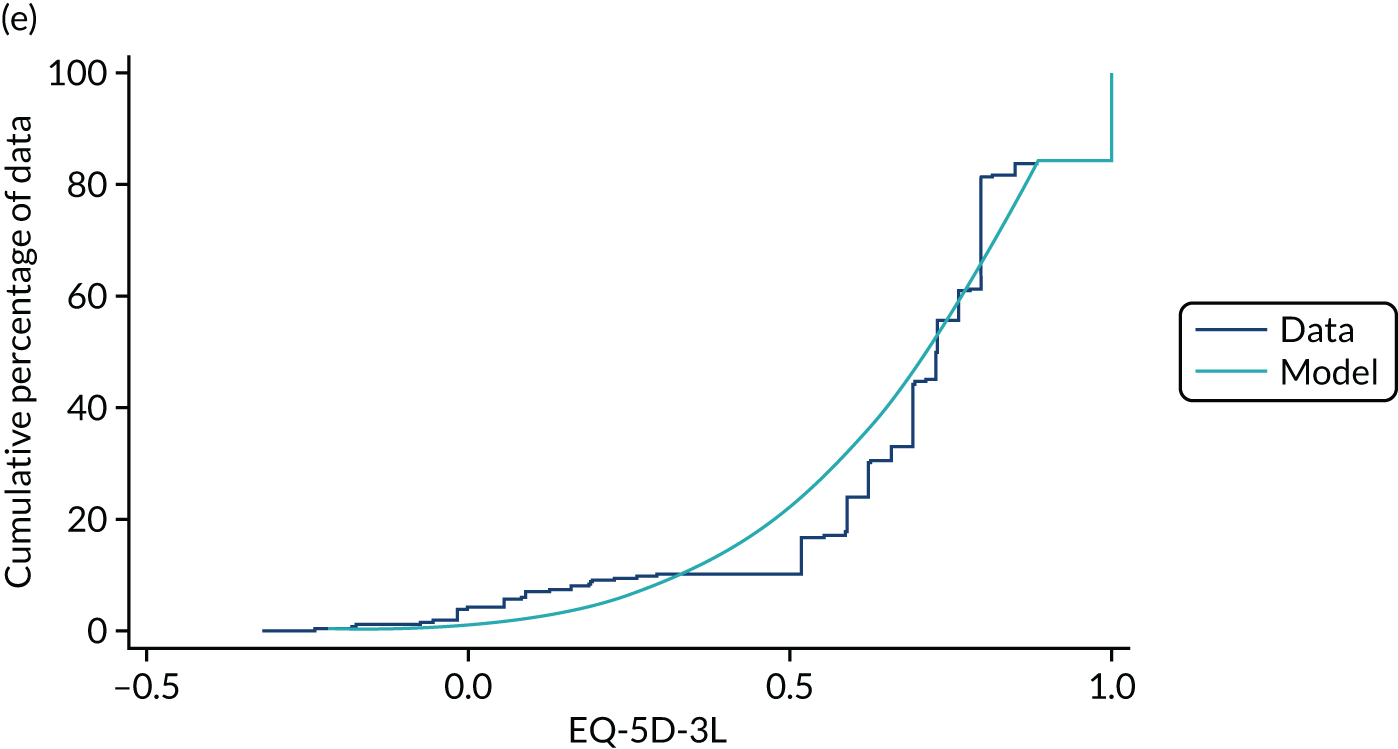

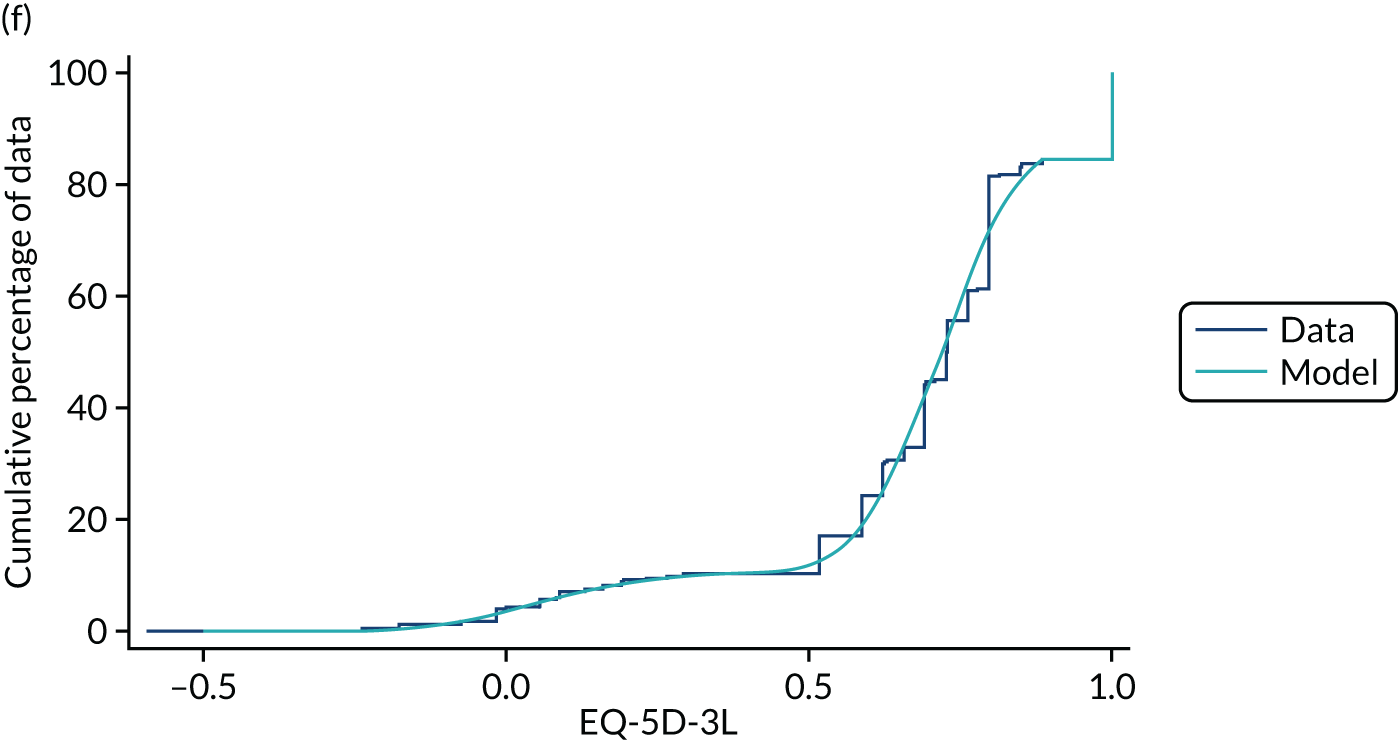

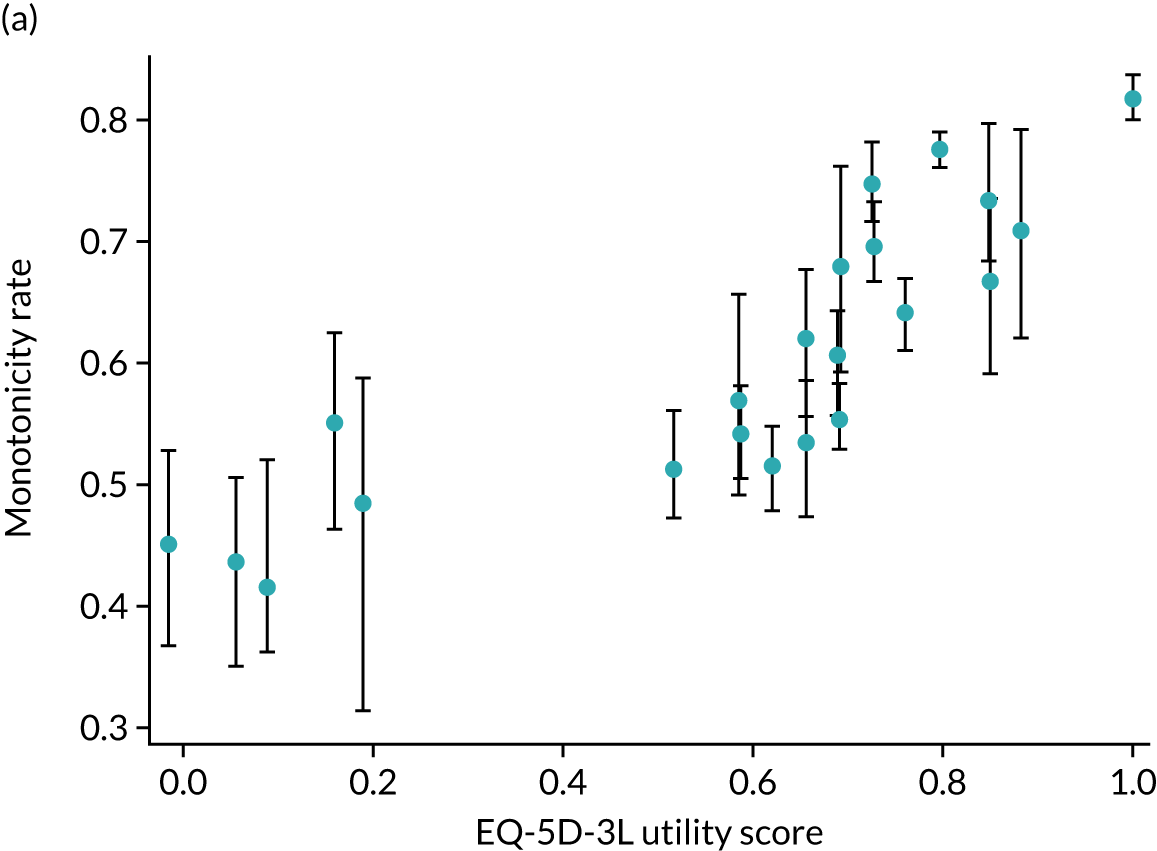

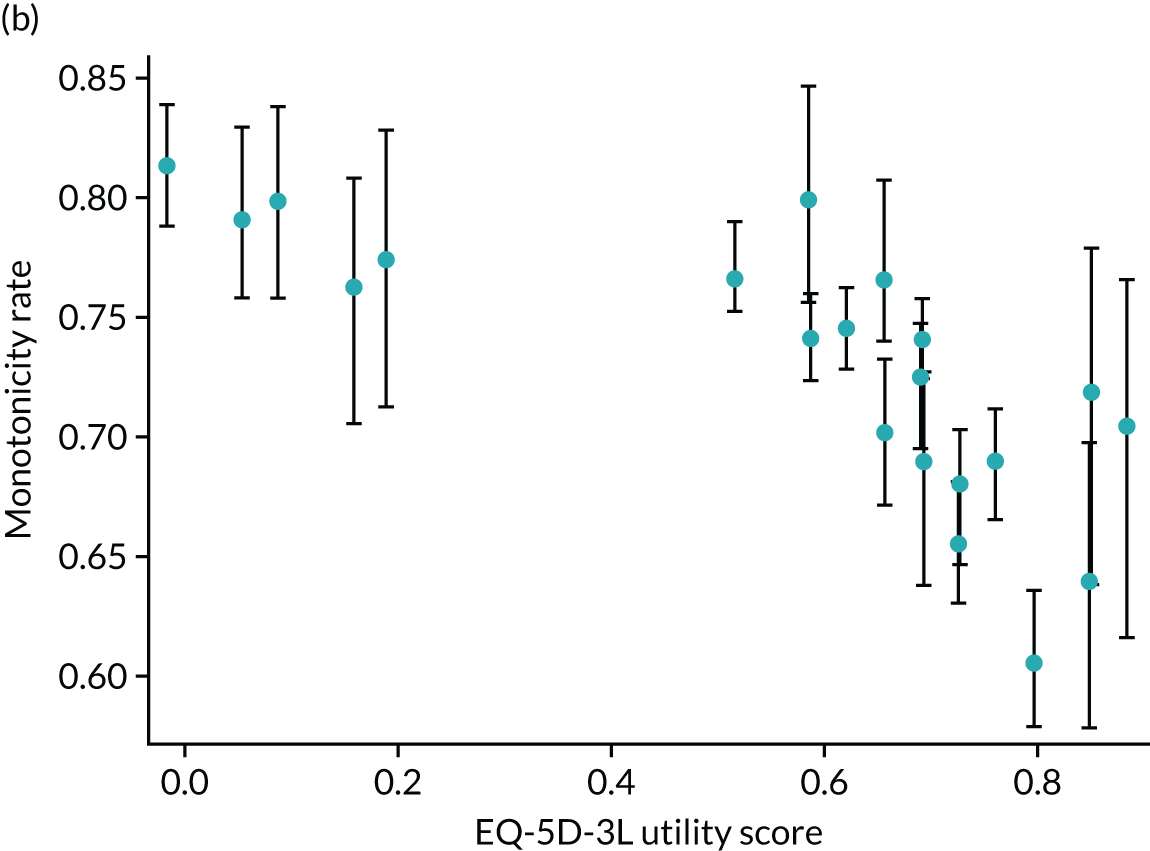

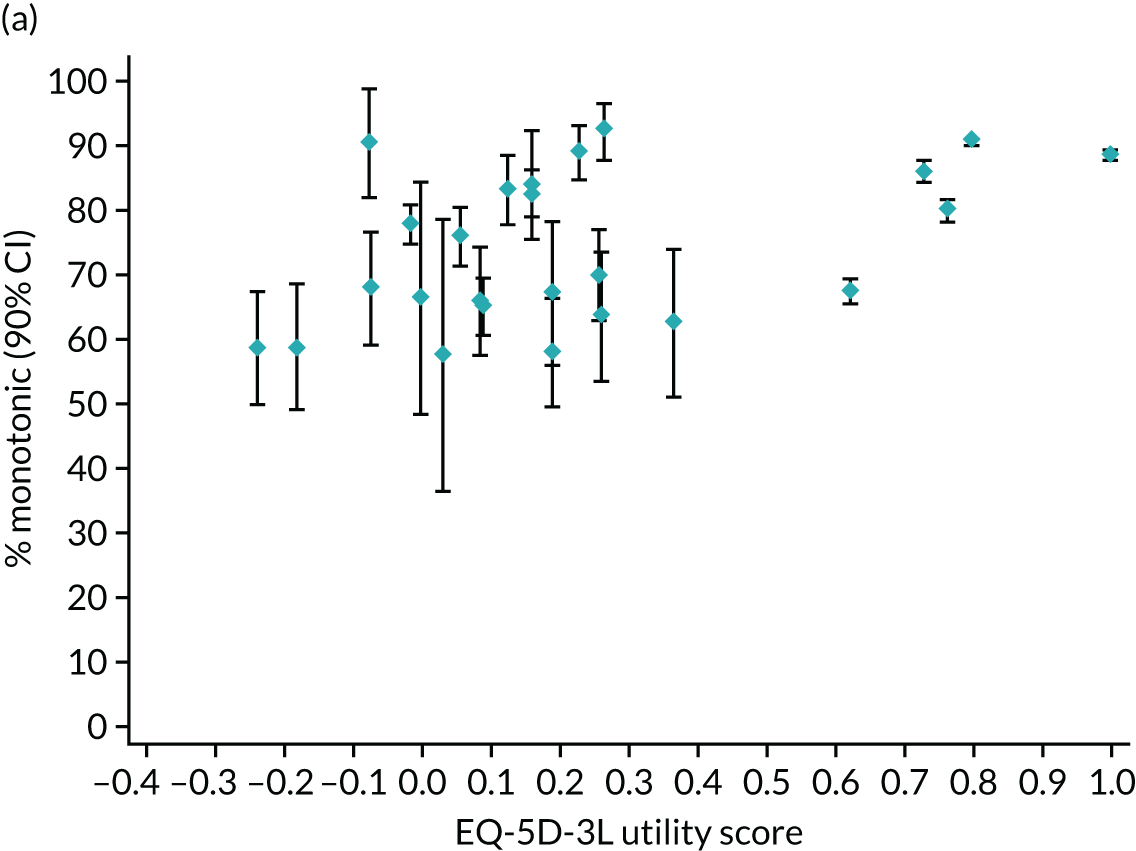

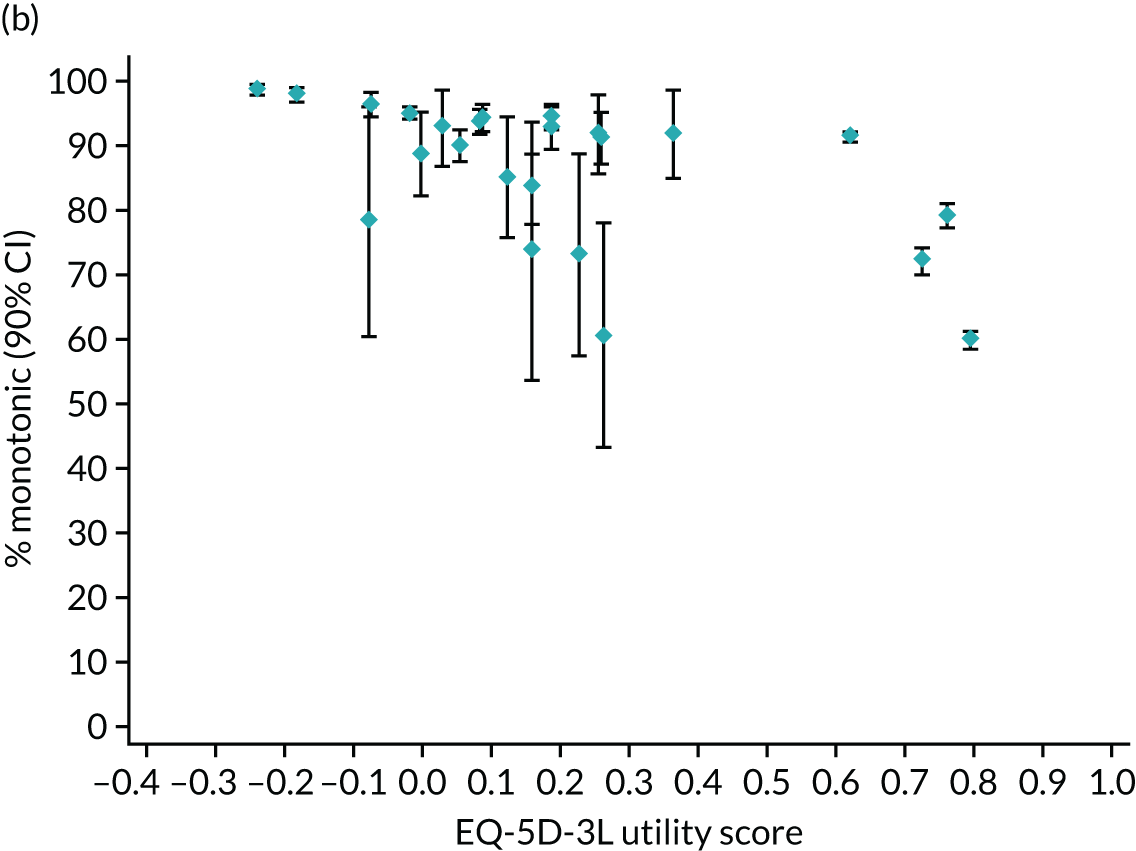

Hernández Alava et al. 50 and, more recently, Fuller et al. 54 have proposed some graphical methods suited to the specific case of mapping to help with model selection as well as also providing vital information for the economic analysts to select the right mapping model for their analysis and/or design suitable for sensitivity analyses. These graphs have been shown to be of significant value when deciding on the best mapping model in many applications (see Chapter 4 for several examples).