Notes

Article history

This issue of the Health Technology Assessment journal series contains a project commissioned by the MRC–NIHR Methodology Research Programme (MRP). MRP aims to improve efficiency, quality and impact across the entire spectrum of biomedical and health-related research. In addition to the MRC and NIHR funding partners, MRP takes into account the needs of other stakeholders including the devolved administrations, industry R&D, and regulatory/advisory agencies and other public bodies. MRP supports investigator-led methodology research from across the UK that maximises benefits for researchers, patients and the general population – improving the methods available to ensure health research, decisions and policy are built on the best possible evidence.

To improve availability and uptake of methodological innovation, MRC and NIHR jointly supported a series of workshops to develop guidance in specified areas of methodological controversy or uncertainty (Methodology State-of-the-Art Workshop Programme). Workshops were commissioned by open calls for applications led by UK-based researchers. Workshop outputs are incorporated into this report, and MRC and NIHR endorse the methodological recommendations as state-of-the-art guidance at time of publication.

The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Permissions

Copyright statement

Copyright © 2021 French et al. This work was produced by French et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This is an Open Access publication distributed under the terms of the Creative Commons Attribution CC BY 4.0 licence, which permits unrestricted use, distribution, reproduction and adaption in any medium and for any purpose provided that it is properly attributed. See: https://creativecommons.org/licenses/by/4.0/. For attribution the title, original author(s), the publication source – NIHR Journals Library, and the DOI of the publication must be cited.

Chapter 1 Introduction

Measuring people can affect their behaviour, their emotions and the data that they provide about themselves. 1–3 This phenomenon is sometimes known as measurement reactivity (MR). 1 Randomised controlled trials (RCTs) always include measurements of trial outcomes and commonly include further measurements as part of process evaluations. Measurements include self-reports (e.g. via questionnaires and interviews), objective measurements of behaviour (e.g. via accelerometers) and clinical markers (e.g. blood pressure or body scans that estimate body fat). Measurement techniques used in trials are typically treated as though they are inert (i.e. have no impact on participants). The usual methods of conduct and analysis of trials implicitly assume that the taking of measurements does not affect subsequent outcome measurements or interact with the trial intervention and that any effects of measurement-taking will be the same in each experimental group and, hence, are unlikely to bias treatment comparisons. 1,2,4 Any effects on participants are therefore ignored and not considered as a potential source of bias in trials (i.e. incorrect estimates of intervention effects or their standard errors). The present report aims to promote awareness of when trial measurements can produce bias and to provide recommendations to prevent such bias.

The phenomenon of MR is related to the broader term ‘Hawthorne effect’,5 which is used to refer to research participants changing their behaviour in response to being observed. The Hawthorne effect appeared in a research publication 65 years ago5 and the term is in widespread use, although it has been the subject of little empirical research. 6 It has been suggested that the Hawthorne effect is an umbrella term for a number of discrete phenomena, including MR, and it is proposed that more precise terms are needed to develop understanding of research participation effects and how they may lead to bias. 7 In the present document, the term MR is used to mean changes (in individual trial participants as well as in others such as health-care professionals) that would not occur in the absence of measurement.

There are few areas of research where there is sufficient evidence to be entirely confident that MR is present. The main exceptions are (1) the question–behaviour effect (QBE)3,8–11 and (2) pedometers. 12,13 The evidence in both of these areas is summarised in Boxes 1 and 2. In addition, there is evidence from randomised studies showing that people who complete questionnaires about the consequences of health conditions have higher anxiety levels than people who have not completed such questionnaires. 18,19 Furthermore, when people complete questionnaires about anxiety for the first time, they score more highly than when they are measured subsequently. 18,20,21 Other measurement procedures widely employed to assess outcomes in RCTs (e.g. assessing body weight) are also used as intervention techniques in their own right because they are seen to be effective at producing behaviour change. 22

Several systematic reviews, including a meta-analytic synthesis of 104 question–behaviour studies across 51 published and unpublished papers, found evidence that measuring a variety of behaviours via questionnaires can affect the subsequent performance of those behaviours. 3,8–11 Much of this evidence derives from studies in which people who were asked to complete a questionnaire about their behaviour, or attitudes or beliefs about that behaviour, showed changes in that behaviour relative to a no-questionnaire control group. Systematic reviews have consistently provided evidence of small effects on objective and subjective measures of behaviour, but there is considerable heterogeneity in the effects. Individual primary studies in the reviews have generally shown that some risk of bias and publication bias may be present, although it does not appear that bias can fully account for the effects observed. 8,9,14

A systematic review of eight RCTs and 18 observational studies found that providing people with pedometers produced an increase in physical activity, particularly when pedometers were provided in conjunction with goal-setting12 and when research participants could access step count readings. 13 Given this, pedometers are sometimes used as part of interventions15 and are often used as tools to measure outcomes. The use of pedometers for both purposes has highlighted that measurement is not always inert in trials and that greater consideration is needed when pedometers are used solely as measurement tools.

Although use of pedometers can produce changes in people’s behaviour, it is unclear to what extent their use causes bias in trials; however, there are plausible reasons to think that it does. The mechanism by which the provision of pedometers produces an increase in physical activity is that pedometers allow participants to self-monitor their behaviour. 1,16 The use of self-monitoring is a key component of many behaviour change interventions. 17 Therefore, the use of pedometers as a measurement tool could result in both trial arms receiving assistance in self-monitoring their behaviour when this was intended in only one arm. This would be likely to reduce the observed effect of an intervention that was designed to promote self-monitoring to increase physical activity, relative to the true effect that would be observed without the use of pedometers.

In summary, there are multiple and diverse empirical studies showing that measurement may produce changes in the people being measured. By contrast, there is little direct evidence regarding how much of a problem MR poses for bias in trials because there has been little research directly addressing this issue. 4,23 As a consequence, MR has generally been ignored in discussions of how to reduce bias in trials. Given this, MR is not adequately addressed in existing guidelines for designing, reporting [e.g. Consolidated Standards of Reporting Trials (CONSORT)24] and appraising trials (e.g. risk-of-bias frameworks). 25 In the present document we rely on indirect evidence regarding the likely consequences of measurement in producing bias. That is, drawing on the existing evidence regarding where measurement affects research participants, we have produced scenarios where we think it plausible that bias may be produced. The procedure by which these recommendations were developed is described in more detail in Chapter 3, involving systematic reviewing and consultation with experts as part of the MEasurement Reactions In Trials (MERIT) study. It should be noted that, given the limited direct evidence, many of the recommendations are – in the terminology of the Grading of Recommendations Assessment, Development and Evaluations (GRADE) – ‘motherhood statements’, in that to propose the opposite would not be reasonable. 26

The present report considers the challenges associated with MR for all kinds of RCTs, especially in the context of behaviour change, public health and health services research. 2 The focus on trials is because of the central importance of trials evidence for health-care decision-making. The present recommendations are designed to apply when measurement is used as a method of assessment that produces unintended effects rather than when it has been used as an intended intervention. The report is structured as follows.

In Chapter 2 we spell out how measurement affecting people can produce bias.

In Chapters 3 and 4 we discuss some issues for researchers to consider in relation to MR as a potential source of bias. We include a list of RCT features that should act as ‘red flags’ for researchers to consider as indicating that MR or risk of bias due to MR may be present.

In Chapter 5 we identify future research that is needed to develop a stronger evidence base on the extent of bias in trials due to MR.

The main aims of the present recommendations are to help researchers more systematically consider MR as a potential threat to the validity of trial decision-making and to select appropriate strategies to minimise this potential bias. We aim to highlight the current state of evidence regarding the extent of MR and how it can lead to bias so that researchers can select strategies that are proportionate and mindful of the many other forms of bias that need to be prevented in trials. Finally, we hope to raise awareness of the ways in which trial evidence can be affected by MR and how MR might be better understood in the future.

Chapter 2 Measurement reactivity and risk of bias

Parts of this chapter have been reproduced with permission from French et al. 27 This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: https://creativecommons.org/licenses/by/4.0/. The text below includes minor additions and formatting changes to the original text.

This section is concerned with describing mechanisms by which MR can lead to bias. Bias has been defined as ‘systematic deviation of results or inferences . . . leading to results or conclusions that are systematically (as opposed to randomly) different’. 28 It is important to note that MR may or may not lead to bias in trials: the existence of MR in a trial does not necessarily mean that the intervention effect estimate is biased. We describe six scenarios in which MR may produce bias. These may seem in some ways closely related to each other, although they are conceptually distinct, with bias being produced via different mechanisms in each scenario. Being aware of the distinctions between them should help develop understanding of the nature of MR and the associated bias. The six scenarios are:

- different measurement protocols across trial arms
- contamination
- interactions between measurement and intervention
- dilution bias
- other inadvertent intervention effects
- effects on other forms of bias such as attrition or information bias.

Different measurement protocols across trial arms

Bias may arise when different measurement protocols are used across randomised trial arms, with one trial arm being measured more than, or differently from, another. If measurement has an impact on trial outcomes, then greater disparities in measurement protocols will produce greater bias. For example, participants in the experimental condition may be asked to complete process measures to assess mechanism, more frequent momentary assessments of behaviour or treatment response and/or ongoing measurements using technology (e.g. a digital application), whereas participants in the control condition are not asked to complete such measures. Such practices may be found widely in eHealth, mental health and other areas in which psychosocial and behaviour change interventions are evaluated. Ongoing measurements, for example for fidelity assessment or intervention development feedback purposes, carry the potential to serve as reinforcers, reminders or boosters of intervention effects, and thus can exaggerate the apparent effects of interventions. This entails bias when these measurements are not defined as part of the intervention to be assessed.

Contamination

Contamination refers to the inadvertent exposure of a non-experimental control group to intervention content that is an integral part of an effective experimental group treatment. For instance, if a pedometer were one component of a multicomponent intervention to promote walking, then its use as a research measure is intrinsically problematic because the non-intervention control group also has access to part of the intervention. If the intervention component in question is actually inert (i.e. it is not effective in producing change in measured outcomes), then bias would not result. It will often be the case that it is unknown whether or not a particular component will produce change, and so vigilance should be exercised when intervention content and outcome assessment are closely related. Similarities between the contents of research measurements and interventions also provide prima facie grounds for concern about bias being induced by contamination. This is because they may exert their effects via similar or the same mechanisms. In this situation, estimates of effectiveness are likely to be biased towards the null because both intervention and control groups are exposed to similar content.

Interactions between measurement and intervention

If MR is present, then the risk of bias needs to be considered. Research measurements and interventions, even when they are very distinct and there is no overlap in content, may exert their effects via similar mechanisms. For example, pedometers may be effective intervention tools because they produce effects by promoting self-monitoring of behaviour. Thus, research procedures other than pedometers (e.g. regular body weight weighing) that also stimulate participant self-monitoring may interfere with comparisons between randomised groups. That is, although the intervention tool (i.e. pedometers) and measurement tool (i.e. weighing) take different formats, they both may promote self-monitoring and hence the control group may be exposed to content that underpins the anticipated effect of the intervention. In this example the biasing effect will be similar to that of contamination (i.e. towards the null). There are other circumstances in which it goes in the opposite direction.

This scenario illustrates a wider point about how randomisation may not always safeguard against bias due to MR, making it difficult to distinguish true change in outcomes arising from the intervention from change due to a combination of intervention and measurement. 4 This is true even in the absence of contamination. If there are similar levels of reactivity between experimental groups in a trial, it might be considered that the true effects of interventions are safeguarded by randomisation, but this does not take into account the possibility that measurements might interact with interventions to either strengthen or weaken the observed effects and, therefore, lead to biased estimates of effect (see Appendix 2). For example, research measurement could prepare experimental group participants to be more receptive to an intervention by prompting contemplation of the reasons for behaviour change. 4 Similarly, measurement may also obstruct or diminish the means by which interventions produce their effects (e.g. if it creates or reinforces negative views towards the intervention target).

These examples suggest interaction effects between measurement and intervention on trial outcomes. When measurement invites thinking about barriers to successfully performing physical activity (e.g. resulting in problem-solving on the part of the participant) and when anticipating barriers and problem-solving are not an intended part of the intervention, it would be surprising if this had no relevance to the effects of the intervention. Alternatively, a food diary may draw attention to the elements of a dietary intervention that are being tested in the experimental arm and, therefore, enhance the effects of intervention components. Such interactions between measurement and intervention could be widespread in the case of interventions whose effects rely on behaviour change, but this possibility has received little empirical attention.
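The distinction between a main effect of measurement and an interaction with the intervention can be made concrete with a simple linear model. This is an illustrative sketch only and is not a model taken from the MERIT study. All symbols are assumptions introduced here: T indicates allocation to the intervention arm (1) or control arm (0), M indicates whether research measurement takes place (1) or not (0), and Y is the trial outcome.

```latex
% Illustrative model (notation assumed for this sketch, not from the source)
\begin{align}
E[Y \mid T, M] &= \beta_0 + \beta_T T + \beta_M M + \beta_{TM}\, T M \\
% In a conventional trial every participant is measured, so M = 1 in both arms:
E[Y \mid T=1, M=1] - E[Y \mid T=0, M=1] &= \beta_T + \beta_{TM}
\end{align}
```

Under these assumptions, a main effect of measurement that is equal in both arms (the term in M alone) cancels from the between-arm comparison, whereas the interaction term does not. The trial estimate would equal the intervention effect in the absence of measurement only if the interaction term were zero, and the direction of any bias would depend on the sign of that term.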

Dilution bias

Dilution bias refers to the situation in which MR has an impact on both arms in a two-arm trial and interferes with the estimation of effect sizes through restrictions on the possible range of measured outcomes. 4 For example, there may be a finite limit to the distance walked that a walking intervention can reasonably stimulate. The more pedometers or other measurement procedures unintentionally stimulate the behaviour that is the target of the intervention, the less scope there is for the intervention to be more effective than the control. This situation will also arise for other behaviours or targets for health interventions that are susceptible to measurement reactions. Consideration of the likely maximum effects on the extent of change possible following intervention is therefore needed to appreciate this particular risk of bias. When measurement reactions account for change and there are finite limits to how much change is possible, MR may lead to dilution bias, making it less likely that intervention effects will be identified. 4
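The arithmetic behind dilution bias can be sketched with a deliberately simple example. The step counts, the size of the measurement effect and the hard upper limit are all hypothetical values chosen for illustration, and treating the limit as an absolute ceiling is a simplifying assumption rather than a description of real walking behaviour.

```python
def observed_difference(baseline, measurement_effect, intervention_effect, ceiling):
    """Between-arm difference when the outcome cannot exceed `ceiling`.

    All arguments are hypothetical illustration values (e.g. daily steps).
    Both arms receive the measurement effect; only the intervention arm
    receives the intervention effect.
    """
    control = min(baseline + measurement_effect, ceiling)
    intervention = min(baseline + measurement_effect + intervention_effect, ceiling)
    return intervention - control


if __name__ == "__main__":
    # Hypothetical scenario: 6000 steps at baseline, an intervention worth
    # 3000 extra steps, and a practical limit of 10,000 steps per day.
    for measurement_effect in (0, 2000, 4000):
        diff = observed_difference(6000, measurement_effect, 3000, 10000)
        print(f"measurement effect {measurement_effect}: observed difference {diff}")
```

In this sketch the observed difference shrinks from 3000 to 2000 and then to 0 as the assumed measurement effect grows, even though the intervention effect itself is held constant.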

Other inadvertent intervention effects

There are both clinical and research practices associated with measurement that can lead to bias when MR is present. Sharing measurement data that are surprising or that can have an impact in other ways could prima facie be regarded as particularly likely to produce reactions to measurement. For example, the process of collecting measurement data taken during the course of a trial may alter the care provided by health-care professionals, which may lead to bias if such alterations are implemented differently for different randomised groups. This may happen when one group of patients have more frequent contact with health-care professionals (e.g. through regular assessment of body weight, blood pressure or blood tests to assess liver function). This is a specific case of the wider class of performance bias. 29 Both experimental and control groups can be exposed to inadvertent intervention effects in this way, making it possible that the direction of the bias could go either way.

Effects on other forms of bias such as attrition or information bias

Reactions to measurement can take many forms. They can also be implicated in other well-known forms of bias in addition to those discussed above. For example, from the participant’s perspective, too much measurement can increase the burden of trial participation so that they decide to drop out. Measurement content lacking in salience can also produce such reactions. 30 In principle, there should be no bias when such effects are equivalent between randomised groups. The potential for them to interact with randomisation status becomes clearer in situations in which there are already differences in participant burden between randomised arms due to intervention exposure. When interventions are somewhat onerous and the burden quite different for control group participants, MR may be more likely to produce differential attrition.

Measurement reactivity may also be implicated in information bias, particularly when trial outcomes are self-reported and measurement leads participants, for whatever reason, to inaccurately report data about themselves. This may be a problem particularly for socially undesirable behaviours. 31 Again, for bias to be introduced to intervention effect estimates in trials, the effects of biased reporting need to be differential between randomised arms. So the question becomes ‘How likely is it that intervention content has any impact on the likelihood of biased reporting?’. When the intervention concerns the socially undesirable behaviour, this seems very likely.

Summary

In this chapter we have made a series of subtle distinctions between different forms of bias and how they may be induced by MR. In scenarios 1 and 2, it is the main effects of MR that can lead to bias, whereas in the other scenarios (i.e. scenarios 3–6) the mechanisms are more complex and involve interactions with other aspects of the study design. In scenarios 1 and 3, the effects of MR are different between randomised arms. The implication here is that MR has undermined equivalence between randomised groups. In scenarios 2 and 4, MR may lead to bias by thwarting the intended experimental contrast. In the last two scenarios (i.e. scenarios 5 and 6), MR may also generate bias through established forms of bias (e.g. performance bias) that are not usually thought of in the context of MR. Specific recommendations about how to detect MR, investigate further for its presence and deal with MR when it appears to be an important source of risk of bias are covered in the next chapter.

Chapter 3 Research informing the development of recommendations

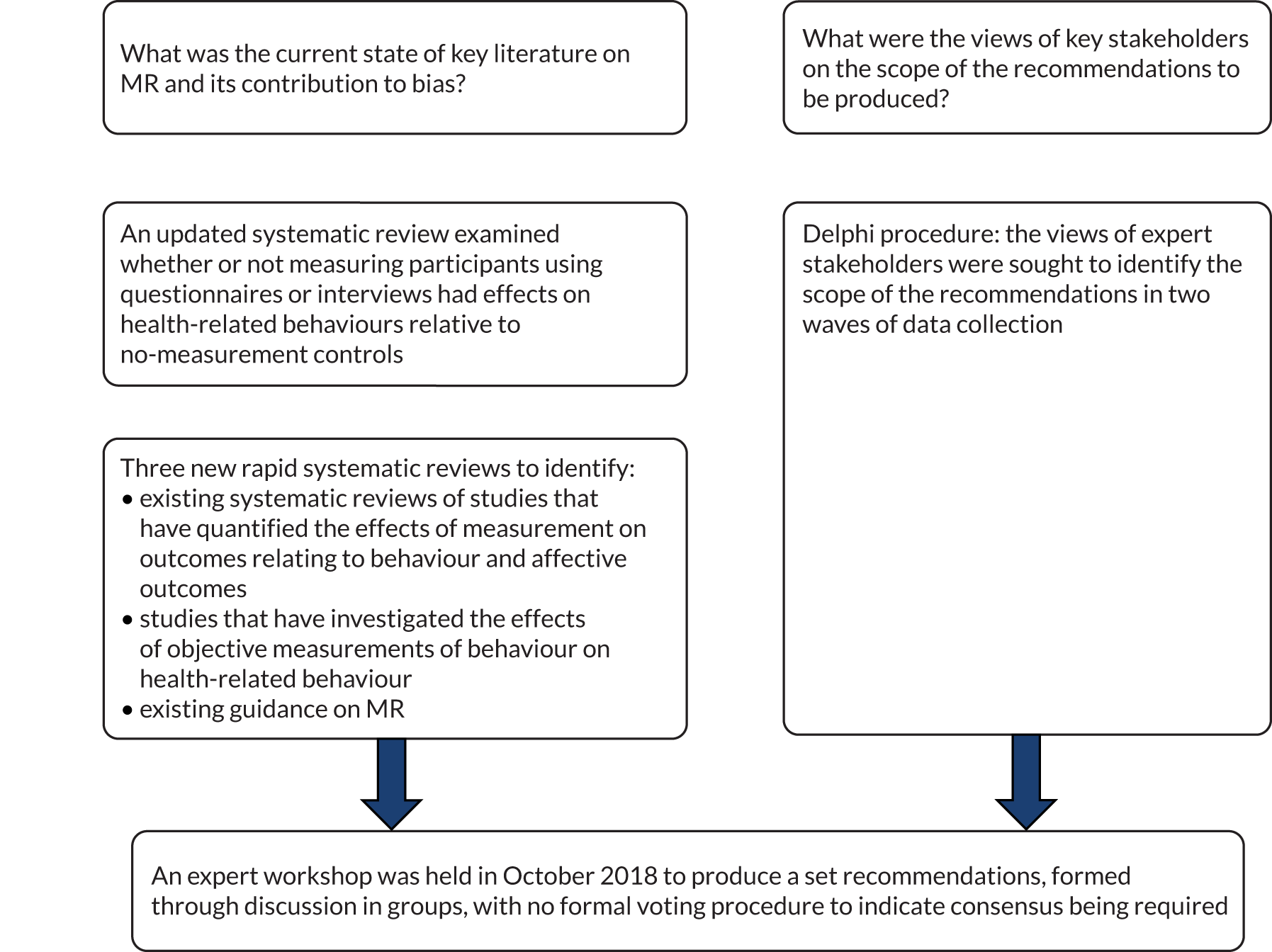

The present research used a variety of methods to produce recommendations to minimise risk of bias from MR in trials of interventions to improve health. Specifically, we conducted (1) a series of systematic and rapid reviews, (2) a Delphi study and (3) an expert workshop to develop recommendations on how to minimise bias in trials due to MR. The study protocol has been published. 2 The present chapter describes the methods employed in each of these elements, which are summarised in Figure 1.

FIGURE 1.

Overview of research activities in the MERIT study that informed the development of the recommendations.

The team conducting the MERIT project was led by Professor David French (University of Manchester) and consisted of Dr Lisa Miles (University of Manchester), Professor Diana Elbourne (London School of Hygiene and Tropical Medicine), Professor Andrew Farmer (University of Oxford), Professor Martin Gulliford (King’s College London), Professor Louise Locock (University of Aberdeen), Professor Jim McCambridge (University of York) and Professor Stephen Sutton (University of Cambridge). The team was formed with the intention of providing a wide variety of expertise relevant to the formation of recommendations on this topic.

We planned to involve the public, including patients and service users, as one of the key stakeholders in the present research in the Delphi process (described in the section ‘Delphi procedure to inform the scope of the recommendations’). However, despite approaching a number of people who have fulfilled these roles in other research that the team have been involved in, we were not successful in recruiting anyone in the time available. It may be that the present research, which involves conducting research on the research process, is less appealing to non-specialists, even those with considerable experience in patient and public involvement.

Asking questions changes health-related behaviour: an updated systematic review and meta-analysis of randomised controlled trials

Objective

An existing systematic review on the QBE on health-related behaviours9 found that asking people questions can result in changes in behaviour. However, the overall effect was small, with many of the included studies at high risk of bias, and publication bias was also detected. A lack of pre-registration of these studies was a particular issue because many of these studies included consideration of the QBE as part of other studies, leading to a concern that, if no QBE was found, then the findings in relation to the QBE were not published. Subsequent to the search for this systematic review, which was conducted in 2012, larger studies with pre-registered protocols have been published,32,33 with generally null findings. For this reason, it seemed timely to update this systematic review to inform the MERIT study. As with the original review, this review included RCTs investigating the QBE.

Study design and methods

A systematic search for newly published trials covered January 2012 to July 2018. Eligible trials randomly allocated participants to measurement conditions, to non-measurement control conditions or to different forms of measurement conditions. Studies that reported health-related behaviour as outcomes were included and meta-analysis was performed. Subgroup analyses were conducted to assess the impact of potential prespecified moderators of the QBE and sensitivity analyses were conducted to assess whether or not there were differences in QBE on the basis of risk of bias or presence of a pre-registered protocol.

Results

Forty-three studies (33 studies from the original systematic review and 10 new studies) compared measurement with no measurement. An overall small effect was found using a random-effects model [standardised mean difference 0.06, 95% confidence interval (CI) 0.02 to 0.09; n = 104,388]. Statistical heterogeneity was substantial (I² = 54%). In an analysis restricted to studies with a low risk of bias, the QBE remained small but significant. Sensitivity analyses indicate that there was still substantial unexplained variance, probably due to large variation in studies with respect to content of measurement, types of health-related outcomes, length of follow-up and characteristics of participants. Subgroup analyses suggested that the QBE was present for some health-related behaviours more than others. There was positive evidence of publication bias.
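For readers less familiar with how a pooled standardised mean difference and an I² statistic are obtained, the following is a minimal sketch of a random-effects pooling calculation. The report states only that a random-effects model was used; the DerSimonian–Laird estimator shown here is an assumption made for illustration, and the function name and example inputs are hypothetical rather than data from the review.

```python
import math


def random_effects_pool(effects, variances):
    """Pool study effect sizes with a DerSimonian-Laird random-effects model.

    `effects` are per-study standardised mean differences and `variances`
    their sampling variances (hypothetical inputs for illustration).
    """
    k = len(effects)
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q measures observed dispersion around the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w

    # Between-study variance (tau^2) and I^2, both truncated at zero.
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each study's sampling variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return {
        "pooled": pooled,
        "ci_low": pooled - 1.96 * se,
        "ci_high": pooled + 1.96 * se,
        "tau2": tau2,
        "i2": i2,
    }


if __name__ == "__main__":
    # Two hypothetical studies with equal precision but different effects.
    print(random_effects_pool([0.0, 0.2], [0.01, 0.01]))
```

In this sketch, I² expresses the share of the observed variation in effect sizes that exceeds what sampling error alone would be expected to produce.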

Conclusion

This update shows a small but significant QBE in trials with health-related outcomes, but with considerable unexplained heterogeneity. Future trials with lower risk of bias, pre-registered protocols and greater attention to blinding are needed.

Note on publication

The systematic review update on the QBE has been published. 34

Further evidence reviews to inform development of the recommendations

Three new rapid reviews were conducted to identify (1) systematic reviews of studies that have quantified the effects of measurement on outcomes relating to behaviour and affective outcomes in health and non-health contexts, (2) studies that have investigated the effects of objective measurements of behaviour on concurrent or subsequent behaviour itself and (3) existing guidance on MR.

Rapid ‘review of reviews’ of studies of measurement reactivity

This review aimed to identify existing systematic reviews of studies that have quantified the effects of measurement on outcomes relating to behaviour and affective outcomes in both health and non-health contexts to identify relevant background literature for the MERIT study.

Reviews that provide a quantitative estimate of a measurement effect are briefly described in terms of their aims, scope, methods, quality, findings and conclusions. A detailed critique of each review is not provided, but important limitations that affect the validity of the conclusions are mentioned.

Methods

The following databases were searched, limited to English-language articles published in peer-reviewed journals between 2008 and 2018 (inclusive): PsycINFO, MEDLINE, PubMed and the Cochrane Database of Systematic Reviews. Search terms were developed and tested to check that they identified reviews that were already known to the research team. The final search strategy is given in Appendix 3. Two searches were run for each database: (1) a general search to identify relevant systematic reviews and meta-analyses and (2) a more specific search for reviews and meta-analyses of the QBE or mere measurement effect. The searches were limited to the titles and abstracts of the papers in the above databases. The reference lists of identified reviews were searched manually for additional relevant reviews.

Titles and abstracts of identified records were screened by one reviewer. Full-text versions of relevant articles were obtained and screened by the same reviewer. No data extraction form was used. Relevant study characteristics and data from the final set of reviews were extracted directly to tables and text in this report. Included reviews were rated for quality by the same reviewer, using A MeaSurement Tool to Assess systematic Reviews version 2 (AMSTAR 2). 35

A search of PROSPERO using the search terms in Appendix 3 identified two reviews9,11 that had already been published, but no ongoing reviews.

Results

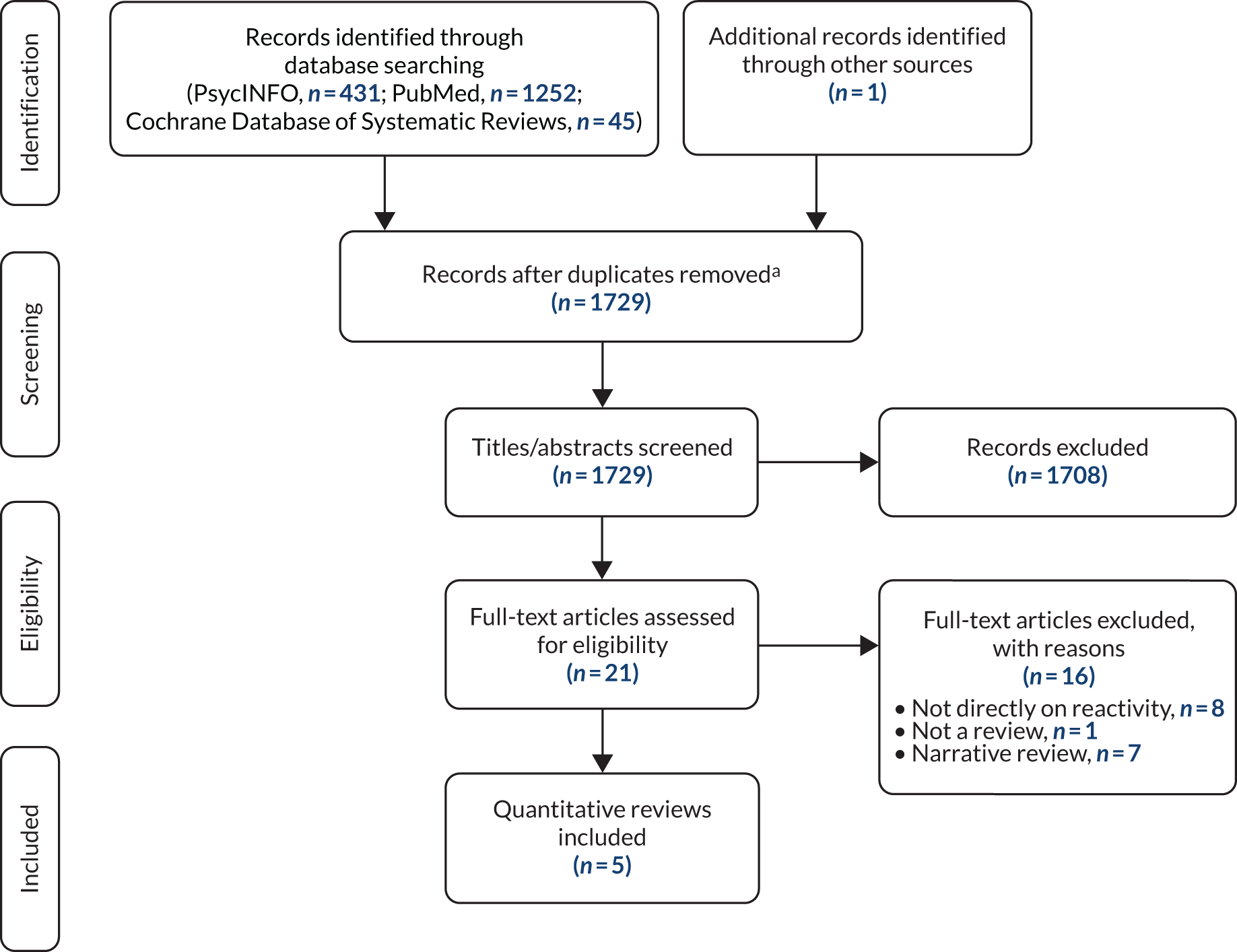

The searches of PsycINFO, PubMed and the Cochrane Database of Systematic Reviews yielded 1728 records. One additional record was identified from manually searching the reference lists of identified reviews. Twenty-one full-text articles were screened. Sixteen of these articles were excluded, in most cases because they did not directly address the topic of MR or they were narrative reviews or discussion papers on reactivity. 1,36–43 Several of these articles addressed reactivity of assessment of alcohol use (e.g. in the context of alcohol brief interventions). 6,36–40,42,44–52 One article37 discussed assessment reactivity in studies of interventions for intimate partner violence. However, none of these reviews reported a quantitative estimate of MR and these were therefore excluded from the present review.

A flow diagram showing the search and screening process is shown in Figure 2.

FIGURE 2.

Flow diagram of search and screening process. a, It was more efficient to screen the records for each database separately without identifying duplicates across databases.

The searches failed to identify the review by McCambridge et al. 23 of evidence from Solomon four-group studies. An additional search was therefore run for reviews of studies using the Solomon design, but none was identified. The paper by McCambridge and Kypri8 was also not identified in the searches; however, the paper is clearly relevant because it includes an effect size estimate. It is therefore included in this review for the sake of completeness, although it differs in focus from the other included reviews.

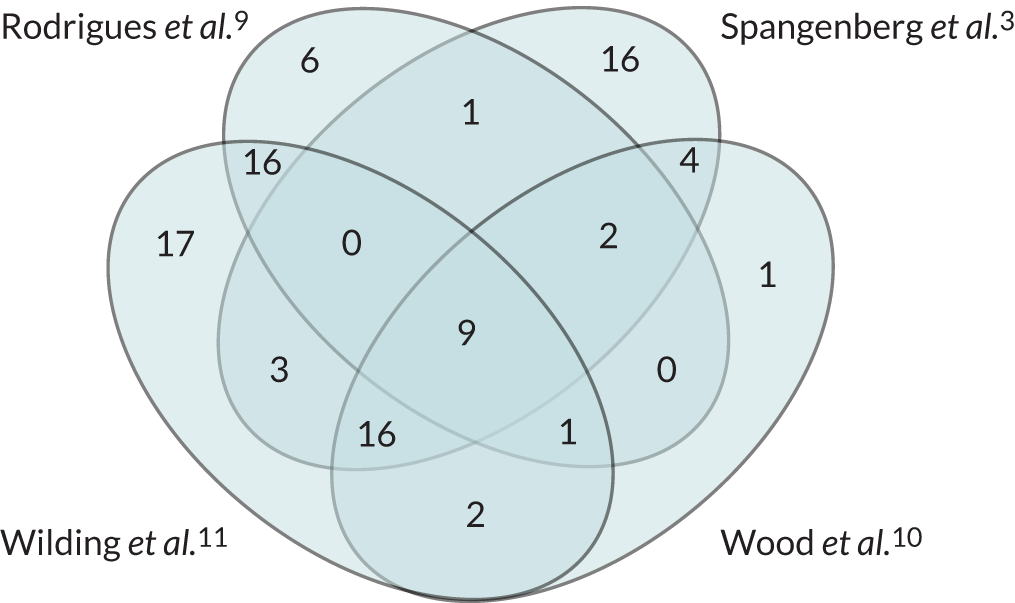

The five quantitative reviews3,9–11,53 that were identified in the searches all focused on the QBE. These are described in turn. The first four reviews analysed studies that included a relevant comparison or control group (Rodrigues et al.,9 Spangenberg et al.,3 Wood et al. 10 and Wilding et al. 11) and the fifth (Mankarious and Kothe53) analysed prospective studies of the theory of planned behaviour (TPB). 54

The four reviews3,9–11 of the QBE that included studies with a relevant comparison or control group used broadly similar systematic review and meta-analytic methods but differed in terms of scope (type of behaviour), research designs included (RCT only vs. RCT plus non-RCT designs), type of questioning and potential moderators investigated. The headline effect size estimates are given in Table 1. The overlap between the four reviews3,9–11 in terms of included studies is shown in the Venn diagram in Appendix 4 (see Figure 4). A total of 94 studies were included in the reviews (see Appendix 4). Only nine of these studies were included in all four reviews3,9–11 and significant numbers of studies were included in only one review (e.g. 17 studies in Wilding et al. 11 and 16 studies in Spangenberg et al. 3) (see Figure 4).

| Review | Number of studies | Cohen’s d | 95% CI |
|---|---|---|---|
| Rodrigues et al.9 | 33 | 0.09 | 0.04 to 0.13 |
| Spangenberg et al.3 | 104 | 0.28 | 0.24 to 0.32 |
| Wood et al.10 | 116 | 0.24 | 0.18 to 0.30 |
| Wilding et al.11 | 94 | 0.15 | 0.11 to 0.19 |

Rodrigues et al.

Rodrigues et al. 9 meta-analysed data from 41 RCTs of the QBE in the domain of health-related behaviours. The authors found a small overall QBE (Cohen’s d = 0.09, 95% CI 0.04 to 0.13). Studies showed variable risk of bias and evidence of publication bias (studies with smaller or no effects were less likely to be published). No significant moderators of the effect were identified. There were no significant differences in QBE by type of behaviour, but QBEs for three behaviours (i.e. dental flossing, physical activity and screening attendance) were significantly different from zero. The authors conclude that the observed small effect size may be an overestimate of the true effect and note that in some studies participants received intervention techniques in addition to questionnaires (e.g. thank you letters). They recommend that future studies should be pre-registered.

Spangenberg et al.

Spangenberg et al. 3 synthesised findings from 104 QBE studies reported in 51 published and unpublished papers; all were randomised studies with a control condition in which participants responded to a neutral control question or no question. There was no restriction on the types of behaviour included. The overall weighted mean effect size (product–moment correlation) was r = 0.137 (95% CI 0.115 to 0.158; equivalent to Cohen’s d = 0.28, 95% CI 0.24 to 0.32). The authors conclude that ‘Our results clearly support that questioning people about a target behaviour is a relatively simple yet robust influence technique producing consistent, significant changes in behaviour across a wide set of behavioural domains’. 3 However, the main aim of the study was to examine a number of prespecified potential moderating variables relating to four different theoretical mechanisms (i.e. attitudes, consistency, fluency and motivations) proposed to underlie the QBE. The authors found some support for each of the four mechanisms and suggest that there may be multiple mediating processes. Significant moderator analyses showed larger effects for computer surveys (compared with paper and pencil, telephone, individual mailers and face-to-face interviews), prediction questions (compared with intentions or expectations), not specifying a time frame in the question when the question required a dichotomous response (compared with continuous or multinomial responses), behaviours related to participants’ personal welfare (compared with behaviours related to social welfare, consumption or other types of behaviours), behaviours measured by self-report, novelty of behaviour, and psychological and social risks associated with not performing the target behaviour.
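The correspondence between the correlation and the Cohen’s d quoted above can be checked with the widely used conversion d = 2r/√(1 − r²), which assumes two groups of equal size. The sketch below is illustrative only: the source does not state which conversion was applied, and the function name `r_to_d` is an assumption introduced here.

```python
import math


def r_to_d(r: float) -> float:
    """Convert a correlation r to Cohen's d (assumes two equal-sized groups)."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2.0 * r / math.sqrt(1.0 - r * r)


# Values reported for Spangenberg et al.: r = 0.137 (95% CI 0.115 to 0.158)
for label, r in [("estimate", 0.137), ("lower limit", 0.115), ("upper limit", 0.158)]:
    print(f"{label}: r = {r:.3f} -> d = {r_to_d(r):.3f}")
```

Under this conversion the point estimate and upper limit round to the reported 0.28 and 0.32. The lower limit comes out marginally below the reported 0.24, so the original figures may reflect rounding or a slightly different conversion.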

Wood et al.

Wood et al. 10 meta-analysed 55 studies of the QBE. There was no restriction on the type of behaviour. Studies had to include an appropriate comparison control condition, but it is not clear if only randomised studies were included. The overall effect size from 116 tests of the QBE was a Cohen’s d of 0.24 (95% CI 0.18 to 0.30).

Like Spangenberg et al.,3 this meta-analysis focused on potential moderators that may inform possible mediating mechanisms. Univariate moderator analyses showed larger QBEs for greater attitude accessibility; lower ease of representation; asking prediction or expectation questions (compared with mixed items or intention items only); not asking anticipated regret questions; health, consumer or other behaviours (compared with prosocial and risky or undesirable behaviours); more socially desirable behaviours; less difficult behaviours; smaller time intervals between questioning and behaviour measurement; laboratory-based studies (compared with field studies); providing an incentive to respond; and student samples (compared with mixed, unreported or non-student samples). The authors interpret these results as showing little support for any of the proposed explanations of the QBE.

Wilding et al.

In the most recent meta-analysis of the QBE, Wilding et al. 11 included 65 papers reporting 94 tests. The authors note that this is between 12 and 30 papers more than previous meta-analyses. The authors included non-RCT designs as well as RCTs. Overall, the meta-analysis yielded a small but significant effect size (Cohen’s d = 0.15, 95% CI 0.11 to 0.19).

Moderator analyses showed larger effects for student samples; laboratory settings; question type that was self-prediction or intention; specific behaviours (especially flossing, health assessment and risky driving); desirable health behaviours; behaviours not measured at baseline; studies in which baseline questioning was carried out face to face; studies that included a per-protocol analysis (compared with an intention-to-treat analysis); when the research design was a non-RCT; shorter follow-up periods; and studies at high or unclear risk of bias (compared with low).

Mankarious and Kothe

Mankarious and Kothe53 conducted a meta-analysis of 66 TPB studies that measured health behaviours at two or more time points. These were prospective observational studies and not RCTs, as in the other quantitative reviews of the QBE. They calculated Cohen’s d to estimate the standardised mean difference for behaviour from baseline to the first follow-up measurement for each study. The average change in behaviour from baseline to follow-up across all studies was small and negative (Cohen’s d = –0.03, 95% CI –0.04 to 0.11). Length of follow-up was a significant moderator in that the change in behaviour from baseline to follow-up increased as the length of follow-up increased. Behaviour type was also a significant moderator in that socially desirable behaviours showed a small increase from baseline to follow-up, whereas socially undesirable behaviours showed a small but significant decrease. Subgroup analyses showed significant decreases in binge drinking, risky driving, sugar snack consumption and sun-protective behaviour.

The authors conclude that ‘Measurement of intention at baseline resulted in significant decreases in undesirable behaviours. Changes in undesirable behaviours reported in other studies may be the result of the mere measurement effect’. 53 However, there are several problems with this interpretation. The included studies measured all the TPB constructs at baseline and so it is difficult to attribute any mere measurement effect to the measurement of intention specifically. Although the authors argue that by selecting prospective studies research participant effects other than mere measurement can be ruled out, it is not clear that this is the case. For example, observed changes in behaviour could result from social desirability effects. It is also not clear whether the observed changes represent real changes in behaviour or simply changes in reporting.

The reviews varied in quality. AMSTAR 2 total scores (calculated by assigning 1 point for ‘yes’ and 0.5 points for ‘partial yes’, with a maximum of 16 points) ranged from moderate (i.e. 8.5 points for Rodrigues et al. 9 and 9 points for Wilding et al. 11) to low (i.e. 3 points for Spangenberg et al. 3 and 3.5 points for Wood et al. 10). Although the checklist is not designed to generate a total score, the score nevertheless gives an overall indication of quality.
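The scoring rule described above (1 point for ‘yes’, 0.5 points for ‘partial yes’, maximum of 16 points) can be sketched as follows. This is a minimal illustration, not the review team’s actual procedure: the function name, the treatment of every other rating as an error and the example ratings are all assumptions made here.

```python
# Points per rating, as described in the text; 'no' scoring 0 is implied.
POINTS = {"yes": 1.0, "partial yes": 0.5, "no": 0.0}


def amstar2_total(ratings):
    """Sum the points over the 16 AMSTAR 2 items for one review."""
    if len(ratings) != 16:
        raise ValueError("AMSTAR 2 has 16 items; expected 16 ratings")
    return sum(POINTS[rating.strip().lower()] for rating in ratings)


# Hypothetical ratings for illustration only (not taken from any included review)
example = ["yes"] * 7 + ["partial yes"] * 3 + ["no"] * 6
print(amstar2_total(example))  # 7 * 1 + 3 * 0.5 = 8.5
```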

McCambridge and Kypri

McCambridge and Kypri8 included eight trials of the effect of answering questions on alcohol drinking behaviour. Between-group differences were 13.7 (95% CI –0.17 to 27.6) grams of alcohol per week and 1 (95% CI 0.1 to 1.9) point on the Alcohol Use Disorders Identification Test score. Therefore, answering questions on drinking in brief intervention trials appears to alter subsequent self-reported behaviour.

Discussion

The four recent reviews3,9–11 of the QBE that included studies with a comparison or control condition yielded similar small, positive effect size estimates, ranging from 0.09 to 0.28 (Cohen’s d). These should not be considered to be completely independent estimates because of the overlap in included studies. However, the overlap was less than might be expected. The reviews come to different conclusions about the practical significance of these findings. For example, Wood et al. 10 state that ‘Within the health domain, a large number of studies have demonstrated that the QBE can be harnessed as an effective intervention . . .’. 10 By contrast, Rodrigues et al. 9 suggested that the observed effect size could overestimate the true effect size and that future studies should compare the QBE with simply sending reminders to perform the behaviour (see also the commentary by Rodrigues et al. 14).

The existing findings for moderators could be used to identify conditions under which a small or zero QBE could be expected, which would enable investigators to minimise the QBE. However, the findings for moderating variables are relatively weak. They are based on correlational evidence (i.e. study characteristics that are associated with larger or smaller effect sizes for the QBE) and the moderators are frequently correlated with each other. In some cases, the moderators were assessed only indirectly. For example, Wood et al. 10 assessed the potential moderator ‘attitude accessibility’ for each included study by multiplying an independent rating (by the review team) of attitude for the target behaviour and sample by the response rate to the questionnaire. The findings are often based on a small subset of studies and the p-values are often close to the 0.05 threshold. In many cases the results need to be replicated.

Rapid review of studies of reactivity to objective measurement of behaviour

In research on health behaviours, self-report measures of behaviour are ubiquitous. Such measures have well-known limitations (e.g. social desirability bias), and it is common to see recommendations for researchers to use so-called objective measures of behaviour that are assumed to have fewer limitations. However, objective measures may have their own limitations. For example, objective measures may have reactive effects on behaviour (e.g. measuring behaviour objectively may lead to increases in that behaviour).

To identify relevant background literature for the MERIT study this review aimed to identify studies that have examined the possible reactive effects of objective measurement of behaviour. The review included the following health-related behaviours: physical activity, diet/food choice, smoking, alcohol and drug use, dental behaviours and medication adherence.

The following databases were searched, limited to English-language articles published in peer-reviewed journals between 2008 and 2018 (inclusive): PsycINFO, MEDLINE and PubMed. Searches were limited to the titles and abstracts of the papers. The reference lists of identified papers were searched manually for additional relevant papers. Titles and abstracts were screened by one reviewer. Full-text versions of relevant articles were obtained and screened by the same reviewer.

Fourteen articles13,55–67 on physical activity and two papers68,69 on medication adherence were included in the review. No studies of smoking, alcohol, drugs, diet or dental behaviours were included.

Evidence of reactivity was found in some physical activity studies but not in others. Based on studies that used experimental research designs, the following broad conclusion for practice can be made:

- If the aim is to measure physical activity, rather than to increase it, do not ask participants to use an unsealed pedometer (i.e. a pedometer that discloses step counts to the participant) and to record their steps. Instead, use either an accelerometer or a sealed pedometer (i.e. a pedometer that does not disclose step counts to the participant) and exclude data from the first few days of use.

The two studies68,69 of reactivity to objective measurement of medication adherence using electronic containers suggest that objective measurement may increase adherence but that the effect is temporary and/or relatively small and so can be ignored, particularly if a run-in period is used (i.e. a period of monitoring from which adherence data are discarded).
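Discarding a run-in period, or the first few days of device wear, amounts to dropping every record dated before a cut-off relative to each participant’s first day of monitoring. The sketch below shows one way this could be done. The function name, the record layout, the step counts and the 3-day run-in are hypothetical choices made for illustration; the source does not specify a run-in length.

```python
from datetime import date, timedelta


def drop_run_in(records, run_in_days):
    """Return one participant's (day, value) records after removing the run-in period."""
    if run_in_days < 0:
        raise ValueError("run_in_days must be non-negative")
    if not records:
        return []
    first_day = min(day for day, _ in records)
    cutoff = first_day + timedelta(days=run_in_days)
    # Keep only records dated on or after the cut-off
    return [(day, value) for day, value in records if day >= cutoff]


# Hypothetical daily step counts for one participant over 7 days
start = date(2018, 1, 1)
steps = [9500, 9100, 8700, 7400, 7600, 7300, 7500]
records = [(start + timedelta(days=i), s) for i, s in enumerate(steps)]

kept = drop_run_in(records, run_in_days=3)  # assumed 3-day run-in
print(len(kept), kept[0][0])
```

In practice the length of the run-in would need to be justified for the behaviour and device in question, and specified before data collection.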

This review shows clearly that more work is needed on the possible reactive effects of objective measurement. Future work should consider using experimental designs rather than simply longitudinal studies of objective measurement, the findings from which are difficult to interpret. Experimental designs can be used to test whether or not there is a ‘main effect’ of objective measurement and to estimate the size of this effect. They can also be used to isolate key components of measurement that may account for the reactive effect (e.g. feedback of behavioural information to the participant via a display of steps on a pedometer as opposed to simply wearing a pedometer with no feedback).

With developments in technology and increasing awareness of the limitations of self-report measures of behaviour there is likely to be increasing use of objective measurement of behaviours in health behaviour research.

Existing guidance on measurement reactivity

The aim of the third rapid review was to identify existing guidance statements or recommendations on how to reduce bias from MR in trials.

A search was conducted of all CONSORT statements/papers and Medical Research Council (MRC) framework/guidance on complex interventions (all versions), as well as of MRC guidance on process evaluation in trials. Each document was reviewed for any relevant content related to guidance on reducing the risk of bias from MR in trials. Furthermore, the full texts of the studies included in the two rapid reviews discussed in Rapid ‘review of reviews’ of studies of measurement reactivity and Rapid review of studies of reactivity to objective measurement of behaviour were checked for any reference to existing guidance on MR. Members of the MERIT study team were also consulted to find out if they were aware of any existing guidance or recommendations related to MR.

We were not able to identify any existing guidance statements or recommendations on how to reduce bias from MR in trials from any of these sources. To the best of our knowledge, the present document is the first to present recommendations on how to reduce bias from MR in trials.

Delphi procedure to inform the scope of the recommendations

The MERIT study included a Delphi procedure70 to explore and, as far as possible, combine the views of experts to reach agreement on the precise issues that the recommendations will cover (i.e. the scope of the recommendations). The objectives of the Delphi procedure were to:

- seek expert opinion from stakeholders on the specific topics where recommendations on MR are needed and likely to produce the largest benefits
- identify key background literature and expertise on MR.

Methods

Delphi participants were purposively recruited and identified by examining authorship of relevant studies as well as using knowledge within the multidisciplinary research team of people with relevant expertise. The aim was to identify individuals with wide-ranging expertise relating to MR, trial design, conduct and analysis as well as to identify individuals who are likely to be key users of the final recommendations, including those involved in research synthesis and funding (see Acknowledgements).

Participants were asked to complete two rounds of an online questionnaire over a period of approximately 12 weeks from May 2018. The first round of the Delphi procedure involved 15 open-ended questions, allowing participants to share their views on what sorts of bias can arise from MR, the mechanisms by which measurement produces changes in people, and the characteristics of study design, interventions, measurement and context that can lead to such biases. Suggestions were also sought on key literature on MR.

Responses from round 1 of the Delphi were developed into themes that were then used to inform the round 2 questions. Participants from round 1 of the Delphi were asked to complete round 2. The second round of the Delphi presented participants with a list of specific topics that recommendations might consider. Participants were asked to rate their agreement with these suggested topics as well as to provide open-ended comments if they thought that any key issues were missing.

Results

A total of 40 participants took part in round 1 of the Delphi procedure (119 invitations were sent in total), covering a wide range of expertise, as shown in Table 2. Among these, 31 (78%) participants took part in the second round. The findings of the Delphi procedure were then provided to delegates at an expert workshop (see Expert workshop to develop content of the recommendations).

| Expertise | Number of Delphi round 1 respondents |
|---|---|
| Evidence synthesis | 17 |
| Trial conduct | 16 |
| Health psychology/behaviour change | 12 |
| Public health/epidemiology | 11 |
| Qualitative/mixed methods | 11 |
| Trial statistics | 7 |
| Sociology | 7 |
| Measurement methods | 7 |
| MR | 6 |
| eHealth | 6 |
| Research funding | 5 |
| Ecological momentary assessment | 3 |
| Unknown | 2 |
| Health economics | 1 |
| Lay/patient | 1 |

The results of round 2 of the Delphi process are shown in Table 3. Each specific topic for inclusion was categorised into a subgroup topic (see Table 3, last column). These subgroup topics formed major components of the agenda at the expert workshop held in October 2018. Participants were provided with the ratings in Table 3 to help inform discussion.

| Topic | Not at all important (1 point), % (n) [points] | Somewhat important (2 points), % (n) [points] | Moderately important (3 points), % (n) [points] | Very important (4 points), % (n) [points] | Extremely important (5 points), % (n) [points] | Response total | Points | Average | Subgroup |
|---|---|---|---|---|---|---|---|---|---|
| Strategies for carefully designing trials to reduce the risk of bias due to MR | 0 (0) [0] | 3.23 (1) [2] | 3.23 (1) [3] | 38.71 (12) [48] | 54.84 (17) [85] | 31 | 138 | 4.45 | Study design |
| How to predict when MR could lead to bias in a trial | 0 (0) [0] | 0 (0) [0] | 9.68 (3) [9] | 38.71 (12) [48] | 51.61 (16) [80] | 31 | 137 | 4.42 | Bias/appraisal |
| Approaches to ensure measurement/assessments are not confounded with the intervention | 0 (0) [0] | 3.33 (1) [2] | 13.33 (4) [12] | 40 (12) [48] | 43.33 (13) [65] | 30 | 127 | 4.23 | Study design |
| How to anticipate when MR is likely to be present in a trial | 0 (0) [0] | 0 (0) [0] | 12.9 (4) [12] | 58.06 (18) [72] | 29.03 (9) [45] | 31 | 129 | 4.16 | Study design/appraisal |
| How to identify risk of bias due to MR in existing trials | 0 (0) [0] | 3.23 (1) [2] | 6.45 (2) [6] | 61.29 (19) [76] | 29.03 (9) [45] | 31 | 129 | 4.16 | Appraisal |
| How to interpret trials that are at risk of bias due to MR | 0 (0) [0] | 0 (0) [0] | 16.13 (5) [15] | 51.61 (16) [64] | 32.26 (10) [50] | 31 | 129 | 4.16 | Appraisal |
| How to identify the types of bias arising from MR | 0 (0) [0] | 0 (0) [0] | 23.33 (7) [21] | 50 (15) [60] | 26.67 (8) [40] | 30 | 121 | 4.03 | Bias |
| Considerations in selecting measurement tools (e.g. objective vs. subjective) to reduce the risk of bias due to MR | 0 (0) [0] | 6.45 (2) [4] | 22.58 (7) [21] | 38.71 (12) [48] | 32.26 (10) [50] | 31 | 123 | 3.97 | Measures |
| Recommendations on how unobtrusive methods of data collection could be used to remove or reduce the risk of bias due to MR | 0 (0) [0] | 9.68 (3) [6] | 16.13 (5) [15] | 41.94 (13) [52] | 32.26 (10) [50] | 31 | 123 | 3.97 | Measures |
| Considerations in planning the timing and number of repeated measurements to reduce the risk of MR | 0 (0) [0] | 3.23 (1) [2] | 25.81 (8) [24] | 45.16 (14) [56] | 25.81 (8) [40] | 31 | 122 | 3.94 | Study design |
| Provision of hypothetical/existing study examples to illustrate principles behind guidelines | 0 (0) [0] | 3.23 (1) [2] | 29.03 (9) [27] | 41.94 (13) [52] | 25.81 (8) [40] | 31 | 121 | 3.9 | All |
| Strategies for statistical analyses of trial outcome data that aim to estimate, and adjust for, the risk of bias due to MR | 0 (0) [0] | 6.67 (2) [4] | 30 (9) [27] | 33.33 (10) [40] | 30 (9) [45] | 30 | 116 | 3.87 | Analysis |
| Strategies to improve use of self-report measures to reduce the risk of MR | 0 (0) [0] | 6.45 (2) [4] | 29.03 (9) [27] | 35.48 (11) [44] | 29.03 (9) [45] | 31 | 120 | 3.87 | Measures/trial conduct |
| Considerations in undertaking pilot studies to identify potential measurement reactions and how they may be addressed | 0 (0) [0] | 9.68 (3) [6] | 22.58 (7) [21] | 48.39 (15) [60] | 19.35 (6) [30] | 31 | 117 | 3.77 | Study design |
| How to conceal measurements from participants or limit feedback as a way to reduce the risk of MR | 0 (0) [0] | 19.35 (6) [12] | 16.13 (5) [15] | 32.26 (10) [40] | 32.26 (10) [50] | 31 | 117 | 3.77 | Measures/trial conduct |
| Recommendations on how the research team should interact with research participants to reduce the risk of MR | 0 (0) [0] | 3.33 (1) [2] | 30 (9) [27] | 53.33 (16) [64] | 13.33 (4) [20] | 30 | 113 | 3.77 | Trial conduct |
| Strategies for handling risk of bias when a study’s aims require different measurement procedures across arms of a trial | 0 (0) [0] | 6.45 (2) [4] | 35.48 (11) [33] | 35.48 (11) [44] | 22.58 (7) [35] | 31 | 116 | 3.74 | Analysis |
| The circumstances in which one might use non-standard trial designs (e.g. Solomon four-group designs) to assess extent of bias and/or yield unbiased estimates of effects | 0 (0) [0] | 6.45 (2) [4] | 38.71 (12) [36] | 32.26 (10) [40] | 22.58 (7) [35] | 31 | 115 | 3.71 | Study design |
| Identification of gaps in knowledge on MR and how to minimise risk of bias in trials due to MR | 0 (0) [0] | 19.35 (6) [12] | 19.35 (6) [18] | 35.48 (11) [44] | 25.81 (8) [40] | 31 | 114 | 3.68 | All |
| Identification of research priorities for better understanding of MR and potential for bias | 3.23 (1) [1] | 16.13 (5) [10] | 19.35 (6) [18] | 35.48 (11) [44] | 25.81 (8) [40] | 31 | 113 | 3.65 | All |
| Which fields of research are most affected by bias due to MR | 0 (0) [0] | 12.9 (4) [8] | 29.03 (9) [27] | 45.16 (14) [56] | 12.9 (4) [20] | 31 | 111 | 3.58 | Bias/appraisal |
| How to assess extent of MR during an internal pilot phase of a trial | 0 (0) [0] | 16.13 (5) [10] | 25.81 (8) [24] | 41.94 (13) [52] | 16.13 (5) [25] | 31 | 111 | 3.58 | Analysis/trial conduct |
| Ethical implications of strategies to address MR | 6.45 (2) [2] | 16.13 (5) [10] | 25.81 (8) [24] | 32.26 (10) [40] | 19.35 (6) [30] | 31 | 106 | 3.42 | All |
| How biases caused by MR might relate to existing risk-of-bias frameworks | 0 (0) [0] | 12.9 (4) [8] | 45.16 (14) [42] | 35.48 (11) [44] | 6.45 (2) [10] | 31 | 104 | 3.35 | Bias |
| Theoretical explanations that may plausibly explain the effects of measurement on people who have been measured (mechanisms) | 3.23 (1) [1] | 22.58 (7) [14] | 32.26 (10) [30] | 29.03 (9) [36] | 12.9 (4) [20] | 31 | 101 | 3.26 | Mechanisms |
| Recommendations on selection of research participants (recruitment strategies and inclusion criteria) to reduce the risk of MR | 6.45 (2) [2] | 32.26 (10) [20] | 32.26 (10) [30] | 16.13 (5) [20] | 12.9 (4) [20] | 31 | 92 | 2.97 | Trial conduct |
| How research participants may make use of measurement for their own purposes (which may lead to bias) | 0 (0) [0] | 43.33 (13) [26] | 30 (9) [27] | 16.67 (5) [20] | 10 (3) [15] | 30 | 88 | 2.93 | Trial conduct |
| Total number of respondents (for this survey round) | 31 | | | | | | | | |
| Total number of responses | 832 | | | | | | | | |
| Point average (total points all rows/responses for all rows) | 3.79 | | | | | | | | |
| Point weighted average (total points all rows/responses for all rows) | 3.79 | | | | | | | | |
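The points and averages in Table 3 follow from weighting each response count by its rating (1 to 5 points) and dividing by the number of responses. The sketch below reproduces that arithmetic for the first and last topics in the table. The function name `score_topic` is an assumption introduced for illustration; the counts are those reported in the table.

```python
def score_topic(counts):
    """Score one topic from its five response counts (ratings 1 to 5, in order).

    Returns (number of responses, total points, average rounded to 2 decimals).
    """
    if len(counts) != 5:
        raise ValueError("expected five counts, one per rating from 1 to 5")
    n = sum(counts)
    points = sum(rating * count for rating, count in enumerate(counts, start=1))
    return n, points, round(points / n, 2)


# 'Strategies for carefully designing trials ...' (first row of Table 3)
print(score_topic([0, 1, 1, 12, 17]))  # (31, 138, 4.45)

# 'How research participants may make use of measurement ...' (last topic row)
print(score_topic([0, 13, 9, 5, 3]))   # (30, 88, 2.93)
```

The overall point average is obtained in the same way, by dividing the total points across all topics by the total number of responses across all topics.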

Expert workshop to develop content of the recommendations

An expert workshop was held in Manchester on 4 and 5 October 2018. A total of 23 delegates attended the workshop. The delegates covered a broad range of expertise similar to that covered by the Delphi procedure (see Acknowledgements). Delegates were provided with reports of the evidence reviews (see Asking questions changes health-related behaviour: an updated systematic review and meta-analysis of randomised controlled trials, Rapid ‘review of reviews’ of studies of measurement reactivity, Rapid review of studies of reactivity to objective measurement of behaviour and Existing guidance on measurement reactivity) and the results of the Delphi procedure (see Delphi procedure to inform the scope of the recommendations) and were encouraged to refer to these as a basis for further discussions. The content of appropriate recommendations was discussed by the workshop delegates and these statements form the central part of the current recommendations. As informed by the Delphi procedure, discussions were conducted in subgroup and plenary sessions and structured around study design and bias, measurement procedures, appraisal of existing trials, trial conduct and statistical analysis.

The MERIT study team have written the current report based on notes of the workshop discussions. All workshop delegates were given the opportunity to review and comment on the report and recommendations before they were finalised.

A notable limitation of the MERIT study was the shortage of high-quality evidence regarding the extent of MR (with some notable exceptions) and the circumstances that are likely to bring it about. There is a particular lack of direct evidence regarding the extent to which MR produces bias in trials. Accordingly, development of the final recommendations has relied extensively on indirect evidence processed by expert opinion, which is contingent on reasonable inference regarding the likely consequences of measurement in producing bias.

Chapter 4 Recommendations

Parts of this chapter have been reproduced with permission from French et al. 27 This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: https://creativecommons.org/licenses/by/4.0/. The text below includes minor additions and formatting changes to the original text.

The present chapter makes a series of recommendations for people designing and conducting trials. In developing these recommendations, a limitation is the current state of knowledge. There is some evidence regarding the circumstances under which measurement will lead to reactivity, but little direct evidence about the extent to which it causes bias, let alone the effectiveness of any steps that could be taken to reduce bias. 2 Given this, many of these statements are broad recommendations about issues that may be useful to consider on the basis of indirect evidence processed by expert opinion.

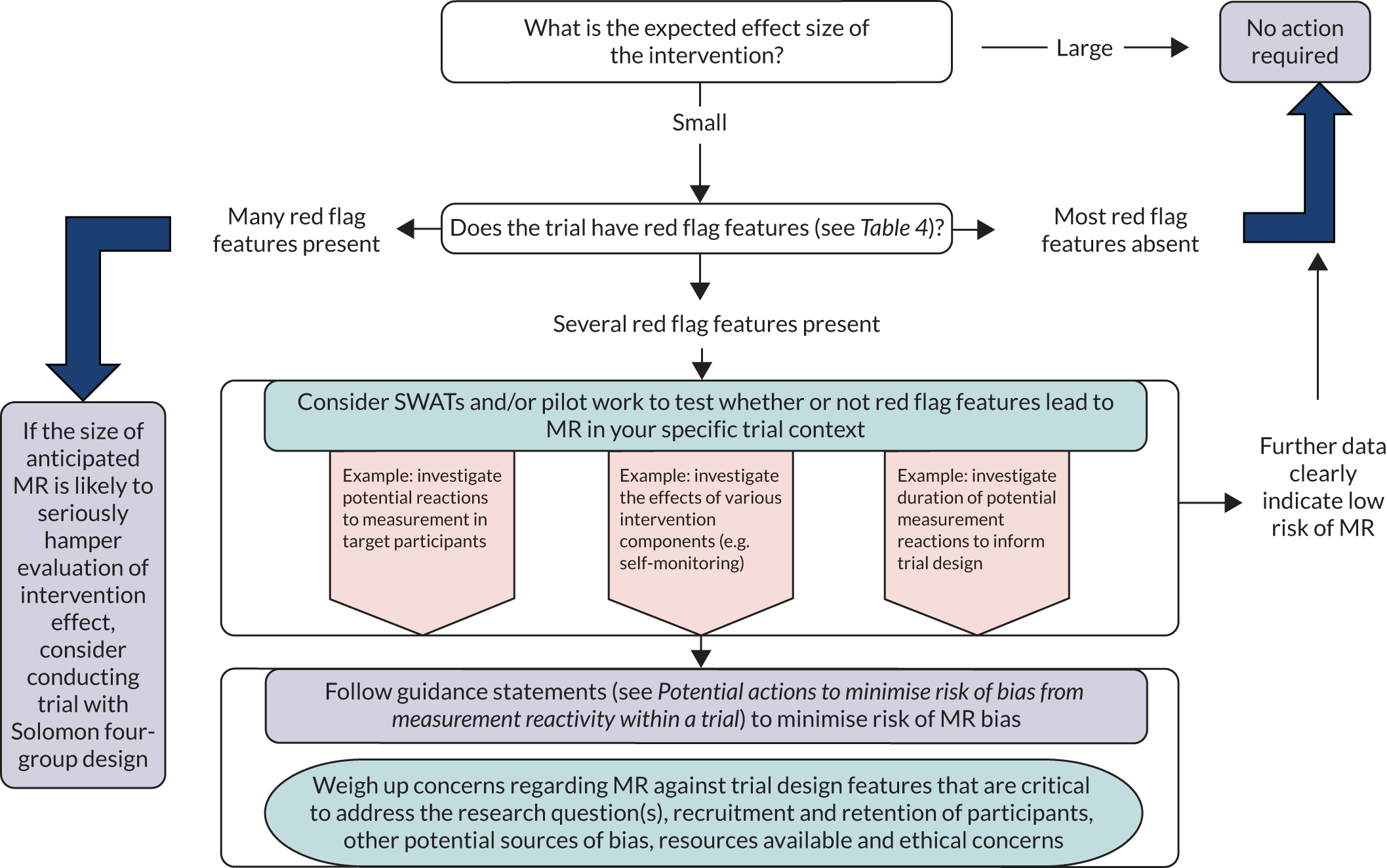

The recommendations are grouped into three broad types: (1) identify whether or not MR is likely to be a major source of bias for a new trial, (2) collect further data to inform decisions about whether or not there is risk of bias resulting from MR and (3) potential actions to minimise risk of bias from MR within a trial.

Identify whether or not measurement reactivity is likely to be a major source of bias for a new trial

It is worth noting that, in some cases, the risk of bias from MR in a particular trial may be so small that it can safely be ignored. Consideration of the ways in which MR may lead to bias, as well as other potential sources of bias, and how bias can be prevented lies at the heart of rigorous approaches to trial design and conduct. No triallist can discount selection bias or other forms of bias without properly accounting for the risk in the specific circumstances of their trial. 25 In many circumstances, although bias from MR may be present, it is likely to be of small magnitude compared with other sources of bias, such as failure of randomisation. 9 It may also be impossible to isolate bias from MR if there are other sources of bias. The features listed in Table 4 suggest circumstances in which MR bias may be more important. It is, however, worth considering that reactions to assessment can exacerbate or contribute to other sources of bias that have previously received more attention. 7 As discussed in Chapter 2, reactions to measurement can be implicated in several well-known forms of bias.

| Criterion indicating risk of bias | Circumstances under which risk of bias is likely to be higher |
|---|---|
| Participant selection | |
| Recruitment | Selection on personal motivation for participation in the trial |
| Eligibility criteria | Restrictive eligibility criteria |
| Education | More educated (e.g. university students) |
| Measurements | |
| Features of health outcome of interest | |
| Participant awareness of health-related outcome of interest | Participants aware of outcome of interest (open) |
| Nature of health-related outcomes | Outcomes focused on behaviour or anxiety; health-promoting behaviours (e.g. physical activity) |
| Social desirability of health outcome | Outcomes with well-recognised social norms (e.g. body weight) |
| Follow-up | |
| Number of measurement occasions | Measurements repeated on several occasions |
| Length of time to follow up | Short in relation to possible measurement effects |
| Features of measurement procedures/tools | |
| Equivalence of measurement procedures across trial arms | Differential across trial arms |
| Similarity between measurement and BCTs | Measurement directly mimics BCTs |
| Source of data | New data collected specifically for this study |
| Measurements open to subjectivity | Self-report measures |
| Disclosure of measured values to participants | Values disclosed to participants (immediately) |
| Burden of measurement task | Onerous for participant |
| Complexity of measurement task | Complex for participant |
| Measurement framed in terms of goals/targets | Participants measured against specific goals/targets |
| Context | Laboratory setting |
| Interventions and comparators | |
| Nature of the intervention | Behavioural and/or self-monitoring components included |
| Blinding to arm allocation | Lack of blinding to arm allocation |
| Process evaluation | |
| Process measures | Measures included are assessing mechanisms of action on the primary outcome |
| Timing | Conducted before/during trial outcome assessments |
| Trial arms included | Conducted in only one trial arm |
| Number of data collected | Extensive data collected from all participants |

Recommendation 1: consider potential for measurement reactivity causing bias at the design stage of a trial

It will be easier to prevent MR causing bias than it will be to deal with the consequences of bias through analysis after the event. Therefore, researchers should consider at the outset whether or not the trial they are planning is likely to produce this bias. It is important to consider the many measurement and assessment processes involved in a trial. This may include assessment of eligibility, baseline assessments, assessments of adherence or fidelity, process evaluations (quantitative and qualitative) and interim/final outcome assessments. Each of these measurement or assessment processes has the potential for causing MR and thereby introduces the potential for bias.

It is also important to be clear when measurement is an integral part of the intervention and, hence, should not be considered a source of bias per se, although there may be contamination issues to consider carefully. In many studies ongoing measurement may be part of an intervention (i.e. it would be part of the intervention were it to be rolled out in practice outside a trial). For instance, many weight management programmes may include regular measurement of body weight as an integral part of the intervention.71 In this case, although regular weighing would be carried out only in the intervention group and not in the control group, any reactions to this measurement would not constitute bias (i.e. the reactions are due to an integral part of the intervention). By contrast, if such regular weighing were not part of the programme were it to be rolled out, then the assessments would be a feature of the trial design rather than the intervention design, and this imbalance between experimental arms has the potential to produce bias. For these reasons, it makes sense to have a single clear purpose for each measurement.

Recommendation 2: consider potential for measurement reactivity as a source of bias throughout the research process

As one moves from early trial planning through detailed study design to giving attention to issues arising from study conduct, it is important to consider all instances of measurement that can occur throughout the research process. For instance, when assessing eligibility of a potential participant for a trial, disclosure of health status (e.g. blood pressure or cholesterol level) to research participants at the beginning of a clinical trial could potentially lead to measurement reactions. For example, in a trial evaluating the effect of a behavioural intervention compared with usual care, disclosure of health status to participants could motivate the comparison group to seek additional support. Participants’ knowledge of their health status could also make them more or less receptive to an intervention.72 This might be particularly problematic when subgroups of the population with a particular health risk are recruited to take part in a trial (e.g. people with obesity or people who drink alcohol heavily).8 It is not difficult to imagine how, by simply engaging in the measurements required to assess eligibility for the trial, participants become aware of their health status and might change their thoughts, emotions or behaviour as a result. In such instances, there is a need to be careful around communications with participants regarding how measurements are used.

At the consent stage, participants are often informed of the trial objectives through the patient information sheet as well as through possible informal interactions with trial personnel. Participants may then perceive specific behavioural trial outcomes to be implied norms or goals in the context of the research. This may predispose participants to MR, which may introduce bias subsequent to randomisation.4 Patient information sheets should be carefully drafted so as to emphasise the concept of equipoise and the equal value attached to alternative trial interventions and outcomes. Patients may be asked to consent to masking of measurements and non-disclosure of measurement values (see Recommendation 13: consider the potential benefits of masking measures and/or withholding feedback of measured values against ethical considerations). Trial personnel should follow standard operating procedures (SOPs) that regulate informal communications with participants at the recruitment/consent stage.

In some trials, for example cluster RCTs73 and others based on routine health records,32,33 consent may not always be required at the individual participant level. Consent at general practice level, for example, enables trials to be conducted with lower levels of awareness among patients of research participation. Gaining consent at a group level in this way can help to avoid participants’ awareness of the health outcome of interest for the trial and the potential for MR to take place based on this knowledge. However, MR alone is unlikely to be a main justification for choice of a cluster randomised design.

Baseline measurements in a trial typically contribute to efficient design by enabling more precise estimation of the intervention effect.74 However, when trial participants are exposed to baseline measurements this may contribute to MR and heighten risk of bias. This is because experiences of a previous measurement within a trial may influence responses at later measurement occasions and/or interact with the study intervention. These testing effects may differ according to subsequent trial arm allocation.
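As a rough illustration of the precision gain referred to above, the following is a standard large-sample result for baseline-adjusted analysis and is not taken from this report. The symbols are assumptions introduced here: a two-arm trial with n participants per arm, outcome variance σ² in each arm, and correlation ρ between baseline and follow-up measurements of the outcome.

```latex
\[
\operatorname{Var}\bigl(\hat{\Delta}_{\text{unadjusted}}\bigr) = \frac{2\sigma^{2}}{n},
\qquad
\operatorname{Var}\bigl(\hat{\Delta}_{\text{adjusted}}\bigr) \approx \frac{2\sigma^{2}\,(1-\rho^{2})}{n}
\]
```

With ρ = 0.5, for example, adjusting for baseline reduces the variance of the estimated intervention effect by about 25%. This gain in precision has to be weighed against the possibility that the baseline measurement itself contributes to MR.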

It is generally recognised that recording the delivery of face-to-face interventions results in greater adherence to intervention protocols, which can be problematic when fidelity assessments are taking place.75 This is probably unproblematic in efficacy or ‘proof of principle’ studies, in which high levels of adherence allow easier interpretation of whether or not an intervention delivered as intended demonstrates efficacy.76 However, in effectiveness studies, in which one is aiming to examine the effects of an intervention delivered in more ‘real-world’ settings and in which such fidelity assessments would not be enacted in routine implementation, any reactions to these assessments form an example of MR, as discussed in the present document. When the fidelity assessments are enacted in routine implementation (e.g. as part of quality assurance processes), they can be considered to be part of an intervention and, hence, any reactions to these assessments are not problematic. Assuming that the heightened adherence prompted by fidelity assessments would not be part of future practice (should the intervention prove successful), and that such adherence is likely to result in greater effects, then the use of fidelity assessments is likely to result in bias through overestimation of intervention effects (see Chapter 2).

It is good practice to draw up SOPs for the measurement procedures that encompass issues noted in this report, including consistency of measurement procedures across trial arms, masking and non-disclosure of measurement values, number of measurement occasions, etc. The SOPs should extend to regulating informal contacts/communications between trial participants and health-care providers or trial personnel either at the time measurements are taken or on other occasions.

The prospect of future measurement may also produce changes in research participants. For example, anticipation of measurement of body weight can lead to changes in feelings of self-efficacy and self-control, as well as increased accountability for one’s own actions,77 which could potentially affect adherence to physical activity and healthy eating guidance, particularly shortly before such measurements. Similarly, electronic monitoring of medication adherence can lead to changes in adherence.69 That is, knowledge or anticipation of measurement or disclosure of outcomes, as well as actual measurements conducted, should be considered as potential sources of reactivity.

Recommendation 3: consider specific trial features that may indicate heightened risk of bias due to measurement reactivity

Table 4 provides a series of ‘red flags’ or trial features that might indicate that MR should be considered a possible risk of bias in a study. Based on the consensus views of experts consulted for this report, it highlights features of study participants, types of interventions, features of study design and measurement issues where MR may be more likely to occur and lead to bias. Table 4 should be consulted alongside the explanatory text in Appendix 5. When direct evidence is available to support entries in the table, then this is cited in Appendix 5; otherwise, the entries are based on consensus views of experts consulted as part of the MERIT study (see Chapter 3 for a description of the process used to develop recommendations).

The entries in Table 4, or ‘red flag’ features of study design, indicate only potential for bias from MR, which may be absent on closer examination or identified as a possibility and mitigated through careful study design. The potential for measurement as a co-intervention leading to bias is implicit but not widely articulated in existing tools intended to assist in study design.78 Table 4 provides a checklist to identify such concerns. Researchers should refer to Table 4, and associated text in Appendix 5, to determine whether or not MR and the risk of bias arising from it are likely to be particularly relevant to their study and, if so, should further consider the following recommendations about reducing the potential for bias. Some key issues contained in Table 4 are illustrated in worked examples contained in Boxes 3 and 4.
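One way a trial team might apply such a checklist in practice is sketched below. This is a minimal illustration only: the dictionary keys, the `screen_trial` helper and the example trial are hypothetical names introduced here, and the entries cover just a subset of the Table 4 features. As noted above, a flagged item indicates potential for bias to be examined further, not evidence that bias is present.

```python
# Hypothetical encoding of a subset of the Table 4 'red flags'.
# Keys and the helper below are illustrative, not part of the MERIT study.
RED_FLAGS = {
    "differential_measurement": "Measurement procedures differ across trial arms",
    "measurement_mimics_bcts": "Measurement directly mimics BCTs",
    "self_report": "Self-report measures open to subjectivity",
    "values_disclosed": "Measured values disclosed to participants",
    "onerous_measurement": "Measurement task onerous for participant",
    "unblinded": "Lack of blinding to arm allocation",
    "process_eval_one_arm": "Process evaluation conducted in only one trial arm",
}


def screen_trial(features):
    """Return descriptions of the red flags present in a trial design.

    `features` maps a red-flag key to True/False; missing keys count as absent.
    """
    return [desc for key, desc in RED_FLAGS.items() if features.get(key, False)]


# Hypothetical trial design used only to exercise the helper.
example_trial = {
    "differential_measurement": True,
    "values_disclosed": True,
    "unblinded": True,
    "self_report": False,
}

flags = screen_trial(example_trial)
for description in flags:
    print("Red flag:", description)
```

Any flag returned would then be checked against the explanatory text in Appendix 5 before deciding whether mitigation is needed.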

We describe below a hypothetical trial in which there is a high likelihood of bias due to MR to illustrate the issues highlighted in Table 4. Please note that this example includes several features of poor trial design. This is deliberate to illustrate issues that are explicitly related to ‘red flags’ in Table 4.

A trial is designed to assess the effectiveness of an intervention to promote maintenance of weight loss in people with type 2 diabetes who have lost 5 kg, relative to usual care. The intervention is designed to be delivered entirely online and involves self-monitoring of body weight, physical activity and dietary behaviours as well as encouragement to seek social support for maintenance of weight loss. To assess the intervention’s mechanism of effect, 25% of participants in the intervention group are required to engage in a process evaluation. This involves patients attending their general practices to be weighed. These participants are also asked to complete questionnaires about their dietary and physical activity behaviours online. A different subsample is asked to participate in focus groups to discuss how useful they found the online intervention.

This trial has numerous features that suggest it is likely to produce a biased outcome due to MR. First, the intervention is a fairly minimal contact digital intervention and so any effect of this intervention on body weight is likely to be small. Therefore, the effects of MR do not have to be large to have a relatively substantial effect. Second, all participants are likely to be motivated to achieve the outcome, given that they have already lost 5 kg in body weight, and so they are likely to be very interested in the results of measurements made. Third, there is unbalanced measurement, with those in the intervention group receiving substantially more process measurement (i.e. attendance at general practices involves a quite burdensome form of measurement). Fourth, the process evaluation mimics some of the intended effects of the intervention because it involves (1) regular weighing, (2) regular reflection on behaviour through completion of questionnaires and (3) contact with other people who could provide social support. Fifth, because the study is not blinded, the staff at the general practices will be aware that the intervention participants are trying to maintain weight loss and may offer encouragement or other advice. The mere fact that body weight will be monitored by others may promote attempts to maintain weight loss. Last, the outcomes of measurement are likely to be available to intervention participants and so they may be receiving more information than participants in the other experimental condition.
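The first point above, that MR need not be large to matter when the intervention effect is small, can be illustrated with simple arithmetic. The sketch below assumes a purely additive model in which MR shifts each arm's mean outcome by a fixed amount and does not interact with the intervention. All values are hypothetical and the function names are introduced here for illustration.

```python
def observed_effect(true_effect, mr_intervention, mr_control):
    """Observed between-arm difference under a simple additive model.

    Assumes (for illustration only) that MR shifts each arm's mean outcome
    by a fixed amount and does not interact with the intervention.
    """
    return true_effect + (mr_intervention - mr_control)


def relative_bias(true_effect, mr_intervention, mr_control):
    """Bias expressed as a fraction of the true effect."""
    bias = observed_effect(true_effect, mr_intervention, mr_control) - true_effect
    return bias / true_effect


# Hypothetical values in kg: the same 0.5 kg of differential MR applied
# to a small and to a large true intervention effect.
small = relative_bias(true_effect=1.0, mr_intervention=0.5, mr_control=0.0)
large = relative_bias(true_effect=5.0, mr_intervention=0.5, mr_control=0.0)

# The same MR occurring equally in both arms cancels under this model.
balanced = relative_bias(true_effect=1.0, mr_intervention=0.5, mr_control=0.5)

print(f"Small true effect: relative bias = {small:.0%}")
print(f"Large true effect: relative bias = {large:.0%}")
print(f"Balanced measurement: relative bias = {balanced:.0%}")
```

Under these assumptions, the same differential MR inflates the small effect by half but the large effect by only a tenth, and it cancels when measurement is balanced across arms. Where MR interacts with the intervention, the additive assumption fails and balanced measurement would not remove the bias.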

This box considers what actions could reasonably be taken to address the high risk of bias in the hypothetical trial described in Box 3. Please note that, even in the absence of the onerous process evaluation, the evaluation of this particular intervention in a trial carries the risk of measurement reactions introducing bias because measurement is intrinsic to both intervention and trial design. The selection criteria create risks to be considered because they seek participants who have already demonstrated that they are motivated volunteers and they may have varying strengths of preferences for allocation. Communications with trial participants from the information sheet onwards need to be carefully constructed throughout the study to provide assurance about the equal value attached to both trial arms.

The process evaluation makes measurement differential between arms, and so a strong justification is required, one that weighs the risks of bias against other considerations. To inform this decision-making, it would be helpful to elaborate a programme theory of how this intervention seeks to produce the weight loss maintenance outcome. This could be useful because it will clarify thinking about how measurement and other features of study design may operate in relation to the possible mechanisms of effect.