Notes

Article history

The research reported in this issue of the journal was commissioned by the HTA programme as project number 07/54/01. The contractual start date was in May 2007. The draft report began editorial review in March 2009 and was accepted for publication in November 2009. As the funder, by devising a commissioning brief, the HTA programme specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None

Permissions

Copyright statement

© 2010 Queen’s Printer and Controller of HMSO. This journal is a member of and subscribes to the principles of the Committee on Publication Ethics (COPE) (http://www.publicationethics.org/). This journal may be freely reproduced for the purposes of private research and study and may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Introduction

Background

The National Institute for Health and Clinical Excellence (NICE) in England and Wales and similar structures elsewhere are required to make health-policy decisions that are relevant, evidence-based and transparent. Decision-analytic modelling is recognised as being well placed to support this process. 1 The key role that models play is, however, reliant on their credibility. Credibility in decision models depends on a range of factors including the coherence of the model with the beliefs and attitudes of the decision-makers, the decision-making framework within which the model is used, the validity of the model as an adequate representation of the problem in hand and the quality of the model.

The predominant software platform for Health Technology Assessment (HTA) models is the spreadsheet. In studies of generic spreadsheet system development, there is abundant evidence of the ubiquity of errors and some evidence of their potential to impact critically on decision-making. The European Spreadsheet Risks Interest Group (EuSPRIG) maintains a website recording research on risks in spreadsheet development and on public reports of errors (www.eusprig.org/ accessed 20 February 2009). In 1993, Cragg and King2 undertook an investigation of spreadsheet development practices in 10 organisations and found errors in 25% of the spreadsheets considered. Since 1998, Panko3 has maintained a review of spreadsheet errors and this suggests that spreadsheet error rates are consistent with error rates in other programming platforms, and when last updated in 2008 error rates had not shown any marked improvement.

To date, there has been no formal study of the occurrence of errors in models within the HTA domain. However, high-impact errors have been recorded, perhaps the earliest being the case of the sixth stool guaiac test, where Neuhauser and Lewicki4 estimated an incremental cost of $47 million per case of colorectal cancer detected. Brown and Burrows5 subsequently identified an error in the model and generated a comparatively modest corrected estimate. The grossly inflated incorrect figure has, however, subsequently been used (and is still in use) by authors including Culyer6 and Drummond et al. 7,8 in their seminal texts to demonstrate the importance of incremental analysis in health economics. The failure in this case was traced back to an error in the interpretation and subsequent modelling of diagnostic test characteristics. In a study in 2000 investigating the quality of models used to support a national policy-making process, Australia’s Pharmaceutical Benefits Advisory Committee (PBAC) reported that around 60% were flawed in some way and 30% demonstrated problems in the modelling. 9 Although it is tempting to think that things may get better over time, a repeat retrospective analysis reported in 2008 demonstrated that the situation appeared to have deteriorated, with 82% (203 out of 247) of models reviewed being considered by PBAC to be flawed in some respect. 10

Errors in software implementing mathematical decision models or simulation exercises are a natural and unavoidable part of the software development process. 11 This is recognised outside the HTA domain and accordingly there exists research, for example in the computer sciences field and in the operational research field, in model testing and verification. In contrast, the modelling research agenda within HTA has developed primarily from a health-services research, health economics and statistics perspective and there has, to date, been very little attention paid to the processes involved in model development. 12 The extent of this shortcoming is reflected in the fact that guidance on good practice either acknowledges the absence of methodological and procedural guidance on model development and testing processes or makes no reference to the issue. 13–17

A wide range of strategies could be adopted with the intention of avoiding and identifying errors in models. These include improving the skills of practitioners, improving modelling and decision-making processes, improving modelling methods, improving programming practice and improving software platforms. Within each of these categories there are many further options. For example, improving the skill base of practitioners might include the development and dissemination of good practice guides, identification of key skills and redesign of training and education, sponsoring of skills workshops, and determination of minimum training/skill requirements. These interventions might in turn impact across the whole range of disciplines involved in the decision support process, including information specialists, health economists, statisticians, systematic reviewers and, not least, modellers themselves. Outside the HTA domain, Panko identifies a wide range of initiatives for best practice in spreadsheet development, testing and inspection, including such things as guidelines for housekeeping structures in spreadsheets. 18 However, Panko also goes on to review the evidence on the effectiveness of these interventions and identifies first a lack of high-quality research on effectiveness and second that what evidence does exist is at best equivocal. 18 This, taken together with the evidence that models are not improving over time, indicates that caution should be exercised in recommending quick-fix, intuitively appealing solutions.

In the absence of an understanding of error types and the causes of errors, it is difficult to evaluate the relative merits of such initiatives and to develop an efficient and effective strategy for improving the credibility of models. This study seeks to understand the nature of errors within HTA models, to describe current processes for minimising the occurrence of such errors and to develop a first classification of errors to aid discussion of potential strategies for avoiding and identifying errors.

Aim and objectives

The aim of this study is to describe the current comprehension of errors in the HTA modelling community and to generate a taxonomy of model errors, to facilitate discussion and research within the HTA modelling community on strategies for reducing errors and improving the robustness of modelling for HTA decision support.

The study has four primary objectives:

- to describe the current understanding of errors in HTA modelling, focusing specifically on
  - types of errors
  - how errors are made
- to understand current processes applied by the technology assessment community for avoiding errors in development, debugging models and critically appraising models for errors
- to use HTA modellers’ perceptions of model errors together with the wider non-HTA literature to develop a taxonomy of model errors
- to explore potential methods and procedures to reduce the occurrence of errors in models.

In addition, the study describes the model development process as perceived by practitioners working within the HTA community; this emerged as an intermediate objective for considering the occurrence of errors in models.

Chapter 2 Methods

Methods overview

The project involved two methodological strands:

- A methodological review of the literature discussing errors in modelling and principally focusing on the fields of modelling and computer science.
- In-depth qualitative interviews with the HTA modelling community, including:
  - Academic Technology Assessment Groups involved in supporting the NICE Technology Appraisal Programme (hereafter referred to as Assessment Groups)
  - Outcomes Research and Consultancy Groups involved in making submissions to NICE on behalf of the health-care industry (hereafter referred to as Outcomes Research organisations).

In-depth interviews with HTA modellers

Overview of qualitative methods

Interviews with 12 mathematical modellers working within the field of HTA modelling were undertaken to obtain a description of current model development practices and to develop an understanding of model errors and strategies for their avoidance and identification. From these descriptions, issues of error identification and prevention were explored. 19

Sampling

The interview sample was purposive, comprising one HTA modeller from each Assessment Group contracted by the National Coordinating Centre for Health Technology Assessment (NCCHTA) to support NICE’s Technology Appraisal Programme, as well as HTA modellers working for UK-based Outcomes Research organisations. The intention of the sampling frame was to identify diversity across modelling units. Characteristics of the interview sample are detailed at the end of this chapter.

Qualitative data collection

Face-to-face in-depth interviews19 were used as the method of data collection; this is particularly appropriate given their focus on the individual and the need to elicit a detailed personal understanding of the views, perceptions, preferences and experiences of each interviewee. A topic guide was developed by the research team that enabled the elicitation of demographic information, as well as views and experiences of the modelling process and issues around modelling error (see Appendix 1). Interviews began with a discussion of background details and progressed to a more in-depth exploration of modellers’ experience and knowledge. The topic guide was piloted internally with one of the authors (AR) to ascertain clarity of the questions; the topic guide was subsequently revised. The topic guide was designed to facilitate the flow of the interviews, although the interviews were intentionally flexible and participant-focused. During the interviews, interviewees were asked about their personal views rather than acting as representatives of their organisation.

During each interview the participant was asked to describe their view of the model development process. This description was sketched onto paper by a second researcher and subsequently reviewed with the participant who was asked to confirm the sketch or ‘map’ as a representative and accurate record of their view. This diagrammatic personal description of the model development process was instrumental within the interviews, representing a personalised map against which to locate model errors according to the perceptions of the interviewee, thereby guiding the content and agenda of the remainder of the interview. 20 ‘Prompting’ was used to ‘map’ and ‘mine’ interviewee responses while ‘probing’ questions were used to further elaborate responses and to provide richness of depth in the interviewees’ responses.

All interviews were undertaken between September and October 2008 by three members of the research team (PT, JC and AR). All three interviewers have experience in developing and using health economic models. One of the interviewers (PT) received formal training in in-depth interviewing techniques and analysis of qualitative data, this training being shared with the modelling team working with the advice of a qualitative reviewer (MJ). All but two interviews were paired, involving a lead interviewer and a second interviewer. The role of the second interviewer was to facilitate the elicitation of the model development process by developing charts and to ensure relevant issues were explored fully by the lead interviewer. The remaining two interviews were undertaken on a one-to-one basis. Each interview lasted approximately 1½ hours.

Data analysis and synthesis

Interviews with participants were recorded and transcribed verbatim; all qualitative data were analysed using the Framework approach. 21 Framework is an inductive thematic framework approach particularly suited to the analysis of in-depth qualitative interview data, involving a continuous and iterative matrix-based approach to the ordering and synthesising of qualitative data. The first step in the analysis involved familiarisation with the interview data, leading to the identification of an initial set of emergent themes (e.g. definition of model error), subthemes (e.g. fitness for purpose) and concepts. Transcript data were then indexed according to these themes, both by hand and using NVivo® software (QSR International, Southport, UK). Full matrices were produced for each theme, detailing data from each interviewee across each of the subthemes. Data within each subtheme were then categorised, ensuring that the original language of the interviewee was retained, and classified to produce a set of dimensions that was capable of both describing the range of interview data and discriminating between responses. Discussions within the team were carried out during coding and categorisation to obtain consensus before the next stage. Descriptive and explanatory accounts were used to interrogate the data within and across themes and subthemes. The key stages of analysis and synthesis are described in more detail below.
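The matrix step described above can be pictured with a small sketch. It is illustrative only and is not the procedure or software used in the study (indexing was done by hand and in NVivo): the record layout, the function name `build_matrices` and the placeholder excerpts are assumptions introduced here, and only the theme and subtheme labels are taken from the text.

```python
from collections import defaultdict

# Hypothetical indexed transcript excerpts:
# (interviewee, theme, subtheme, excerpt). The excerpts are placeholders,
# not quotations from the interviews.
indexed = [
    ("I3", "Definition of error", "Fitness for purpose", "placeholder excerpt A"),
    ("I7", "Definition of error", "Fitness for purpose", "placeholder excerpt B"),
    ("I7", "Types of model error", "Implementation", "placeholder excerpt C"),
]


def build_matrices(records):
    """Group excerpts into one matrix per theme.

    Returns {theme: {interviewee: {subtheme: [excerpts]}}}, i.e. for each
    theme a grid with interviewees as rows and subthemes as columns.
    """
    matrices = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for interviewee, theme, subtheme, excerpt in records:
        matrices[theme][interviewee][subtheme].append(excerpt)
    return matrices


matrices = build_matrices(indexed)
```

Keeping the excerpt text unchanged in each cell mirrors the requirement that the original language of the interviewee is retained during categorisation.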

The coding scheme

The coding scheme covers seven main themes together with a range of subthemes. This coding system was initially based on the topic guide used within the interviews. Upon further interrogation of the interview transcript data, a revised coding scheme was developed through discussion between the three interviewers. Table 1 lists each theme together with a brief description of its content.

| Theme | Description of theme | Chapter |
|---|---|---|
| 1. Organisation, roles and communication | Background of the interviewee, roles and experience, use of specific software platforms, working arrangements with other researchers | 2 |
| 2. The model development process | Key sets of activities and processes in the development of health economic models | 3 |
| 3. Definition of error | The interviewee’s perception of what does and what does not constitute a model error | 4 |
| 4. Types of model error | Specific types of model error discussed within the interviews | 5 |
| 5. Strategies for avoiding errors | Approaches to avoiding errors within models | 6 |
| 6. Strategies for identifying errors | Approaches to identifying errors within models | 7 |
| 7. Barriers and facilitators | Potential interventions to avoid and identify errors within models and constraints on their use | 8 |

The first theme (Organisation, roles and communication) includes mainly demographic information that is used to describe the sample of participants and compare data between groups. Although this is not central to the qualitative synthesis, it does provide relevant information that helps to interpret and explain variations in stated views between respondents.

The second theme (see Chapter 3) relates to data from the model development process maps and interview transcript data. A meeting was held with all the authors to analyse and synthesise evidence from the process maps. This meeting assisted the analysis by providing an overview of the data and informing decisions regarding the subsequent qualitative process. The analysis of the model development charts unearthed a potential typology of modelling processes followed by practitioners. Generalities could be made to some extent around particular steps taken during the modelling process, and so these were explicitly drawn up on a separate generic map/list during discussions between the authors. In addition, a comprehensive descriptive analysis of the perceived model development process was undertaken using the interview transcript data. Finally, a stylised model development process was developed; this model attempts to both capture and explain key stages in the model development process as well as important iterations between stages, based on the perceived processes of individual interviewees. This was used to explain some of the nuances in the data and variations in processes between respondents.

The third theme (see Chapter 4) was analysed using the Framework approach. Key dimensions of what is perceived to be and what is not perceived to be an error were drawn out from the interview transcript material. Literature concerning the verification and validation of models from outside the HTA field was used to facilitate the interpretation of qualitative evidence on the characteristics of model errors.

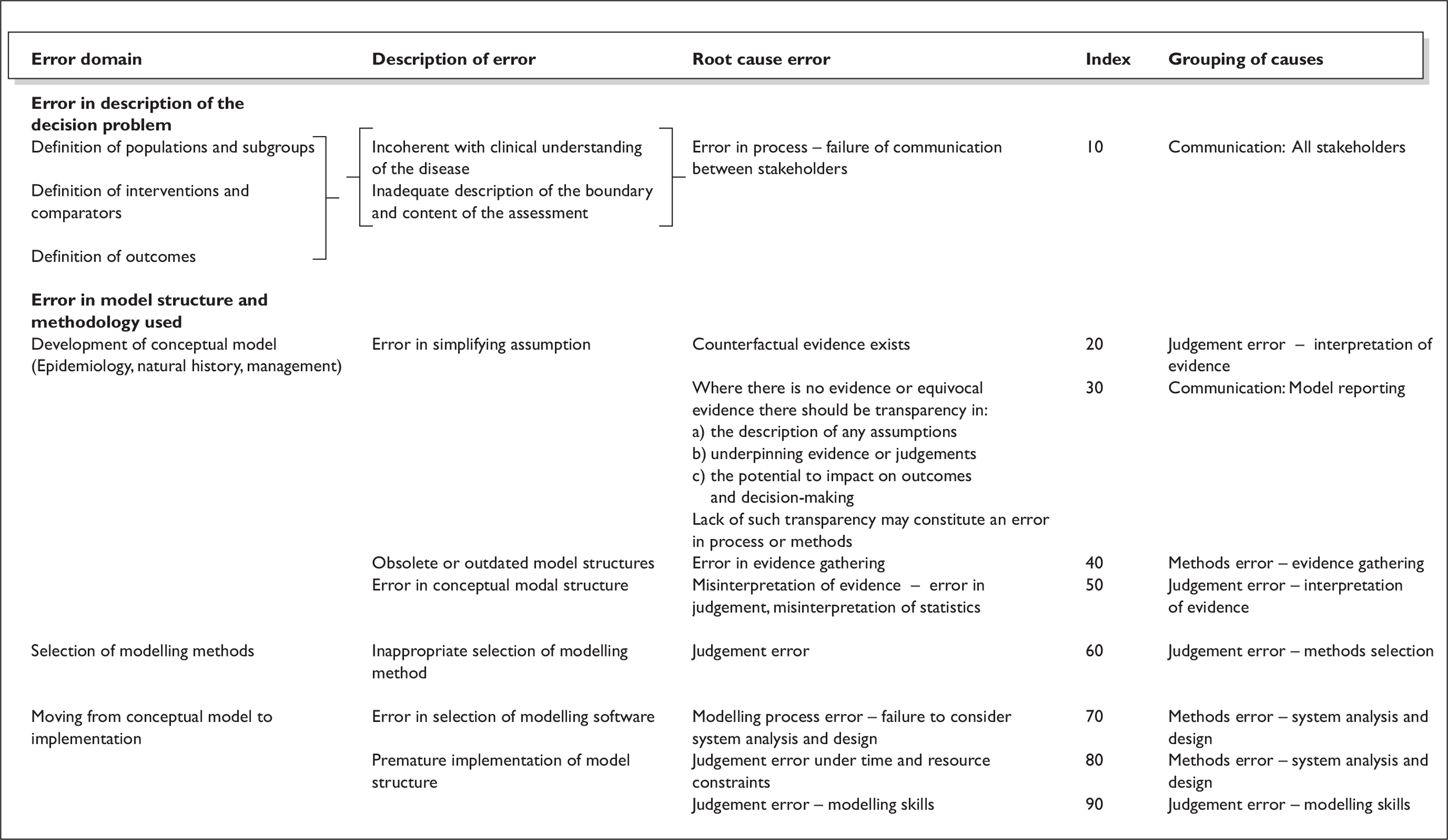

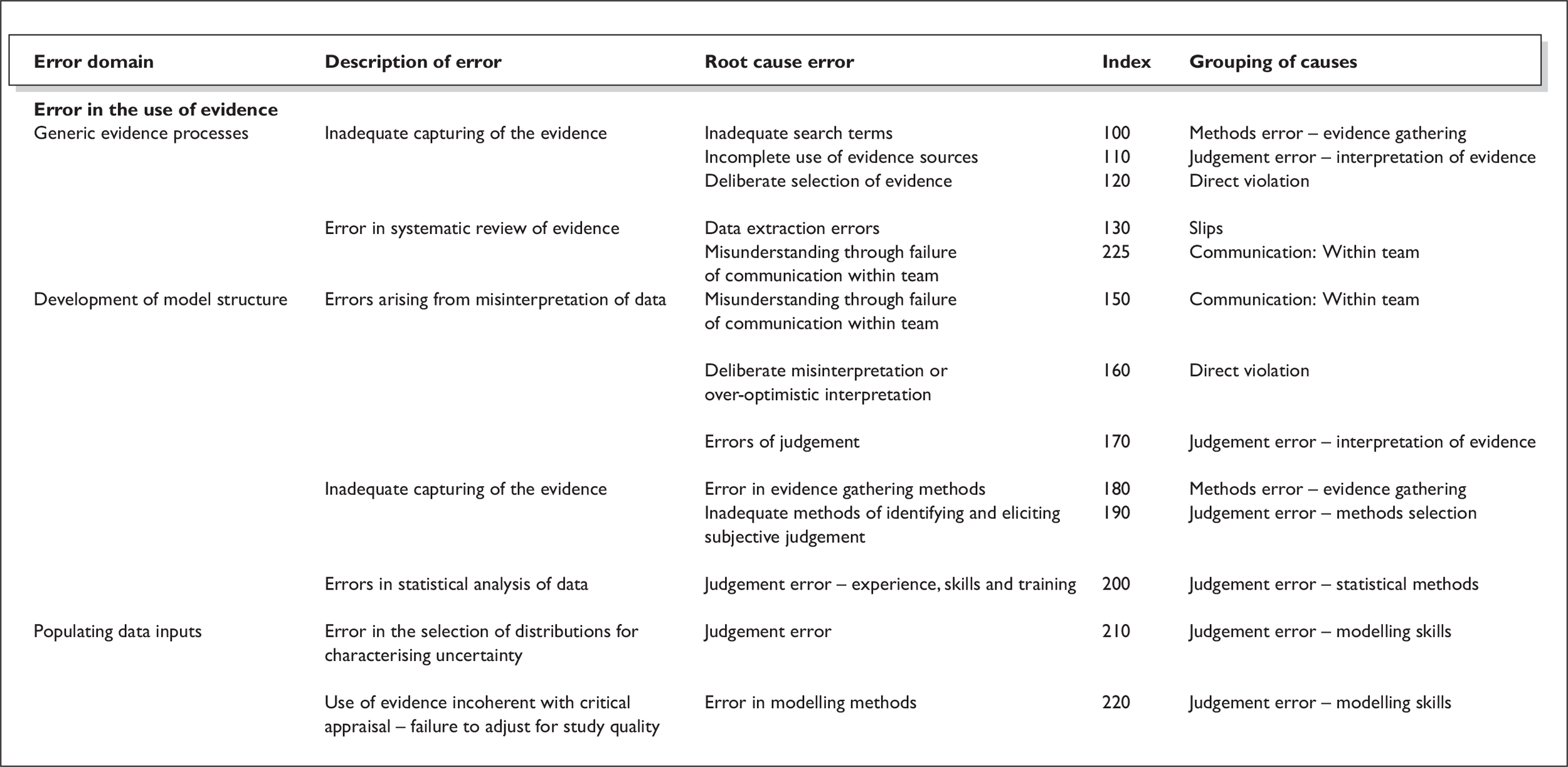

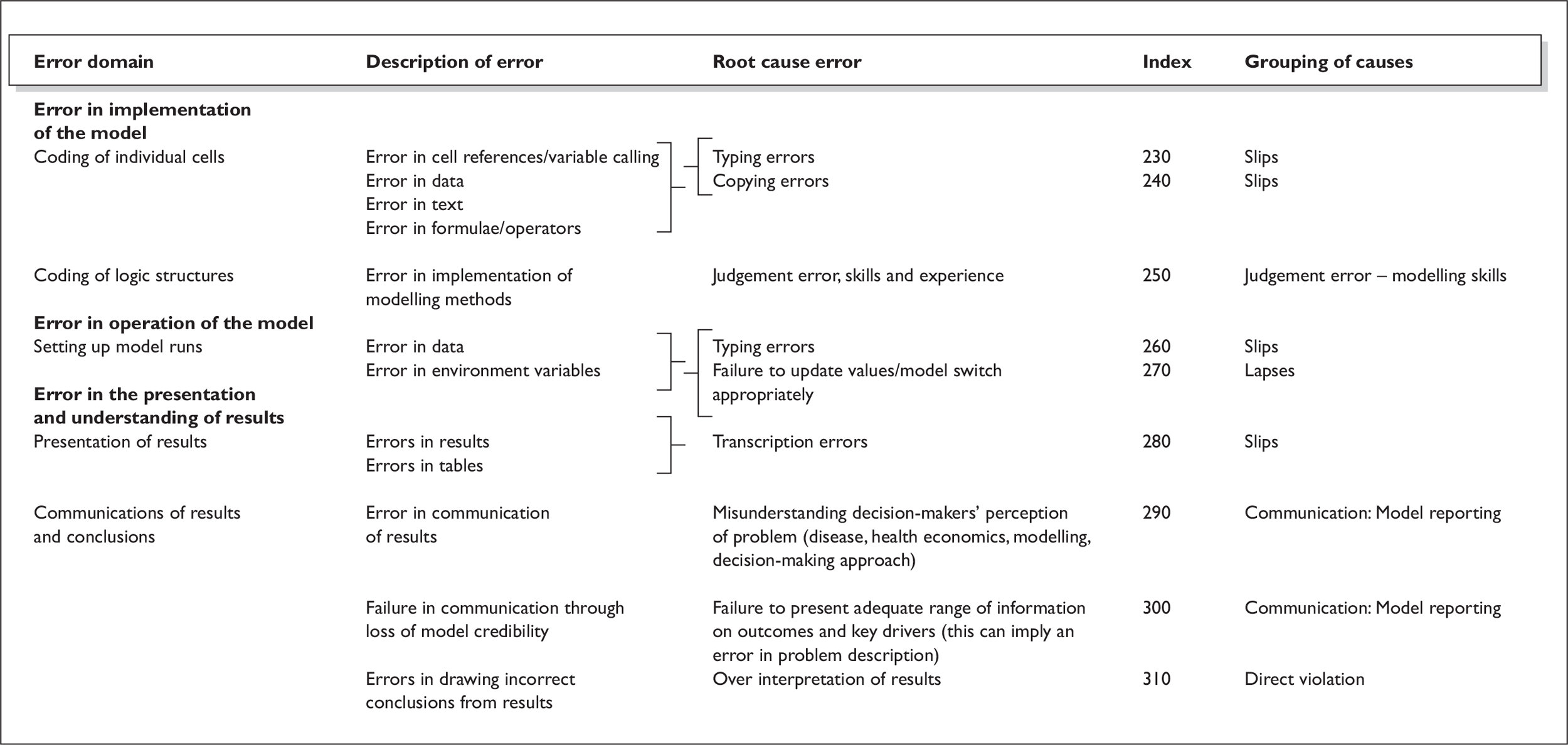

The fourth theme (see Chapter 5) involved a descriptive analysis of interview data according to the initial framework analysis coding scheme: error in understanding the problem; error in the structure and methodology used; error in the use of evidence; error in implementation of the model; error in operation of the model; and error in presentation and understanding of results. As with other emergent themes within the interview data, the descriptive analysis was developed through a process of categorisation and classification of comments that made direct or indirect reference to error types. 21 The structure of the taxonomy emerged from the descriptive analysis of interview data. The taxonomy identifies three levels:

- the error domain, that is the part of the model development process in which the error occurs
- the error description, illustrating the error in relation to the domain
- the root cause error, which attempts to identify root causes of errors and to draw out common types of error across the modelling process.
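The three levels listed above can be read as a simple record structure. The sketch below is one possible representation rather than the taxonomy itself: it assumes the six categories of the initial coding scheme serve as error domains, and the class names, the helper `domains_by_root_cause` and both example entries are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class ErrorDomain(Enum):
    # Assumption: domains follow the six categories of the initial coding scheme.
    UNDERSTANDING_PROBLEM = "understanding the problem"
    STRUCTURE_METHODOLOGY = "structure and methodology used"
    USE_OF_EVIDENCE = "use of evidence"
    IMPLEMENTATION = "implementation of the model"
    OPERATION = "operation of the model"
    PRESENTATION = "presentation and understanding of results"


@dataclass(frozen=True)
class TaxonomyEntry:
    domain: ErrorDomain   # level 1: where in the process the error occurs
    description: str      # level 2: the error in relation to the domain
    root_cause: str       # level 3: the underlying cause


# Hypothetical entries for illustration; not findings of the study.
entries = [
    TaxonomyEntry(ErrorDomain.IMPLEMENTATION,
                  "formula refers to the wrong cell", "transcription slip"),
    TaxonomyEntry(ErrorDomain.USE_OF_EVIDENCE,
                  "value copied incorrectly from a source", "transcription slip"),
]


def domains_by_root_cause(items):
    """Map each root cause to the set of domains in which it appears."""
    grouped = {}
    for entry in items:
        grouped.setdefault(entry.root_cause, set()).add(entry.domain)
    return grouped


grouped = domains_by_root_cause(entries)
```

Grouping by root cause is what allows common types of error to be drawn out across different parts of the modelling process.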

Non-HTA literature concerning modelling error classifications and taxonomies was identified from searches and used to facilitate the interpretation of the qualitative data.

The fifth and sixth themes (see Chapters 6 and 7) present an analysis of techniques and processes for avoiding and identifying errors in HTA models, as discussed by interviewees. Within both chapters, a descriptive analysis is presented together with an explanatory analysis, which relates current error identification and avoidance methods to the classification of model errors. The seventh theme (see Chapter 8) briefly presents a descriptive analysis of interviewees’ views on barriers and facilitators to error checking activities.

Literature review search methods

At the outset, the scope and fields of literature relevant to the study were not known. The purpose of the searches was twofold. First, exploratory searches were undertaken in advance of the interviews to inform the development of the scope and content of the in-depth interviews. Second, searches were undertaken after the interviews to expand the discussion on key issues raised by the interview analysis and to place the interview data in the context of the broader literature.

An iterative approach was taken to searching the literature. This is recognised as being the most appropriate approach to searching in the case of reviews of methodological topics, where the scope of relevant evidence is not known in advance. 22 Based on information-seeking behaviour models,23 the exploratory search strategy used techniques including author searching, citation searching, reference checking and browsing.

The scoping search was undertaken to establish the volume and type of literature relating to modelling errors within the computer science and water resources fields, both of which had been identified as potentially rich fields. Highly specific and focused searches were carried out in Computer and Information Systems Abstracts and Water Resources Abstracts.

The interviews and scoping search of the literature identified the need for further reviews including: first, a topic review of error avoidance and identification; second, a review of model verification and validation; and third, a review of error classifications and taxonomies. The searches were undertaken in February 2008 and January 2009. The search strategies and sources consulted are detailed in Appendix 2.

Validity checking

The report was subjected to internal peer review by a qualitative researcher, mixed methodologist, modellers not involved in the interviews and a systematic reviewer. The final draft report was distributed to all interviewees to obtain their feedback on whether the findings are representative of their views, hence providing respondent validation. 24

The organisation of the interview sample

This section briefly details the background, expertise, experience and working arrangements of the 12 individuals included in the interview sample.

Organisation

Of the 12 respondents, four work primarily for industry and eight are employed to produce reports for NICE and are university-based. Of those interviewees working within Assessment Groups, two also discussed work undertaken for commercial clients. The respondents reported a variety of work types including economic evaluations, as well as primary and secondary research. Commercial work included health outcomes and pricing and reimbursement work as well as cost-effectiveness modelling. The size of the organisations varied with a range of 12 to 180 staff employed. Three interviewees discussed working internationally.

Composition of the research teams

The respondents reported a variety of working arrangements. To demonstrate the range of organisational arrangements, these included:

- a unit with 30 health economists with close links to another unit with 60–70 health-service researchers
- a small team of two dedicated modellers with links to a team who undertake systematic reviews
- a unit including operational researchers, health economists, health-service researchers and literature reviewers with direct access to clinical experts
- a large international organisation covering many different disciplines
- a team of 14–15 health economists with no mention of systematic reviewers.

Background of the interviewees

Five of the 12 respondents came from an economics or health economics background, two from a mathematical background, one of whom previously worked as an actuary. Five respondents have an operational research or modelling background. All interviewees included in the sampling frame have considerable experience developing health economic models and were purposefully selected on this basis.

Platforms and types of models

All respondents apart from one mentioned Microsoft Excel® as one of the preferred modelling software platforms, although two mentioned potential limitations. TreeAge was frequently mentioned and Simul8 was mentioned by two respondents. Other software programs and applications discussed by interviewees included Crystal Ball, Stata, WinBUGS, Delphi, Visual Pascal and SPSS. Ten of the 12 respondents mentioned the use of Markov models. Also reported were microsimulation/discrete event simulation, decision analysis and decision tree models.

Chapter 3 The model development process

Overview

This chapter presents a descriptive analysis of individual respondents’ perceptions of the model development process. The purpose of eliciting information concerning current model development processes within the interviews was twofold. First, it allows for the development of an understanding of the similarities and variations in modelling practice between modellers that might impact on the introduction of certain types of model error. Second, the elicitation of each interviewee’s personalised view of the process was used to form the structure of the remaining portion of the interview, with the model development charts therefore forming a map against which specific types of model error could be identified and discussed.

The descriptive analysis was undertaken according to the coding scheme, and examined six key stages of the model development process: (1) understanding the decision problem; (2) use of information; (3) implicit/explicit conceptual modelling; (4) software model implementation; (5) model checking activities; and (6) model reporting. It should be noted that these six stages were not specified a priori, but rather they emerged from the qualitative synthesis process. A seventh theme concerning iterations between stages of model development was also analysed. The descriptive synthesis of the model development process is presented together with an inferred stylised model development process describing both the range and diversity of responses.

Understanding the decision problem

For all 12 interview participants, the first stage in the model development process involved developing an understanding of the decision problem. Broadly speaking, this phase involved a delicate balancing/negotiation process between the modeller’s perceived understanding of the problem, the clinical perspective of the decision problem, as well as the clients’ needs and the decision-makers’ needs. This phase of model development may draw on evidence, including published research and clinical judgement, and experience within the research team. In some instances the research question was perceived to follow directly from the received decision problem, whereas other respondents expressed a perception that the research question was open to clarification and negotiation with the client/decision-maker. In this sense the research question represents an intellectual leap from the perception of the decision problem. One respondent noted that this aspect of model development may not be a discrete step which is finalised before embarking on research:

The whole process starts at the point where we are thinking about a project but it will continue once a project has actually started, almost until the final day when the model is finalised that process will continue…we will not finalise the structure of the model and the care pathway perhaps until quite close to the end of the whole project because we will be constantly asking for advice about whether or not we have it correct or at least as good as we can get it.

[I8]

Similarly, another respondent highlighted that the decision problem may not be fixed even when the model has been implemented, indicating that modelling may have a role in exploring the decision problem space. Hence, for instance, modelling can answer questions such as ‘Is technology A economically attractive compared to technology B?’ or ‘Under what circumstances is A economically attractive compared to B?’ These are fundamentally different decision problems; however, decision-makers may change their focus during the course of an analysis, depending on its outcomes.

…commonly at the analysis stage, you have the opportunity or there’s the possibility of changing the question that you’re asking. So, if the results of the model are in any way unexpected or in fact, if they’re in any way insightful, then you might want to refine or amend the outcome or the conclusion you’re going to try and support. So if we start off with an analysis of a clinical trial population that is potentially cost-effective, in the course of the model, you find that the product is particularly appropriate for a subgroup of patients, or there are a group of patients where the cost-effectiveness is not attractive, then we’d refine the question.

[I6]

Several interviewees noted the importance of understanding the decision problem and defining the research question appropriately; in particular, one respondent indicated the fundamental importance of understanding the decision problem before embarking on the implementation of any model:

…it all stems from what is the decision problem, to start off with…no matter what you’ve done, if you’ve got the decision problem wrong…basically it doesn’t matter how accurate the model is. You’re addressing effectively the wrong decision.

[I7]

Written documentation of the decision problem and research question

Several interviewees mentioned that explicit documentation of the perceived decision problem and the research question forms a central part of the proposal within consultancy contracts. The discussions suggested that such documentation extends beyond the typical protocols produced within the NICE Technology Appraisal Programme, including a statement of the objective, a description of the patient population and subgroups, and a summary of key data sources. Several respondents highlighted that although the decision problem may be set out in a scope document, the appropriate approach to addressing the decision problem may be more complex.

…you’ve read your scope you think you know what it’s about. But then you start reading about it, and you think, ‘Oh! This is more complicated than I thought!’

[I3]

This point was further emphasised by another respondent, who highlighted that there was a need to understand not only the decision-makers’ perceived decision problem, but also what the research question should be (so questioning the appropriateness of that perception) and understanding wider aspects of the decision problem context outside its immediate remit:

…our entire initial stage is to try and understand what the question should be and what the processes leading up to the decision point and the process that goes from that decision point.

[I8]

The issue of generating written documentation of the proposed model to foster a shared agreement of the decision problem was raised only by two interviewees, both of whom work for Outcomes Research organisations:

It’s almost like a mini report really. It’s summarising what we see as the key aspects of the disease, it’s re-affirming the research question again – what’s the model setting out to do. It’s pitching the model and methodology selected and why, so if it’s a Markov [model] it’s kind of going on to detail what the key health states are. We try and not make it too detailed because then it just becomes almost self-defeating but you are just trying to, again, with this, we are always trying to get to a point at the end of that particular task where we have got the client signed in and as good a clinical approval I guess, as we can get.

[I10]

For several respondents, an important dimension of understanding the decision problem involved understanding the perceived needs of the decision-maker and the client, mediated by the ability of the modelling team to meet these. A common theme which emerged from the interviews with Outcomes Research modellers was an informal iterative process of clarifying the decision-maker’s needs, the potential cost of not doing so being the development of a model which does not adequately address the decision problem. The analysis suggested some similarities in the role of initial documentation between Assessment Groups and Outcomes Research organisations. In particular, modellers working for the pharmaceutical industry highlighted the use of a Request For Proposal (RFP) document and the development of a proposal; these appear to broadly mirror the scope and protocol documents developed by NICE and the Assessment Groups, respectively. There was some suggestion that RFPs were subject to clarification and negotiation whereas scope documents were not.

The first thing is to get an idea of exactly what the client wants. On a number of occasions you get a RFP through which is by no means clear what the request is for and it won’t be very helpful to rush off and start to develop any kind of model on that kind of platform…you can often get a situation where you answer the question that they have asked and they then decide that was not the question they had in mind so certain processes of ensuring clarity are useful.

[I12]

Methods for understanding the decision problem

Methods used by modellers to understand the perceived decision problem varied between respondents, including ‘immersion’ in the clinical epidemiology and natural history of the disease, understanding the relationship between the decision point and the broader clinical pathway, as well as understanding relevant populations, subgroups, technologies and comparators.

…you start by, by just immersing yourself in whatever you can find that gives you an understanding of…all the basics.

[I3]

…it’s the process of becoming knowledgeable about what you are going to be modelling…reading the background literature, knowing what the disease process is, knowing the clinical…pathways typically that patients experience within the situation you are modelling. Going to see clinical experts to ask questions and find out more and gradually hone in on an understanding on the clinical area being studied in a way that enables you to begin to represent it systematically.

[I4]

Conceptual modelling

Nine of the twelve interviewees either alluded to, or explicitly discussed, a set of activities involving the conceptualisation and abstraction of the decision problem within a formal framework before implementing the mathematical model in a software platform.

Conceptual modelling methods

The extent to which conceptual modelling is demonstrated in practice appears to be highly variable between participants; variation was evident in terms of the explicitness of model conceptualisation methods and the extent to which the conceptual model was developed and shared before actual model programming. The interview data for three respondents implied that no distinct formal or informal conceptual modelling activities took place within the process, and that the model was conceptualised and implemented in parallel. One of these respondents highlighted an underlying rationale for such an approach.

…I’d rather go with a wrong starting point and get told it’s wrong than go with nothing.

[I2]

Explicit methods for conceptual modelling included developing written documentation of the proposed model structure, assumptions, the use of diagrams or sketches of model designs and/or clinical/disease pathways, memos, representative mock-ups to illustrate specific issues in the proposed implementation model, and written interpretations of evidence.

The extent to which the conceptual model was complete before embarking on programming the software model varied among interviewees; several respondents indicated that they would not begin implementing the software model until some tangible form of conceptual model had been agreed or, in other cases, ‘signed-off’ by the client or experts. Across the nine interviewees who did undertake some degree of explicit conceptual modelling, the main purposes of such activities included fostering agreement with the client and decision-maker, affirming the research question, pitching and justifying the proposed implementation model, sense-making or validity checking, as well as trying ideas, getting feedback, ‘throwing things around’, ‘picking out ideas’ and arranging them in an abstraction process. Broadly speaking, such activities represent a communication tool, that is, a means of generating a shared understanding between the research team, the decision-maker and the client.

…we’ll get clinical advisors in to comment on the model’s structure and framework, comment on our interpretation of the evidence, and…we think we’ve got a shared understanding of that clinical data but are we really seeing it for what it is?

[I10]

…for us, whether we’ve written it or not, we’ve got an agreed set of health states, assumptions, and…an agreed approach to populating the parameters that lie within it…which I think is the methods and population of your decision model.

[I7]

The extent to which the conceptual model is formally documented varied between the interviewees. One respondent represented a deviant case in the sense that whereas the majority of the model development process concerns understanding the decision problem and (potentially) implicit conceptual modelling, very little time is spent on actual model implementation.

So every aspect of what you then need to, to programme in and populate it is…in people’s brains to various degrees…if you get all that agreed, then the actual implementation in excel should be pretty straightforward. But if that process has taken you 90% of your time, then…you build a model pretty quickly.

[I7]

For three interviewees [I5, I2 and I12] there was no formal distinction between conceptual modelling activities and software model implementation, rather they occur in parallel. Among these three interviewees, there was a degree of consistency in that all three respondents discussed the development of early implementation models (sometimes referred to by interviewees as ‘quick models’ or ‘skeleton models’) as a basis for eliciting information from clinical experts, for testing ideas of what will and will not work in the final implementation model, or as a means of generating an expectation of what the final model results are likely to be:

So there is an element of dipping your toe in the water and seeing what looks like it’s going to work. It’s actually being prepared to say no this is not going to work.

[I12]

I would generally get a rough feel for the answer within three or four days which is almost analogous to the pen and paper approach which is why I do that. If you know that 20,000 people have this disease which is costing x thousand per year and you know your drug which costs y pounds will prevent half of it and you know what utilities are associated with it you can get a damn good answer.

[I2]
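The ‘pen and paper’ reasoning described in this quote can be sketched as a short calculation. The following is a minimal illustration only: the function name `rough_icer`, the cost figures and the QALY loss per case are all hypothetical placeholders (the interviewee gives only the cohort size of 20,000 and the assumption that the drug prevents half of the disease), and it is not a description of the interviewee's actual method.

```python
def rough_icer(n_patients, disease_cost_per_case, drug_cost_per_patient,
               proportion_prevented, qaly_loss_per_case):
    """Back-of-envelope incremental cost per QALY gained for a treated cohort."""
    cases_prevented = n_patients * proportion_prevented
    # Extra spending on the drug, offset by the disease costs avoided.
    incremental_cost = (n_patients * drug_cost_per_patient
                        - cases_prevented * disease_cost_per_case)
    # QALYs gained from the cases that no longer occur.
    incremental_qalys = cases_prevented * qaly_loss_per_case
    return incremental_cost / incremental_qalys


# Hypothetical inputs: only the cohort size (20,000) and the 'prevent half'
# assumption come from the quote; every other figure is invented.
estimate = rough_icer(
    n_patients=20_000,
    disease_cost_per_case=5_000.0,   # hypothetical 'x thousand per year'
    drug_cost_per_patient=4_000.0,   # hypothetical 'y pounds'
    proportion_prevented=0.5,
    qaly_loss_per_case=0.25,         # hypothetical utility decrement
)
print(f"Rough ICER: £{estimate:,.0f} per QALY gained")
```

A rough figure of this kind offers only an expectation against which a fuller implementation model might later be compared; it ignores discounting, time horizons and uncertainty.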

Identifying key model factors

Several interviewees discussed the processes they undertake in developing the structure of the model. One interviewee described this activity as a means of:

…identifying the fundamental aspects of the decision problem and organising them into a coherent framework.

[I4]

Much of the discussion concerned identifying and specifying relationships between key outcomes, identifying key states to be differentiated, identifying transitions, capturing the importance of patient histories on subsequent prognosis, and addressing the need for more complex descriptions of health states. Several interviewees suggested that this process was, at least to some degree, data-driven; for example, having access to patient-level trial data may influence the structure of a survival model and the inclusion of specific coefficients. Several interviewees discussed the inevitability of simplification; one suggested the notion of developing ‘feasible models’, ‘best approximations’ and ‘best descriptions of evidence’.

…I think it’s a judgement call that modellers are constantly forced to make. What level of simplification, what level of granularity is appropriate for the modelling process? I think what’s very important is to continually refer back to the decision that you are hoping to support with your model. So don’t try and answer questions that aren’t going to be asked…

[I4]

…the aim is to produce the feasible model that best approximates what we think we’re going to need…on occasions we will just have to say yes we would love to put this into a model but the trials have not recorded this…

[I5]

One respondent had a different viewpoint concerning how to identify key factors for inclusion in the mathematical model, indicating an entirely data-led approach whereby the decision to include certain elements of the decision problem in the implementation model was determined by their influence on the model results:

…what to keep in and what to chuck out…it depends on how quickly you’ve got the data. If you’ve got all of the data then keep everything in, if you’ve already got it, because you can tell with an EVSI [expected value of sample information] or an EVI [expected value of information] what is important or not even if you or you fit a meta-model to it or the ones that are capped fall straight out…

[I2]

…you are only including these things because you think they will affect the ICER [incremental cost-effectiveness ratio], if they don’t affect the ICER you shouldn’t be including them.

[I2]
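The principle expressed here, that a factor earns its place in the model only if it moves the ICER, can be illustrated with a simple screening calculation. The sketch below deliberately substitutes a one-way sensitivity analysis for the value of information methods (EVI, EVSI) mentioned by the interviewee, because it is easier to show in a few lines; the model, parameter names, base values and ranges are all hypothetical.

```python
def icer(p):
    """Incremental cost per QALY for a hypothetical two-arm comparison."""
    incremental_cost = (p["drug_cost"] + p["admin_cost"]
                        - p["risk_reduction"] * p["event_cost"])
    incremental_qalys = p["risk_reduction"] * p["qaly_loss_per_event"]
    return incremental_cost / incremental_qalys


def icer_swings(base, ranges):
    """Vary one parameter at a time; return (name, ICER swing), largest first."""
    swings = []
    for name, (low, high) in ranges.items():
        low_case = dict(base, **{name: low})
        high_case = dict(base, **{name: high})
        swings.append((name, abs(icer(high_case) - icer(low_case))))
    return sorted(swings, key=lambda item: item[1], reverse=True)


# Hypothetical base case and plausible ranges (illustrative values only).
base = {"drug_cost": 4000.0, "admin_cost": 50.0, "event_cost": 5000.0,
        "risk_reduction": 0.5, "qaly_loss_per_event": 0.25}
ranges = {"drug_cost": (3000.0, 5000.0),
          "admin_cost": (40.0, 60.0),
          "qaly_loss_per_event": (0.2, 0.3)}

for name, swing in icer_swings(base, ranges):
    print(f"{name}: ICER swing of about £{swing:,.0f} per QALY")
```

In this invented example the administration cost barely moves the ICER and would be a candidate for exclusion under the interviewee's rule, whereas the drug cost and the QALY loss per event would be retained.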

Clearly, decisions concerning what should and should not be included in the model, how such factors should be captured and related to one another, and the appropriate level of complexity and granularity are highly complex. Indeed, two respondents highlighted that this aspect of model development was hard to teach and was learned through experience.

I don’t think…that I can sit here and write out how you build a model. I think it’s something which comes with experience.

[I7]

Formal a priori model specification

One respondent [I1] highlighted the absence of an important stage of the model development process common within software development projects: that of model design and analysis, whereby the proposed model is formally specified and its software implementation is planned before the model is programmed. In this sense, the design and analysis stage represents a direct link between the conceptual model and the implementation model. Such activity would usually include producing a formal model specification in advance of any programming, including details of how the model will be programmed, where parameters will be stored and linked, housekeeping issues and an a priori specification of how the model will be checked and tested.

Use of information in model development

For many of the interviewees, the use of evidence to inform the model development process was broader than the generation of systematic reviews of clinical effectiveness. The use of evidence to determine the model structure was also commonly discussed. Further, the use of evidence in informing the model was seldom restricted to a single stage in the development process; for several respondents, evidence was used to understand, shape and interpret all aspects of model development.

Use of previous models

Views concerning the appropriate use of previous models to inform the model under development varied between study participants. One subtle but potentially important difference in the use of previous models concerned the extent to which such models, developed for a different decision problem, decision-maker and purpose, would be used to inform the structure of the current model. For some respondents, previous models were examined as a means of ‘borrowing’ model structures, whereas for others, the previous model represented a starting point for thinking about an underlying conceptual framework for the model:

…suppose the first thing we will do actually is see if there are any existing models that we can build from and if there are and we think they are any good then we have a structure there.

[I5]

…it’s not a question of what people have done in the past and whether they did a good job or not, it’s seeing what we can borrow from those that can be relevant.

[I12]

The interview data suggest the notion of being ‘data-led’ in model structuring. One may infer two risks associated with being dependent on previous model structures: one uses an existing model that is not adequately structured to address the decision problem at hand, or one misses out on developing an understanding and agreement of the decision problem through conceptual modelling (see Conceptual modelling). This inference appears to be supported by the views of a further respondent:

If you set two modelling teams the same task I think it’s quite likely they would come up with very different models, which is one of the aspects of familiarisation. Unless of course there were lots of existing models that they could draw on and fall into familiar grooves in terms of the way you model a particular process so that often happens you look at what has already been done in terms of the modelling process and that is part of the familiarisation process and you say oh yeah that makes perfect sense we will do the same again.

[I4]

I think there is a danger in that as well you know if you just slavishly adopt a previous structure to a model and everybody does the same there’s no potential for better structures to develop or for mistakes to be appealed so I think there is a danger in that in some ways.

[I4]

Use of clinical/methodological expert input

The extent to which clinical experts were involved within the model development process varied considerably between interviewees. Several interviewees indicated that they would involve clinical experts in the research team itself, from the very outset of the project, to help develop an understanding of the decision problem and to formulate the research question; this was particularly the case where in-house clinical expertise was not available.

…we try to get in clinical experts as part of the team and they will usually also be authors on the report and it tends to be very much a joint process of structuring the problem.

[I5]

At the other end of the spectrum, one respondent suggested that they would only involve clinical experts after having built a skeleton model.

I would build the model, just build it straight off, I mean it depends on how quick you work but if you build it quickly then you can have all that in place before you talk to clinicians or other peer reviewers…

[I2]

Relationship between clinical data and model structure

The majority of the interviewees highlighted the existence of a complex iterative relationship between model structuring processes and data identification and use, whereby the model structure has a considerable influence on the data requirements to populate the model, while the availability of evidence may in turn influence the structure of the model (whether implemented or conceptualised). In effect, the ‘worldview’ or Weltanschauung 25 of the modeller influences the perception of what evidence is required, and the identification and interpretation of that evidence in turn influence and adapt that Weltanschauung.

I divided it between design[ing] and populat[ing]…the populating being searching for, locating the information to actually parameterise those relationships. And then going back and changing the relationships to ones that you can actually parameterise from the data that’s available, and then changing the data that you look for to fit your revised view of the world.

[I6]

Two respondents indicated that previous working arrangements had led to the systematic review and modelling activities being perceived as distinct entities that did not inform one another, so hindering the iterative dynamic highlighted above. However, both of these respondents indicated a change in this working culture, suggesting a joint effort between the modeller and the other members of the research team:

I think things are…kind of trying to be changed. I’m not seeing a systematic review…as something separate from modelling; now they are working together and defining what [it] is…that we are looking for together.

[I1]

Several respondents highlighted the importance of this iterative phase of model development and its implications for the feasibility of the resulting mathematical model.

The most difficult ones aren’t just the numbers; they are where there’s a qualitative decision. You know, is there any survival benefit? Yes or no? If there is a survival benefit, it’s a different model?…So it’s structural.

[I6]

…it’s no good having an agreed model structure that’s impossible to populate with data so you obviously give some thought as to how you are going to make it work that’s part of the agreement process. You outline what the data requirements are likely to be and step through with the people collecting the data…

[I4]

One respondent highlighted the difficulties of this relationship, suggesting a danger of models becoming data-led rather than problem-led.

We try as a matter of principle not to let data blur the constraints of models too much but in practice sometimes it has to.

[I5]

One respondent highlighted the use of initial searches and literature summaries as a means of developing an early understanding of likely evidence limitations, a process undertaken alongside clinical experts, before model development. Another respondent highlighted what he considered a key event in the model development process concerning iterations between the model structure and the evidence used to inform its parameters:

We have a key point which we call data lockdown because that’s key to the whole process, if you are working to a tight timescale you need to make sure the modellers have enough time to verify their model with the data.

[I4]

Differences between the model structure and the review data

A common emergent theme across respondents was the idea that the data produced by systematic reviews may not be sufficient or adequate for the model, meaning that either the data would need transforming into a format in which the model could ‘accommodate’ the evidence (for example premodel analysis such as survival modelling), or the developed model itself would need to be restructured to allow the data to be incorporated into the model.

…but it wouldn’t be just a question of understanding the clinical data, it’s a question of what it’s going to mean for the modelling.

[I12]

A further point raised by one respondent concerned the Assessment Groups’ perception of what constitutes evidence for the model; this particular respondent indicated that his institution is likely to represent a deviant case in this respect.

I wouldn’t say we had a procedure, but an underlying principle…as a department we don’t distinguish between direct and indirect evidence. We just think it’s all evidence.

[I7]

[We] are less beholden to statistical significance; other people are more conventional in their sort of…approaches. One…set of researchers may say that…there is no statistical significance of heterogeneity…[that] we can assume that all this evidence can be pooled together may not be considered by…another set of researchers to be an appropriate sort of thing to do…

[I7]

Interestingly, almost all discussions concerning the use of evidence in populating models focused entirely on clinical efficacy evidence; very little discussion was held concerning methods for identifying, selecting and using non-clinical evidence to inform other parameters within models, for example costs and health-related quality of life. Across the 12 interviewees, it was unclear who holds responsibility for identifying, interpreting and analysing such evidence or how (and if) it influences the structure of the model, and how such activities differ from the identification and use of clinical efficacy data.

Implementation model

All respondents discussed a set of activities related to the actual implementation of a mathematical model on a software platform. Such activities involve either the transposition of the prespecified ‘soft’ conceptual model framework into a ‘hard’ quantitative model framework, with various degrees of refinement and adaptation, or the parallel development of an implicit conceptual model and explicit implementation model.

Model refinement

Several respondents discussed activities involving model refinement at the stage at which the model is implemented, although the degree to which such activities are required was variable. The interview data suggest that this aspect of model development was a key feature for the three respondents who do not separate conceptual and implementation model processes. As noted earlier (see Conceptual modelling), although these individuals do not draw a distinction between the conceptual model and the implementation model, they do appear to develop ‘skeleton models’ to present to clinical experts, which evolve iteratively and take shape over time.

I will actually literally take them through a patient path and actually run through, do a step-by-step running of the model in front of them.

[I5]

However, one respondent highlighted the potential dangers of developing software models without having fully determined the underlying structure or having the agreement of experts:

…whether it’s adding something in or taking something out there is the worry at the back of your mind that it’s going to affect something else in a way that perhaps you don’t observe…there is a danger if you make the decision to include or exclude definitively that you might regret it later on, it might not be a question of states it might be a question of which outcomes to model on as well…

[I12]

The interview data suggested that model refinement activities were less iterative and burdensome for those respondents who had the conceptual model agreed or ‘signed-off’ before implementation; this was particularly true for one respondent:

…then build an excel model…it’s an iterative process; you don’t want to keep on rebuilding your model,…I can’t imagine what I’d do in excel which would make me rethink the way I structure my model…most of the big issues I see are all about the sort of thought processes behind defining that decision problem, defining the structure, defining the core set of assumptions…if we can get agreement about that, the implementation of it is really straightforward.

[I7]

At the other extreme, however, one respondent mentioned rare occasions in which the research team had developed a model, decided it was not adequate and subsequently started from scratch.

Obviously it doesn’t very often happen that we have built a whole model and have had to tear up a whole model but if necessary we would rather do that than carry on with a model that isn’t what we intended.

[I5]

Model checking

Given the extent of discussion around model checking during the interviews, a detailed analysis of current methods for checking models is presented (see Chapters 6 and 7). Model checking activities varied and appear to occur at various stages in the model development process. One interviewee suggested that model checking begins once the model has been built; however, for other respondents, the checking process happens at various distinct stages. One respondent indicated that model checking activities are undertaken throughout the entire model development process. Subject to certain nuances in the precise methods employed, much of the discussion around current model checking activities focused on testing and understanding the underlying behaviour of the model and making sure that it makes sense:

…it’s about finding out what the real dynamics of the model are so what are the key parameters that are driving the model, where do we need to then emphasise our efforts in terms of making sure our data points are precise? What are the sensitivities, in which case what subgroups might we need to think about modelling? Discussing how the model is behaving in certain situations. What the likely outcome is. If the ICER is near to the critical thresholds for the decision-maker then where we need to pay attention if the ICER is way off to the side, if you’ve got an output that’s dominated or if you have got an ICER that’s half a million pounds it seems likely that unless the model is wildly wrong the decision is going to be fairly clear cut. So if you are operating at the decision-making threshold how precise and careful you have to be and what kind of refinement you need therefore to build into the model.

[I4]

Other model checking activities included examining the face validity of the model results in isolation or in comparison to other existing models, internal and clinical peer review, checking the input data used in the model, checking that premodel analysis can replicate the data, and checking the interpretation of clinical data. Many of these approaches involve a comparison of the actual model outputs against some expectation of what the results should be; it was not clear from the interview data how such expectations were formed.

Either the intuition or the modelling or the data is wrong and we tend to assume that it is only one of them…I think you tend to assume that once they’re [clinical experts] not surprised by the thing then, that means you have got it right.

[I5]

Two respondents indicated a minimalist approach to model checking activities:

So we do just enough…just enough but not as much as you’d want to do.

[I10]

…it depends whether you’re error searching for what I term significant errors or non-significant errors, I probably would stop and the non-significant errors that are probably in a larger percentage of models than people would like to know would remain.

[I2]

Reporting

The majority of respondents referred to model reporting as the final step in the model development process. This stage typically involved writing the report, translating the methods and results of the model and other analysis, and engaging with the decision. Although little of the interview content concerned this phase of the process, two important points were raised within the interviews. First, one respondent highlighted that the reporting stage may act as a trigger for checking models where the results are unexpected or queried by internal or external parties. The second point concerned the importance of the interpretation of the model by decision-makers and other process stakeholders:

I think the recommendation we would make is we need to pay more attention to understanding how our models are understood and how we present them, a lot of work that can be done in model presentation.

[I4]

…reportable convention standards can be very important in ensuring everyone has a clear view of what’s being said. There are ways in which model outputs can be more transparently depicted and the key messages conveyed to users more clearly, often some can get lost in the text or hidden away somewhere either intentionally or unintentionally.

[I4]

Synthesised model development process

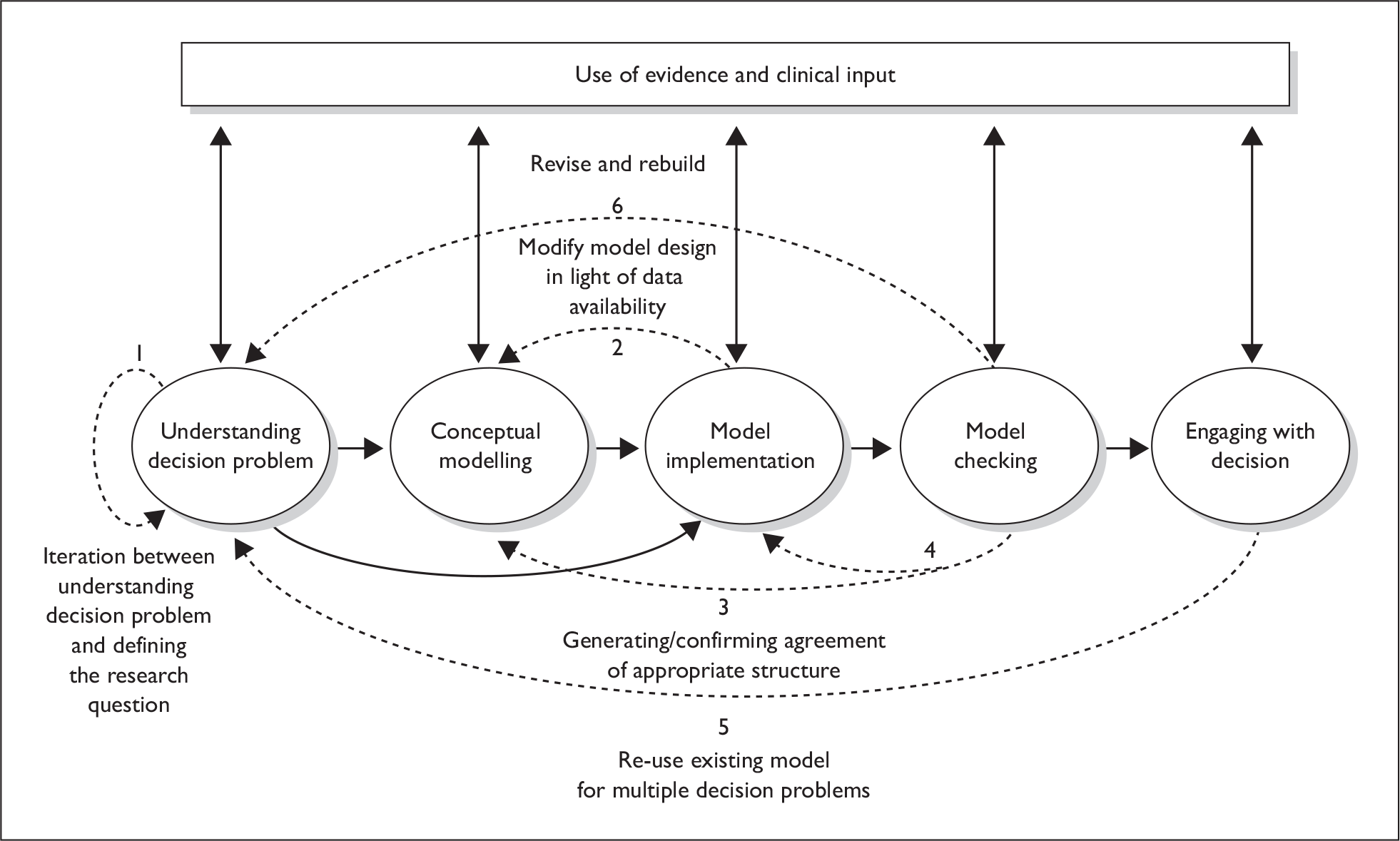

Figure 1 shows a stylised representation of the model development process generated through a synthesis of all interview transcripts and from the charted descriptions of the model development process elicited from the interviewees. The stylised model development process comprises five broad bundles of activities: understanding the decision problem, conceptual modelling, implementing the model within a software platform, checking the model, and finally engaging with the decision. It should be noted that these activities do not perfectly match the coding scheme used to undertake the Framework analysis. The general remit of these sets of activities is as follows.

FIGURE 1. Stylised model development process showing key iterations between sets of activities.

Understand the decision problem: This phase involves immersion in research evidence, definition of the research question, engaging with clinicians, engaging with decision-makers, understanding what is feasible, understanding what the decision-maker wants/needs and engaging with methodologists.

Conceptual modelling: It is commonplace to state that all mathematical models are built upon a conceptual model. At its most banal, it is impossible to implement a model without first conceiving the model. The key issue in the decision-making endeavour, reflected in the modelling process, is communication: specifically, the process of developing a shared understanding of the problem. Conceptual modelling is the process of sharing, testing, questioning and agreeing this formulation of the problem; it is concerned with defining the scope of a model and providing the inputs to the process of systems analysis and design associated with defining a solution to the problem. The scope of a model defines its boundary and depth, identifying the critical factors that need to be incorporated in the model. Models are necessarily simplifications of our understanding of a problem. The conceptual model allows a description of one’s understanding of the system that is broader than the description of the system captured in the model. An implemented model will therefore be a subset of a conceptual model. This hierarchy allows simplifications represented in the model to be argued and justified. This process of simplification is the process of determining relevance.

The arrow leading from ‘understanding the decision problem’ to ‘implementation model’ reflects the views of three interviewees who implied that they either do not build a conceptual model or that conceptualisation and implementation are simultaneous activities.

Implementation model: This phase involves the implementation of the model within a software platform.

Model checking: This stage includes all activities used to check the model, including engaging with clinical experts to check face validity, testing extreme values, checking logic and checking data sources. A detailed description of current model checking activities is presented in Chapters 6 and 7.

Engage with decision: This phase concerns the reporting and use of the model with and by the decision-maker.

Activities concerning the use of evidence, peer review and other clinical consultation may arise within any or all of the five model development phases.
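Two of the model checking activities listed above, checking logic and testing extreme values, can be illustrated with a small cohort model. The following is a minimal sketch under stated assumptions: the three health states, the transition probabilities and the function names are hypothetical and are not taken from any interviewee's model.

```python
def run_cohort(transition, start, n_cycles):
    """Propagate a cohort distribution through a Markov transition matrix."""
    state = list(start)
    n = len(state)
    for _ in range(n_cycles):
        state = [sum(state[i] * transition[i][j] for i in range(n))
                 for j in range(n)]
    return state


def rows_sum_to_one(transition, tol=1e-9):
    """Logic check: each row of transition probabilities must sum to 1."""
    return all(abs(sum(row) - 1.0) <= tol for row in transition)


# Hypothetical states: 0 = well, 1 = ill, 2 = dead (absorbing).
base = [[0.85, 0.10, 0.05],
        [0.00, 0.80, 0.20],
        [0.00, 0.00, 1.00]]
start = [1.0, 0.0, 0.0]

# Logic checks: valid probabilities and no one gained or lost from the cohort.
print(rows_sum_to_one(base))
print(abs(sum(run_cohort(base, start, 10)) - 1.0) < 1e-9)

# Extreme value test 1: if nobody can leave 'well', everyone should stay there.
frozen = [[1.0, 0.0, 0.0],
          [0.0, 0.8, 0.2],
          [0.0, 0.0, 1.0]]
print(run_cohort(frozen, start, 10))

# Extreme value test 2: if death is certain, everyone is dead after one cycle.
lethal = [[0.0, 0.0, 1.0],
          [0.0, 0.0, 1.0],
          [0.0, 0.0, 1.0]]
print(run_cohort(lethal, start, 1))
```

Checks of this kind test whether the implemented model behaves as the modeller expects under known conditions; they do not establish that the model structure is an appropriate representation of the decision problem.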

Several points are of note in the interpretation of the diagram. First, because the diagram was developed through a synthesis of all interview data, because respondents did not adopt a uniformly standard model development process, and because respondents discussed different activities to varying degrees of detail, the lines of demarcation between the main sets of activities were not entirely clear. Second, the diagram is intended to be descriptive rather than prescriptive; it describes current model development processes rather than making a normative judgement about how models should be developed. The dashed arrows in Figure 1 indicate significant iterations between key sets of model development activities. It should also be noted that each individual phase is likely to involve iterations within sets of activities, e.g. there may be several versions of an implementation model as it is being developed.

The iterative nature of developing mathematical models is well documented. The interview data suggest six key points of model iteration within the development process. The first substantial iteration (marked as number 1 on Figure 1) concerns developing an understanding of the decision problem; this may involve iterations in terms of striking a balance between what the analyst believes are the decision-maker’s needs and what is feasible within the project resources, and negotiations therein.

The second notable iteration (marked as number 2 on Figure 1) involves looping between the development of an explicit conceptual model and its implementation in a software platform. Several respondents highlighted the inevitable existence of a circular loop between these two sets of activities whereby the intended conceptual model structure is redefined in light of limited evidence available to populate that structure, and whereby the evidence requirements are redefined in light of the intended model structure.

The interview data suggest two further loops relating to iterations from the model checking stage to revising or refining the conceptual and/or implementation model (marked as numbers 3 and 4 on Figure 1). The interview data suggest that any of the large number of checking activities currently undertaken have the capacity to result in backwards iterations to earlier steps.

The fifth key iteration of note (marked as number 5 on Figure 1) concerns the use of an existing model for multiple decision problems. In such instances, there is a loop between the final and first stages of the process. Two respondents highlighted examples of this iteration: one in terms of the use of ‘global models’ in different decision-making jurisdictions, and one in terms of the ongoing development of independent models across multiple appraisals. As noted earlier (see Use of information in model development), several respondents discussed the use of existing models as the basis for developing a model structure. In such circumstances, there is a danger that adopting an existing model developed by another party and applying it to a new decision problem could effectively represent a loop back to a conceptual model without a comprehensive understanding of the decision problem.

The final iteration (marked as number 6 on Figure 1) loops from model checking to understanding the decision problem. The three respondents who highlighted that implementation and conceptualisation activities occur in parallel all indicated the possibility of revising and rebuilding the model from scratch. The remaining nine respondents did not raise this possibility. At the other extreme, one respondent indicated that there was no significant backwards iteration once the implementation model was under way, i.e. the conceptual model was not amended once agreed.

Discussion of model development process

The analysis presented within this chapter highlights considerable variation in modelling practice between respondents. This may be in part explained by the apparent variations in expertise, background and experience between the respondents. The key message drawn from the qualitative analysis is that there is a complete absence of a common understanding of a model development process. Although checklists and good modelling practice have been developed, these perhaps indicate a general destination of travel without specifying how to get there. This represents an important gap and should be considered a priority for future development.

The stylised model development process presented in Figure 1 represents a summary of all interviewees’ stated approaches to modelling. It should be noted that the diagram does not entirely represent the views of any single individual, but is sufficiently generalisable to discriminate between the views of each interviewee; in this sense, any modeller should be able to describe their own individual approach to model development through reference to this diagram. As noted above, one particularly apparent distinction that emerged from the qualitative synthesis was the presence or absence of explicit conceptual modelling methods within the model development process. Although it is difficult to clearly identify a typology on these grounds from a mere 12 interviewees, the qualitative data are indicative of such a distinction. Related to this point is the issue of the a priori specification of the model design and analysis, which represents the leap from the conceptual model to the implementation model. Some respondents did discuss the preparation of materials which go some way in detailing proposed conceptual models, platforms, data sources, model layout, checking activities and general analytical designs; however, the extent to which these activities are specified before implementation appears to be limited.

A common feature of modelling critiques that is reflected in the interviews is the statement that different teams can derive different models for the same decision problem. This arises from the largely implicit nature of the existing conceptual modelling processes described in the interviews. It is suggested that focusing on the process of conceptual modelling may provide the key to addressing this critique. Furthermore, the quality of a model depends crucially on the richness of the underlying conceptual model. Critical appraisal checklists of models all currently include bland references to incorporating all important factors in a disease yet do not address how these ‘important factors’ can be determined. It is the conceptual modelling process that is concerned with identifying and justifying what are considered important factors and what are considered minor or irrelevant factors for a decision model.

The conceptual modelling process is centrally concerned with communication, and this can be supported in many forms, including conversation, meeting notes, maps (causal, cognitive, mind etc.) and skeletal or pilot models. However, care needs to be taken to use methods that focus on formulating the problem rather than formulating the solution. 26

The description of the model development processes adopted by the interviewees within this chapter is directly used to inform the development of the taxonomy of model errors (see Chapter 5) and to provide a context for analysing strategies for identifying and avoiding model errors (see Chapters 6 and 7).

Chapter 4 Definition of an error

Overview

This chapter presents the description of key dimensions and characteristics of model errors and attempts to draw a boundary around the concept of ‘model error’, i.e. ‘what is it about a model error that makes it an error?’. The descriptive analysis highlighted both overt and subtle variations in respondents’ perceptions concerning what constitutes a model error. In seeking to explain, assign meaning and draw a boundary around the concept of model error, the respondents identified a variety of factors including:

- non-deliberate and unintended actions of modellers in the design, implementation and reporting of a model
- the extent to which the model is fit for purpose
- the relationship between simplifications, model assumptions and model errors
- the impact of error on the model results and subsequent decision-making.

The above factors are discussed in this chapter; the interview findings are then placed in the context of the literature on model verification and validation.

Key dimensions of model error

Table 2 presents the key characteristics and dimensions of model error as suggested by the 12 interview respondents (note: this has attempted to retain the natural language of respondents but does involve a degree of paraphrasing by the authors).

| Dimensions which are perceived to constitute a model error | Dimensions which are perceived not to constitute a model error |
|---|---|
| a model that is not fit for the purpose for which it was intended | software bugs |
| something that causes the model to produce the wrong result | unwritten assumptions that are never challenged |
| something that causes the model to lead to an incorrect interpretation of the results/answer | choices made on the grounds of evidence |
| an aspect of the model that is either conceptually or mathematically invalid | matters of judgement |
| failure to accord with accepted best practice conventions – not doing something that you should have done | inconsistencies with other reports |
| use of inappropriate assumptions that are arbitrary | simplifying assumptions that are explained |
| use of inappropriate assumptions that contradict strong evidence | use of simpler methodologies/assumptions |
| use of assumptions that the decision-maker does not own, do not feel comfortable with or cannot support | aspects of the model that do not influence the results |
| choices made on the grounds of convenience rather than evidence | small technical errors with limited impact |
| something that causes the model to produce unexpected results | overoptimistic interpretation of data |
| something you would do differently if you were aware of it | mistakes that are immediately corrected |
| an aspect of the model that is unambiguously wrong | reporting errors |
| an aspect of the model that does not make sense | |
| an aspect of the model that does not reflect the intention of the model builder for an unplanned reason | |
| a model that inappropriately approaches the scope or modelling technique | |
| an unjustified mismatch between what the process says should be happening and what actually happens | |
| an inappropriate relationship between inputs and outputs | |
| an implementation model that does not exactly replicate the conceptual model | |
| something that markedly changes the incremental cost-effectiveness ratio irrespective of whether it changes the conclusion | |
| something in the model that leads to the wrong decision | |
| a mistake at any point in the appraisal process – from decision problem specification to interpretation of results | |

Table 2 highlights a diverse set of characteristics of model errors as discussed by interviewees. Evidently, there is no clear consensus concerning what constitutes a ‘model error’. Conflicting views were particularly noticeable in factors concerning model design, for example model structures, assumptions and methodologies. The respondents’ explicit or implied construct of a model error appeared to be considerably broader than ‘technical errors’ relating to the implementation of the model.

Intention, deliberation and planning

The interview data strongly suggested a general consensus that model errors were unintended, unplanned or non-deliberate actions; instances whereby the modeller would have done things differently had they been aware of a certain aspect or underlying characteristic of the model; or instances whereby the model produced unexpected or inconsistent results. The unintended actions of the modeller and the non-deliberate nature of the modellers’ actions when developing models appeared to be central to the interviewees’ definitions of model error.

Extent to which the model is fit for purpose

A commonly cited dimension of a model error concerned the extent to which the model could be considered fit for purpose. In particular, respondents highlighted that a model could be unfit for its intended purpose in terms of the underlying conceptual model as well as the software implementation model, so representing distinct errors relating to model design as well as errors relating to the execution of the model. Respondents implied a relationship between the concept of model error and the extent to which a model is fit for purpose, suggesting that the presence of model errors represents a threat to the model’s fitness for purpose, whereas the development and use of a model that is unfit for its intended purpose is a model error in itself.

Several interviewees referred to the concepts of verification and validity as a means through which to define the concept of model error. Where interviewees used these terms there was a consensus regarding their definition, with verification referring to the question ‘is the model right?’ and validation referring to ‘is it the right model?’. Although there was general consensus that the term model error included issues concerning the verification of a model, there was less clarity about the relationship between model errors and validity. For instance, problems in the conceptualisation of the decision problem and the structure of the model were generally referred to as ‘inappropriate’ rather than ‘incorrect’, yet nonetheless these were described as model errors by some respondents.

I think it’s probably easier to know that you have made an error of verification where there’s clearly a bit of wrong coding or one cell has been indexed wrongly or the wrong data has been used for example which wasn’t intended. So those are all kinds of error that are all verification type errors. Errors in the model rather than it being the wrong model which is a much bigger area the area of validation and there people can argue and it can be open to debate.

[I4]

Further, it should be noted that one interviewee indicated that errors affecting the validity of the model, that is, errors in the description of the decision problem and conceptual model, potentially dwarfed technical errors that affected its verification.

…my belief is that if you spend…the majority of your time, as I say, getting the decision problem right, making sure you understand data and how that data relates to the decision problem and decision model, that…the errors of programming itself, those ones which are much more minor.

[I7]

A repeatedly stated dimension of model error that emerged from the interviews was the failure to adhere to best practice or to accord with current conventions. Alternative viewpoints included the notions of failure to do ‘the best you can do’ or failure to do ‘what you should do’. It was noted that in some instances this may be the result of a lack of skills. Further complexity was apparent in terms of the selection of modelling method and its relationship to model errors:

I don’t think I would class [it] as an error when someone says that…they developed this hugely complex…kind of Markov-type model, and then said they’d much rather have done it as a simulation.

[I11]

Simplification versus error

The qualitative synthesis suggested mixed views on the relationship between model assumptions and model errors. For example, one respondent appeared to hold a strong view that all inappropriate assumptions were model errors, highlighting the importance of the perspective of the stakeholder (or model user) in distinguishing between the two concepts:

…One man’s assumption is another man’s error…

[I3]

Other respondents highlighted a fine line between the necessary use of assumptions in model simplification and the introduction of errors into the model:

If you have an inaccuracy in the model that has no, or a trivial effect, then it’s not obvious to me where that stops being a simplification that you’ve made to make the decision problem tractable, and where it becomes an error,…something that’s inaccurate and we wish to avoid…that point would be where it starts to cause a risk that the model is giving a wrong answer, or is not addressing the question that you wanted it to.

[I6]
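The distinction drawn in this quotation, together with the Table 2 dimension concerning changes to the incremental cost-effectiveness ratio (ICER), can be illustrated with a minimal sketch. It assumes the standard definition of the ICER as incremental cost divided by incremental effect. All costs, quality-adjusted life-years (QALYs), the threshold and the function names are hypothetical and chosen purely for illustration; none is drawn from the interview data.

```python
def icer(cost_new, cost_old, qaly_new, qaly_old):
    """Incremental cost-effectiveness ratio: incremental cost per QALY gained."""
    delta_qaly = qaly_new - qaly_old
    if delta_qaly == 0:
        raise ValueError("ICER is undefined when incremental QALYs are zero")
    return (cost_new - cost_old) / delta_qaly


def is_cost_effective(ratio, threshold):
    """Simplified decision rule; assumes the new option is more costly and more effective."""
    return ratio <= threshold


THRESHOLD = 20_000  # hypothetical willingness-to-pay threshold per QALY

# Hypothetical 'intended' model outputs.
base = icer(cost_new=12_000, cost_old=8_000, qaly_new=6.25, qaly_old=6.0)

# Inaccuracy A: a small cost discrepancy; the ICER barely moves.
minor = icer(cost_new=12_100, cost_old=8_000, qaly_new=6.25, qaly_old=6.0)

# Inaccuracy B: QALY gain overstated; the ICER halves but the decision is unchanged.
marked = icer(cost_new=12_000, cost_old=8_000, qaly_new=6.5, qaly_old=6.0)

# Inaccuracy C: QALY gain understated; the ICER crosses the threshold.
decisive = icer(cost_new=12_000, cost_old=8_000, qaly_new=6.125, qaly_old=6.0)

for label, value in [("base", base), ("A", minor), ("B", marked), ("C", decisive)]:
    print(label, value, is_cost_effective(value, THRESHOLD))
```

Under these illustrative numbers, inaccuracy A might plausibly be regarded as a simplification with trivial effect, inaccuracy B markedly changes the ICER without changing the conclusion, and only inaccuracy C leads to a different decision. Where the boundary of ‘error’ falls among these cases is exactly the point on which respondents differed.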