Notes

Article history

The research reported in this issue of the journal was commissioned by the National Coordinating Centre for Research Methodology (NCCRM), and was formally transferred to the HTA programme in April 2007 under the newly established NIHR Methodology Panel. The HTA programme project number is 06/92/02. The contractual start date was in October 2007. The draft report began editorial review in November 2008 and was accepted for publication in June 2009. The commissioning brief was devised by the NCCRM, which specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None

Permissions

Copyright statement

© 2010 Queen’s Printer and Controller of HMSO. This journal may be freely reproduced for the purposes of private research and study and may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Introduction

Synthesis of published research is becoming increasingly important in providing relevant and valid research evidence to inform clinical and health policy decision-making. However, the validity of research synthesis based on published literature will be threatened if published studies comprise a biased selection of all studies that have been conducted. 1

A previous Health Technology Assessment (HTA) monograph published in 2000 comprehensively reviewed studies that provided empirical evidence of publication and related biases, and studies that developed or tested methods for preventing, reducing or detecting publication and related biases. 2 The review found evidence that studies with significant or favourable results were more likely to be published, or were likely to be published earlier, than those with non-significant or unimportant results. There was limited and indirect evidence indicating the possibility of full publication bias, outcome reporting bias, duplicate publication bias and language bias. The review identified little empirical evidence on the impact of publication and related biases on health policy, clinical decision-making and the outcome of patient management. Since the accessibility of research results (the dissemination profile) spans a spectrum from completely inaccessible to easily accessible, it was suggested that a single term, ‘dissemination bias’, could be used to denote all types of publication and related biases. 2

In the previous HTA report published in 2000, the available methods for dealing with dissemination biases were classified according to whether they could be applied before, during or after a literature review: measures to prevent publication bias before a literature review (e.g. prospective registration of trials), to reduce or detect publication and related biases during a literature review (e.g. locating grey literature or unpublished studies, and funnel plot related methods), and to minimise the impact of publication bias after a literature review (e.g. confirmatory large-scale trials, updating systematic reviews). 2 It was concluded that the ideal solution to publication bias is the prospective, universal registration of all studies at their inception. It was also concluded, although this is debatable, that the available statistical methods for detecting and adjusting for publication bias should be used mainly for sensitivity analysis. 2

Since the publication of the 2000 HTA report on publication bias, many new empirical studies on publication and related biases have been completed and published. For example, Egger et al. (2003) provided further empirical evidence on publication bias, language bias, grey literature bias and MEDLINE index bias,3 and Moher et al. (2003) evaluated language bias in meta-analyses of randomised controlled trials. 4 Recently, more convincing evidence on outcome reporting bias has been published. 5–7 The new empirical evidence may contradict or strengthen the empirical evidence included in the previous HTA report. There are also some new published studies that investigated methods for dealing with publication bias (e.g. references 8 and 9). More importantly, perhaps, new initiatives have been introduced to enhance the prospective registration of clinical trials. 10

This report aims to update the 2000 HTA report on publication bias by incorporating findings from newly identified studies. We first discuss the concepts and definitions of publication and related biases in this chapter. After a description of the review objectives and methods in Chapter 2, evidence from empirical studies on the existence and consequences of publication bias is summarised in Chapters 3 to 5. We discuss sources of publication bias in Chapter 6, and methods for dealing with publication bias are examined in Chapters 7 and 8. The results of a survey of systematic reviews published in 2006 are presented in Chapter 9. Finally, the major findings of this updated review are discussed in Chapter 10.

Definition of publication and related biases

The observation that many studies are never published was termed ‘the file-drawer problem’ by Rosenthal in 1979. 11 The importance of this problem depends on whether or not the published studies are representative of all studies that have been conducted. If the published studies are the same as, or a random sample of, all studies that have been conducted, there will be no bias and the average estimate based on the published studies will be similar to that based on all studies. If the published studies comprise a biased sample of all studies that have been conducted, the results of a literature review will be misleading. 12 The efficacy of a treatment will be exaggerated if studies with positive results are more likely to be published than those with negative results.

Publication bias is specifically defined as ‘the tendency on the parts of investigators, reviewers, and editors to submit or accept manuscripts for publication based on the direction or strength of the study findings’. 13 This definition involves two basic concepts: study findings and publication status. Study findings are commonly classified as statistically significant or non-significant. They may also be classified as positive or negative, supportive or unsupportive, favoured or disliked, and striking or unimportant. The classification of study findings often depends on subjective judgement and may be unreliable; for example, people may differ in what they regard as positive or negative findings.

The formats of publication include full publication in journals, presentation at scientific conferences, reports, book chapters, discussion papers, dissertations or theses. In fact, ‘publication is not a dichotomous event: rather it is a continuum’. 14 Although a study that appears in a full report in a journal is generally regarded as published, there may be different opinions about whether it should be classified as published or unpublished when results are presented in other formats.

The accessibility of research results is dependent not only on whether a study is published but also on when, where and in what format this occurs. In the 2000 HTA report on publication bias, we used the term ‘dissemination profile’ to describe the accessibility of research results, or the possibility of research findings being identified by potential users. The spectrum of the dissemination profile ranges from completely inaccessible to easily accessible, according to whether, when, where and how research is presented or stored. Dissemination bias occurs when the dissemination profile of a study is determined by its results. The term dissemination bias could be used to embrace publication bias and other related biases caused by time, type and language of publication, multiple publication, selective citation, database index, and biased media attention (see Box 1 for definitions).

BOX 1.

Dissemination bias: occurs when the dissemination profile of a study’s results depends on the direction or strength of its findings. The dissemination profile is defined as the accessibility of research results, or the possibility of research findings being identified by potential users. The spectrum of the dissemination profile ranges from completely inaccessible to easily accessible, according to whether, when, where and how research is published.

Publication bias: occurs when the publication of research results depends on the nature and direction of the results. Because of publication bias, the results of published studies may be systematically different from those of unpublished studies.

Outcome reporting bias: occurs when a study in which multiple outcomes were measured reports only those that were significant.

Time lag bias: occurs when the speed of publication depends on the direction and strength of the trial results. For example, studies with significant results may be published earlier than those with non-significant results.

Grey literature bias: occurs when the results reported in journal articles are systematically different from those presented in reports, working papers, dissertations or conference abstracts.

Full publication bias: occurs when the full publication of studies that were initially presented at conferences or in other informal formats depends on the direction and/or strength of their findings.

Language bias: occurs when the language of publication depends on the direction and strength of the study results.

Multiple publication bias (duplicate publication bias): occurs when studies with significant or supportive results are more likely to generate multiple publications than studies with non-significant or unsupportive results. Duplicate publication can be classified as ‘overt’ or ‘covert’. Multiple publication bias is particularly difficult to detect when it is covert, that is, when the same data are published in different places or at different times without sufficient information about previous or simultaneous publication.

Place of publication bias: in this review, this is defined as occurring when the place of publication is associated with the direction or strength of the study findings. For example, studies with positive results may be more likely to be published in widely circulated journals than studies with negative results. The term was originally used to describe the tendency for a journal to be more enthusiastic than other journals about publishing articles on a given hypothesis, for reasons of editorial policy or readers’ preference.

Citation bias: occurs when the chance of a study being cited by others is associated with its result. For example, authors of published articles may tend to cite studies that support their position. Thus, retrieving literature by scanning reference lists may produce a biased sample of articles, and citation bias may also render the conclusions of an article less reliable.

Database bias (indexing bias): occurs when there is biased indexing of published studies in literature databases. A literature database, such as MEDLINE or EMBASE, may not include and index all published studies on a topic. A literature search will be biased when it is based on a database in which the results of indexed studies are systematically different from those of non-indexed studies.

Media attention bias: occurs when studies with striking results are more likely to be covered by the media (newspapers, radio and television news).

The advantages of the term ‘dissemination bias’ are that it avoids the need to define publication status and that it relates more directly to accessibility than publication does. For example, media attention can have a major impact on dissemination, but it is not normally included within the definition of publication bias. With the development of information technology and changes in regulations and policy, data from some ‘unpublished’ studies may be readily accessible to the public, and formal publication in journals is only one of several ways to disseminate research findings. Therefore, ‘dissemination bias’ is a better expression than the broad use of the term ‘publication bias’. However, the terms ‘publication bias’ and ‘publication and related biases’ are already established in the research literature and will also be used in this report.

Chapter 2 Review objectives and methods

The current review is an update of a previous Health Technology Assessment (HTA) report. 2 This review is divided into two parts: a review of empirical and methodological studies on publication and related biases, and a survey of publication bias in a sample of published systematic reviews.

Objectives

- To identify relevant evidence studies published since 1998. Evidence studies are defined as those that provide empirical evidence on the existence, consequences, causes and risk factors of dissemination bias.
- To identify relevant method studies published since 1998. Method studies are those that have developed or investigated methods for preventing, reducing or detecting dissemination bias.
- To categorise the evidence and method studies identified according to a conceptual framework of dissemination profile, and to critically appraise studies that provided direct empirical evidence.
- To synthesise findings from newly identified and previously included studies in order to assess whether each type of dissemination bias exists and, if so, the extent of its effect on the results of systematic reviews and hence on decision-making.
- To assess the usefulness and limitations of available methods through synthesis of the methodological studies.
- To examine the measures taken in a representative sample of published systematic reviews to prevent, reduce and detect different types of dissemination bias. We included both narrative and quantitative (meta-analytic) systematic reviews that evaluated the effects of health-care interventions, systematic reviews that evaluated the accuracy of diagnostic tests, systematic reviews that evaluated the association between genes and disease, and systematic reviews of epidemiological studies that evaluated the association between risk factors and health outcomes.
- To bring together current evidence on the existence and scale of each type of dissemination bias, the effects of methods to combat these biases, and the current use of these methods, in order to develop recommendations for reviewers, policy-makers and health professionals.

Review of empirical and methodological studies

Criteria for inclusion

We included evidence studies and method studies in biomedical or health-related research. Evidence studies are those that provided empirical evidence on the existence, consequences, causes and/or risk factors of types of dissemination bias; method studies are those whose main objective was to develop or evaluate methods for preventing, reducing or detecting dissemination bias. In some cases, a study may be considered both an evidence study and a method study.

Literature search strategy

The following health-related or biomedical bibliographic databases were searched to identify relevant studies pertaining to empirical evidence and methodological issues concerning publication and related biases: MEDLINE, the Cochrane Methodology Register Database (CMRD), EMBASE, AMED and the Cumulative Index to Nursing and Allied Health Literature (CINAHL). The strategies used to search these electronic databases are presented in Appendix 1. The period searched was from 1998 to August 2008. A further search of PubMed (2008 to 2009), PsycINFO (1998 to 2009) and OpenSIGLE (1998 to 2009) was carried out in May 2009 by one reviewer to identify relevant published or grey literature studies. References (titles with or without abstracts) gathered by searching MEDLINE and CMRD were independently examined by two reviewers. References from other databases were assessed by one reviewer because they were mostly duplicates of those from MEDLINE.

Literature searches for methodological studies are often difficult because of ill-defined boundaries and inappropriate indexing in commonly used bibliographic databases. 15 In addition, a large number of relevant issues needed to be considered in this methodological review. It is therefore possible that relevant studies were missed by formal searches of electronic databases. To identify additional relevant studies, we adopted an iterative approach to the literature search, examining the reference lists of retrieved studies and the citations of identified key studies. More focused database searches were also conducted during the review of specific issues.

Classification of identified relevant studies

According to findings from the previous HTA report,2 the relevant evidence and method studies were numerous and substantially diverse in quality. To facilitate subsequent assessment and synthesis, the identified studies were classified according to the framework shown in Figure 1.

FIGURE 1.

Classification of identified relevant studies on publication and related biases.

The identified studies were initially classified by one reviewer as evidence or method studies. Empirical evidence studies were further subcategorised into various types of dissemination bias according to a framework of dissemination profile: non-publication (never or delayed); incomplete publication (outcome reporting or abstract bias); limited accessibility to publication (grey literature, language or database bias); other biased dissemination (citation, duplicate or media attention bias). Some studies were included in more than one category.

The evidence studies were separated into two groups: direct and indirect evidence studies. Direct evidence referred to findings that could be used directly to indicate dissemination bias, including admissions of bias on the part of those involved in the publication process, comparisons of the results of published and unpublished studies, and the prospective and retrospective follow-up of the dissemination profile of cohorts of studies. Indirect evidence referred to findings that may be related to dissemination bias but for which alternative explanations could not be completely excluded. The availability of empirical evidence varies considerably across types of dissemination bias. This updated review focused on direct evidence, although indirect evidence was also considered when direct evidence was limited or absent.

The initial search of the electronic databases yielded a total of 1353 records, with much duplication, many studies being indexed in several different databases. These search results were assessed by one reviewer and 705 potentially relevant articles were identified. These studies were then independently assessed by two reviewers based on their abstracts. Finally, 300 studies were included, of which 109 were classified as evidence studies, 52 as method studies and 9 as both evidence and methods. The remaining studies were classified as background or other studies.
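The selection counts above can be tallied as a quick consistency check. This is a minimal sketch that assumes the three study categories (evidence only, method only, both) do not overlap; the derived figure of 130 background or other studies is arithmetic on the reported numbers, not a count stated in the report.

```python
# Study-selection counts as reported in the text
records_retrieved = 1353     # initial database search (duplicates included)
potentially_relevant = 705   # identified by one reviewer from the search results
included = 300               # included after independent assessment by two reviewers

# Assumption: these three categories are mutually exclusive
evidence_only = 109
method_only = 52
evidence_and_method = 9

# Studies contributing to each strand of the review
evidence_total = evidence_only + evidence_and_method
method_total = method_only + evidence_and_method

# Included studies classified as neither evidence nor method studies
background_or_other = included - (evidence_only + method_only + evidence_and_method)

print(f"evidence studies: {evidence_total}")
print(f"method studies: {method_total}")
print(f"background or other: {background_or_other}")
```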

Data extraction and synthesis

We planned to use a quality assessment checklist (Appendix 2) to critically appraise studies that provided empirical evidence. However, this proved extremely difficult because the checklist had poor reliability: different reviewers often disagreed about the overall quality of studies. Quality assessment was made more difficult still because the designs and objectives of the relevant studies in this review were highly diverse. Given the very limited time available, we decided not to apply the checklist. We did, however, try to identify and summarise the main limitations of studies that provided empirical evidence on publication bias, although this assessment of study validity was less systematic than specified in the protocol.

Initially, data from the included studies were independently extracted by two reviewers using separate data extraction forms for empirical and methodological studies (Appendix 2 and Appendix 3), and any disagreements were resolved by consensus. However, we found that the data extracted using these forms were often insufficient, and that extracting data directly into study tables was more flexible and efficient. To save time, one reviewer therefore extracted data directly into tables, which were checked by a second reviewer.

Findings from the newly identified studies and the previously identified studies2 are summarised to assess each type of publication bias and the impact of these biases on the results of systematic reviews and, consequently, on decision-making. Evidence and method studies were synthesised narratively. Where judged appropriate, the results have been pooled quantitatively (e.g. the odds ratio of full publication of studies according to results). Heterogeneity across studies within each subgroup was measured using the I² statistic. 16 Meta-analyses were carried out using Review Manager (RevMan Version 5.0. Copenhagen: The Nordic Cochrane Centre, The Cochrane Collaboration, 2008).
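The I² statistic is derived from Cochran's Q as I² = max(0, (Q − df)/Q), where df is the number of studies minus one. The sketch below assumes inverse-variance fixed-effect pooling of log odds ratios; the report does not say which pooling model was used in RevMan, and the function names and example data are hypothetical.

```python
import math

def pooled_log_or_fixed(log_ors, ses):
    """Inverse-variance fixed-effect pooling of log odds ratios.

    Returns (pooled log OR, standard error of the pooled log OR, Cochran's Q).
    """
    weights = [1.0 / se ** 2 for se in ses]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_ors)) / total_weight
    se_pooled = math.sqrt(1.0 / total_weight)
    # Cochran's Q: weighted squared deviations from the pooled estimate
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_ors))
    return pooled, se_pooled, q

def i_squared(q, k):
    """Higgins' I^2 as a percentage, with df = k - 1; truncated at 0."""
    df = k - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0

# Hypothetical example: two studies with log ORs 0.0 and 2.0, each with SE 1.0
pooled, se, q = pooled_log_or_fixed([0.0, 2.0], [1.0, 1.0])
print(f"pooled OR = {math.exp(pooled):.2f}, Q = {q:.2f}, I² = {i_squared(q, 2):.1f}%")
```

For the two hypothetical studies, Q = 2 on 1 degree of freedom, so I² = 50%: half of the observed variation is attributed to heterogeneity rather than chance.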

Assessment of a sample of published reviews

In the previous HTA report, 193 systematic reviews taken from the Database of Abstracts of Reviews of Effectiveness (DARE) were used to identify further evidence of dissemination bias and to illustrate the methods used in systematic reviews for dealing with publication bias. However, our previous assessment had several shortcomings. Firstly, systematic reviews included in DARE might on average have been of better quality than those in general bibliographic databases (such as MEDLINE), so the representativeness of the systematic reviews assessed in the previous HTA report was questionable. Secondly, 91% of the systematic reviews evaluated the effectiveness of health-care interventions and 9% evaluated the accuracy of diagnostic technologies, but the two types were not assessed separately, although the problem of dissemination bias might differ between them. Thirdly, the previous HTA report included neither reviews of epidemiological studies of the association between risk factors and health outcomes nor reviews of studies of the association between genes and diseases.

To overcome these shortcomings, in the current updated review we obtained a representative sample of systematic reviews from the general bibliographic database MEDLINE and assessed them separately as (1) systematic reviews of studies on the effects of health-care interventions (treatment reviews), (2) systematic reviews of epidemiological studies on the association between risk factors and health outcomes (epidemiological reviews), (3) systematic reviews of genetic studies on the association between genes and disease (genetic reviews), and (4) systematic reviews of studies on the accuracy of diagnostic tests (diagnostic reviews). We also assessed a sample of systematic reviews that tested for publication bias.

Identifying and sampling of reviews

A search of MEDLINE for ‘systematic review’ or ‘meta-analysis’ (in titles or abstracts) identified 3503 English-language references published in 2006 and 2007. In this updated review, any published literature review of primary studies that reported its literature search methods was considered a systematic review; editorials, letters and reviews of reviews were excluded. The references were assessed by one reviewer to identify systematic reviews. The identified systematic reviews (n = 2481) were then categorised by one reviewer into four categories (treatment effect, diagnostic accuracy, risk factor and gene-disease association reviews), and the categorisation was checked by another reviewer. The 2481 reviews comprised 1448 treatment reviews, 251 diagnostic reviews, 598 epidemiological reviews and 184 genetic reviews. Computer-generated random numbers were then used to select a random sample of 100 systematic reviews of treatment effects, 50 reviews of studies of diagnostic accuracy, 100 reviews of epidemiological studies and 50 reviews of gene-disease associations.
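The report states only that computer-generated random numbers were used. A minimal sketch of an equivalent draw is shown below, assuming that the reviews in each category are numbered sequentially and sampled without replacement; the numbering scheme, function name and seed are hypothetical.

```python
import random

# Number of systematic reviews identified in each category (reported in the text)
POPULATION = {
    "treatment": 1448,
    "diagnostic": 251,
    "epidemiological": 598,
    "genetic": 184,
}

# Number of reviews sampled from each category (reported in the text)
SAMPLE_SIZES = {
    "treatment": 100,
    "diagnostic": 50,
    "epidemiological": 100,
    "genetic": 50,
}

def draw_stratified_sample(population, sample_sizes, seed):
    """Draw a simple random sample without replacement within each category.

    Reviews are identified by hypothetical sequence numbers 1..N within
    their category; fixing the seed makes the draw reproducible.
    """
    rng = random.Random(seed)
    return {
        category: sorted(rng.sample(range(1, n + 1), sample_sizes[category]))
        for category, n in population.items()
    }

# The seed value is arbitrary and purely illustrative
samples = draw_stratified_sample(POPULATION, SAMPLE_SIZES, seed=20060101)
for category, ids in samples.items():
    print(category, len(ids), ids[:5])
```

Recording the seed lets another reviewer regenerate exactly the same sample. The same function could draw the sample of 50 reviews that tested for publication bias once the number of eligible reviews is known.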

For the assessment of reviews that explicitly considered or tested for publication bias, we used a restrictive search strategy limited to systematic reviews or meta-analyses that tested for publication bias and were published in English from 2000 to 2008 (Appendix 1). The search was conducted in August 2008 and identified 204 potentially relevant reviews. These were assessed by one reviewer to identify the reviews that tested for publication bias, and computer-generated random numbers were then used to select a random sample of 50 such reviews.

Data extraction and analysis

Using a data extraction form (Appendix 4, slightly revised according to type of review), two reviewers independently extracted data from the included systematic reviews. Any disagreements between the two reviewers were resolved by discussion or by scrutiny from a third reviewer. A pre-derived scoring system for judging the efforts taken to reduce publication bias and the risk of publication bias was tested by assessing the degree of agreement between the two reviewers. According to the measures taken to deal with publication and related biases in a systematic review, efforts to minimise publication bias were judged to be ‘sufficient’, ‘partially sufficient’ or ‘insufficient’, and the risk of publication bias was correspondingly considered to be ‘low’, ‘moderate’ or ‘high’ (see Chapter 9 for more details).
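The report does not name the statistic used to measure agreement between the two reviewers. One common choice for categorical judgements is Cohen's kappa, which corrects the observed proportion of agreement for the agreement expected by chance. The sketch below assumes that choice, and the ratings in the example are invented for illustration.

```python
from collections import Counter

def cohens_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two raters judging the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed proportion of items on which the two raters agree
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1.0:
        # Both raters used one and the same category for every item
        return 1.0
    return (observed - expected) / (1.0 - expected)

# Hypothetical risk-of-publication-bias judgements for six reviews
reviewer_1 = ["low", "low", "moderate", "high", "high", "moderate"]
reviewer_2 = ["low", "moderate", "moderate", "high", "high", "low"]
print(f"kappa = {cohens_kappa(reviewer_1, reviewer_2):.2f}")
```

In this invented example the reviewers agree on four of six reviews (observed agreement 0.67) against chance agreement of 0.33, giving kappa = 0.5. Because ‘low’, ‘moderate’ and ‘high’ are ordered, a weighted kappa that penalises low-versus-high disagreements more heavily could be a reasonable alternative.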

Data were extracted separately from systematic reviews of the effects of health-care interventions, systematic reviews of epidemiological studies, systematic reviews of genetic studies and systematic reviews of studies on the accuracy of diagnostic tests. Each category of systematic reviews was analysed separately and the categories were then compared. Within each category, the methods used for identifying and preventing or reducing publication and related biases were examined and compared. Data from the reviews that tested for publication bias were assessed separately to identify the methods most commonly used to test for publication bias and the risk of publication bias in such reviews. The findings are synthesised and discussed in detail in Chapter 9. Systematic reviews of the effects of health-care interventions and systematic reviews of diagnostic accuracy assessed in the previous HTA report were also compared with the present findings to examine whether the reporting and handling of dissemination bias have improved over time.

Chapter 3 Evidence from cohort studies of publication bias

Evidence of publication bias can be classified as direct or indirect. 17 Indirect evidence includes observations of a disproportionately high percentage of positive findings in the published literature, and of larger effect sizes in small studies than in large studies. This evidence is indirect because factors other than publication bias may also lead to the observed disparities. The existence of publication bias was first suspected by Sterling in 1959, after he observed that 97% of studies published in four major psychology journals reported statistically significant results. 18 In 1995, the same author concluded that the practices leading to publication bias had not changed over a period of 30 years. 19

Direct evidence includes the admissions of bias on the part of those involved in the publication process (investigators, referees or editors), comparison of the results of published and unpublished studies, and the follow-up of cohorts of registered studies. 2 The 2000 HTA report on publication bias included both direct and indirect evidence. Because of the large amount of new direct evidence, this updated review focuses on direct evidence from empirical studies, although indirect evidence is also considered when direct evidence is limited. Surveys of authors and investigators that provide evidence on publication bias are included in Chapter 6 (sources of publication bias).

This section includes all empirical studies that tracked a cohort of studies before their formal publication and reported the rate of publication by study results. Cohort studies on time to publication and selective outcome reporting are reviewed later. The included cohort studies of publication bias were classified into four subgroups according to the starting point of follow-up: inception cohort studies, regulatory cohort studies, abstract cohort studies and manuscript cohort studies. A study that followed up a cohort of research projects from their inception (even if retrospectively) was termed an inception cohort study. A regulatory cohort study is one that examined the formal publication of research submitted to regulatory authorities. An abstract cohort study is one that investigated the subsequent full publication of abstracts presented at conferences. A manuscript cohort study is one that followed manuscripts submitted to journals. Primary studies included in cohort studies of publication bias may be clinical trials, observational studies or basic research.

Publication of a study was usually defined as full publication in a journal. However, study results were categorised differently across the included cohort studies. In this review, study results were classified as ‘statistically significant (p ≤ 0.05)’ versus ‘non-significant (p > 0.05)’, or as ‘positive’ versus ‘non-positive’. Positive results included those described as ‘positive’, ‘favourable’, ‘significant’, ‘important’, ‘striking’, ‘showed effect’ and ‘confirmatory’. Non-positive results were those labelled as ‘negative’, ‘non-significant’, ‘less or not important’, ‘invalidating’, ‘inconclusive’, ‘questionable’, ‘null’ and ‘neutral’.
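The two-way classification described above can be expressed as a simple lookup. This sketch assumes that each included cohort study reports its result either as a p value or as one of the verbal labels listed in the text; the function names are hypothetical, and labels outside the lists are flagged for a reviewer rather than guessed.

```python
# Verbal labels treated as positive or non-positive in this review (from the text)
POSITIVE_LABELS = {
    "positive", "favourable", "significant", "important",
    "striking", "showed effect", "confirmatory",
}
NON_POSITIVE_LABELS = {
    "negative", "non-significant", "less or not important", "invalidating",
    "inconclusive", "questionable", "null", "neutral",
}

def classify_p_value(p, alpha=0.05):
    """Dichotomise a p value: significant if p <= alpha, as defined in the text."""
    if not 0.0 <= p <= 1.0:
        raise ValueError(f"p value out of range: {p}")
    return "significant" if p <= alpha else "non-significant"

def classify_label(label):
    """Map a verbal result label to 'positive' or 'non-positive'."""
    key = label.strip().lower()
    if key in POSITIVE_LABELS:
        return "positive"
    if key in NON_POSITIVE_LABELS:
        return "non-positive"
    # Unlisted labels need a reviewer's judgement rather than a default
    raise ValueError(f"unrecognised result label: {label!r}")

print(classify_p_value(0.05), classify_label("Confirmatory"), classify_label("null"))
```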

Inception cohort studies

Five cohort studies were included in the 2000 HTA report. 20–24 Cohorts of research protocols approved by Research Ethics Committees (REC), Institutional Review Boards (IRB) or registered by research sponsors were followed up to investigate factors associated with subsequent publication. The study by Ioannidis included randomised controlled trials (RCTs) conducted by two groups of trialists [sponsored by the National Institutes of Health (NIH) from 1986 to 1996] and was focused mainly on time lag bias. 23 The rate of publication ranged from 60% to 98% for studies with statistically significant results and from 20% to 85% for studies with statistically non-significant results. Dickersin (1997) combined the results from four cohort studies20–22,24 and found that the pooled adjusted odds ratio (OR) for publication bias (publication of studies with significant or important results versus those with unimportant results) was 2.54 (95% CI: 1.44 to 4.47). 25
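Pooled estimates such as Dickersin's are reported as an odds ratio with a 95% confidence interval. Assuming the interval was computed on the log scale as log(OR) ± 1.96 × SE, which is usual for odds ratios but not stated here, the standard error can be recovered for use in further pooling. The sketch below applies this to the reported values; the function name is hypothetical and the p value is a normal approximation, not a figure from the source.

```python
import math

def log_or_and_se_from_ci(odds_ratio, lower, upper, z=1.96):
    """Recover log(OR) and its standard error from a reported confidence interval.

    Assumes a symmetric interval on the log scale: log(OR) +/- z * SE.
    """
    log_or = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return log_or, se

# Pooled adjusted OR reported by Dickersin (1997): 2.54 (95% CI: 1.44 to 4.47)
log_or, se = log_or_and_se_from_ci(2.54, 1.44, 4.47)
z_stat = log_or / se
p_two_sided = math.erfc(abs(z_stat) / math.sqrt(2))  # normal approximation

# Check: on the log scale, the point estimate should sit near the CI midpoint
midpoint = (math.log(1.44) + math.log(4.47)) / 2
print(f"log OR = {log_or:.3f}, SE = {se:.3f}, z = {z_stat:.2f}, p = {p_two_sided:.4f}")
print(f"CI midpoint on log scale = {midpoint:.3f}")
```

Here the standard error is about 0.29, and the point estimate lies close to the midpoint of the log-scale interval, which is consistent with the symmetry assumption.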

The updated review included seven additional inception cohorts that provided data on the rate of publication according to study results (Appendix 5). 26–32 Seven other inception cohort studies were excluded because they did not examine the association between publication and study results. 33–39

The cohort study by Bardy (1998) included 188 of the 274 drug trials notified to the Finnish National Agency in 1987. 26 Study results were classified as positive if the risk-benefit ratio was in favour of the drug under investigation, or if the objective of the study was supported. Results were considered inconclusive if the risk-benefit assessment was inconclusive or if the study was non-comparative, whereas studies were judged negative if the risk-benefit ratio was not in favour of the drug or the drug was no different from placebo. The rate of publication was 47% for positive results, 33% for inconclusive results and 11% for negative results. 26

Cronin and Sheldon (2004) sent a questionnaire to the project leaders of 101 projects sponsored by the UK NHS research and development (R&D) programme to obtain information on study findings and publication status. 27 Study results were defined using the method suggested by Dickersin and Min.40 Studies were categorised as having ‘showed (an) effect’ or not, depending on whether results were statistically significant (p < 0.05) or considered to be of great importance. The rate of publication of studies with statistically significant or important results did not differ statistically significantly from that of studies with non-significant or non-important results (76% versus 64%). 27

Two cohort studies by Decullier and colleagues (2005, 2006) followed up biomedical research protocols approved by French RECs in 1994 and 1997. 28,29 In one of the two French studies, the results of 501 completed studies were classified by the original investigators as confirmatory, invalidating or inconclusive (see Appendix 5 for details). 28 The rate of publication of studies with confirmatory results was higher than that of studies with inconclusive results (OR = 4.59; 95% CI: 2.21 to 9.54). 28 In the other French study, of 47 completed studies, investigators rated the importance of their results subjectively from 1 to 10, and important results were those graded > 5. 29 The rate of publication was 70% for studies with important results and 60% for those with less important results (OR = 1.58; 95% CI: 0.37 to 6.71). 29

Misakian and Bero identified a cohort of 61 passive smoking research projects sponsored by 76 organisations between 1981 and 1995. 30 Semi-structured telephone interviews with investigators were carried out to verify study results and publication status. Study results were classified as statistically significant or statistically non-significant, and a ‘mixed’ result referred to a situation in which at least one of multiple primary outcomes was statistically significant. The rate of publication was 85% for statistically significant results, 86% for non-significant results and only 14% for mixed results. 30

The publication status of 68 RCTs processed through the pharmacy department of an eye hospital since 1963 was examined by Wormald et al. 31 This study was published only as a conference abstract; additional data were provided in Dwan et al. 41 The rate of publication was 93% for statistically significant results and 71% for non-significant results.

Zimpel and Windeler investigated the subsequent publication of 140 medical theses on complementary medicine. 32 Results were classified as positive or non-positive (the classification is somewhat unclear because the article was published in German). Publication status was tracked by searching MEDLINE and through personal communication with authors or supervisors. The rate of publication was 40% for positive results and 28% for non-positive results. 32

Regulatory cohort studies

No regulatory cohort studies of publication bias were included in the previous HTA report. In this updated review we identified four regulatory cohort studies that examined the formal publication of clinical trials submitted to regulatory authorities (Appendix 6). 42–45 Of these, two did not specify clinical fields42,44 and two focused on antidepressants. 43,45 One further study was excluded because the association between journal publication and study results was not reported. 46

Melander et al. conducted a study of 42 randomised placebo-controlled trials of five antidepressants submitted by industry to the Swedish drug regulatory authority for marketing approval. 43 Studies were classified according to whether the test drug was significantly more effective than placebo on the primary outcome. The publication status of the trials (including stand-alone, pooled or multiple publications) was investigated by searching electronic bibliographic databases and contacting the companies. All 21 studies with significant results were published (stand-alone or pooled), whereas only 81% of studies with non-significant results were published. 43

Turner et al. examined 74 clinical trials of 12 antidepressant agents submitted to the US Food and Drug Administration (FDA) between 1987 and 2004. 45 Trial results were classified as positive, questionable or negative according to the FDA’s regulatory decisions. Publication status of trials was determined by searching literature databases and contacting trial sponsors. The rate of publication was 97% for studies with positive results, 50% for studies with questionable results, and 33% for studies with negative results. 45

In a study by Lee et al., formal publication of 909 trials supporting 90 new drugs approved by the FDA between 1998 and 2000 was verified by searches of PubMed and other databases. 42 A statistically significant primary outcome was defined as p < 0.05 or a CI excluding no difference. For equivalence or non-inferiority trials, a statistically significant result was defined as ‘p > 0.05 or a CI including no difference or a CI excluding the pre-specified difference described in the trial’. 42 The rate of formal publication was higher for trials with significant results than for those with non-significant results (66% versus 36%).

Similarly, a more recently published study included all efficacy trials supporting new drug applications approved by the FDA from 2001 to 2002. 44 Favourable results were those that were significantly (p < 0.05) in favour of the new drug or that confirmed equivalence in non-inferiority trials. Trials with favourable results were more likely to be published than trials with non-favourable or unknown results (82% versus 64%). 44

Cohorts of meeting abstracts

In the 2000 HTA report on publication bias,2 we identified eight reports that examined the association between study results and the subsequent full publication of research initially presented as abstracts in meetings or journals. 47–54 A Cochrane Methodology Review included 79 studies of the subsequent full publication of biomedical research results initially presented as abstracts or in summary form. 55 Sixteen of the 79 studies reported data on the rate of publication by significance or importance of study results. Our updated search identified 22 additional cohort studies of research abstracts that provided data on publication bias (for details of all these studies, see Appendix 7). 56–77 Almost all of the 30 cohort studies of conference abstracts were restricted to a specific clinical field, such as emergency medicine, anaesthesiology, perinatal medicine, cystic fibrosis or oncology. The rate of full publication of meeting abstracts ranged from 37% to 81% for statistically significant results, and from 22% to 70% for non-significant results.

Manuscript cohort studies

We identified four studies of cohorts of manuscripts submitted to journals (Appendix 8). 78–81 Two studies examined manuscripts submitted to general medical journals [Journal of the American Medical Association (JAMA), British Medical Journal (BMJ), The Lancet and Annals of Internal Medicine]78,81 and two used manuscripts submitted to the Journal of Bone and Joint Surgery (American Version). 79,80 The study results of submitted papers were classified according to the significance of statistical tests (p < 0.05 or not) in the two studies of manuscripts submitted to general medical journals. 78,81 In the studies of manuscripts submitted to the Journal of Bone and Joint Surgery, results were classified as being positive or negative or neutral, although the definitions of these outcomes may be different between the two studies (Appendix 8). 79,80 Results from these studies suggested that the acceptance of submitted papers for publication by journals was not significantly associated with the direction or strength of their findings.

In the study by Olson et al.,81 133 accepted manuscripts were examined further, and time to publication was not associated with statistical significance (median 7.8 months for positive and 7.6 months for negative results, p = 0.44). 82 However, in a subgroup analysis of 156 manuscripts with a high level of evidence (level I or II), Okike et al. found that the acceptance rate was significantly higher for studies with positive or neutral results than for studies with negative results (37%, 36% and 5%, respectively; p = 0.02). 80

These manuscript cohort studies were generally well designed and conducted. Although no conflicts of interest were declared, studies of this kind necessarily require the support of, or collaboration with, the editors of the journals concerned. In prospective studies, editors’ decisions on the acceptance of manuscripts may be influenced by their awareness of the ongoing study. 81

Pooled analyses of cohort studies

Results from different studies of publication bias have been quantitatively combined in previous reviews,25,55,83 although such pooling remains controversial because of heterogeneity across individual studies. 41 Pooled estimates may improve statistical power and the generalisability of results. In this review, the association between study results and the likelihood of subsequent publication was measured using odds ratios (ORs). Heterogeneity across studies within each subgroup was measured using the I² statistic. 16 A random-effects model was used in the meta-analyses.
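The report does not state which random-effects estimator was used. As a minimal sketch, assuming the widely used DerSimonian-Laird method, the pooling step and the I² statistic can be computed from each study's log odds ratio and its within-study variance. The function name `dersimonian_laird` and its inputs are hypothetical.

```python
import math


def dersimonian_laird(log_ors, variances, z=1.959964):
    """Random-effects pooling of log odds ratios (DerSimonian-Laird tau^2).

    Returns the pooled OR, its 95% CI, tau^2, Cochran's Q and I^2 (in %).
    """
    k = len(log_ors)
    if k != len(variances) or k < 2:
        raise ValueError("need at least two studies with matching variances")
    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, log_ors)) / sw
    # Cochran's Q and the DerSimonian-Laird between-study variance tau^2.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, log_ors))
    df = k - 1
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)
    # I^2: share of total variation attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    # Random-effects weights add tau^2 to each within-study variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, log_ors)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {
        "or": math.exp(pooled),
        "ci": (math.exp(pooled - z * se), math.exp(pooled + z * se)),
        "tau2": tau2,
        "q": q,
        "i2": i2,
    }
```

For example, two hypothetical studies with log ORs of 0 and 2 and variances of 0.1 each give τ² = 1.9, I² = 95% and a pooled OR of e (about 2.72). A p value for Cochran's Q, of the kind reported alongside I² in Table 1, could be obtained with `scipy.stats.chi2.sf(q, k - 1)`.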

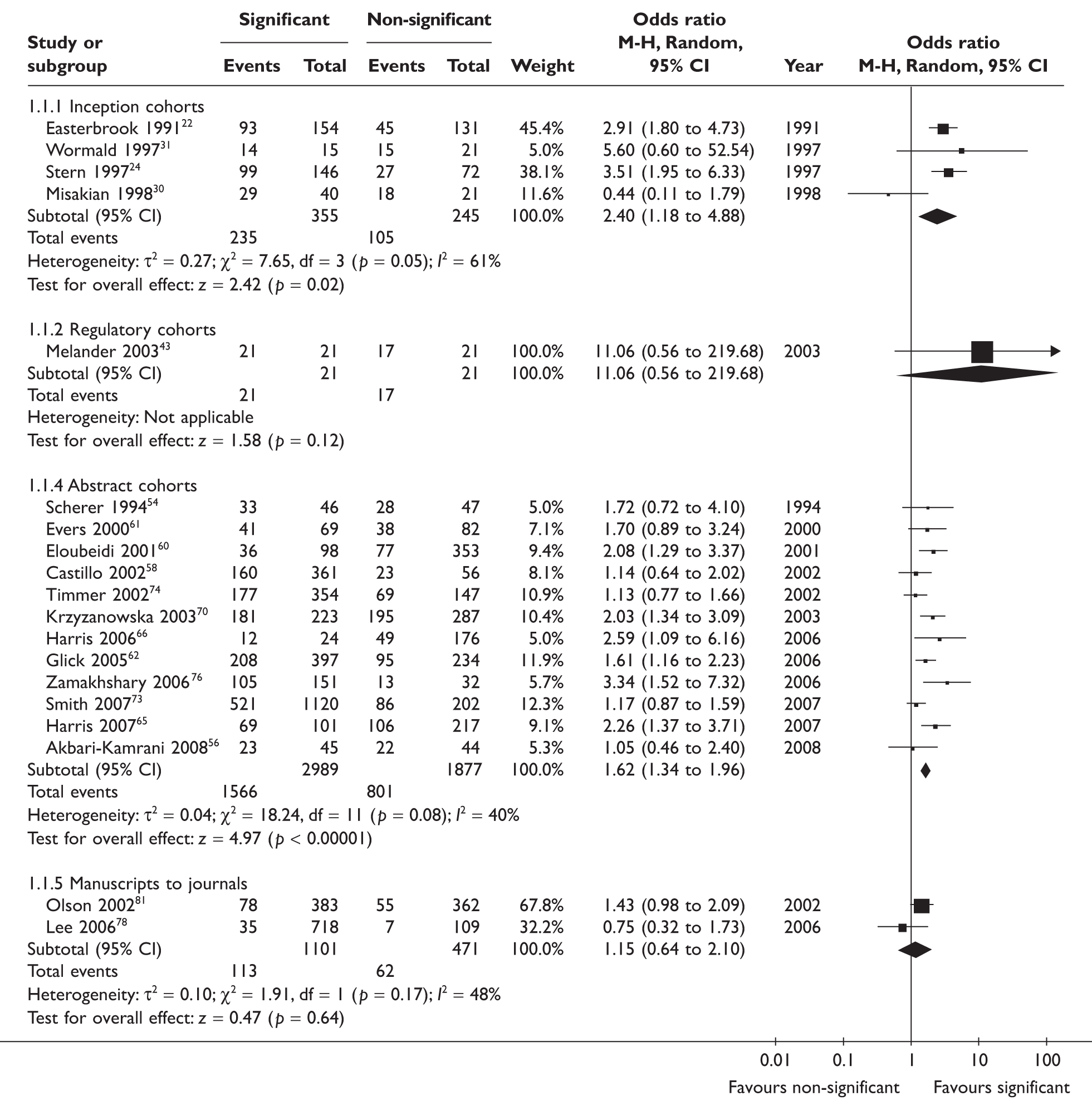

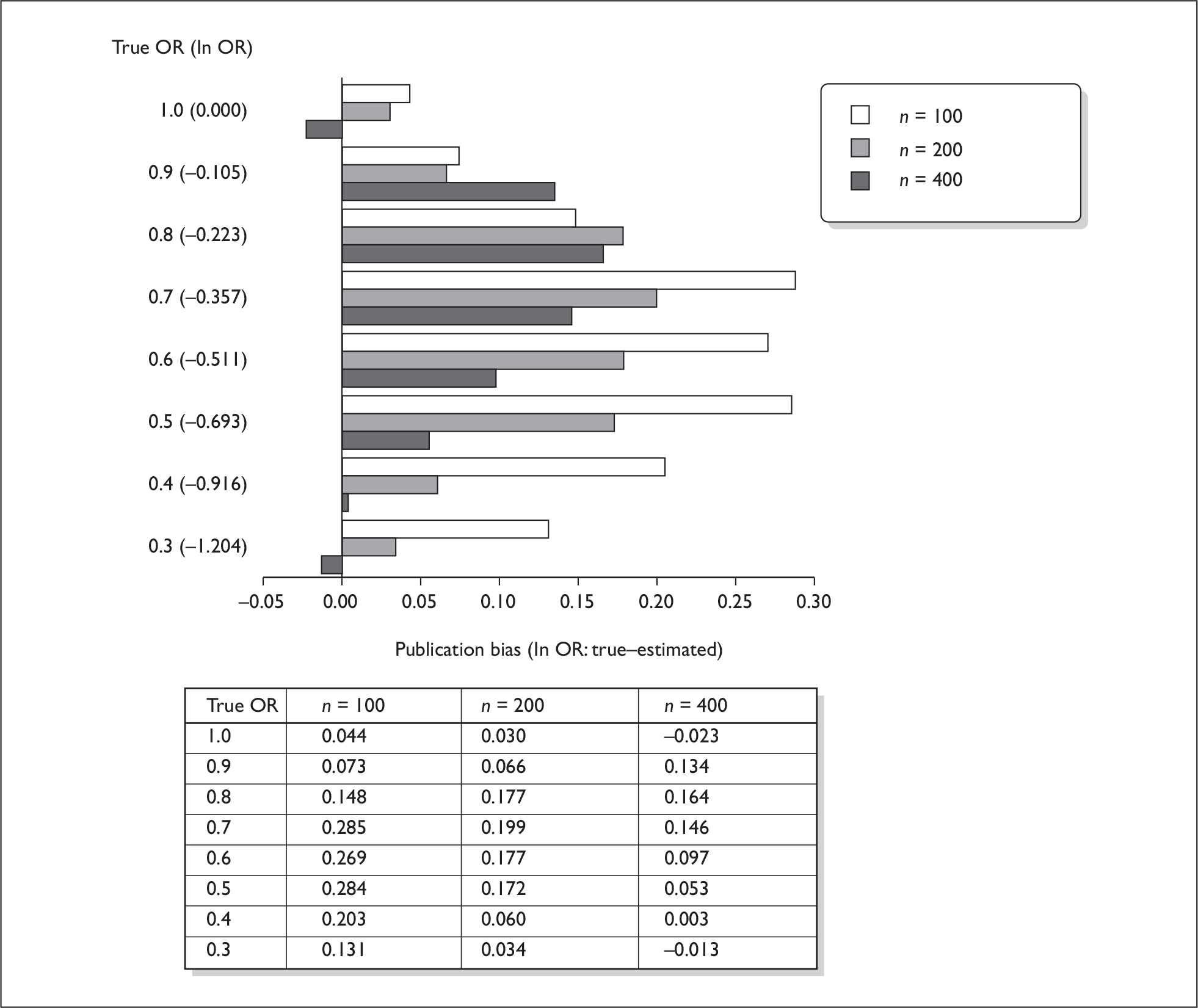

The formal publication of statistically significant (p < 0.05) results could be compared with that of non-significant results in four inception cohort studies, one regulatory cohort study, 12 abstract cohort studies and two manuscript cohort studies (Figure 2). The rate of publication in the four inception cohorts ranged from 60% to 93% for significant results and from 20% to 86% for non-significant results. The rate of full publication of meeting abstracts ranged from 37% to 81% for statistically significant results and from 22% to 70% for non-significant results. Heterogeneity across the four inception cohort studies was statistically significant (I² = 61%, p = 0.05). There was no statistically significant heterogeneity within the abstract or manuscript cohort subgroups. The pooled odds ratio for publication bias by statistical significance of results was 2.40 (95% CI: 1.18 to 4.88) for the four inception cohort studies, 1.62 (95% CI: 1.34 to 1.96) for the 12 abstract cohort studies, and 1.15 (95% CI: 0.64 to 2.10) for the two manuscript cohort studies (Figure 2).

FIGURE 2.

Rate of publication of statistically significant versus non-significant results: all studies. After excluding Misakian and Bero (1998)30 from the inception cohort studies, pooled OR = 3.19 (95% CI: 2.21 to 4.61); heterogeneity: I² = 0%, p = 0.79.

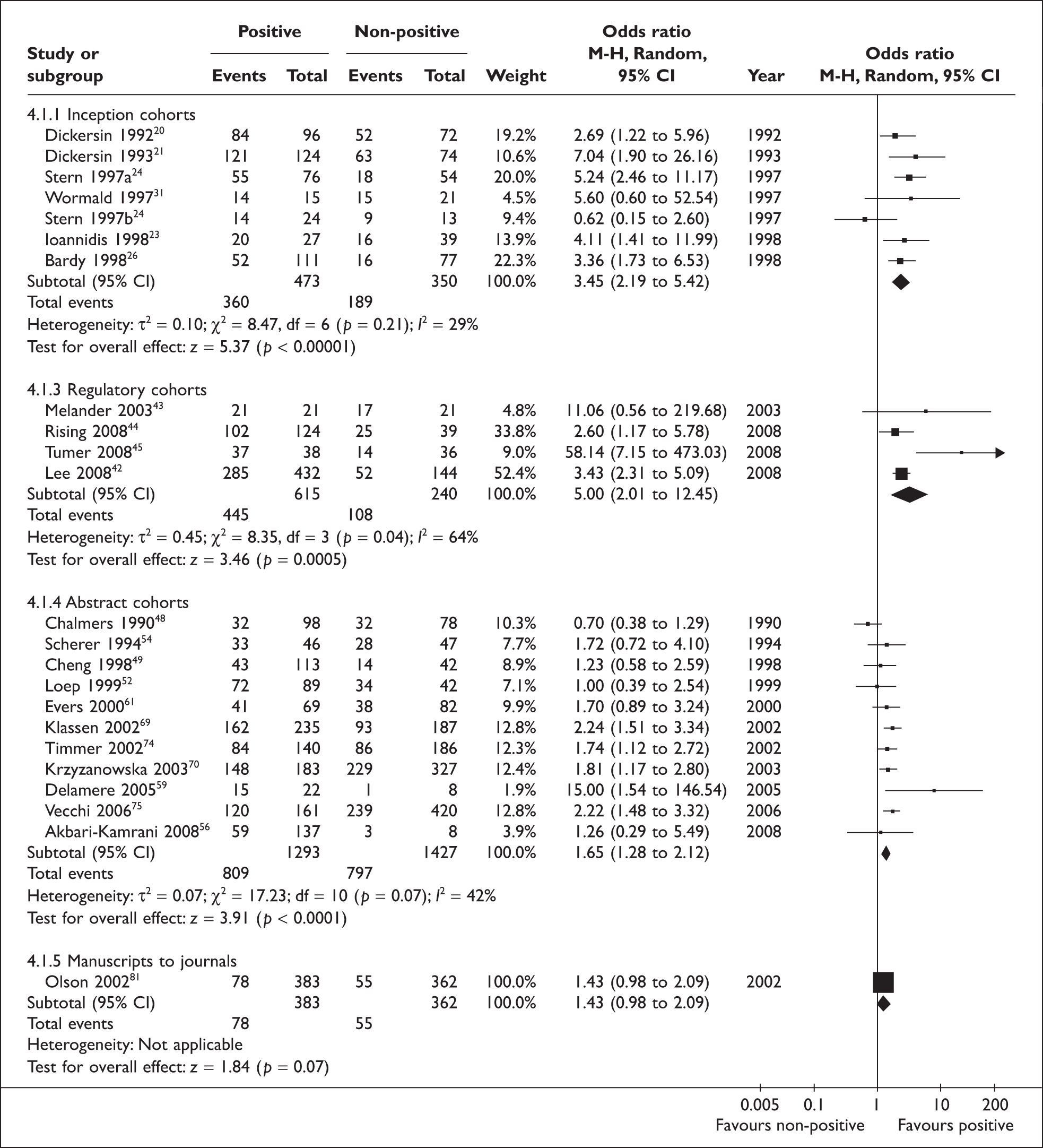

To include data from the other cohort studies, a positive result was defined as being important, confirmatory or significant, while a ‘non-positive’ result included negative, non-important, inconclusive or non-significant results. This more inclusive definition allowed the inclusion of all 12 inception cohort studies, four regulatory cohort studies, 29 abstract cohort studies and four manuscript cohort studies (Figure 3). There was statistically significant heterogeneity across cohort studies within the inception (p = 0.06), regulatory (p = 0.04) and abstract (p < 0.001) subgroups. Pooled odds ratios consistently indicated that studies with positive results were more likely to be published than studies with non-positive results, except for manuscript cohort studies, that is, after submission to journals (Figure 3).

FIGURE 3.

Rate of publication of positive versus non-positive results: all studies. After excluding Misakian and Bero (1998)30 from the inception cohort studies, pooled OR = 2.93 (95% CI: 2.31 to 3.71); heterogeneity: I² = 17%, p = 0.27.

The types of studies included in the cohort studies varied, covering basic experimental, observational and qualitative research as well as clinical trials. When the analyses were restricted to clinical trials, the results were not significantly different from those based on all studies. Although the number of cohort studies that could be included was small, clear evidence of publication bias was still observed when the analysis was restricted to clinical trials (Figure 4 and Figure 5).

FIGURE 4.

Rate of publication of statistically significant versus non-significant results: clinical trials only.

FIGURE 5.

Rate of publication of positive versus non-positive results: clinical trials only.

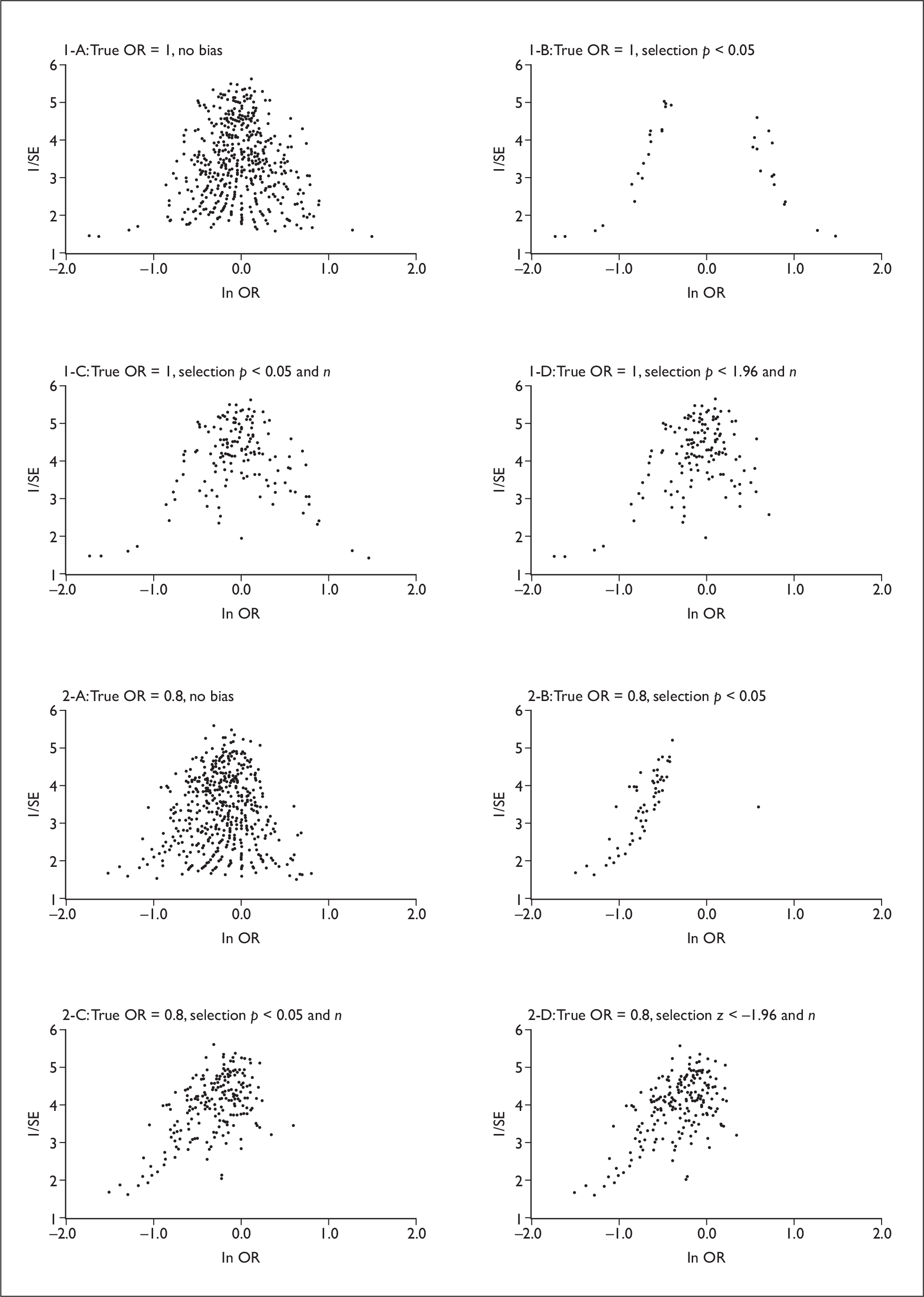

We constructed funnel plots separately for the four subgroups of cohort studies (Figure 6). Funnel plot asymmetry was tested using the method recommended by Peters et al. 9 This is a linear regression method that uses the log odds ratio as the dependent variable and the inverse of the total sample size as the independent variable (see Chapter 8 for more details). There was no statistically significant asymmetry in the funnel plots for inception cohort studies (p = 0.178), regulatory cohort studies (p = 0.262), abstract cohort studies (p = 0.233) or manuscript cohort studies (p = 0.942).
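A minimal sketch of a regression of this kind is shown below. It assumes inverse-variance weights; Peters et al. specify their own weighting, and Chapter 8 describes the method actually used, so this should not be read as a reproduction of the report's analysis. The function name `peters_test` and its inputs are hypothetical. The quantity of interest is the slope on 1/N: a slope clearly different from zero suggests funnel plot asymmetry.

```python
import math


def peters_test(log_ors, variances, totals):
    """Weighted least-squares regression of log OR on 1/N.

    Weights are 1 / variance (an assumption of this sketch). Returns the
    intercept, slope, standard error of the slope, t statistic and df.
    """
    k = len(log_ors)
    if not (k == len(variances) == len(totals)) or k < 3:
        raise ValueError("need at least three studies with matching inputs")
    x = [1.0 / n for n in totals]          # inverse of total sample size
    w = [1.0 / v for v in variances]       # assumed inverse-variance weights
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, log_ors)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, x, log_ors))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual (dispersion) variance estimated with k - 2 degrees of freedom.
    rss = sum(wi * (yi - intercept - slope * xi) ** 2
              for wi, xi, yi in zip(w, x, log_ors))
    se_slope = math.sqrt(rss / (k - 2) / sxx)
    return {"intercept": intercept, "slope": slope, "se": se_slope,
            "t": slope / se_slope, "df": k - 2}


result = peters_test([0.1, 0.3, 0.2, 0.4], [1.0] * 4, [100, 50, 100 / 3, 25])
print(f"slope {result['slope']:.2f}, t = {result['t']:.2f} on {result['df']} df")
```

A two-sided p value for the slope could then be obtained with `2 * scipy.stats.t.sf(abs(t), df)`.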

FIGURE 6.

Funnel plots – publication of positive and non-positive results. Funnel plot asymmetry test using the method of Peters et al.:9 p = 0.178 for inception cohort studies; p = 0.262 for regulatory cohort studies; p = 0.233 for abstract cohort studies; and p = 0.942 for manuscript cohort studies.

Main results of the above meta-analyses of cohort studies are summarised in Table 1.

| Cohort category | No. of cohort studies | Pooled odds ratio (95% CI) | Heterogeneity test: I² (p value) |
|---|---|---|---|
| Statistically significant vs non-significant results | | | |
| Inception cohorts | 4 | 2.40 (1.18 to 4.88) | 61% (0.05) |
| Regulatory cohorts | 1 | 11.06 (0.56 to 219.68) | |
| Abstract cohorts | 12 | 1.62 (1.34 to 1.96) | 22% (0.24) |
| Manuscript cohorts | 2 | 1.15 (0.64 to 2.10) | 48% (0.17) |
| Positive vs non-positive results | | | |
| Inception cohorts | 14 | 2.73 (2.06 to 3.62) | 39% (0.06) |
| Regulatory cohorts | 4 | 5.00 (2.01 to 12.45) | 64% (0.04) |
| Abstract cohorts | 29 | 1.62 (1.38 to 1.93) | 62% (< 0.001) |
| Manuscript cohorts | 4 | 1.06 (0.80 to 1.39) | 22% (0.28) |
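When every study in one group was published, the 2 × 2 table has a zero cell and the odds ratio is undefined. A common workaround, assumed here, is to add 0.5 to every cell. The sketch below computes an OR of publication with a Wald 95% CI in this way; the function name `odds_ratio` is hypothetical. The counts are an assumption reconstructed from the text on Melander et al. (all 21 trials with significant results published; 81% of the remaining 21, taken as 17, published). With them the sketch returns 11.06 (0.56 to 219.68), matching the single regulatory cohort row of Table 1, which suggests, but does not confirm, that this is how that entry was derived.

```python
import math
from statistics import NormalDist


def odds_ratio(pub_a, unpub_a, pub_b, unpub_b, cc=0.5, level=0.95):
    """Odds ratio of publication for group A versus group B, with a Wald CI.

    If any cell is zero, `cc` is added to every cell (an assumed convention)
    so that the OR and its standard error stay finite.
    """
    cells = [pub_a, unpub_a, pub_b, unpub_b]
    if 0 in cells:
        cells = [n + cc for n in cells]
    a, b, c, d = cells
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return math.exp(log_or), math.exp(log_or - z * se), math.exp(log_or + z * se)


# Assumed counts: significant trials 21 published / 0 unpublished;
# non-significant trials 17 published / 4 unpublished.
or_, lo, hi = odds_ratio(21, 0, 17, 4)
print(f"OR = {or_:.2f} (95% CI {lo:.2f} to {hi:.2f})")
# OR = 11.06 (95% CI 0.56 to 219.68)
```

The very wide interval reflects the single zero cell, which is why this one-study entry in Table 1 is not statistically significant despite the large point estimate.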

Factors associated with publication bias

Some cohort studies have examined the influence of particular factors on the publication of research, including study design, type of study, sample size, funding source and investigators’ characteristics. Easterbrook et al. (1991) conducted subgroup analyses to examine susceptibility to publication bias among different types of study. They found that observational studies, laboratory-based experimental studies and non-randomised trials were at greater risk of publication bias than RCTs. Factors associated with less bias included a concurrent comparison group, a high investigator rating of study importance and a sample size above 20. 22

Dickersin et al. (1992) investigated the association between the risk of publication bias and type of study (observational, clinical trial), multi- or single centre, sample size, funding source and principal investigator (PI) characteristics (such as gender, degree and rank). They found that none of the factors examined was associated with publication bias. 20

Dickersin and Min (1993) reported that the OR for publication bias was 0.84 (95% CI: 0.07 to 9.68) for multicentre studies compared with 21.14 (95% CI: 2.60 to 171.7) for single-centre studies. 21 In addition, the risk of publication bias differed between studies with a female PI (OR = 0.47; 95% CI: 0.02 to 11.61) and studies with a male PI (OR = 20.70; 95% CI: 2.61 to 164.2). One explanation for this difference proposed by Dickersin and Min was that ‘women have fewer studies to manage’, because of their relatively lower rank (35% of female PIs were professors, compared with 65% of male PIs), and are therefore less selective about publishing their studies. They did not find an association between publication bias and other study features such as the use of randomisation or blinding, having a comparison group or a large sample size. 21

Stern and Simes (1997) found that the risk of publication bias tended to be greater for clinical trials [hazard ratio (HR) = 3.13; 95% CI: 1.76 to 5.58] than for all quantitative studies combined (HR = 2.3; 95% CI: 1.47 to 3.66). When the analysis was restricted to studies with a sample size ≥ 100, publication bias was still evident (HR = 2.00; 95% CI: 1.09 to 3.66). 24

Discussion of findings from cohort studies

The updated review identified limited new evidence on publication bias from follow-up of research protocols, and a large number of new studies on the subsequent publication of meeting abstracts. The updated analyses yielded results similar to those of the 2000 HTA report and other existing reviews: studies with statistically significant or positive results are more likely to be formally published than those with non-significant or non-positive results. 2,25,41,55,83 As noted above, Dickersin (1997) combined the results from four inception cohort studies20–22,24 and obtained a pooled adjusted odds ratio for publication bias of 2.54 (95% CI: 1.44 to 4.47). 25 A recent systematic review of inception cohort studies of clinical trials found evidence of publication bias and outcome reporting bias, although a pooled meta-analysis was not conducted because of perceived differences between studies. 41 A Cochrane methodology review of publication bias by Hopewell et al. 83 included five inception cohort studies of trials registered before the main results were known,20,21,23,24,26 and the pooled odds ratio for publication bias was 3.90 (95% CI: 2.68 to 5.68). In a Cochrane methodology review by Scherer et al., the association between subsequent full publication and study results was examined in 16 of 79 abstract cohort studies. 55 According to these 16 studies, the subsequent full publication of conference abstracts was statistically significantly associated with positive study results (pooled OR = 1.28; 95% CI: 1.15 to 1.42).

Compared with previous reviews of cohort studies of publication bias, our review is more inclusive in terms of types of studies and is the first to compare explicitly the results from cohort studies of publication bias with fundamentally different sampling frames. Biased selection for publication may affect research dissemination throughout the process: before study completion, at the presentation of findings at conferences, at manuscript submission to journals, and at formal publication in journals. Publication bias appears to occur mainly before the presentation of findings at conferences and before the submission of manuscripts to journals (see Figure 2 and Figure 3). The subsequent publication of conference abstracts was still biased, but the extent of bias tended to be smaller than in the inception cohorts, which track all studies conducted. After submission of manuscripts to journals, editorial decisions were not clearly associated with study results.

Limitations of the available evidence on publication bias

There are some caveats to the available evidence on publication bias. Study findings have been defined differently across the empirical studies assessing publication bias. The most objective method would be to classify quantitative results as statistically significant (p < 0.05) or not. However, this was not always possible or appropriate. When other methods were used to classify study results as important or not, the inevitable subjectivity may have introduced bias.

Funnel plot asymmetry was not statistically significant for the inception, regulatory, abstract or manuscript cohort studies (see Figure 6). However, there are reasons to suspect publication and reporting bias in studies of meeting abstracts. A large number of reports on the full publication of research abstracts were assessed for inclusion in this review but were excluded because they did not mention the association between publication and study results. This association might not have been examined, or might have gone unreported when it was not significant. For example, Zaretsky and Imrie (2002)77 reported no significant difference (p = 0.53) in the rate of subsequent publication of 57 meeting abstracts between statistically significant and non-significant results, but this study was not included in the analysis because insufficient data were provided.

Large cohort studies of publication bias usually included studies that were highly diverse in terms of research questions, designs and other characteristics. Many factors (e.g. sample size, design, research question and investigators’ characteristics) may be associated with both study results and the likelihood of publication. Analyses adjusted for some of these factors can be conducted, but it was generally impossible to exclude the impact of confounding on the observed association between study results and formal publication. There is very limited and conflicting evidence on factors (such as study design and sample size) that may be associated with publication bias. Findings from qualitative research on the process of research dissemination may help to improve understanding of these factors. 84,85

There was statistically significant heterogeneity within subgroups of inception, regulatory and abstract cohort studies (see Table 1). The observed heterogeneity may be a result of differences in study designs, research questions, how the cohorts were assembled, definitions of study results, and so on. For example, the statistically significant heterogeneity across inception cohort studies was due to one study by Misakian and Bero (see Figure 2 and Figure 3). 30 After excluding this cohort study, there was no longer significant heterogeneity across inception cohort studies. The cohort study by Misakian and Bero included research on health effects of passive smoking, and the impact of statistical significance of results on publication may be different from studies of other research topics. 30

The four cohorts of trials submitted to regulatory authorities showed a greater extent of publication bias than the other subgroups of cohort studies (see Figure 3). 42–45 Only 855 primary studies were included in the regulatory cohort studies, and two of the four regulatory cohort studies focused on trials of antidepressants. 43,45 Therefore, these four regulatory cohort studies may be a biased selection of all possible cases.

Conclusions

Despite many caveats about the available empirical evidence on publication bias, there is little doubt that the dissemination of research findings is prone to bias. There is consistent empirical evidence that a study with statistically significant or ‘important’ results is more likely to be published than a study without such results. Indirect evidence indicates that publication bias occurs mainly before the presentation of findings at conferences and before the submission of manuscripts to journals.

Chapter 4 Evidence of different types of dissemination bias

This chapter reviews available empirical evidence on different types of research dissemination bias, including outcome reporting bias, time lag publication bias, grey literature bias, language bias, citation bias, duplicate or multiple publication bias, place of publication bias, database bias, country bias and media attention bias.

Outcome reporting bias

Outcome reporting bias occurs when studies with multiple outcomes report only some of the outcomes measured, and the selection of outcomes for reporting is associated with the statistical significance or importance of the results. This bias arises from incomplete reporting within published studies and is also called ‘within-study reporting bias’ (to distinguish it from the selective non-reporting of a whole study)86 or publication bias ‘in situ’. 87

Number of outcomes measured within trials

A large number of measured or calculated outcomes within a study, which is the case in almost all research studies, is the prerequisite for selective reporting bias. The selection of outcomes to report can be further classified into three categories:87 (1) selection of the outcomes investigated, (2) selection of the methods used to measure a selected outcome, and (3) selection among the results of multiple subgroup analyses. Combining all possible choices can generate a large number of results. Pocock et al. (1987) found that the median number of reported end points was six per trial. 88 They also discussed selective reporting of results and related issues of subgroup analyses, repeated measurements over time, multiple treatment groups and multiple tests of significance. 88 Tannock (1996) examined 32 RCTs published in 1992 and found that the median number of therapeutic end points per trial was five (range 2–19) and that 13 trials did not define their primary end point. 89 On average, each of the 32 trials reported six (range 1–31) statistical comparisons of major outcome parameters, and more than half of the implied statistical comparisons had not been reported. 89
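To illustrate why multiple outcomes create room for selective reporting, the sketch below computes the chance that at least one of k outcomes reaches p < 0.05 when none has a true effect, 1 − (1 − α)^k, for the median of six reported end points found by Pocock et al. and the median of 20 efficacy outcomes per trial that Chan et al. found in protocols. It assumes the outcomes are independent, which is rarely true; with correlated outcomes the probability is typically smaller. The function names and the 5 × 2 × 3 example are hypothetical.

```python
def prob_any_significant(n_outcomes: int, alpha: float = 0.05) -> float:
    """P(at least one p < alpha) across n truly null, independent outcomes."""
    return 1 - (1 - alpha) ** n_outcomes


def n_results(outcomes: int, methods: int, subgroups: int) -> int:
    """Distinct results from crossing outcomes, measurement methods and subgroups."""
    return outcomes * methods * subgroups


for k in (1, 6, 20):
    print(k, f"{prob_any_significant(k):.3f}")
# 1 0.050
# 6 0.265
# 20 0.642

# Hypothetical trial: 5 outcomes x 2 measurement methods x 3 subgroups.
print(n_results(5, 2, 3))  # 30
```

Even under these simplifying assumptions, a trial with 20 null outcomes has roughly a two-in-three chance of producing at least one ‘significant’ result available for selective reporting.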

The number of outcomes estimated from published articles may underestimate the number of outcomes actually measured in trials. Based on information from trial protocols, Chan et al. 6,7 found that the median number of efficacy outcomes was 20 per trial, and the median number of harm outcomes was six in one cohort and five in the other.

Although outcome reporting bias was strongly suspected, the 2000 HTA report included very limited empirical research on it. 90 The updated review has identified many recently published empirical studies that provide direct evidence on the existence and extent of outcome reporting bias (see Appendix 9 for details of the included studies).

Direct evidence on outcome reporting bias

The most direct evidence on the existence and extent of outcome reporting bias comes from studies that compared the outcomes specified in research protocols with those reported in subsequent articles. A pilot study by Hahn et al. (2002)36 was the first attempt to compare outcomes specified in trial protocols approved by a local REC with results reported in subsequent publications. They compared outcomes in 15 pairs of protocols and journal articles. Six of the 15 studies stated primary outcome variables in their protocols, and four used the same outcomes as primary outcomes in the reports. An analysis plan was mentioned in eight studies, but the plan was followed in only one published report.

Chan and colleagues provided the most direct evidence on outcome reporting bias by investigating a cohort of 102 RCT protocols approved by the Danish REC from 1994 to 1995,6 and another cohort of 48 RCT protocols approved by the Canadian Institutes of Health Research from 1990 to 1998. 7 Data on unreported and reported outcomes were collated from trial protocols, subsequently published journal articles and a survey of trialists. If a published article provided insufficient data for inclusion in a meta-analysis, the outcome was defined as incompletely reported. They found that 50% of efficacy outcomes and 65% of harm outcomes were incompletely reported in the Danish cohort, and 31% and 59%, respectively, in the Canadian cohort. Primary outcomes specified in protocols differed from the primary outcomes stated in the corresponding journal articles in 62% (Danish cohort) and 40% (Canadian cohort) of cases. Statistically significant outcomes were more likely to be fully reported than non-significant outcomes. The odds ratio for an efficacy outcome being fully reported if it was statistically significant versus non-significant was 2.4 (95% CI: 1.4 to 4.0) in the Danish cohort and 2.7 (95% CI: 1.5 to 5.0) in the Canadian cohort. Biased reporting of significant outcomes appeared more severe for harm data: the odds ratios were 4.7 (95% CI: 1.8 to 12.0) and 7.7 (95% CI: 0.5 to 111), respectively. 6,7

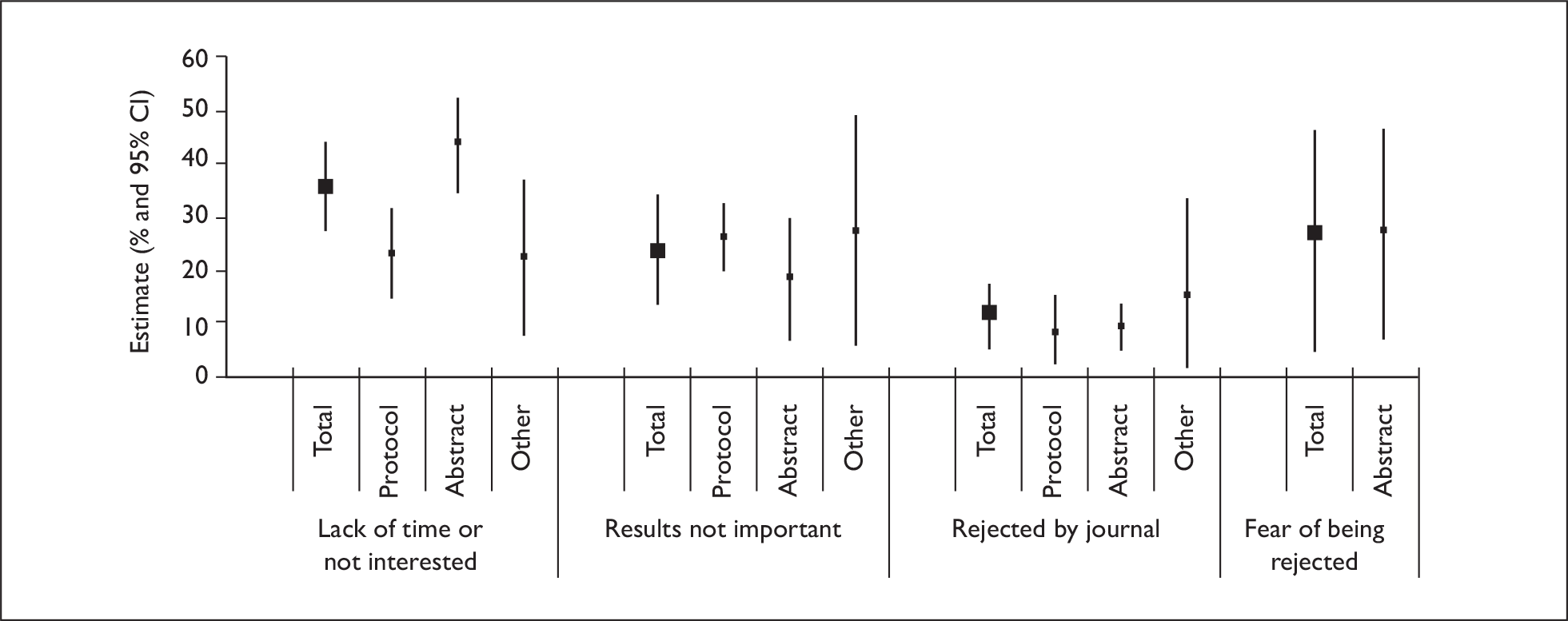

Further work by Chan and Altman (2005) identified 519 RCTs indexed in PubMed in December 2000 and surveyed trialists to obtain information on unreported outcomes. 5 The median proportion of incompletely reported outcomes per trial was 42% for efficacy outcomes and 50% for harm outcomes. The pooled odds ratio for outcome reporting bias was 2.0 (95% CI: 1.6 to 2.7) for efficacy outcomes and 1.9 (95% CI: 1.1 to 3.5) for harm outcomes. Reasons given by authors for not reporting efficacy and harm outcomes included space constraints (47% and 25%), lack of clinical importance (37% and 75%), and statistical non-significance (24% and 50%). 5

Ghersi et al. (2006) compared 103 published RCTs with their protocols approved by the Central Sydney Area Health Service REC from 1992 to 1996. 91 They found that 17% of the primary outcomes specified in the protocols, and 15% of those reported in the articles, differed between protocols and publications. Trials in which all comparisons were statistically significant tended to be more likely to report all outcomes fully, although the difference was not statistically significant (p = 0.06). Because the study by Ghersi et al. was presented only as an abstract, information on its design and other results was lacking. 91

Other evidence

One consequence of outcome reporting bias is that many trials cannot be included in meta-analyses because outcomes are incompletely reported in the published papers. Although unreported data may be available from trialists, obtaining them can be time consuming and often fails to yield additional data.

McCormack et al. 92 compared the results of a meta-analysis based on published data with those of an updated individual patient data (IPD) meta-analysis of trials of hernia surgery (in an IPD meta-analysis, the complete original datasets of the included studies are pooled, rather than summary measures from published reports). For the outcome of hernia recurrence, the number of contributing RCTs was similar and the results were not significantly different between the two analyses. For the outcome of persisting pain, the IPD meta-analysis included many more RCTs (20 versus 3) and gave qualitatively different results from the meta-analysis of published data. This case study indicates that some outcomes (e.g. persisting pain) may be more vulnerable to selective reporting than others (e.g. hernia recurrence). 92

In a recent study, Bekkering et al. examined 767 observational studies (with 3284 results) and found that only 61% of the reported results could be used in meta-analyses investigating dose–response associations between diet and prostate or bladder cancer. 93 Usable results were more likely to indicate the existence of the association than those that were not usable. 93

Furukawa et al. 94 investigated the association between the proportion of contributing RCTs and the pooled estimates in 156 Cochrane systematic reviews. A median of 46% [interquartile range (IQR) 20% to 75%] of the identified RCTs in each meta-analysis contributed to the pooled estimate. Regression analysis revealed a general trend: the greater the proportion of contributing RCTs, the smaller the treatment effect. The authors concluded that outcome reporting may be biased. 94

A methodological study by Williamson and Gamble 95 presented a motivating example and four further cases in which the results of Cochrane reviews were compared with the results of sensitivity analyses (using imputation) when within-study outcome reporting bias was suspected. The example was a meta-analysis of beta-lactam versus beta-lactam plus aminoglycoside for the treatment of cancer patients with neutropenia, in which only five of the nine eligible RCTs could be included. The pooled treatment effect was considerably reduced in the sensitivity analysis in which the missing results were imputed. In the other four cases, within-study selective reporting was suspected in several trials, but its impact on the conclusions of the meta-analyses was minimal. 95
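To make the idea of an imputation-based sensitivity analysis concrete, the sketch below pools log odds ratios with a fixed-effect inverse-variance method, first using only the reported trials and then adding hypothetical unreported trials whose results are imputed as null effects. All numbers, the null-effect imputation rule, the assumed standard errors and the helper name `pool_fixed` are illustrative assumptions; this is not necessarily the imputation approach used by Williamson and Gamble.

```python
import math


def pool_fixed(log_ors, ses):
    """Fixed-effect inverse-variance pooled log odds ratio and its standard error."""
    weights = [1.0 / se ** 2 for se in ses]
    pooled = sum(w * y for w, y in zip(weights, log_ors)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))


# Hypothetical reported trials: (log odds ratio, standard error), illustrative only.
reported = [(math.log(0.60), 0.25), (math.log(0.70), 0.30), (math.log(0.55), 0.35)]
# Hypothetical unreported trials, imputed here as null effects (log OR = 0) with an
# assumed standard error; this is one simple imputation choice among many.
imputed = [(0.0, 0.30), (0.0, 0.30)]

for label, trials in [("reported only", reported), ("with imputed", reported + imputed)]:
    est, se = pool_fixed([y for y, _ in trials], [s for _, s in trials])
    lower, upper = est - 1.96 * se, est + 1.96 * se
    print(f"{label}: OR {math.exp(est):.2f} "
          f"(95% CI {math.exp(lower):.2f} to {math.exp(upper):.2f})")
```

Adding imputed null results pulls the pooled odds ratio towards 1, which is the direction of change described for the neutropenia example; how far it moves depends entirely on the imputation assumptions.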

Scharf and Colevas compared adverse events reported in 22 published articles with the corresponding protocols or data from the Clinical Data Update System (CDUS), the National Cancer Institute’s electronic database of clinical trial information. 96 They found considerable mismatch in high-grade adverse events between the articles and the CDUS data, but it was unclear whether the mismatch was due to bias. Notably, published articles under-reported low-grade adverse events: only 58% of the low-grade adverse events recorded in the CDUS database were reported in the articles. 96

Selective reporting of multiple alternative analyses

Bias may be introduced by selectively reporting one of several results generated by different analyses of the same outcome. Melander et al. 43 compared the reports of 42 trials of five selective serotonin reuptake inhibitor (SSRI) antidepressants submitted to the Swedish Drug Regulatory Authority for marketing approval with the corresponding published articles. The study considered only one outcome, response rate. Both intention-to-treat (ITT) and per-protocol analyses were presented in 41 of the 42 submitted reports, but in only two of the stand-alone publications. The stand-alone publications tended to report the more favourable result, obtained by per-protocol analysis. 43
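A hypothetical two-arm example shows how a per-protocol analysis can give a more favourable result than an ITT analysis. The sketch assumes that every patient excluded from the per-protocol set was a non-responder and that more patients were excluded from the active arm; the figures and the helper name `response_rates` are invented for illustration and are not taken from the trials reviewed by Melander et al.

```python
def response_rates(randomised, responders, excluded):
    """Return (ITT, per-protocol) response rates for one trial arm.

    Assumes (hypothetically) that all patients excluded from the per-protocol
    set were non-responders, so the responder count is the same in both analyses.
    """
    itt = responders / randomised
    per_protocol = responders / (randomised - excluded)
    return itt, per_protocol


# Hypothetical arms: more non-responders are excluded from the active arm.
drug_itt, drug_pp = response_rates(randomised=100, responders=50, excluded=20)
placebo_itt, placebo_pp = response_rates(randomised=100, responders=40, excluded=5)

itt_difference = drug_itt - placebo_itt
pp_difference = drug_pp - placebo_pp
print(f"ITT difference in response rate: {itt_difference:.1%}")
print(f"Per-protocol difference in response rate: {pp_difference:.1%}")
```

Under these assumptions the per-protocol analysis roughly doubles the apparent difference between arms (about 20 versus 10 percentage points); with other exclusion patterns it could equally shrink or reverse it, which is why reporting only one of the two analyses can mislead.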

Compared with clinical trials, epidemiological studies may be more susceptible to selective reporting of results because of their exploratory nature. Kyzas et al. (2005) 97 conducted a meta-analysis to assess the association between a prognostic factor, the tumour suppressor protein p53 (TP53), and mortality in patients with head and neck squamous cell cancer. They compared pooled results based on (1) data from 18 studies indexed with ‘survival’ or ‘mortality’ in MEDLINE or EMBASE, (2) data from 13 published studies not indexed with these terms, and (3) data retrieved from the authors of 11 studies that had collected mortality data but reported no usable data. The pooled relative risk (RR) for mortality was 1.27 (95% CI: 1.06 to 1.53) for the 18 published and indexed studies, 1.13 (95% CI: 0.81 to 1.59) for the 13 published but non-indexed studies, and 0.97 (95% CI: 0.72 to 1.29) for the retrieved data.
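Pooled relative risks like these can be compared approximately by recovering each standard error from its 95% CI on the log scale. The sketch below does this for the indexed-studies estimate (1.27) and the retrieved-data estimate (0.97). It assumes the two estimates are independent, which is plausible because they come from different studies, and it is an illustration of the arithmetic rather than an analysis reported by Kyzas et al.; the helper names are hypothetical.

```python
import math

Z = 1.959964  # two-sided 95% standard normal quantile


def log_se_from_ci(lower, upper):
    """Standard error of log(RR) recovered from a 95% CI that is symmetric on the log scale."""
    return (math.log(upper) - math.log(lower)) / (2 * Z)


def compare_ratios(rr1, ci1, rr2, ci2):
    """Ratio of two independent relative risks, with z statistic and two-sided p-value."""
    diff = math.log(rr1) - math.log(rr2)
    se = math.sqrt(log_se_from_ci(*ci1) ** 2 + log_se_from_ci(*ci2) ** 2)
    z = diff / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return math.exp(diff), z, p


# Pooled estimates from the text: indexed published studies versus retrieved data.
ratio, z, p = compare_ratios(1.27, (1.06, 1.53), 0.97, (0.72, 1.29))
print(f"ratio of RRs {ratio:.2f}, z = {z:.2f}, p = {p:.3f}")
```

With these inputs the ratio of relative risks is about 1.31, z is about 1.53 and the two-sided p-value about 0.13, so the difference between the two pooled estimates is not by itself statistically significant at the 5% level, even though only the indexed studies show a significant association.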

As Kyzas et al. noted, TP53 status can be measured by different methods. 97 Where available, they used immunohistochemistry data, defining TP53-positive status as nuclear staining in at least 10% of tumour cells or at least moderate staining on qualitative scales. They also standardised all-cause mortality to 24 months of follow-up. The association was stronger when the definitions preferred by each publication were used (RR 1.38; 95% CI: 1.13 to 1.67) than when definitions were standardised (RR 1.27; 95% CI: 1.06 to 1.53).

Kavvoura et al. investigated discrepancies between abstracts and full papers of epidemiological studies, using 389 abstracts and 50 randomly selected full papers. 98 Of the abstracts, 88% reported one or more statistically significant relative risks, whereas only 43% reported one or more non-significant relative risks. Significant results were less predominant in the full texts: the 50 articles presented a median of nine (IQR 5–16) significant and six (IQR 3–16) non-significant relative risks. They also found that ‘investigators selectively present contrasts between more extreme groups when relative risks are inherently lower’. 98

Summary of evidence on outcome reporting bias

The most direct evidence comes from the two studies that compared outcomes specified in trial protocols with outcomes reported in subsequent publications. 6,7 Results for unreported outcomes were sought through a survey of the original investigators. Because of low response rates and insufficient data for 2 × 2 tables, many trials were excluded from the calculation of odds ratios. In the Danish cohort of 102 trials, 6 the odds ratio for reporting bias was based on 50 trials for efficacy outcomes and 18 trials for harm outcomes. In the Canadian cohort of 48 trials, only 30 trials contributed to the efficacy analysis and four to the harm analysis. 7 These low response rates are likely to have led to an underestimation of outcome reporting bias.
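Odds ratios of this kind are typically derived from per-trial 2 × 2 tables that cross-classify each trial's outcomes by statistical significance and by completeness of reporting. The sketch below, using hypothetical counts, shows why a trial whose table has an empty row or column (for example, no non-significant outcomes) cannot contribute, and pools the remaining trials with the Mantel–Haenszel estimator. The table layout, the Mantel–Haenszel choice and the helper names are assumptions for illustration, not a reproduction of the methods of the two cohort studies.

```python
def is_informative(a, b, c, d):
    """A 2 x 2 table with an empty row or column carries no information on the odds ratio."""
    return a + b > 0 and c + d > 0 and a + c > 0 and b + d > 0


def mantel_haenszel_or(tables):
    """Pool per-trial 2 x 2 tables with the Mantel-Haenszel odds ratio.

    Each table is (a, b, c, d) for one trial:
      a = significant outcomes fully reported
      b = significant outcomes incompletely reported
      c = non-significant outcomes fully reported
      d = non-significant outcomes incompletely reported
    Returns (pooled odds ratio, number of contributing trials).
    """
    usable = [t for t in tables if is_informative(*t)]
    if not usable:
        return None, 0
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in usable)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in usable)
    if denominator == 0:
        return float("inf"), len(usable)  # every b*c term is zero: estimate unbounded
    return numerator / denominator, len(usable)


# Hypothetical trials (counts of outcomes, not patients).
trials = [
    (4, 1, 2, 3),  # informative
    (3, 0, 1, 2),  # informative despite one zero cell
    (2, 2, 0, 0),  # no non-significant outcomes: excluded
    (0, 0, 1, 3),  # no significant outcomes: excluded
]
pooled_or, n_used = mantel_haenszel_or(trials)
print(f"Pooled OR {pooled_or:.1f} from {n_used} of {len(trials)} trials")
```

An odds ratio above 1 means that statistically significant outcomes had higher odds of being fully reported. Half of the hypothetical trials drop out of the calculation, mirroring the loss of data described above.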

Trials included in these two studies were mostly published before the revised CONSORT statement appeared in 2001, so it would be worth investigating whether outcome reporting bias has decreased in trials published since then.

Findings from ongoing studies may provide further empirical evidence. For example, the study by Ghersi et al. comparing 103 published RCTs with their protocols was presented as a conference abstract in 2006 and is currently available only in that form, with insufficient detail on its methods. 91 We also identified an ongoing MRC-funded study 99 that aims to investigate the prevalence and impact of within-study outcome reporting bias in an unselected cohort of 300 Cochrane systematic reviews.

Although case studies usually provide evidence of limited generalisability, they can indicate what further research is required. For example, the IPD meta-analysis case study by McCormack et al. suggested that some subjectively assessed outcomes may be more vulnerable to reporting bias than objectively assessed outcomes. 92

Time lag bias

When the speed of publication depends on the direction and strength of study results, this is referred to as time lag bias. 100 Empirical evidence on time lag bias can be divided into two categories: (1) the relationship between study results and time to publication, and (2) changes in reported effect size over time.

Time to publication

The process of research is usually complex and involves several important milestones. These include development of the research proposal, approval by a research ethics committee, obtaining research funding, recruitment of participants, completion of follow-up, submission of manuscripts to a journal, and final publication in peer-reviewed journals. Measurement of elapsed ‘time to publication’ could be considered to start from any of these milestones, for example, from the date of REC approval, funding received, initiation or completion of enrolment, completion of follow-up, or manuscript submission.

Four cohort studies were analysed in the 2000 HTA report (see Appendix 10). Simes (1987) examined the time from trial closure to publication in 38 published or unpublished trials on advanced ovarian cancer or multiple myeloma. 101 All six trials that showed a statistically significant survival difference were published within 5 years of study closure, whereas of the 32 trials that showed no significant difference, 5 were published more than 5 years after study closure and 7 had not yet been published. 101

In a survey of 218 quantitative studies approved by a hospital ethics committee in Australia, Stern and Simes observed that the median time from ethical approval to first publication in a peer-reviewed journal was 4.8 years for studies with significant results, compared with 8.0 years for studies with null results (HR 2.32; 95% CI: 1.47 to 3.66). 24 Adjustment for other factors that affect publication (e.g. research design and funding source) did not materially change this result. Time lag bias remained evident when only large quantitative studies (sample size > 100) were analysed (HR 2.00; 95% CI: 1.09 to 3.66). 24 Studies with a non-significant trend (0.05 < p < 0.10) tended to be published later than studies with null results (p > 0.10), although this difference was not statistically significant (HR 0.43; 95% CI: 0.15 to 1.24). For qualitative studies (n = 103), there was no clear evidence of time lag bias involving studies with unimportant or negative results. 24
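Because some studies remain unpublished at the end of follow-up, median times to publication such as these are typically estimated with survival methods that treat unpublished studies as censored, rather than by taking the median among published studies only. The minimal Kaplan–Meier sketch below uses hypothetical follow-up times in years and a hypothetical helper `km_median`; it is not a reconstruction of the Stern and Simes analysis, whose hazard ratios would additionally require a regression model such as Cox regression.

```python
from statistics import median


def km_median(records):
    """Kaplan-Meier median time to publication.

    records: list of (years_since_start, published) pairs; unpublished studies
    are treated as censored at their last follow-up time.
    Returns None if the estimated proportion unpublished never falls to 0.5.
    """
    event_times = sorted({t for t, published in records if published})
    unpublished = 1.0  # estimated proportion of studies still unpublished
    for t in event_times:
        at_risk = sum(1 for u, _ in records if u >= t)
        events = sum(1 for u, published in records if u == t and published)
        unpublished *= 1.0 - events / at_risk
        if unpublished <= 0.5:
            return t
    return None


# Hypothetical cohort: (years from ethics approval, published?)
cohort = [(3, True), (5, False), (6, True), (8, True), (9, False)]
naive = median([t for t, published in cohort if published])
print("Median among published studies only:", naive)
print("Kaplan-Meier median time to publication:", km_median(cohort))
```

In this toy cohort, ignoring the two unpublished studies understates the median time to publication (6 versus 8 years), which is the kind of distortion that censoring is designed to avoid.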

Further empirical evidence on time lag bias came from a cohort of 66 completed phase 2 or phase 3 trials conducted between 1986 and 1996 by an AIDS clinical trials group. 23 Results were classified as ‘positive’ if the experimental therapy was significantly (p < 0.05) better than the control therapy; ‘negative’ results included both those with no statistically significant difference and those favouring the control therapy. A trial was considered published only if its findings appeared in a peer-reviewed journal. The median time from start of enrolment to publication was 4.3 years for positive trials, compared with 6.4 years for negative trials (p < 0.001). Compared with negative trials, positive trials were submitted for publication more rapidly after completion (median 1.0 versus 1.6 years; p = 0.001) and were published more rapidly after submission (median 0.8 versus 1.1 years; p = 0.04). 23

Misakian and Bero identified 61 completed studies through a survey of 89 organisations that supported research on the health effects of passive smoking. 30 Time to publication was measured from the start date of funding because the date of study completion was difficult to determine. ‘Published studies’ were those that appeared in a peer-reviewed or non-peer-reviewed publication, or were in press; studies published only as abstracts were not counted. The median time from start of funding to publication was 5 years (95% CI: 4 to 7) for statistically non-significant studies and 3 years (95% CI: 3 to 5) for statistically significant studies (p = 0.004). Multivariate analysis showed that time to publication was associated with the statistical significance of the results (p = 0.004), experimental study design (p = 0.01), study size (p = 0.01) and the use of animals as subjects (p = 0.03). 30

The updated review identified five new empirical studies of time to publication measured from a milestone before manuscript submission (Appendix 10), 27,37,102–104 and one study of time from manuscript submission to publication. 81

In a study of 242 observational studies initiated at Johns Hopkins University, Min and Dickersin found that statistically significant or important results were associated with a shorter time from completion of enrolment to full publication (HR = 1.75; 95% CI: 1.14 to 2.93). 104 However, in a cohort of 70 studies sponsored by the UK NHS R&D programme, Cronin and Sheldon did not observe a significant difference in time from study completion to publication between studies with significant (p < 0.05) or important results and those without (HR = 0.53; 95% CI: 0.25 to 1.1). 27 Similarly, the remaining three empirical studies did not find study results to be significantly associated with time to publication (Appendix 10). However, in all of these studies where data were provided, the trend suggested a shorter time to publication for statistically significant or ‘positive’ studies than for non-significant or negative ones, and the cohorts of studies were often small. 37,102,103

Dickersin et al. tracked 133 manuscripts of comparative studies accepted for publication by the Journal of the American Medical Association (JAMA) and investigated the time from manuscript submission to publication. 82 Results were classified as positive if a statistically significant (p < 0.05) difference was reported for the primary outcomes. Seventy-eight (59%) manuscripts reported positive results, 51 (38%) reported negative results and the results of four (3%) were unclear. The median time from submission to publication was 7.8 months for positive studies and 7.6 months for negative studies (p = 0.44). 82 These findings suggest that time lag bias, if present, is more likely to arise before manuscript submission than after it.

We also identified six studies that investigated the time from abstract presentation at meetings to subsequent full publication (Appendix 10). 49,60,61,69,70,105 The study by Krzyzanowska et al. 70 included 510 abstracts of large (n > 200) phase 3 trials presented at an oncology meeting between 1989 and 1998. Trials with statistically significant results were published earlier than those with non-significant results (median time to publication 2.2 versus 3.0 years; HR = 1.4; 95% CI: 1.1 to 1.7). 70 Findings from two other studies 60,69 also suggested that time from abstract presentation to full publication was associated with significant results. However, this association was not statistically significant in the remaining three studies, two of which assessed only small numbers of abstracts. 49,61,105

Change in reported effect size over time

The 2000 HTA report on publication bias 2 discussed only two brief reports on temporal trends in reported effect size. 106,107 Rothwell and Robertson found that early trials overestimated the treatment effect compared with subsequent trials in 20 of 26 meta-analyses of clinical trials. 106 In the other report, a significant correlation (p < 0.10) between year of publication and treatment effect was observed in 4 of 30 meta-analyses published in the BMJ or JAMA during 1992–6. 107
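Checking for a temporal trend of this kind amounts to correlating each trial's year of publication with its effect estimate within a meta-analysis. The sketch below computes a Pearson correlation for hypothetical data in which log odds ratios (negative values favour treatment) move towards zero in later trials. The data and the helper name `pearson` are invented, and the cited report may have used a different correlation measure or a weighted analysis.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical trials in one meta-analysis: year of publication and log odds ratio.
years = [1985, 1988, 1990, 1993, 1996]
log_ors = [-0.9, -0.7, -0.5, -0.4, -0.2]

r = pearson(years, log_ors)
print(f"r = {r:.2f}")  # positive r here: later trials report smaller benefits
```

A strongly positive coefficient in this set-up corresponds to the pattern described above, with earlier trials reporting larger treatment effects than later ones.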

The updated review included two new case studies of pharmaceutical interventions 108,109 and two studies of research on ecology or evolution 110,111 (Appendix 10). Gehr et al. found that reported effect size decreased significantly over time in three of four meta-analyses of studies of pharmaceutical interventions. 108 In a case study of N-acetylcysteine for the prevention of contrast-induced nephropathy, Vaitkus and Brar found that earlier trials reported more favourable results than later trials. 109